News AMD Announces Radeon VII

Page 16


PeterScott

Platinum Member

- Jul 7, 2017

- 2,605

- 1,540

- 136

Not that much for gaming. I'd prefer they invest more transistors in cores/TMUs and ROPs than in Tensor Cores.

For server products I don't mind if they include fixed-function hardware, but for gaming it's a waste of transistors.

Not that much use in gaming yet. It makes sense that they don't have much use in gaming when tensor HW is only just showing up in consumer GPUs, and only from NVidia so far.

AMD is really unlikely to make dedicated data-center GPUs. So either they include the tech in consumer GPUs, or they miss the boat on datacenter ML parts.

AtenRa

Lifer

- Feb 2, 2009

- 14,003

- 3,362

- 136

Not that much use in gaming yet. It makes sense that they don't have much use in gaming when tensor HW is only just showing up in consumer GPUs, and only from NVidia so far.

AMD is really unlikely to make dedicated data-center GPUs. So either they include the tech in consumer GPUs, or they miss the boat on datacenter ML parts.

Vega 10 and 20 can already run TensorFlow (actually, Vega 20 can match V100 in FP32 matrix-multiplication throughput), so we don't need that much compute power dedicated to ML in games right now. I would prefer they invest more in ray tracing than in fixed-function HW just for ML.

My theory (which I think likely did hold, but might not anymore now that they'll have the money from Ryzen, Epyc, etc.) was that they decided they had to target the places they might make money.

So the base architecture + the easily derivable mid-range GPUs were funded by the console contracts.

They then tried to mildly extend/adapt that into data-center-worthy GPUs.

They certainly haven't even really pretended to have a proper top-to-bottom product stack for ages.


AtenRa

Lifer

- Feb 2, 2009

- 14,003

- 3,362

- 136

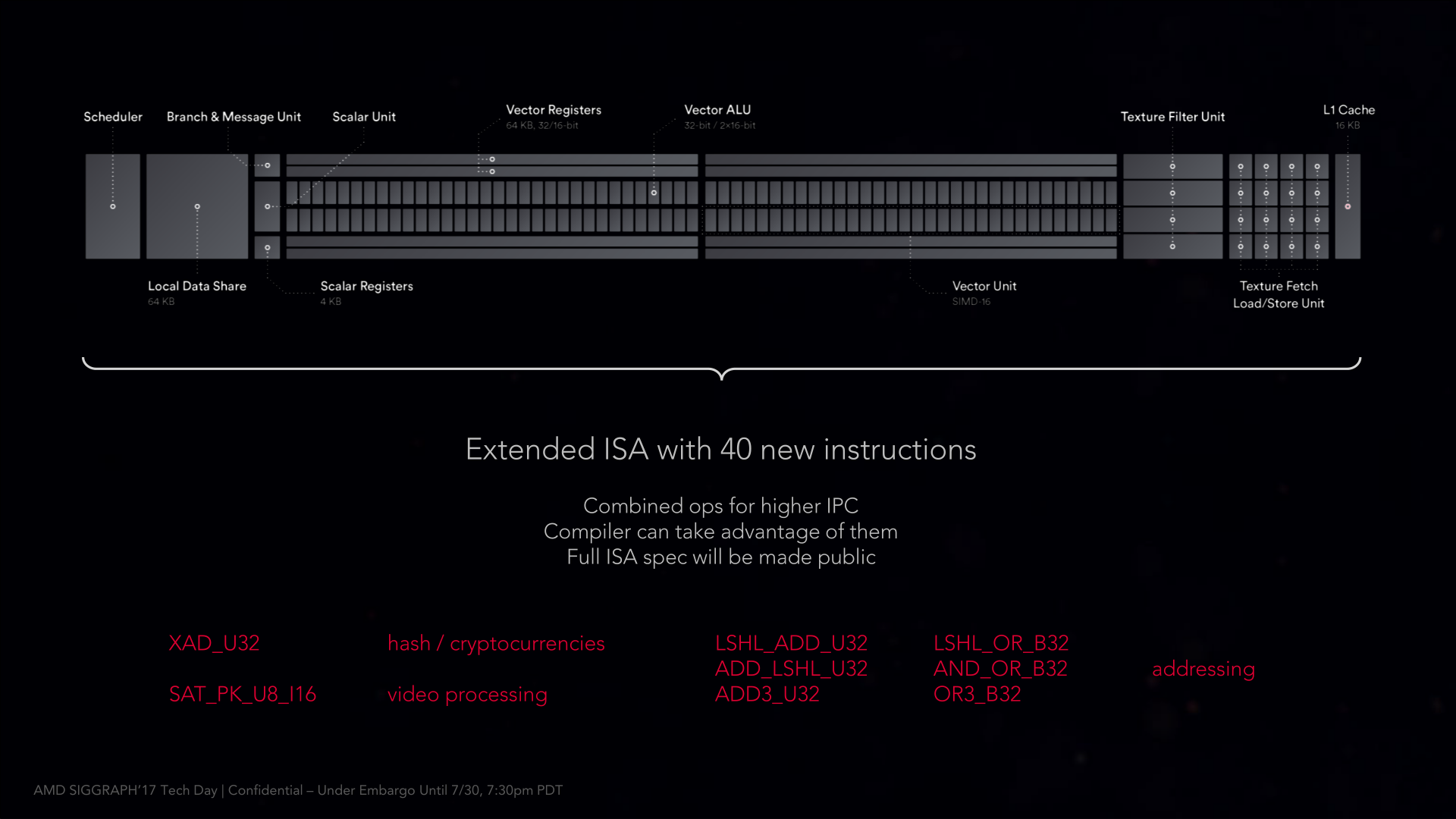

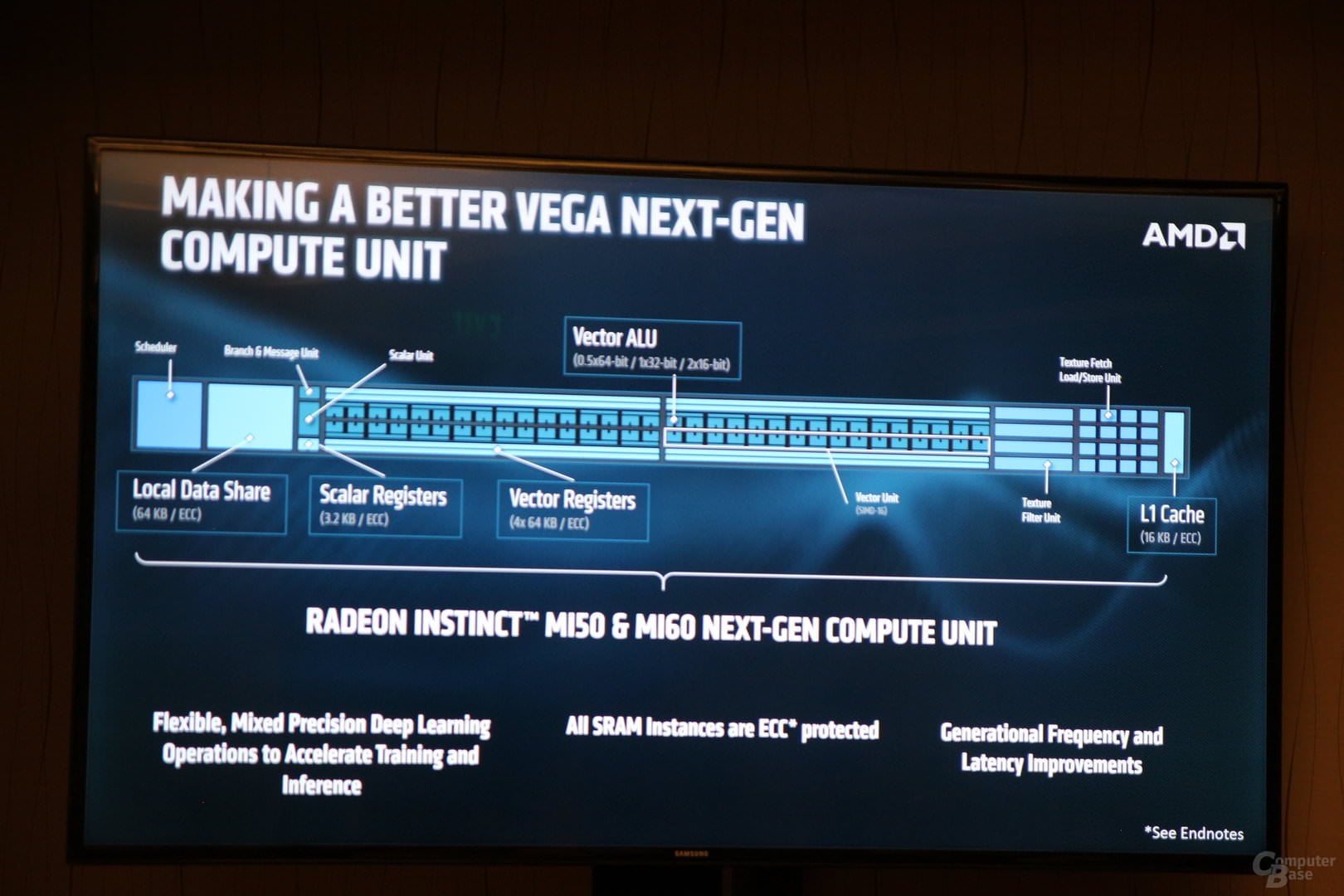

What design changes?

NCU changes: increased register sizes, decreased cache latency, ECC now in registers and caches (not only HBM memory). Also, the vector ALU now supports half-rate FP64.

Vega 10 NCU

Vega 20 NCU

And Async Compute optimization.
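The "half-rate FP64" item is easiest to see as peak-throughput arithmetic. A rough sketch, assuming the common GCN/Vega peak formula (CUs × 64 lanes × 2 FLOPs per FMA × clock × rate relative to FP32) and a hypothetical 64-CU part at 1.8 GHz, roughly MI60-class. These figures are assumptions for illustration, not numbers from the thread; the 1/16 line is the FP64 rate consumer Vega 10 cards ran at, for comparison.

```python
"""Back-of-the-envelope peak FLOPS for a GCN/Vega-style GPU (illustrative only)."""

LANES_PER_CU = 64   # GCN/Vega CUs: 4 x SIMD16 = 64 lanes
FLOPS_PER_FMA = 2   # a fused multiply-add counts as two floating-point ops


def peak_tflops(compute_units: int, clock_ghz: float, rate: float = 1.0) -> float:
    """Peak TFLOPS; `rate` is throughput relative to FP32 (0.5 = half-rate)."""
    return compute_units * LANES_PER_CU * FLOPS_PER_FMA * clock_ghz * rate / 1000.0


if __name__ == "__main__":
    # Hypothetical configuration: 64 CUs at 1.8 GHz (assumed, MI60-like).
    cus, clock = 64, 1.8
    print(f"FP32:            {peak_tflops(cus, clock):.2f} TFLOPS")
    print(f"FP64 @ 1/2 rate: {peak_tflops(cus, clock, 0.5):.2f} TFLOPS")
    print(f"FP64 @ 1/16:     {peak_tflops(cus, clock, 1 / 16):.2f} TFLOPS")
```

Real sustained throughput will be lower than these peaks; the point is only that the FP64 rate multiplier dominates the result.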

DrMrLordX

Lifer

- Apr 27, 2000

- 23,197

- 13,286

- 136

What extra would be needed for that level of FP64 support? I didn't think it really required a fundamental change in the hardware; I thought it was more that AMD was only enabling it for enterprise customers while gimping it elsewhere, because it requires validation (end-to-end ECC) that only enterprise customers could justify paying for.

You've at least partly answered your own question: validation. Beyond that, I don't have the technical expertise to comment intelligently. I can only add that the last dGPU design offered by AMD with 1/2 FP64 was Hawaii (interestingly enough, Carrizo's/Bristol Ridge's iGPU also has 1/2 fp64).

Can it actually be used for that? Wouldn't that just be in line with Tensor Cores, which as far as I know are not used for ray tracing (otherwise I'd think Nvidia would be using them for that with Turing)? I don't know enough about how this new raytracing API stuff is being processed (so I'm not trying to be glib).

http://www.cadalyst.com/hardware/gr...ubles-down-future-real-time-ray-tracing-44376

Regardless, NV's tensor cores are solidly aimed at AI first (the use of tensor cores to improve real-time raytracing is an interesting hack). AMD's interest in improving low-precision compute performance for Vega20 was squarely aimed at the same applications that make use of tensor cores. It's an AI-first change.

The thing is, I thought even Vega 10 had IF, it just wasn't enabled, but it was considered an integral part of their plan moving forward (so they either only enabled it for some key customers, or maybe just for internal testing, planning to enable it sometime in the future). I seem to recall Raja maybe talking about it at some non-consumer show before he left the company.

I also swear I saw some AMD thing saying they could apply IF between the CPU and GPU over PCIe. I was also baffled when they said "without bridges" (for GPU-to-GPU on Vega 20) but then showed what appeared to be bridges connecting the GPUs. But maybe I'm confusing them with SLI/CrossFire bridges, and "without bridge or switch" means something else (like the old SLI bridge thing some non-Nvidia chipset boards had for a time)?

Doing so without bridges would probably be part of CCIX (if using bridges, then the extra bandwidth from PCIe4.0 would not be necessary). The bridges might have been an internal hack to make things work prior to the standard being ironed-out and deployed in final hardware. I expect that AMD has been planning for IF-over-PCIe for some time, so it would make sense that Vega10 might have had some vestigial element baked into the hardware. That element was never put to any use of which I am aware. Some key customers may have made use of early versions of IF-over-PCIe as you indicated.

As an aside, I agree with @darkswordsman17 that the idea of Navi being delayed until the end of 2020 is . . . probably not accurate. AMD seems to have (repeatedly) indicated that Navi will launch by the end of 2019. Mid-2019 seems too optimistic, but Q4 2019 seems likely.

darkswordsman17

Lifer

- Mar 11, 2004

- 23,444

- 5,852

- 146

I feel slightly validated as AT seems to agree that AMD was clear Vega 20 was for Instinct only. In their article about AMD announcing Radeon VII, they link back to AMD's announcement of Vega 20 in 2018:

I'm full on calling shens that this was always a gaming chip. I'm sure they'll hand-wave it away by claiming they actually just said "datacenter first", but that's not what they told people, and it wasn't just AT that inferred that. In the video linked earlier, she tells the people from that site that they're the ones who claimed it wasn't a gaming chip; they reply that no, she was pretty clear; and then she says "I think we just said datacenter first". Add all the weirdness in Radeon VII's actual specs, and yeah, this was not a thoroughly thought-out product.

The other thing is that, after digging into it, I no longer think they added tensor hardware. All the new stuff just leverages the existing NCU pipeline. Seemingly they upped the rate of some of it (improved async compute, added ops), although I'd guess that's more in the software calls to the hardware (meaning I'd think Vega 10 is capable of it as well, since I think the Vega 10 NCU can do it; they just didn't have it working via their driver/software until now).

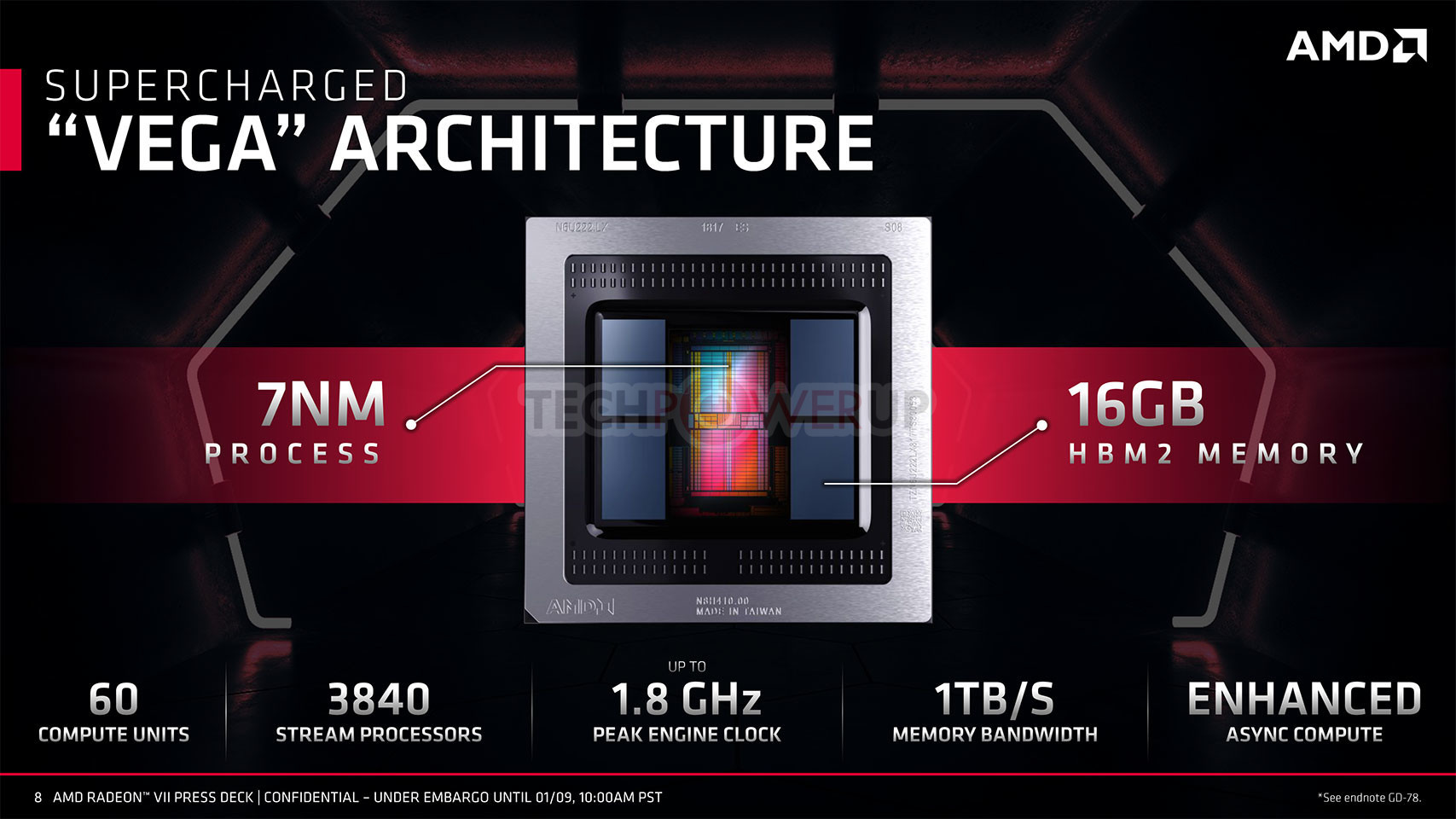

Taking into account that it has about 5.6% more transistors: 5.6% of 331 is ~18.5, so 331 - 18.5 = 312.5, and 312.5/484 = ~65% (Vega 20's 331 mm² against Vega 10's 484 mm²).

I guess that's not a horrible density improvement, considering not everything scales down.
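The area-normalization arithmetic above can be written out as a short sketch. It uses only the post's figures (331 mm² for Vega 20, 484 mm² for Vega 10, ~5.6% more transistors) and compares the post's approximation (subtract 5.6% of the area) with dividing by 1.056, which is the exact way to undo a 5.6% increase. Both land at about 65%.

```python
"""Vega 20 area normalized to Vega 10's transistor count (figures from the post)."""

VEGA10_AREA_MM2 = 484.0
VEGA20_AREA_MM2 = 331.0
EXTRA_TRANSISTORS = 0.056  # Vega 20 has ~5.6% more transistors than Vega 10


def area_ratio_subtract() -> float:
    """Post's approximation: remove 5.6% of Vega 20's area, then compare."""
    return VEGA20_AREA_MM2 * (1 - EXTRA_TRANSISTORS) / VEGA10_AREA_MM2


def area_ratio_divide() -> float:
    """Exact normalization: divide out the 1.056x transistor increase."""
    return VEGA20_AREA_MM2 / (1 + EXTRA_TRANSISTORS) / VEGA10_AREA_MM2


if __name__ == "__main__":
    print(f"subtract 5.6%:   {area_ratio_subtract():.3f}")
    print(f"divide by 1.056: {area_ratio_divide():.3f}")
```

The two methods differ by only about 0.2 percentage points here, so the post's shortcut is fine for a ballpark.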

Plus there are the extra two memory controllers. Since the memory seems to be responsible for most of the performance improvement, I wonder if it wouldn't be smart to make one with 6 stacks. Paired with the 307GB/s stacks, you'd get almost 1.85TB/s of memory bandwidth, nearly double Vega 20's, without doubling the HBM stacks. If that got another ~20% performance uptick, I'd think the costs might almost be worth it; I doubt it'd cost drastically more than 4 stacks does now. It's kinda crazy, and I wouldn't be surprised if the overall gains tapered off, so it'd be more like a 35-40% increase over Vega 64 (assuming it's ~25-30% as it is now), going from 484GB/s to 1842GB/s (very close to 4x). But for their next big chip, I think it could be worth it.
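The bandwidth figures above follow from the usual HBM2 arithmetic: per-pin data rate × 1024-bit interface per stack ÷ 8 bits per byte. The pin rates in this sketch are my assumptions, not figures from the thread: about 1.89 Gbps for Vega 64, 2.0 Gbps for Radeon VII, and 2.4 Gbps for the "307GB/s" stacks.

```python
"""HBM2 bandwidth arithmetic for the stack counts discussed in the post."""

HBM2_BUS_WIDTH_BITS = 1024  # each HBM2 stack exposes a 1024-bit interface


def stack_bandwidth_gbs(pin_rate_gbps: float) -> float:
    """Bandwidth of one stack in GB/s."""
    return pin_rate_gbps * HBM2_BUS_WIDTH_BITS / 8


def total_bandwidth_gbs(stacks: int, pin_rate_gbps: float) -> float:
    """Aggregate bandwidth of `stacks` identical stacks in GB/s."""
    return stacks * stack_bandwidth_gbs(pin_rate_gbps)


if __name__ == "__main__":
    # name -> (stack count, assumed pin rate in Gbps)
    configs = {
        "Vega 64 (2 stacks)": (2, 1.89),
        "Radeon VII (4 stacks)": (4, 2.0),
        "Hypothetical 6 x 307GB/s": (6, 2.4),
    }
    vega64 = total_bandwidth_gbs(*configs["Vega 64 (2 stacks)"])
    for name, (stacks, rate) in configs.items():
        bw = total_bandwidth_gbs(stacks, rate)
        print(f"{name:26s} {bw:7.1f} GB/s  ({bw / vega64:.2f}x Vega 64)")
```

Six 2.4 Gbps stacks come out to 1843.2 GB/s, 1.8x Radeon VII's 1024 GB/s and about 3.8x Vega 64, which matches the "almost double" and "very close to 4x" in the post.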

(Removed the images)

If you look, there are very few actual changes there. In fact, the only two I can see are the "0.5x64bit" added to the vector ALU (which I'm not sure actually took extra hardware: even though AMD wouldn't validate Vega 10, they did some "free ECC" thing since the HBM2 memory was capable of it, so I have a hunch it was already there in Vega 10), and the vector registers, where Vega 20 shows "4x64KB/ECC" compared to just "64KB, 32/16bit". Oh, and I guess Vega 20 has "3.2KB/ECC" compared to "4KB" in the scalar registers. Everything else seems to be the same, just with Vega 20 maybe having ECC.

And the better async compute could be (I'd almost say is likely to be) just in software (the driver, maybe). I could definitely be wrong about that, but I kinda get the feeling Vega was already capable of it and they just didn't have the backend to support it. While digging around, I'm seeing people (who seem to know about those new ops and the like) saying that they mostly just bring parity with Pascal in op support (I'd guess Vega 20 runs them at a higher rate, and so with higher performance).

Alright. Yeah, I knew Hawaii was the last one where they did that (which was weird given Fury and then Vega, since those seemed aimed more at the market that would want that feature). I'm not sure what the gap between them means, as I feel like there's no technical reason for it. I do remember the one APU having it, and people were like "huh?", but because the dGPUs didn't have it, it was actually slightly appealing for people who might need that.

Hmm, yeah, that's just the de-noising stuff, which, while it might improve the appearance to the end user, I don't think changes the real-time ray-tracing output as much. And I'm not sold that it can provide the level of de-noising Nvidia is touting in that image, doubly so for real-time ray tracing. To me, tensor cores are of limited use for graphics (they're largely for running inference algorithms, which I thought were mostly about image recognition; I think they were used for some image manipulation, but the results were bizarre. Were those the weird fractal things where it'd take images of animals and turn them into crazy shapes or something?), and they're really about AI/"Deep Learning"/Machine Learning/etc. If they could be used to improve AI in games, cool, and I'd prefer that over using them for graphics, but then I'd probably prefer dedicated AI processors that could be done chiplet-style, either on a dedicated card or added to CPUs and/or GPUs, consoles, etc. Yeah, that's why Vega 20 didn't make sense to me as a gaming chip, as I think it's mostly about getting into the AI/DL/ML/etc. market.

Yeah, I thought they outright said IF would work over PCIe, and that since it depends more on the endpoints being compliant, they could kinda outdo the standard PCIe spec in throughput over the same wires, because the important part is the signaling hardware and they could build their own IF links to enable that. Same here: I think it was probably just for their own internal testing before turning it on in an actual product, but it's hard to say whether they might've OK'd it for some customers. Supposedly Vega 10 was actually ECC-compliant, so some were able to use that function. Frankly, it's hard to make any sense of AMD's GPU division these days. Honestly, though, for what it offers, I don't think bridges/ribbon cables would be that horrible.

I was thinking: what if they made an interposer that was also a bridge (that is, put a dedicated memory setup, basically memory controllers with IF plus HBM2, right on a physical bridge between 2 GPUs), so they'd share a single pool of memory between them? Maybe add some NAND, so the HBM would act as a cache but not have to wait on PCIe for storage access. In the future you could do a dedicated memory card in a PCIe slot and run fiber optic cables to each GPU (which should help with bandwidth and latency), so you could have one large pool of very high-speed memory paired with a good chunk of storage (say with 10 channels, you'd have something like 80+GB of memory doing 3TB/s with NAND that is also doing ~3TB/s; that could do some crazy texture streaming!).

Yeah, AMD has been pretty clear it's 2019. But I think I'm done speculating for now on what they'll do and when they'll launch stuff, as I've mostly been wrong lately.
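The 10-channel pool is easy to sanity-check. A minimal sketch, assuming each "channel" is one 8 GB HBM2 stack running at ~307 GB/s (both per-stack numbers are assumptions; the post only gives the totals):

```python
"""Sanity check of the hypothetical 10-channel shared HBM2 pool."""

STACK_CAPACITY_GB = 8        # assumed: 8 GB per HBM2 stack
STACK_BANDWIDTH_GBS = 307.2  # assumed: 2.4 Gbps/pin x 1024 bits / 8


def pool(channels: int) -> tuple[int, float]:
    """Return (capacity in GB, aggregate bandwidth in TB/s) for `channels` stacks."""
    capacity_gb = channels * STACK_CAPACITY_GB
    bandwidth_tbs = channels * STACK_BANDWIDTH_GBS / 1000
    return capacity_gb, bandwidth_tbs


if __name__ == "__main__":
    cap, bw = pool(10)
    print(f"{cap} GB at {bw:.2f} TB/s")
```

Under those assumptions, 10 stacks give 80 GB at about 3.07 TB/s, consistent with the "80+GB doing 3TB/s" estimate for the HBM side.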

Speaking of pricing, perhaps the thing that surprises me the most is that we’re even at this point – with AMD releasing a Vega 20 consumer card. When they first announced Vega 20 back in 2018, they made it very clear it was going to be for the Radeon Instinct series only.


PeterScott

Platinum Member

- Jul 7, 2017

- 2,605

- 1,540

- 136

I feel slightly validated as AT seems to agree that AMD was clear Vega 20 was for Instinct only. In their article about AMD announcing Radeon VII, they link back to AMD's announcement of Vega 20 in 2018:

I'm full on calling shens that this was always a gaming chip.

So you think the CEO of AMD lies in public about their intentions?

I believe her, and FWIW, as soon as I saw original story, I assumed there would be a consumer version.

It's common practice for AMD and NVidia to release higher-end chips in Pro products before consumer products, and when they release the pro product, they are usually silent about consumer offshoots.

But both prefer to reuse the dies for both markets whenever they can. AMD in particular is VERY big on die reuse. Can you name one AMD product die, either CPU or GPU, that was pro-only in the last decade?

So you think the CEO of AMD lies in public about their intentions?

I believe her, and FWIW, as soon as I saw original story, I assumed there would be a consumer version.

You believe that CEOs always tell the exact and full truth and wouldn't try to weasel-word their way around something? Even if someone ambushed her with that and really pinned her down on exact statements, she'd just say that she misspoke or that that previous information was based on out-of-date plans, blah, blah, blah, spin, spin, spin.

A consumer version of this card doesn't make a lot of sense as a mass-market consumer product. At best it's pro-sumer, like the Vega Frontier Edition that they originally pitched. This is like NVidia's Titan V, only AMD can't charge several thousand dollars for it since they don't have anything else in their product stack.

If Navi were available, Vega 20 would not be released as Radeon VII. It's a lot of transistors thrown at a problem that has no use for them. HBM2 is still too expensive to use in consumer products, and the Vega architecture itself just wasn't all that good for gaming.

PeterScott

Platinum Member

- Jul 7, 2017

- 2,605

- 1,540

- 136

You believe that CEOs always tell the exact and full truth and wouldn't try to weasel-word their way around something?

Not CEOs in general always being honest, no.

Specifically Lisa Su in this instance. She seems very much like a straight shooter, and I watched the video, where she didn't seem the least bit evasive or weaselly. She almost laughed at the suggestion that it was only for data center; laughing in the sense of how some of the media gets carried away on the tiniest hint of something.

GodisanAtheist

Diamond Member

- Nov 16, 2006

- 8,497

- 9,929

- 136

I wonder if AMD is looking to fork Vega off into a compute-only card akin to the Tesla series, while a "scalable" (remember that slide?) Navi arch trimmed of all the compute-related fat covers the consumer space.

AMD likely does not have the resources to run two separate lines simultaneously however, but an interesting thought nonetheless...


AtenRa

Lifer

- Feb 2, 2009

- 14,003

- 3,362

- 136

So if NV cannot sell its high-end GPUs, Vega II at $699 will have a really hard time. Nice, because both of them will have to lower their prices.

DrMrLordX

Lifer

- Apr 27, 2000

- 23,197

- 13,286

- 136

@AtenRa it likely just means that AMD will cut short the number of cards they release as Radeon VII. They'll avoid the consumer market otherwise until Navi is ready. I fully expect Radeon VII to remain a $699 card when you consider how much more AMD can make selling those things as MI50s. They'll just cut supply to prop up the MSRP.

AtenRa

Lifer

- Feb 2, 2009

- 14,003

- 3,362

- 136

AMD wants and needs to sell high-margin products, and Vega II at $699 is one of them. Cutting supply because the market is not willing to buy will not make things better for them. There is no infinite demand for datacenter GPUs; if you don't sell in both the gaming and datacenter markets, you don't increase revenue, and thus you don't make a profit. Simple as that.

EXCellR8

Diamond Member

- Sep 1, 2010

- 4,129

- 939

- 136

Looks like some benches got leaked ahead of launch.

https://videocardz.com/79870/amd-radeon-vii-benchmarks-leak-ahead-of-launch


Radeon VII production cost $650+

Take it with a grain of salt, no references other than "our investigations".


Stuka87

Diamond Member

- Dec 10, 2010

- 6,240

- 2,559

- 136

Radeon VII production cost $650+

Take it with a grain of salt, no references other than "our investigations".

Already called fudzilla out on this one in another thread. They literally took the cost of memory on Vega64 and just doubled it for Vega VII.

Vega64 cost: https://www.fudzilla.com/news/graphics/43731-vega-hbm-2-8gb-memory-stack-cost-160

Vega VII cost: https://www.fudzilla.com/news/graphics/48019-radeon-vii-16gb-hbm-2-memory-cost-around-320

EXCellR8

Diamond Member

- Sep 1, 2010

- 4,129

- 939

- 136

Well, it does have double the capacity... you'd have to compare it to Vega Frontier, not the 64.

DrMrLordX

Lifer

- Apr 27, 2000

- 23,197

- 13,286

- 136

AMD wants and needs to sell high-margin products, and Vega II at $699 is one of them. Cutting supply because the market is not willing to buy will not make things better for them. There is no infinite demand for datacenter GPUs; if you don't sell in both the gaming and datacenter markets, you don't increase revenue, and thus you don't make a profit. Simple as that.

Radeon VII is basically a loss-leader to keep the brand fresh in the consumer market. AMD knows that Polaris isn't going to be enough on its own until Navi launches. Being an AMD board partner right now must be an agonizing affair.

And I will remind you that at least the initial run of Radeon VII amounts to maybe 20k cards. If you think the pro market won't absorb an allocation like that in the blink of an eye then I have a bridge in Brooklyn to sell you.

Radeon VII production cost $650+

Take it with a grain of salt, no references other than "our investigations".

Grain of salt taken. Yes, I have heard that the HBM2 costs of Radeon VII would be $320 alone, which seems a bit . . . much. You would think that after all these years of HBM production that production costs would come down. Still, even if the cost of HBM2 on Radeon VII were only $200-$250, that would leave little room for profit after taking into account all the other costs associated with production and sales of Radeon VII.

AtenRa

Lifer

- Feb 2, 2009

- 14,003

- 3,362

- 136

And I will remind you that at least the initial run of Radeon VII amounts to maybe 20k cards.

That has not been confirmed by anyone to this date.

Edit: With 331 mm² per die for Vega 20 and a defect density of 0.1 defects/cm², we get close to 126 workable dies per 300 mm wafer. That means they would only need ~160 wafers to gather 20k chips.
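The wafer estimate can be reproduced with a common textbook approximation for gross dies per wafer, πr²/A - πd/√(2A), combined with a Poisson yield model, Y = e^(-A·D₀). Which formulas the poster actually used isn't stated, so treat this as a sketch under those assumptions; it ignores edge exclusion, scribe lines and die aspect ratio. With them it lands on the same ~126 good dies.

```python
"""Dies-per-wafer and yield estimate for a 331 mm^2 die (textbook approximations)."""
import math

WAFER_DIAMETER_MM = 300.0
DIE_AREA_MM2 = 331.0
DEFECT_DENSITY_PER_CM2 = 0.1


def gross_dies_per_wafer(wafer_d_mm: float, die_area_mm2: float) -> int:
    """Wafer area / die area, minus an edge-loss term (no edge exclusion or scribe)."""
    r = wafer_d_mm / 2
    n = math.pi * r**2 / die_area_mm2 - math.pi * wafer_d_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(n)


def poisson_yield(die_area_mm2: float, d0_per_cm2: float) -> float:
    """Fraction of defect-free dies, Y = exp(-A * D0), with A converted to cm^2."""
    return math.exp(-(die_area_mm2 / 100.0) * d0_per_cm2)


def wafers_needed(target_dies: int, good_per_wafer: int) -> int:
    """Whole wafers required to collect `target_dies` good dies."""
    return math.ceil(target_dies / good_per_wafer)


if __name__ == "__main__":
    gross = gross_dies_per_wafer(WAFER_DIAMETER_MM, DIE_AREA_MM2)
    y = poisson_yield(DIE_AREA_MM2, DEFECT_DENSITY_PER_CM2)
    good = math.floor(gross * y)
    print(f"gross dies: {gross}, yield: {y:.1%}, good dies: {good}")
    print(f"wafers for 20k good dies: {wafers_needed(20_000, good)}")
```

This gives 176 gross dies, about 71.8% yield, 126 good dies and 159 wafers. A different die aspect ratio, edge exclusion, or yield model (Murphy, negative binomial) would shift the result by a few dies either way.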

If you think the pro market won't absorb an allocation like that in the blink of an eye then I have a bridge in Brooklyn to sell you.

Without knowing how many MI50s and MI60s AMD is selling each quarter, and without knowing the production volume of Vega 20 chips, it is futile to even try to draw any conclusions.

Grain of salt taken. Yes, I have heard that the HBM2 costs of Radeon VII would be $320 alone, which seems a bit . . . much. You would think that after all these years of HBM production that production costs would come down. Still, even if the cost of HBM2 on Radeon VII were only $200-$250, that would leave little room for profit after taking into account all the other costs associated with production and sales of Radeon VII.

I don't believe 16GB of HBM2 costs AMD more than $100 to $150 wholesale, directly from the manufacturer.

DrMrLordX

Lifer

- Apr 27, 2000

- 23,197

- 13,286

- 136

That has not been confirmed by anyone to this date.

And yet that's the number most-often repeated. The 5k number has been effectively debunked. That's about it.

Edit: With 331 mm² per die for Vega 20 and a defect density of 0.1 defects/cm², we get close to 126 workable dies per 300 mm wafer. That means they would only need ~160 wafers to gather 20k chips.

Interesting, but irrelevant.

Without knowing how many MI50s and MI60s AMD is selling each quarter, and without knowing the production volume of Vega 20 chips, it is futile to even try to draw any conclusions.

How much money do you think AMD poured into developing Vega20, in total? Certainly there is the expectation of profit to be gained from the exercise. How many MI50s and MI60s do you think they would have to sell to make it worth the company's while?

I don't believe 16GB of HBM2 costs AMD more than $100 to $150 wholesale, directly from the manufacturer.

. . . really? Why?

https://www.extremetech.com/extreme/283957-amd-claims-it-has-enough-radeon-viis-to-meet-demand

This article indicates that Radeon VII's memory + interposer cost would have been $285 in early 2018. You really think the cost of that arrangement would have dropped to $150 or lower just a year later?

Grain of salt taken. Yes, I have heard that the HBM2 costs of Radeon VII would be $320 alone, which seems a bit . . . much. You would think that after all these years of HBM production that production costs would come down.

It's always been plagued with production issues and has never really ramped up to the kind of high volume production that's necessary to drive prices down through economies of scale.

There is also a smaller number of companies producing it, which means less competitive pressure on price.

darkswordsman17

Lifer

- Mar 11, 2004

- 23,444

- 5,852

- 146

Just noticed I had 3TB/s NAND, which uh, that's nowhere close, right? They're pushing more like 3GB/s right now right? Plus what are Infinity Fabric links rated for? Kinda curious how feasible my memory chip bridge might would be as I think that could be a really interesting idea but not sure it'd be worth it if you couldn't get anywhere close to HBM2's bandwidth over IF.
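To put the corrected NAND figure in perspective, here is a rough comparison of how long it would take to move an 80 GB pool's worth of data at ~3 GB/s (the NVMe-class figure mentioned above) versus ~3 TB/s (the HBM-class figure from the original idea). Both rates are the thread's round numbers, not measured values, and the sketch ignores latency and protocol overhead.

```python
"""How long it takes to move 80 GB at NVMe-class vs HBM-class bandwidth (round numbers)."""

POOL_GB = 80.0


def transfer_seconds(size_gb: float, rate_gb_per_s: float) -> float:
    """Idealized transfer time: size divided by sustained rate."""
    return size_gb / rate_gb_per_s


if __name__ == "__main__":
    nand = transfer_seconds(POOL_GB, 3.0)    # ~3 GB/s, NVMe-class NAND
    hbm = transfer_seconds(POOL_GB, 3000.0)  # ~3 TB/s, HBM-class pool
    print(f"NAND: {nand:.1f} s, HBM: {hbm * 1000:.1f} ms, ratio: {nand / hbm:.0f}x")
```

That is roughly 27 seconds versus 27 milliseconds, a 1000x gap, which is why NAND in that design could only ever act as a slow backing store behind the HBM cache.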

So you think the CEO of AMD lies in public about their intentions?

I believe her, and FWIW, as soon as I saw the original story, I assumed there would be a consumer version.

It's common practice for AMD and NVidia to release higher-end chips in Pro products before consumer products, and when they release the pro product they are usually silent about consumer offshoots.

But both prefer to reuse dies across both markets whenever they can. AMD in particular is VERY big on die reuse. Can you name one AMD CPU or GPU die from the last decade that was pro-only?

Yes, I'm sure she's told at least one lie about their intentions. I don't think it was necessarily malicious (no, I'm not trying to incite a negative response or say she needs to be investigated or hit with a class action lawsuit or anything; I just want it called out so that mealy-mouthed half-truths don't become the norm from them), and it might not have been a lie at the time she said it. Heck, she could spin this as: Vega 10 was a gaming chip, and Vega 20 is heavily based on Vega 10, so duh, Vega was always for gaming! Not that she did that.

Absolutely true, well, mostly. AMD tends to be pretty clear about there being a consumer version (as they were with Vega 10), and often their Pro versions come after the consumer ones (as we saw with Polaris, and I think Fury and Hawaii, and most of their other chips for a while there, largely because the extra testing and stability work for the Pro stuff likely took longer). The issue is that the cost of making Vega 20-based products, relative to the performance increase, likely meant the card would have had to be sold at a loss in the consumer gaming market. But then Nvidia upped pricing substantially, so AMD saw an opportunity to make such a card and still make money.

For sure, and that makes sense. So why did people feel that AMD was deliberately making it clear that Vega 20 was not for gamers? That's the issue. I couldn't care less whether they made it a consumer card (honestly, if they're making money from it, awesome for them, and great for the people this is a good product for, including well-to-do gamers who could use the extra performance but didn't want to go with Nvidia for whatever reason).

I'm not saying CEOs in general are always honest.

Specifically Lisa Su in this instance. She seems very much like a straight shooter, and I watched the video, where she didn't seem the least bit evasive or weaselly. She almost laughed at the suggestion that it was only for the data center. Laughing in the sense of how some of the media gets carried away on the tiniest hint of something.

I wonder if AMD is looking to fork Vega off into a compute-only card akin to the Tesla series, while a "scalable" (remember that slide?) Navi arch trimmed of all the compute-related fat handles the consumer space.

AMD likely doesn't have the resources to run two separate lines simultaneously, but it's an interesting thought nonetheless...

I agree, she's a great CEO, and I especially like how she talks. She's like a JHH with less ego: she's enthused about the product and the company and enjoys talking about the technical aspects (compared to, say, someone like Steve Jobs, who seemed to hate the technical stuff and instead focused on "well, I'm saying it's better" type of stuff where there's a lot of ambiguity).

I don't think she was being evasive (she even kinda laughs it off, and I think she was just having a bit of fun with the situation; perhaps a lot of it was people misinterpreting what she said last year, but several people got that impression), but I do not believe this was intended as a retail gaming card. I'm sure things changed. If so, then just fess up. If it turns out to be a limited run and they aren't clear about that, then that is where I'd start to take issue.

The thing is, I don't think she's being nefarious, but she's definitely not being totally open either. And that's expected but still somewhat disappointing.

I don't really get that comment (about running separate lines at the same time; haven't they been doing exactly that with Polaris and Vega, and with Fiji and Hawaii before them?). It's simply about profitability. Prior to Nvidia's RTX cards, I think AMD figured pricing would make it prohibitive for them to sell Vega 20 at a profit in the consumer gaming market. I do not think that Vega 20 is a cheap card to make, and pushing it into other markets requires a certain amount of support that also costs money (so sometimes it's easier not to sell in a market when the costs outweigh what you'd gain from it, even if the unit cost wouldn't outright be higher than the selling price). Plus, they've had other specialty cards: there was that Polaris card with the NAND onboard, and they attempted to sell Fury X2 to VR content creators after it flopped with consumers and they ended up with excess stock that wasn't selling. Actually, I don't remember exactly what happened; I just recall they tried pushing Fury X2, probably because the price and the lack of worthwhile performance for that price made it a poor gaming card.

So if NV cannot sell its high-end GPUs, Vega II at $699 will have a really hard time. That's nice, because both of them will have to lower their prices.

Yeah, and if those benchmarks putting it below the 1080Ti turn out to be largely representative, then I'll seriously question people buying this over RTX cards (and I've been quite critical of those for the perf/$).

AMD wants and needs to sell high-margin products, and Vega II at $699 is one of them. Cutting supply because the market is not willing to buy will not make things better for them. There is no infinite demand for datacenter GPUs; if you don't sell in both the gaming and datacenter markets, you don't increase revenue, and thus you don't make a profit. Simple as that.

Is it? Seems like you're doing what you take issue with others doing, which is making up numbers to support why you think that's true. I have a hunch it's somewhere in the middle. I doubt the margins are great, but I also doubt they're so razor-thin that $699 is the only price at which they can make money.

Er, and pushing stuff into the market that doesn't sell will benefit them? Does that make any sense to you? If it doesn't sell, you'd literally only be losing money by putting it into the market. Now, maybe you're arguing that, well, they already made them, so it's better than not selling them at all, but that's not really the discussion unless they're flopping almost totally in the intended market, have a glut of them, and can get some money back by selling much cheaper to consumers.

That's true (no infinite market), but they could probably sell the exact same Radeon VII cards to datacenters for less than the MI50/60 and still make more money than they will from selling to gamers. Why not do a Frontier Edition version that, again, will have some demand and sell for more? Or sell it as a FirePro? There are other markets they could be selling this in, where the demand will probably be equal or higher and the selling price will almost definitely be higher. Now, perhaps this is for those markets, and they just decided to sell it cheaply to maximize demand without losing money on any single card. I could see that being a possibility (AMD has intentionally aimed to disrupt pricing before). If so, that would be awesome (provided it has quality support for those customers; I'd be interested in its 3D modeling capabilities, and might be able to make the case for getting one for that usage if it does very well there). I am skeptical of that, though, since it would be weird for them to be selling a more expensive but less capable Vega 10-based card there.

You say that, but I don't think the consumer gaming market is going to matter much at all for the profitability of Vega 20. If it's profitable in the datacenter, then it'd be profitable; if not, I doubt the gaming market would make it profitable, and at that point it'd probably just be about avoiding a total wash.

Looks like some benches leaked ahead of launch.

https://videocardz.com/79870/amd-radeon-vii-benchmarks-leak-ahead-of-launch

I don't follow 3DMark scores, so I have no idea if those are good or not. And while I believe FFXV favors Nvidia, that doesn't look very good. I really won't be surprised if performance on this card is all over the place: sometimes really good, other times barely better than Vega 64.

Radeon VII is basically a loss-leader to keep the brand fresh in the consumer market. AMD knows that Polaris isn't going to be enough on its own until Navi launches. Being an AMD board partner right now must be an agonizing affair.

And I will remind you that at least the initial run of Radeon VII amounts to maybe 20k cards. If you think the pro market won't absorb an allocation like that in the blink of an eye then I have a bridge in Brooklyn to sell you.

Grain of salt taken. Yes, I have heard that the HBM2 on Radeon VII alone would cost $320, which seems a bit . . . much. You would think that after all these years of HBM production, costs would have come down. Still, even if the cost of HBM2 on Radeon VII were only $200-$250, that would leave little room for profit after taking into account all the other costs of producing and selling Radeon VII.
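
The squeeze can be sketched using only the figures in the thread: the $699 launch price and the HBM2 estimates. This is optimistic, since AMD's own take per card is below the retail price once retail and distribution margins come out.

```python
MSRP_USD = 699  # Radeon VII launch price

# HBM2 cost estimates mentioned in this thread, in USD.
HBM2_ESTIMATES = {"rumored": 320, "high": 250, "low": 200}

def headroom(msrp: float, hbm2_cost: float) -> float:
    """Dollars of the retail price left after paying for the HBM2 alone."""
    return msrp - hbm2_cost

for label, cost in HBM2_ESTIMATES.items():
    share = cost / MSRP_USD * 100
    # The 7nm die, board, cooler, packaging, shipping, retail margin and
    # any profit all have to come out of what's left.
    print(f"{label:>7}: HBM2 ${cost} ({share:.0f}% of MSRP), ${headroom(MSRP_USD, cost)} left")
```

Even at the low estimate, more than a quarter of the sticker price goes to memory before anything else is paid for.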

That would be my guess. There were also rumors about it being meant to commemorate the retirement of some longtime AMD person. Which is neat, but it reminds me of their special-edition (or whatever it was) attempt at moving Fury X2 cards.

I think this chip is serving its purpose. It's a pipe cleaner for AMD to get experience with 7nm, and it's targeted at a market that will take any performance increase it can get, even at high prices. That they can sell it to consumers is nice, but it doesn't magically make it a great product worth its asking price there, even if it is a lot less expensive than in its intended market.

And yet that's the number most-often repeated. The 5k number has been effectively debunked. That's about it.

Interesting, but irrelevant.

How much money do you think AMD poured into developing Vega 20, in total? Certainly they expect to profit from the exercise. How many MI50s and MI60s do you think they would have to sell to make it worth the company's while?

. . . really? Why?

https://www.extremetech.com/extreme/283957-amd-claims-it-has-enough-radeon-viis-to-meet-demand

This article indicates that Radeon VII's memory + interposer cost would have been $285 in early 2018. You really think the cost of that arrangement would have dropped to $150 or lower just a year later?

Honestly? Not that much beyond what they needed to spend to learn 7nm anyway. It really seems like Vega 10 on 7nm with some very minor tweaks, newer HBM2, and improved software. Most of the cost was just the engineering for 7nm (and that was needed for the rest of their products, so being able to get a worthwhile product out of it is a good thing, especially if it means their other stuff ends up better and isn't hampered by delays from learning 7nm). But I think they targeted HPC/enterprise because they wanted to make sure they would make money on it. Hopefully their yields and HBM2-related costs are low enough that they're getting decent margins on Radeon VII too. If Navi works out well, an HBM2 version for mobile (like the MacBook Pro) could be nice.

Nice find. Yeah, I'd be surprised if it's not around $250 at best.

It's always been plagued with production issues and has never really ramped up to the kind of high volume production that's necessary to drive prices down through economies of scale.

There is also a smaller number of companies producing it, which means less competitive pressure on price.

HBM2 costs certainly haven't dropped enough to make it really worthwhile, or else I think we'd have seen Nvidia go that route on either mobile RTX or the Titan and 2080Ti chips, as I'm sure the bandwidth would've been worth it (they could get up to about 1.2TB/s using four of the 307GB/s stacks, which would almost double the RTX bandwidth). And it would help make their high prices a little more worth it, but the costs were probably high enough that Nvidia would either have had to give up margin or push prices to even higher levels.
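
The 1.2TB/s figure follows from four stacks at 307GB/s each. Comparing it with the GDDR6 setups on the RTX 2080 Ti (352-bit bus at 14 Gbps) and Titan RTX (384-bit bus at 14 Gbps) shows where "almost double" comes from. A quick sketch:

```python
def bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: bus width x per-pin rate / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8

hbm2_4_stacks = 4 * 307                # GB/s, four of the 307GB/s stacks from the post
rtx_2080_ti = bandwidth_gbs(352, 14)   # 616.0 GB/s
titan_rtx = bandwidth_gbs(384, 14)     # 672.0 GB/s

print(hbm2_4_stacks)                          # 1228
print(round(hbm2_4_stacks / rtx_2080_ti, 2))  # 1.99
print(round(hbm2_4_stacks / titan_rtx, 2))    # 1.83
```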

GodisanAtheist

Diamond Member

- Nov 16, 2006

Holy multi-quote Batman!

- Nvidia released the Volta arch as a pure compute line, and I doubt we're going to see the Turing arch with RT cores enter the compute space. NV has forked off a completely separate arch for compute purposes only and gone a different direction for pros and consumers.

If AMD keeps pursuing this with a jack of all trades approach, they will eventually be left behind in all markets.

So I simply wondered out loud whether Navi will come to be a top-to-bottom arch rather than merely a mainstream part replacement (scalability, remember that slide?), and whether the VII is truly a stopgap part with very low R&D or driver investment, meant to keep the brand afloat in the meantime.

Vega appears to be a fairly competent compute arch, so AMD can continue pursuing the arch exclusively in the compute space without worrying about its gaming chops.

AnandTech is part of Future plc, an international media group and leading digital publisher.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.