I think Mopetar's theory is interesting, but we can see the yields:

AMD Radeon RX 6000 Series overview and fast delivery at Proshop a/s

www.proshop.dk

100x 6800s delivered vs only 25x 6800XTs. That would seem to imply 6800s are yielding 4x the 6800XT.

This bears out what I saw on Amazon on launch day: the 6800s stayed in stock a lot longer than the 6800XTs.

This does not discredit Mopetar's theory. It is possible that defects are turning would-be 6900XTs into 6800s, leaving the 6800XT as a hole in the lineup.

While it's true that this doesn't really change the theory (it's a matter of selling every possible die as a 6900XT, and it makes no predictions about how the binned dies are split between the other SKUs), it would be odd for AMD to pursue that kind of profit-maximizing strategy while ending up with a 4:1 ratio of 6800 to 6800XT dies, since the 6800 has an even worse value per wafer. That only makes sense if they absolutely had to bin that way to hit performance targets.

Last year at this time, TSMC reported the defect density of their 7nm node as 0.09 defects/cm^2. I also recall a more recent interview (I forget where it was and who it was with) where someone from AMD said that more than 95% of their chiplets were coming back defect free, which puts the defect density closer to 0.06 defects/cm^2. If that was long enough ago, it may have improved further. Of course, just because none of the silicon is defective doesn't mean every die can hit the same clock speeds or operate within particular voltage or power thresholds, so there's still a need to bin and disable some of the cores to ensure the remaining ones can hit certain performance metrics.
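One way to sanity-check the 0.06 figure is to invert the simple Poisson yield model, Y = exp(-D·A), where Y is the defect-free fraction, D the defect density and A the die area. The sketch below assumes a chiplet area of about 80 mm^2, roughly the size of a Zen CCD; the interview didn't say which chiplet was meant, so that input is an assumption, and the Poisson model is a standard textbook choice rather than anything AMD or TSMC stated.

```python
import math


def defect_density_from_yield(defect_free_fraction: float, die_area_mm2: float) -> float:
    """Invert the Poisson yield model Y = exp(-D * A) to get D in defects/cm^2."""
    area_cm2 = die_area_mm2 / 100.0  # 100 mm^2 per cm^2
    return -math.log(defect_free_fraction) / area_cm2


# Assumed ~80 mm^2 chiplet (hypothetical input, not from the interview).
d = defect_density_from_yield(0.95, 80.0)
print(f"{d:.3f} defects/cm^2")  # prints 0.064 defects/cm^2
```

A smaller assumed chiplet pushes the result up (about 0.07 at 74 mm^2), so "closer to 0.06" is only as good as the area you plug in.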

We can use the figures we already have and run the calculations for Navi 21. We know the die size is 29 mm x 18.55 mm, and if you plug that into a die calculator you get 96 - 100 Navi 21 dies per wafer. The most recent estimated defect density gives AMD an average of 70 - 73 fully functional dies and 26 - 27 dies with some defective silicon. If we use TSMC's numbers from last November, it works out to 60 - 63 full dies and 36 - 37 defective dies.
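The arithmetic behind these numbers can be reproduced roughly as follows. This is a sketch under stated assumptions: a 300 mm wafer, the common textbook die-per-wafer approximation (no scribe lines or edge exclusion), and two standard yield models, Poisson and Murphy. Which model the die calculator actually uses isn't known, so both are shown.

```python
import math

WAFER_DIAMETER_MM = 300.0  # assumption: standard 300 mm wafer


def gross_dies_per_wafer(die_w_mm: float, die_h_mm: float,
                         wafer_d_mm: float = WAFER_DIAMETER_MM) -> int:
    """Textbook approximation: wafer area / die area, minus an edge-loss term.

    Ignores scribe lines and edge exclusion, so it runs a bit higher than a
    real die-per-wafer calculator.
    """
    area = die_w_mm * die_h_mm
    radius = wafer_d_mm / 2.0
    return int(math.pi * radius ** 2 / area
               - math.pi * wafer_d_mm / math.sqrt(2.0 * area))


def poisson_yield(d_per_cm2: float, area_cm2: float) -> float:
    """Fraction of dies with zero defects: Y = exp(-D*A)."""
    return math.exp(-d_per_cm2 * area_cm2)


def murphy_yield(d_per_cm2: float, area_cm2: float) -> float:
    """Murphy's model: Y = ((1 - exp(-D*A)) / (D*A))^2."""
    x = d_per_cm2 * area_cm2
    return ((1.0 - math.exp(-x)) / x) ** 2


AREA_CM2 = 29.0 * 18.55 / 100.0  # 537.95 mm^2 -> ~5.38 cm^2

print("gross dies (approx.):", gross_dies_per_wafer(29.0, 18.55))
for d in (0.06, 0.09):
    for n in (96, 100):  # die-calculator range quoted above
        full = n * murphy_yield(d, AREA_CM2)
        print(f"D={d:.2f} dies={n}: full={full:.1f} defective={n - full:.1f} "
              f"(Poisson full={n * poisson_yield(d, AREA_CM2):.1f})")
```

With these inputs, Murphy's model gives about 70 - 73 full dies at 0.06 defects/cm^2 and about 60 - 63 at 0.09, which lines up with the calculator figures above; the plain Poisson model comes out roughly one die lower. The gross-die formula itself gives 102, a little above the calculator's 96 - 100 because it ignores scribe lines and edge exclusion.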

Defects are essentially randomly distributed, so they're as likely to hit any part of the wafer as any other. With a die shot of Navi 21 and an image editor, we can figure out how much of each die's area is taken up by the various components. From there we can start to estimate how many dies may fall into certain bins. Any die with a defect in the front end is just scrap, and the same goes for anything else that doesn't have redundancy, like the circuitry for the display connectors. Depending on how the L2 is connected (I'd have to look up whether it's segmented between the different shader engines), defects there could essentially brick a die, although Nvidia, for example, can disable parts of its L2 cache.

One curious thing about these parts is that the 6800 doesn't have any disabled memory controllers or any disabled infinity cache. The memory controllers / connections aren't a huge part of the chip (about 64 mm^2, based on estimates I found for RDNA1 chips, which should use the same amount since they have the same 256-bit bus on the same 7nm node), so it's unlikely that you'd see too many defects there. However, the infinity cache is said to take up about 86 mm^2, or about 16% of the die. It's possible that they built some redundancy into the infinity cache, so a defect there is far less likely to cause an actual problem or has a good chance of being worked around.

Together, the memory controllers and infinity cache probably account for slightly more than 25% of the die area. Depending on how much redundancy the infinity cache has, somewhere between 3 - 9 chips per wafer should have a defect in these areas and can't be used for any of the Navi 21 parts we know about so far. I wouldn't be surprised if these end up in some OEM-only part.
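The 3 - 9 range can be approximated by asking how many dies get at least one defect inside a given region. The sketch below assumes Poisson-distributed defects spread uniformly over the die and uses the area estimates quoted above (64 mm^2 for the memory controllers, 86 mm^2 for the infinity cache); the "best case" treats the infinity cache as fully protected by redundancy, which is itself an assumption.

```python
import math

# Area estimates quoted in the post (not official AMD figures).
DIE_AREA_MM2 = 29.0 * 18.55
MEMORY_CTRL_MM2 = 64.0
INFINITY_CACHE_MM2 = 86.0


def expected_dies_hit(n_dies: int, d_per_cm2: float, region_mm2: float) -> float:
    """Expected dies with at least one defect inside a region of the given area,
    assuming Poisson-distributed defects spread uniformly over the die."""
    return n_dies * (1.0 - math.exp(-d_per_cm2 * region_mm2 / 100.0))


share = (MEMORY_CTRL_MM2 + INFINITY_CACHE_MM2) / DIE_AREA_MM2
print(f"MC + IC share of die: {share:.1%}")
for n in (96, 100):
    # Best case: infinity cache redundancy absorbs every defect there.
    low = expected_dies_hit(n, 0.06, MEMORY_CTRL_MM2)
    # Worst case: no redundancy anywhere in these two regions.
    high = expected_dies_hit(n, 0.06, MEMORY_CTRL_MM2 + INFINITY_CACHE_MM2)
    print(f"{n} dies/wafer: {low:.1f} to {high:.1f} dies lost to MC/IC defects")
```

At 0.06 defects/cm^2 this gives roughly 4 to 9 dies per wafer, in line with the estimate above, and the two regions together come to about 28% of the die. Using TSMC's older 0.09 figure would push the upper end to about 12 - 13.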

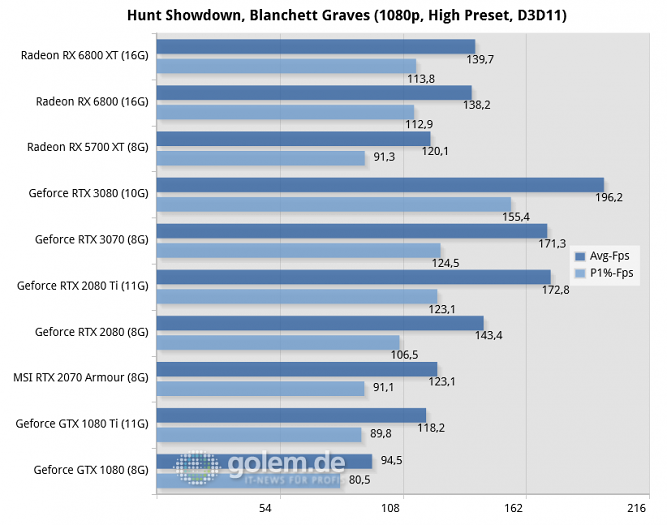

The bins for the 6800/XT can exist for a few reasons. The first is hardware defects. Anything that takes out one of the four shader engines, or any part essential to its functioning (such as one of the ROPs), automatically makes the die a 6800. A 6800XT would come from other types of defects, chiefly a defect in one of the WGP blocks (think CUs in the old GCN architecture), meaning some of the shaders can't function. In this case 4 WGPs in total get disabled, the defective one included, since the 6800XT has 72 CUs to the full die's 80 and each WGP holds 2 CUs.

The other reason for the bins is intentionally disabling hardware that can't hit the required clock speeds or stay within power budgets and would drag the rest of the chip down with it. A full die might get converted to a 6800XT if this problem occurs in the WGPs, or to a 6800 if it's in anything associated with one of the shader engines. Presumably a 6800XT with a defect in a WGP could get knocked down to a 6800 if its shader engine has performance problems or another set of WGPs in that same shader engine has problems.
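The binning rules from the last two paragraphs can be written down as a toy decision function. Everything here is hypothetical: the fault kinds, the function name `bin_navi21`, and the rule that two disabled shader engines means no known SKU are illustrative assumptions, not AMD's actual binning flow. A "fault" stands for either a hard defect or a block that can't hit its clock or power target.

```python
from collections import Counter

# Hypothetical fault kinds with no redundancy for any Navi 21 part we know of.
UNUSABLE_KINDS = {"front_end", "display", "memory_ctrl", "infinity_cache"}


def bin_navi21(faults):
    """Toy model of the binning rules described above (not AMD's actual flow).

    faults: list of (kind, shader_engine) pairs, where kind is one of
    UNUSABLE_KINDS, "se_core" (something a shader engine can't work without,
    such as its ROPs) or "wgp". A fault can be a hard defect or a block that
    can't hit the required clocks or power budget.
    """
    if any(kind in UNUSABLE_KINDS for kind, _ in faults):
        return "no known SKU"
    bad_engines = {se for kind, se in faults if kind == "se_core"}
    wgp_faults = Counter(se for kind, se in faults if kind == "wgp")
    # More than one problem WGP in the same engine knocks out that engine.
    bad_engines |= {se for se, count in wgp_faults.items() if count > 1}
    if len(bad_engines) > 1:
        return "no known SKU"  # assumption: no known part disables two engines
    if bad_engines:
        return "RX 6800"
    if wgp_faults:
        return "RX 6800 XT"  # 4 WGPs disabled in total
    return "RX 6900 XT"


print(bin_navi21([]))                          # RX 6900 XT
print(bin_navi21([("wgp", 0)]))                # RX 6800 XT
print(bin_navi21([("wgp", 1), ("wgp", 1)]))    # RX 6800
print(bin_navi21([("se_core", 2)]))            # RX 6800
print(bin_navi21([("front_end", None)]))       # no known SKU
```

Feeding this kind of function with faults sampled in proportion to each block's die area is one way to turn an annotated die shot into expected bin ratios.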

I'll need to do some digging, or play around with an image editor if I can find an annotated die shot, to figure out the relative area of the rest of the chip. That would help us determine how defects might naturally create the bins for the 6800/XT. The shaders should be the predominant feature on the die, so a defect is most likely to hit a WGP. The layout of certain sections of the chip could influence the likelihood of poor performance, but AMD would have learned to minimize this from RDNA1.

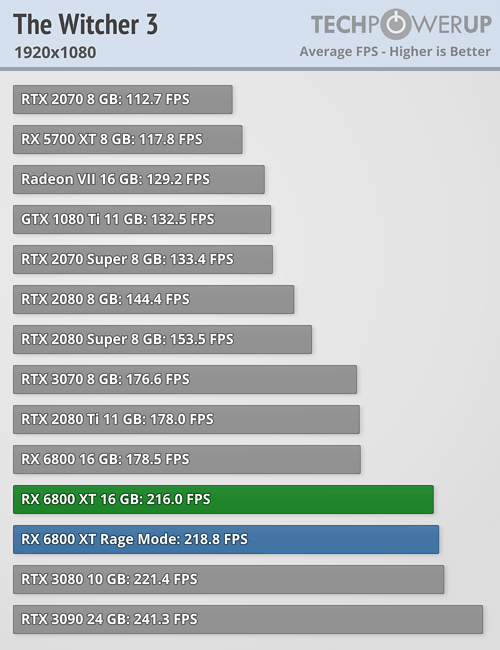

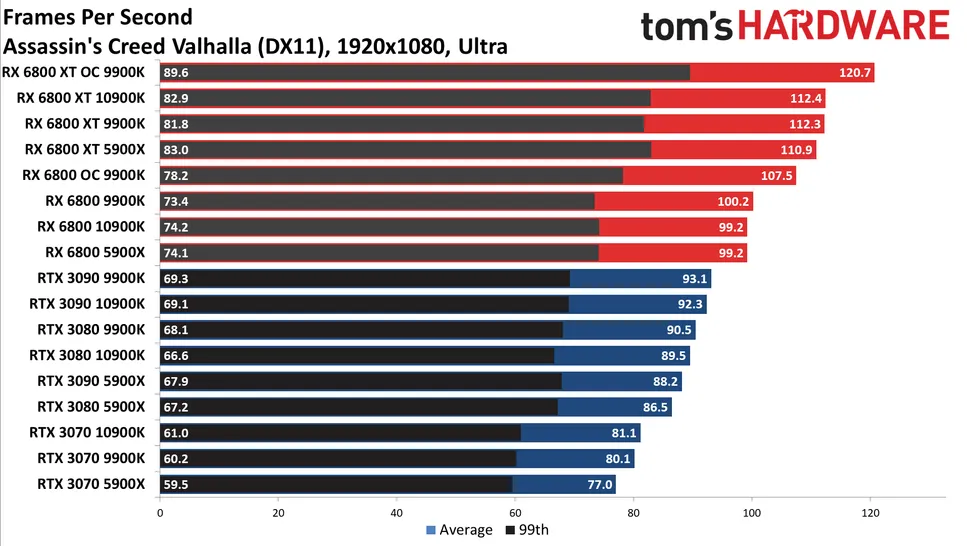

Another piece of data that we want is OC results for 6800 and 6800XT cards. The 6800's clock speeds are 125/145 MHz lower for base/boost, so we need to see where they end up with an OC to determine whether the 6800 is being held back by an inability to hit the same speeds, or whether it can overclock just as well and the lower clocks are there because AMD wanted a particular result for the reference spec. Combined with the data on where we expect defects, we could make better guesses at how many chips are being artificially binned because they can't hit the required clocks, and estimate how many full dies would need to be binned for this reason.

I do want to note one potential flaw with the link you posted: we have no idea if it's representative of other retailers in other countries. AMD doesn't just want to allocate cards evenly, but to adjust the mix based on what's going to sell best in each market, and they have historical sales data to make these kinds of adjustments. Subjective observation normally isn't the best evidence and may be confirmation bias as much as anything else. The 6800 seeming to stay in stock longer could also be a result of less interest, or of buyers only grabbing one after they realize that a 6800 XT can't be had.

That aside, any data is better than no data. We just want to be careful not to read too much into it (or, on the flip side, discard it because it doesn't fit our preconceived notions) until additional data points can confirm the initial one or give a better idea of how much variability exists between the points and whether a reasonable estimate can be produced for the average value. Without the other pieces filled in, I would say it makes my original estimates for the number of 6900XT dies seem too optimistic. Unless those numbers don't represent the overall ratio, or the defect analysis shows that kind of ratio to be an expected outcome, it would point to more full dies being binned.