igor_kavinski

Lifer

- Jul 27, 2020

- 28,173

- 19,204

- 146

They might if they are bored and have nothing better to do. Not a bad strategy if you want your game back in the news.

Only games that actually use RT heavily would benefit (also, I don't expect Remedy to update Control at this point, for example). I'd assume that implementation would be rather straightforward: just replace the denoiser(s) with this one. It likely isn't quite that simple, but it isn't necessarily much more complicated.

But then you need two different denoise paths, one for RTX cards and one for everyone else. That's a problem if, as they indicate, the denoisers apply in different places, as would be the case if RTX cards replace multiple denoisers with a single one while everyone else still uses the multiple steps. This is VERY messy.

I think this will be limited to partner games where they get heavy funding/help from NVidia.
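To make the "two denoise paths" worry concrete, here is a rough sketch of how a renderer might pick its per-frame stages. Everything in it is hypothetical: the `GpuCaps` type, the `supports_unified_denoiser` flag, and the stage names are made up for illustration and are not taken from any real engine or SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuCaps:
    """Hypothetical capability record for the GPU the game is running on."""
    vendor: str
    supports_unified_denoiser: bool  # assumed flag, not a real API query


def build_frame_stages(caps: GpuCaps) -> list[str]:
    """Return the ordered stage names for one ray-traced frame."""
    if caps.supports_unified_denoiser:
        # One learned pass stands in for every per-effect denoiser
        # and sits where the upscaler would normally be.
        return ["trace_rays", "unified_denoise_and_upscale", "post_process"]
    # Fallback: one hand-tuned denoiser per effect, then a separate upscale.
    return [
        "trace_rays",
        "denoise_reflections",
        "denoise_shadows",
        "denoise_gi",
        "upscale",
        "post_process",
    ]


rtx_path = build_frame_stages(GpuCaps("nvidia", True))
other_path = build_frame_stages(GpuCaps("other", False))
print(len(rtx_path), len(other_path))
```

The point of the sketch is that the two lists differ in shape, not just in one swapped stage, so both paths have to be tuned and tested separately.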

Nvidia is probably 90% of the RT market, and this works with all Nvidia RTX cards, not just the 4000 series, so "everyone else" isn't a lot. They can manage to support both DLSS and FSR; I suspect this will be similar: all games supporting it other than AMD exclusives.

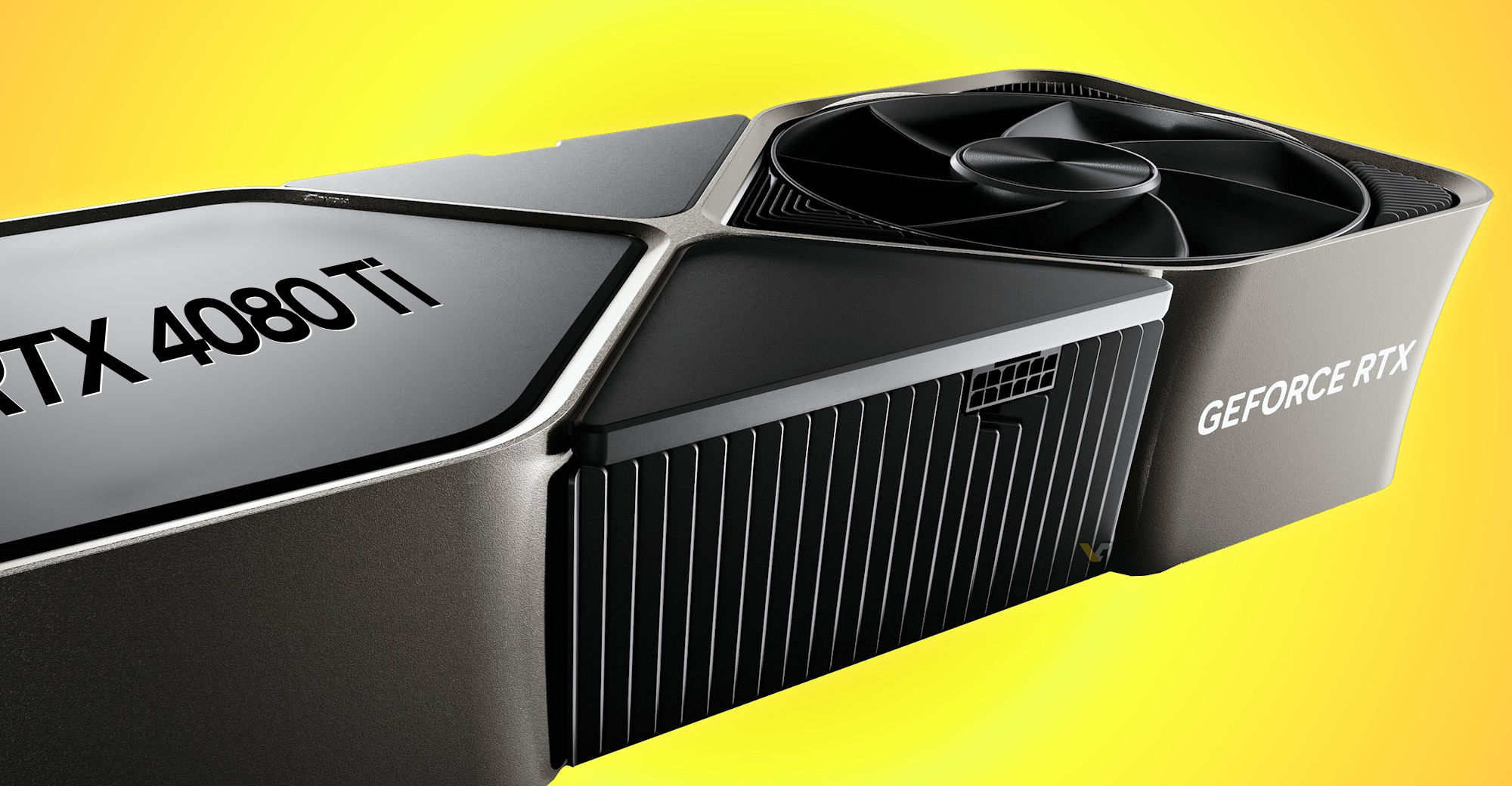

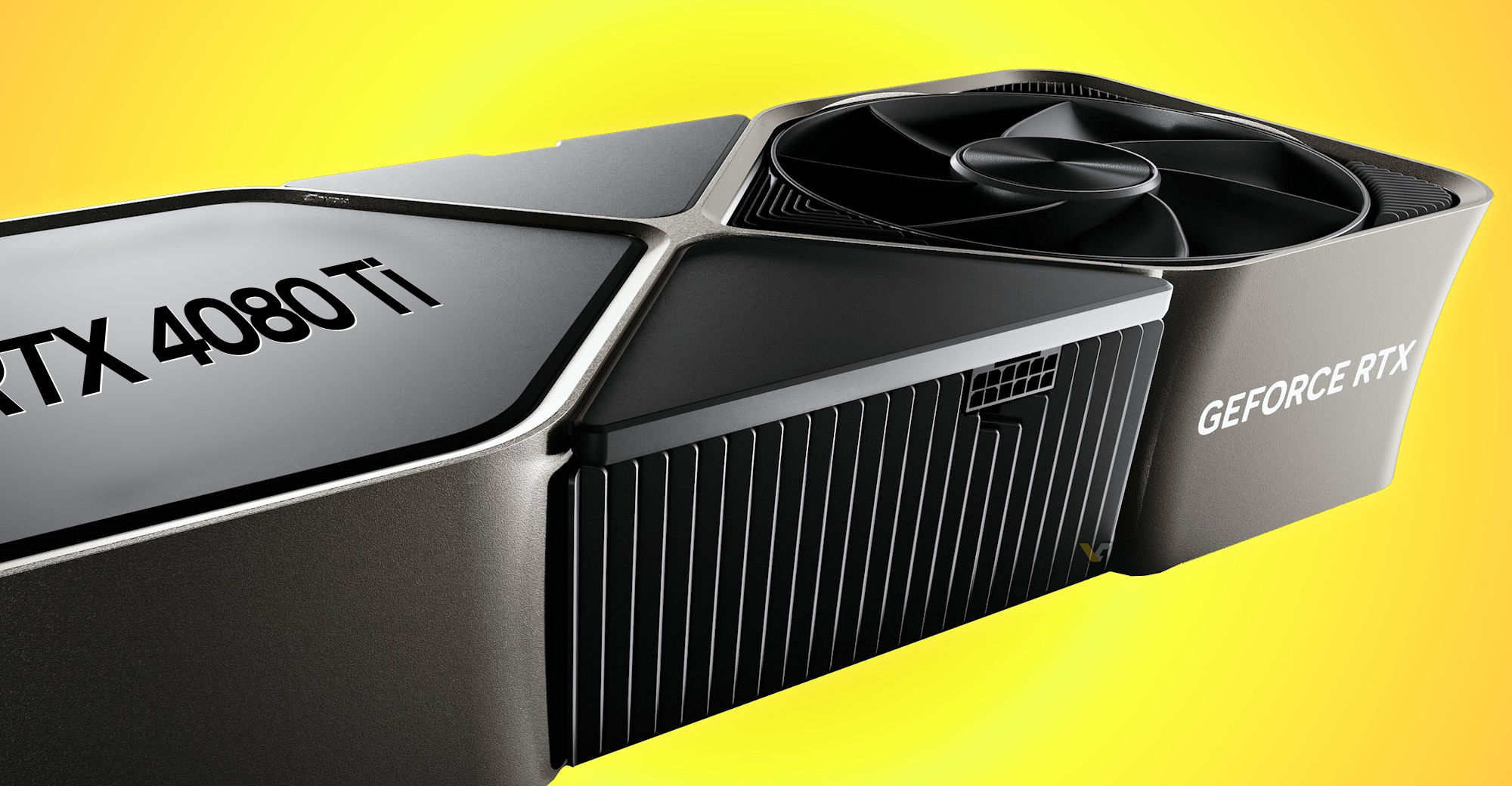

It actually makes sense based on future GPU lineups. I am maintaining a table of the Blackwell GPU series on the front page; it is nearly complete, so have a look to see the reasoning behind this. Even with the release of a 4080 Ti with 20GB on a 320-bit bus, there is still headroom for an upcoming 5080 Ti because of its 24GB, 384-bit memory bus support.
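The capacity figures follow from the bus width. A minimal sketch, assuming one 2GB (16Gb) GDDR6/GDDR6X chip per 32-bit slice of the bus and no clamshell (double-sided) mounting; the function name and defaults are mine, chosen for illustration:

```python
def gddr_capacity_gb(bus_width_bits: int,
                     chip_density_gb: int = 2,
                     chip_interface_bits: int = 32) -> int:
    """VRAM capacity implied by a bus width.

    Assumes one memory chip per 32-bit slice of the bus, each chip
    holding `chip_density_gb` gigabytes (2GB = 16Gb by default).
    """
    if bus_width_bits % chip_interface_bits != 0:
        raise ValueError("bus width must be a multiple of the chip interface width")
    chips = bus_width_bits // chip_interface_bits
    return chips * chip_density_gb


for bus in (192, 256, 320, 384):
    print(f"{bus}-bit -> {gddr_capacity_gb(bus)}GB")
# 192-bit -> 12GB
# 256-bit -> 16GB
# 320-bit -> 20GB
# 384-bit -> 24GB
```

So a 320-bit card lands on 20GB and a 384-bit card on 24GB, which is the gap between the rumored 4080 Ti and a 5080 Ti described above.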

NVIDIA reportedly working on GeForce RTX 4080 Ti with AD102 GPU for early 2024 release - VideoCardz.com

NVIDIA RTX 4080 Ti incoming: Leaker claims NVIDIA is working on a faster RTX 4080. MEGAsizeGPU, a highly reliable hardware leaker, has hinted at the possibility of NVIDIA introducing a fresh addition to its graphics card lineup, named either the RTX 4080 Ti or RTX 4080 SUPER. According to the... (videocardz.com)

Sketchy rumor that nVidia is working on a deeper-cut AD102 part for release in early 2024. It says the price would be in the 4080's MSRP range. I dunno... the 4090 is deeply cut as it is, so I'm not sure it's necessary. Presumably they would also cut the 4080's MSRP to $999 at the same time.

OTOH, there is no headroom for the 4070 series even though it is being outperformed by the RX 7800 XT. NV won't respond, because if they come out with a 4070S with a higher CUDA core count, its performance would be too close to the 4070 Ti. They have to live with it; they won't cut the price officially because NV wants to maintain the price line until the 5070 comes out. AMD finally wins over NV with the N32 series...

2060 Super nearly matched 2070 performance, so an update being "too near" another card is not something they are worried about.

That being said, the rumor is probably a fake. Wait for some leak from kopite7kimi.

When I said no headroom, I also meant headroom for a future RTX 5070. If my speculation is correct, the RTX 5070 will keep the same memory bus with 12GB of GDDR6X. Thus, there won't be much improvement in rasterization; RT and tensor performance are a different story, but that one will have to wait...

Or GDDR7, which has 50%+ more bandwidth, which is plenty of headroom.

Nah, I still think NV will use GDDR6X as the standard memory choice. That explains the large amount of L2 cache, used to offset the bandwidth deficiency against GDDR7. I know it sounds unrealistic, especially since RDNA5 will most likely use GDDR7, but the rumor of a 512-bit memory bus and my table convince me that NV will milk GDDR6X for one more generation...
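For a sense of scale on the bandwidth gap being argued about, peak bandwidth is just bus width times per-pin data rate. A minimal sketch; the 21 Gbps GDDR6X and 32 Gbps GDDR7 per-pin rates are assumptions picked for illustration, not confirmed specs for any future card:

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: (bus width in bytes) x (Gbps per pin)."""
    return bus_width_bits / 8 * data_rate_gbps


GDDR6X_GBPS = 21.0  # assumed per-pin rate for GDDR6X
GDDR7_GBPS = 32.0   # assumed per-pin rate for early GDDR7

gddr6x = peak_bandwidth_gbs(192, GDDR6X_GBPS)  # 504.0 GB/s
gddr7 = peak_bandwidth_gbs(192, GDDR7_GBPS)    # 768.0 GB/s
uplift = gddr7 / gddr6x - 1

print(f"192-bit GDDR6X: {gddr6x:.0f} GB/s")
print(f"192-bit GDDR7:  {gddr7:.0f} GB/s ({uplift:.0%} more)")
```

Under those assumed rates the same 192-bit bus gains roughly 52%, which is where a "50%+" figure could come from; a larger L2 cache only narrows that gap to the extent that it cuts the traffic that reaches memory.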

Rumors say the 5000 series is only coming in 2025. By then GDDR7 will be ubiquitous.

Not exactly at the beginning of 2025; NV will most likely launch its flagship and mobile GPUs in early 2025. And remember, NV controls over 80% of the GPU market (over 95% in mobile GPUs), which means NV needs millions of memory chips to support the launch. That's why the safe bet is actually to support GDDR6 and GDDR6X. AMD, OTOH....

Thinking about NV's current product lineup, I think NV is planning the Blackwell series with GDDR6X at the initial stage, which explains the weird lineup in this generation. All RTX 4000 models except the 4090 show only a small improvement over the Ampere lineup. With Blackwell, NV could finally show the full potential of a generational leap... we shall see.

NVidia typically pushes the bleeding edge on available memory.

Yeah, they also care about profit margin. With a wider GDDR6X bus and a large amount of L2 cache, NV is well positioned against a chiplet-based RDNA5. Remember, NV has to pay more for a monolithic design on the N3E process, so using GDDR6X somewhat remedies the profit margin, which is what NV cares about the most....


True, but how much are the extra packaging costs for AMD? Also, more complex packaging leads to a higher number of rejected GPUs (on top of defective dice). Not sure how that all squares up. That said, chiplets/tiles are the only way forward once TSMC moves to High-NA EUV. Maybe NV is waiting until they have no choice.

Yeah, NV and AMD are both preparing their new generation of GPU lineups with different solutions:-

Chiplet designs should produce fewer low-quality GPUs, as they can test and bin the chips before combining them. So they can then combine chiplets that are binned the same. And they can toss only the truly broken chiplets, instead of having to scrap an entire monolithic chip.

Well, that's the plan. It has held up well with the simpler packaging being used now. Fast forward a bit into the future, and we will see. These sorts of complexities are fine for something like the AMD MI300. The difficulty is getting it done on high-volume GPUs. The big RDNA4 GPUs were to have more complex packaging. Now, the problem seems to be that it was just too time consuming to design to hit the required market window. But I am curious what the yield would be after packaging. Guess we will see when RDNA5 comes out (hopefully, as AMD knew they were running behind long before we did, RDNA5 was able to be pushed up a bit; we shall see).
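The yield-versus-packaging trade-off in the last few posts can be put into rough numbers with the textbook Poisson yield model, Y = exp(-area x defect density). This is only a sketch: the 600 mm² die, the 0.1 defects/cm² density, and the 95% packaging yield are made-up inputs, and it ignores salvaging partly defective dice as cut-down parts.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dice with zero defects under the Poisson yield model."""
    return math.exp(-area_cm2 * defects_per_cm2)


def chiplet_good_fraction(total_area_cm2: float, n_chiplets: int,
                          defects_per_cm2: float, packaging_yield: float) -> float:
    """Fraction of fabricated silicon that ends up in a working package.

    Chiplets are tested first, so only known-good dice get packaged;
    a fraction (1 - packaging_yield) of assembled packages is then lost.
    """
    die_yield = poisson_yield(total_area_cm2 / n_chiplets, defects_per_cm2)
    return die_yield * packaging_yield


AREA = 6.0     # cm^2 (600 mm^2), assumed total silicon per GPU
D0 = 0.1       # defects per cm^2, assumed
PKG_YIELD = 0.95  # assumed yield of the advanced packaging step

mono = poisson_yield(AREA, D0)
chiplet = chiplet_good_fraction(AREA, 4, D0, PKG_YIELD)
# Packaging yield below which four chiplets stop beating the monolithic die.
break_even = mono / poisson_yield(AREA / 4, D0)

print(f"monolithic: {mono:.3f}  4 chiplets: {chiplet:.3f}  break-even pkg yield: {break_even:.3f}")
```

With these inputs the monolithic die comes out around 0.55 and the four-chiplet design around 0.82, so packaging would have to fail on more than a third of units before the chiplet advantage disappears. Real numbers depend heavily on the actual defect density and on how much of a defective monolithic die can be sold as a cut-down part.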

| AMD | Die | CU | Memory | TGP | nVidia | Die | SM | Memory | TGP |
|---|---|---|---|---|---|---|---|---|---|
| ? | N43 | 36 | 10GB 160-bit GDDR6 | ? | RTX4070 | AD106 | 36 | 8GB 128-bit GDDR6 | 115W |
| | | | | | RTX4070Ti | AD104 | 46 | 12GB 192-bit GDDR6 | 150W |
| ? | N43 | 40 | 12GB 192-bit GDDR6 | ? | RTX4080 | AD104 | 58 | 12GB 192-bit GDDR6 | 150W |
| 7900M | N31 | 72 | 16GB 256-bit GDDR6 | 180W | RTX4080Ti | AD103 | 68 | 12GB 192-bit GDDR6 | 150W |
| | | | | | RTX4090 | AD103 | 76 | 16GB 256-bit GDDR6 | 150W |
| | | | | | RTX4090Ti | AD102 | 100 | 16GB 256-bit GDDR6 | 200W |

????? Navi4 is not 7000 series.

I am trying to compile the upcoming mobile GPUs from both nVidia and AMD based on leaks; well, it does make more sense than the desktop GPUs. Basically, nVidia is going to release Ti models to counter AMD's offerings, either through better specs or a lower price tier. The specs are not final, so modifications are pending:-

| AMD | Die | CU | Memory | TGP | nVidia | Die | SM | Memory | TGP |
|---|---|---|---|---|---|---|---|---|---|
| 7800M | N43 | 36 | 10GB 160-bit GDDR6 | ? | RTX4070 | AD106 | 36 | 8GB 128-bit GDDR6 | 115W |
| | | | | | RTX4070Ti | AD104 | 46 | 12GB 192-bit GDDR6 | 150W |
| 7800M XT | N43 | 40 | 12GB 192-bit GDDR6 | ? | RTX4080 | AD104 | 58 | 12GB 192-bit GDDR6 | 150W |
| 7900M ? | N31 | ? | ? | 175W | RTX4080Ti | AD103 | 68 | 12GB 192-bit GDDR6 | 150W |
| 7900M XT | N31 | 72 | 16GB 256-bit GDDR6 | 175W | RTX4090 | AD103 | 76 | 16GB 256-bit GDDR6 | 150W |
| | | | | | RTX4090Ti | AD102 | 100 | 16GB 256-bit GDDR6 | 200W |