- Mar 3, 2017

Some tidbits

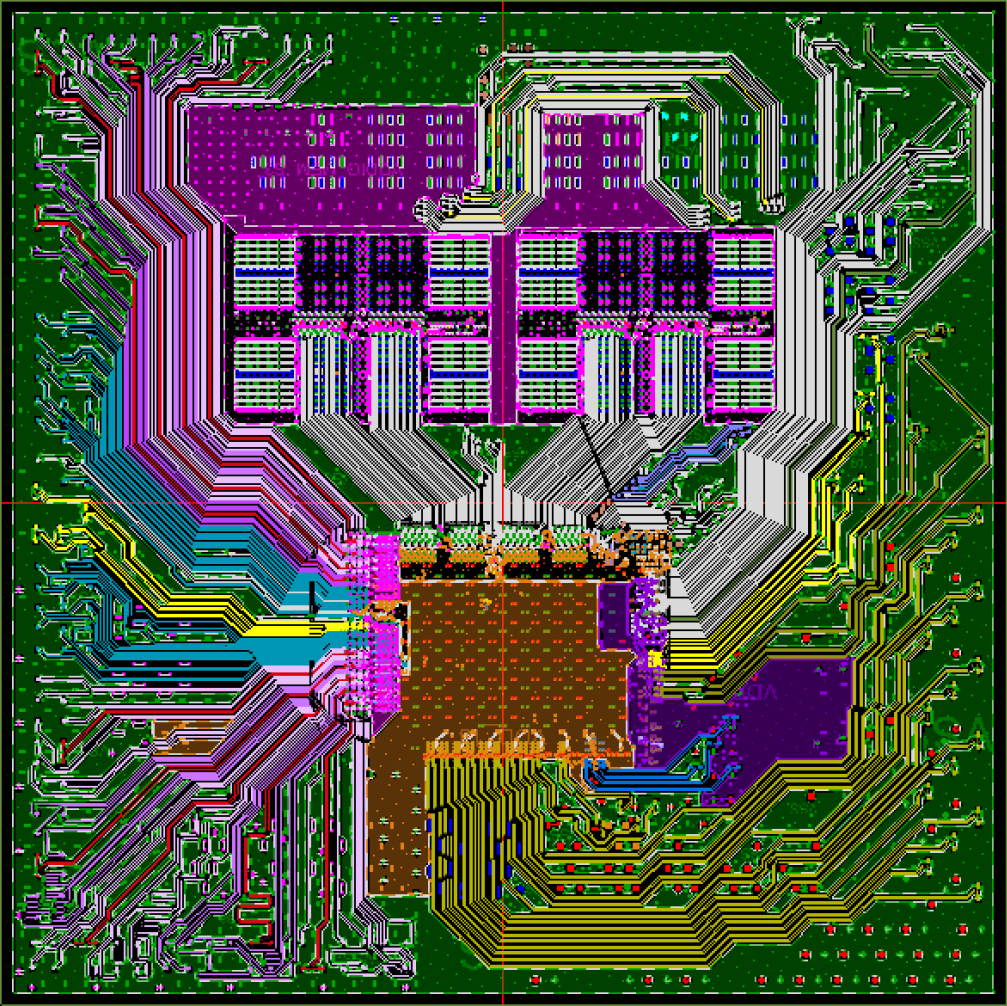

- A 15 layer Telescoping Metal stack has been co-optimized to deliver both high frequency and high density routing capability

They did add a lot more transistors to support AVX-512 and to enlarge the ROB, L2, uop cache, and BTB.

I bet the second GMI link burnt a lot of die area, although it's probably a necessary forward-looking block.

Zen 5 will be a reset and will optimize the core again, à la Zen 3.

I'll update this if more specs show up. This time I doubt AMD will be more open.
