thilanliyan


Is that still ATi?

To me it is...but officially it's AMD Radeons now.

Thanks for the information. Like everyone else, I'll be digesting this.

One question: when I got into the OC hobby two years ago, AMD users were commenting that their CPUs began making mistakes when their temps got up into the high 50s. From this I concluded that AMD and Intel were using fundamentally different technology.

Does anyone else remember AMD chips making errors when they hit 57-60°C? Or was I somehow reading the posts incorrectly? I'll admit that a lot more went over my head then than now.

It all comes down to (1) the physics that underlie reliability and product lifetime, and (2) the physics that underlie the power-consumption of the CPU.

1. Physics that underlie reliability and product lifetime:

Thermally activated processes (remember the Arrhenius equation from your p-chem classes) approximately double in rate for every 10°C increase in operating temp.

A CPU operated at 70°C will have roughly twice the operating lifetime of a CPU operated at 80°C.
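As a rough sanity check on that doubling rule, here is a minimal sketch of the Arrhenius rate ratio between two temperatures. The 0.7 eV activation energy is purely my assumption for illustration (it is not a number from Intel or AMD); it just happens to give a ratio close to 2x per 10°C in this temperature range.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_acceleration(temp_low_c, temp_high_c, activation_energy_ev=0.7):
    """Ratio of Arrhenius rates at temp_high_c versus temp_low_c.

    activation_energy_ev is an assumed, illustrative value.
    """
    t_low_k = temp_low_c + 273.15
    t_high_k = temp_high_c + 273.15
    exponent = (activation_energy_ev / BOLTZMANN_EV_PER_K) * (
        1.0 / t_low_k - 1.0 / t_high_k
    )
    return math.exp(exponent)


# How much faster a thermally activated process runs at 80°C than at 70°C
print(arrhenius_acceleration(70.0, 80.0))
```

A different degradation mechanism would have a different activation energy, so the "2x per 10°C" figure is a rule of thumb, not a constant.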

During the technology node development phase, getting the intrinsic reliability (operating lifetime) of the process technology up to target is a major challenge. Out here in the public domain you mostly hear about yields, but reliability is an equally challenging issue during those 4yrs in which the node is originally developed.

Well, what can you do if you are running out of R&D time, your node needs to be put into production asap, but the one thing holding it back is that in its current form (hypothetically speaking) your Lifetime Reliability department is telling you that the chips will die in about 12 months if they are operated at 100°C?

The easiest thing to do is to just limit the max upper-temp to a value that enables you to hit your reliability target. 12 months at 100°C is too little? No problem, at 90°C that 12 months becomes 2 years, at 80°C it becomes 4 yrs, at 70°C it becomes 8 yrs.
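The arithmetic in that example is just the "double for every 10°C cooler" rule of thumb applied repeatedly. A minimal sketch (the function name is mine, and the rule itself is an approximation, not a datasheet formula):

```python
def lifetime_rule_of_thumb(ref_lifetime, ref_temp_c, temp_c):
    """Scale a reference lifetime using the 2x-per-10°C rule of thumb.

    ref_lifetime can be in any unit (months, years); the result uses the same unit.
    """
    return ref_lifetime * 2.0 ** ((ref_temp_c - temp_c) / 10.0)


# Hypothetical chip that lasts 12 months at 100°C
for temp_c in (100, 90, 80, 70):
    months = lifetime_rule_of_thumb(12.0, 100.0, temp_c)
    print(f"{temp_c}°C: {months:.0f} months")
```

The same function reproduces the 10yr/20yr/40yr/80yr ladder if you feed it 10 years at 98°C as the reference point.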

Now if your customers can be convinced to restrict their operating environment such that the CPU doesn't exceed 70°C, and an 8yr expected lifetime conforms with your warranty model and internal targets (10yrs is actually the norm for the industry), then you could just go to production and not worry about the extra 6-8 months it would have taken you to get the intrinsic reliability of your process technology up to the point that you could expect an 8-10yr operating life at 100°C.

This is where/how Intel decides what TJMax is going to be. 98°C is based on how operating temps affect operating life; if 97°C was needed then that is what they would have spec'ed it at, or 99°C, etc.

By the way, it is this "reliability margin" that we OC'ers are using up and using to our advantage when overclocking.

A chip that is built with a process tech that can intrinsically support an estimated lifetime of 10yrs at 98°C means you get 20yrs at 88°C, or 40yrs at 78°C, or 80yrs at 68°C.

Well, none of us OC'ers really care to have our 2600Ks last 40yrs or 80yrs, so we in turn raise our operating voltages (increasing voltage, any voltage, decreases the lifespan of the CPU) to such an extent that we basically spend our reliability budget on increasing the Vcc.

"Hot Carrier Damage" kills the transistors by degrading them over time. This happens at any voltage, but more voltage (and more current) makes it happen even faster.

Check out this short article for a very cursory review of some of the issues at play in device reliability (TDDB, Hot Carrier Damage, Electromigration).

So that chip which could be expected to last 40yrs at 78°C with 1.3V might only last 10yrs at 78°C at 1.4V, and only 2.5yrs at 1.5V and 78°C.
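Those three numbers work out to the lifetime dropping by 4x for every extra 0.1V. Here is a small sketch that just reproduces that pattern; the 4x-per-100mV factor is fitted to the illustrative numbers above and is an assumption, not a measured voltage-acceleration model for any real chip.

```python
def lifetime_vs_vcc(vcc, ref_lifetime_years=40.0, ref_vcc=1.3, factor_per_100mv=4.0):
    """Expected lifetime at a given Vcc, holding temperature fixed (78°C above).

    All defaults are the illustrative figures from the example, not real data.
    """
    steps = (vcc - ref_vcc) / 0.1  # number of 100mV steps above the reference
    return ref_lifetime_years / factor_per_100mv ** steps


for vcc in (1.3, 1.4, 1.5):
    print(f"{vcc:.1f}V: {lifetime_vs_vcc(vcc):.1f} yrs")
```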

Increasing voltage AND keeping your operating temps high(er) just uses up your reliability budget all the faster.

This is why OC'ers pursue lower operating temps (although they may not know why they are doing so)...it's not just about the power bill or the noise...and it is part of the reason why extreme OC'ers go to vapor-phase, LN2, and LHe temperatures before putting 1.8V and so on through their CPUs.

But at the end of the day the reason for the max temperature difference between AMD and Intel CPUs comes down to the intrinsic reliability of those CPUs with respect to thermally activated degradation mechanisms.

Intel spent more money/time/effort to improve the intrinsic reliability of their process integration at those nodes respectively.

2. Physics that underlie the power-consumption of the CPU:

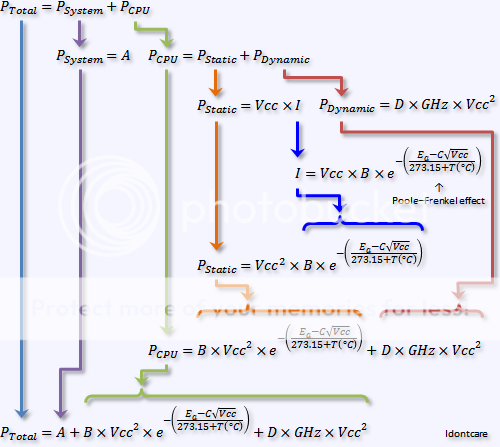

The second reason why the temperature maximum need be specified is adherence to the TDP spec. Power consumption from leakage in the silicon device is fundamentally dependent on the operating temperature of the CPU.

All else held constant (Vcc, clockspeed, etc), a hotter CPU will consume more power than a cooler one (see this thread).

So if AMD wants to spec their CPUs as being 125W TDP, for example, at a given clockspeed and operating voltage, and the max temp is 100°C, then they have to dial down the clockspeed and/or Vcc such that when the CPU is at 100°C it is not violating its own TDP spec because of the elevated leakage current.

My 2600K has a TDP of 95W. At 98°C the chip is burning through about 45W in leakage (static power consumption) losses alone, but if I drop the operating temps to 68°C then the leakage power drops to 30W. That gives me a 15W TDP "surplus" to raise my clockspeeds while not exceeding the spec'ed TDP.
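To make the TDP "surplus" arithmetic concrete, here is a minimal sketch. It only knows the two leakage points quoted above (45W at 98°C, 30W at 68°C); the exponential interpolation between them is my assumption about the curve shape, so treat temperatures other than those two as a guess.

```python
def leakage_watts(temp_c, hot=(98.0, 45.0), cool=(68.0, 30.0)):
    """Leakage power at temp_c, interpolated exponentially between two points.

    hot and cool are (temperature in °C, leakage in W) pairs from the example;
    the exponential shape in between is an assumption.
    """
    hot_t, hot_w = hot
    cool_t, cool_w = cool
    fraction = (hot_t - temp_c) / (hot_t - cool_t)
    return hot_w * (cool_w / hot_w) ** fraction


def dynamic_headroom_watts(temp_c, tdp_watts=95.0):
    """Power left under the TDP for switching (dynamic) power at temp_c."""
    return tdp_watts - leakage_watts(temp_c)


surplus = dynamic_headroom_watts(68.0) - dynamic_headroom_watts(98.0)
print(f"Extra headroom from running 30°C cooler: {surplus:.1f}W")
```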

So AMD, by lowering their max allowed operating temperature spec, makes it easier on themselves to hit their reliability spec's as well as making it easier to bin their chips for higher clockspeeds and/or Vcc's while fitting them into the desired TDP bins.

The thing is, my Deneb (965 BE) wasn't working reliably when it was routinely running in the 60-70°C range. And it was the temp, not anything else. Once I installed an aftermarket cooler, all problems were gone. Sure as hell, Tjmax with Thuban is higher. I'm running it passively in the range of 70-80°C with 0 issues, although it does consume quite a bit more power. 100% match with IDC's findings.

I routinely run my 1055T in the high 70s and crunch SETI 24/7 on that rig. I have had 0 error or invalid WUs since Nov 25, and that one errored out on the GPU.

Intel is usually pretty tight-lipped about the true offset temp as well. I remember all of our speculation back in the Q6600 days about what the real TJMax was for Conroe and Kentsfield. And they waited a year or 18 months to even let us know what it was. Regardless, the best way to use temperature monitoring software is to have it display distance to TJMax instead of absolute temperature. That way, TJMax could be 100, 1000, or eleventy billion and it wouldn't matter at all.
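A quick sketch of why distance-to-TJMax is the safer number to watch, assuming the sensor natively reports degrees below TJMax and the monitoring software has to guess TJMax to show an absolute temp. The function names and the 20°C margin are made up for illustration.

```python
def absolute_temp_c(distance_to_tjmax_c, assumed_tjmax_c):
    """Absolute temperature as the software would display it.

    Only as accurate as the assumed TJMax value.
    """
    return assumed_tjmax_c - distance_to_tjmax_c


def margin_ok(distance_to_tjmax_c, min_margin_c=20.0):
    """True if the core is at least min_margin_c below TJMax (hypothetical margin)."""
    return distance_to_tjmax_c >= min_margin_c


distance = 30.0  # example sensor reading: 30°C below TJMax
for guess in (85.0, 100.0):
    print(f"assumed TJMax {guess:.0f}°C -> displayed {absolute_temp_c(distance, guess):.0f}°C")
print("margin ok:", margin_ok(distance))
```

The displayed absolute temp moves with whatever TJMax the software assumes, while the margin check gives the same answer either way.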

Are you sure about that? My Phenom II reported per-core temperature in HWMonitor and other programs. Perhaps they use a single sensor and some sort of signal processing or other technique to estimate the temps in each core, though.

My Asus board has a reading called CPU temp, which is usually around 10 - 15C hotter than the per core readings, and is what the Q Fan controller is based on.

For example: running P95 with an ambient of around 22C, CPU temp shows 59C, while per core shows 48C or so.