Elfear

25x16 was always an extremely niche resolution and by no means representative of enthusiast setups. 19x12 was the enthusiast resolution before the move to 16:9. Not only was 25x16 extremely difficult to run, but the screens were also incredibly expensive. Today 4K is very difficult to run, but at least the screens justify their price, so we are already seeing bigger adoption of 4K than 25x16 ever had.

Likewise, today 1440p is certainly the most logical resolution for comparing high-end cards, not 4K. Once we are comparing SLI/CF setups, then it makes sense to test such resolutions.

Fair enough. 1080p was probably more representative of even the higher-end gamers 6 years ago, much like 1440p is today. The TPU results I posted shouldn't shift meaningfully at 1080p. My main point was that using the "All Resolutions" graph didn't make sense because 1024x768 and 1280x1024 were mixed into those averages.

As far as dual GPU in a single card is concerned, am I wrong in remembering that there were bigger compatibility hassles than with actual SLI/CF because you couldn't merely turn off 1 GPU? Can you also point out which dual-GPU card had a price/performance advantage over buying 2 cards? Keep in mind the resale value of 2 separate GPUs will be MUCH higher than that of a dual-GPU card.

For people who had the room, dual cards were almost always the better option. But that doesn't get around the fact that there was a lot of talk back in the day about the fastest card, or the fact that I was replying to Happy Medium's post where he specifically said fastest "card" and not GPU.

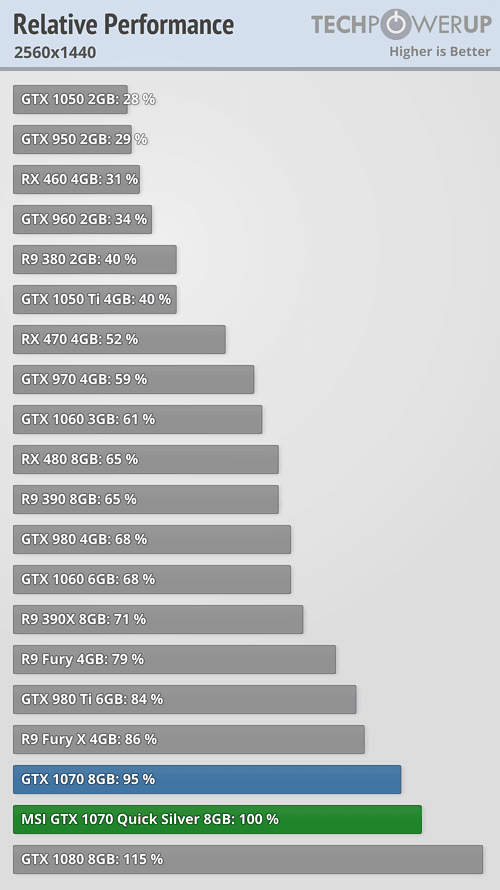

The Fury X is not in the same performance class as a 1070. AIB 1070s are about 20% faster than a Fury X, with still more room to overclock and 100% more VRAM. The Fury X is basically its own category.

Meh. The reference 1070 is only 10% faster and the average AIB is 15-16% faster. That's basically the same as the GTX 280 vs 4870, 5870 vs 480, 6970 vs 580, etc. For high-end cards, 10-15% is within the same competitive tier. There may be some very highly overclocked AIB 1070s that are closer to 20% faster, but those don't represent the average 1070.
