Question What card to pair with a i7-4770S?

- Thread starter ibex333

- Start date

VirtualLarry

No Lifer

- Aug 25, 2001

- 56,587

- 10,225

- 126

Suggest AMD, lower CPU overhead.

Nvidia's CPU overhead issue is going to hit you like a brick with that CPU:

You will notice on video 2 in the above testing an old rx580 outperformed a rtx3080 in fortnite at the 796 second mark with a i7-4790k, which is similar to the CPU you have.

Suggest something newer, like an rx6600. It has enough lanes not to bottleneck on PCIe 3.0.

Looks like you can get a brand new Sapphire branded rx6600 for around $280.

A used rx6600 is around $240, which is not worth it. Buy it new with the warranty and no time in the mines.

If you want to go cheap there are used rx570s on ebay for less than $100. With a fast CPU a gtx970 is roughly equivalent to an rx570. rx580s can be had for about $150. The rx590 might be a better choice though, with slightly less time in the mines. I see one on ebay for $135.

Avoid Vega56, Vega64, and Radeon 7. The mines did not treat those cards well.

The problem with anything older than the rx6000 series or the rtx3000 series is that it will have spent serious time in the mines. That is OK with the rx5000 and rx500 series cards, but expect a fan replacement in the future. The biggest problem is you might get one with a mining BIOS, so be sure you're comfortable with flashing the BIOS if you're buying used. If you buy used, check the BIOS right when you get the card to verify it is stock, and flash it to stock if it is not. If it breaks, file for a return.

- Sep 28, 2005

- 21,108

- 3,631

- 126

it will be great for an emulation PC

Are we looking at new cards or used cards?

If it's used, I would go with Leeea's advice.

If it's a new card you want, then I'd probably get a 1660 Super or a lower AIB RX 6600 (non XT).

I forgot to mention, avoid the following cards:

rx6500 - bad

gtx1650 - worse

rx6400 - even worse

gtx1630 - nvidia needed to make AMD's rx6400 look good

The above cards are cheap, but they are also very poor value for the money right now.

Also avoid:

a380 - your CPU does not have rebar support

rtx3090 - design defect in the double sided memory

vega56, vega64, Radeon 7 - Eth miners prioritized these cards, overvolting and overclocking the HBM memory. The ones that survived have little life left.

DeathReborn

Platinum Member

- Oct 11, 2005

- 2,786

- 789

- 136

I would just like to quickly add that moving a R9 290 from a 4770K to a 3600X increased FPS by 10-20% so anything faster than a RX580/GTX 1060 will be leaving some performance on the table. I'd definitely look at 6/8GB VRAM though, 580, 1660, 3050, 6600 for example.

This is correct, but I feel he would still benefit from a newer card.

If we look at the 2nd video I linked, at the 13 min 10 sec mark, we can see that with the 4790k, going from an rx580 to an rx6700 was a 20% performance gain.

So while he will be leaving performance on the table, he would still benefit from the newer card. Warranty, no time in the mines, better driver support, 20% more performance, etc. If he can swing it, I would argue that is the right path for him.

If he turns up the graphics detail settings in game, the newer card will also handle that much better than the old cards.

The flip side is, he will be paying a 100% price premium for only 20% more performance, so there is certainly an argument for going used.
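To put rough numbers on that trade-off, here is a minimal Python sketch using the ballpark prices quoted earlier in the thread (about $150 for a used rx580, about $280 for a new rx6600). The 20% figure comes from the rx580-to-rx6700 comparison on a 4790k, so applying it to the rx6600 is an assumption, and the function names are made up for illustration.

```python
# Rough value comparison using ballpark figures quoted in this thread.
# The prices and the ~20% gain are forum estimates, not measurements.


def cost_per_performance(price_usd: float, relative_perf: float) -> float:
    """Dollars paid per unit of relative performance (lower is better)."""
    if relative_perf <= 0:
        raise ValueError("relative_perf must be positive")
    return price_usd / relative_perf


def premium_percent(new_value: float, old_value: float) -> float:
    """How much larger new_value is than old_value, in percent."""
    if old_value <= 0:
        raise ValueError("old_value must be positive")
    return (new_value / old_value - 1.0) * 100.0


# Baseline: used rx580 on a Haswell i7. Performance is normalized to 1.0.
used_rx580 = {"price": 150.0, "perf": 1.00}
# Assumption: the new card is ~20% faster on this CPU, as discussed above.
new_rx6600 = {"price": 280.0, "perf": 1.20}

price_premium = premium_percent(new_rx6600["price"], used_rx580["price"])
perf_gain = premium_percent(new_rx6600["perf"], used_rx580["perf"])

print(f"Price premium: {price_premium:.0f}%")
print(f"Performance gain: {perf_gain:.0f}%")
for name, card in (("used rx580", used_rx580), ("new rx6600", new_rx6600)):
    value = cost_per_performance(card["price"], card["perf"])
    print(f"{name}: ${value:.0f} per unit of relative performance")
```

With these inputs the price premium works out to a bit under 100%, and the cost per unit of performance is clearly higher for the new card. The sketch leaves out warranty, driver support, and remaining lifespan, which are the other half of the argument.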

|JosephB17331|

Member

- Jul 30, 2015

- 67

- 49

- 91

Bottleneck calculator shows-

"While running graphic card intense tasks, processor Intel Core i7-4770S will be utilized 100.0% and graphic card AMD Radeon RX 6600 XT will be utilized 71.7% "

"While running graphic card intense tasks, processor Intel Core i7-4770S will be utilized 100.0% and graphic card AMD Radeon RX 580 will be utilized 93.2% "

RX 580 probably the more appropriate fit..

Regular RX 6600 (non-xt) would be a decent fit, and wouldn't be all that much overkill

Stuka87

Diamond Member

- Dec 10, 2010

- 6,240

- 2,559

- 136

RX 580 is probably the best suited card.

But, it would be good to know what power supply you have.

SteveGrabowski

Diamond Member

- Oct 20, 2014

- 9,154

- 7,834

- 136

I ran virtually the same cpu, Xeon E3-1231v3, with a GTX 970 and a GTX 1660 Super and the 970 was a perfect match at 1080p. Had a bit of trouble keeping the 1660S at full usage though and lots of terrible frame drops in Elden Ring and Control for example.

For AAA gaming I'd probably go no higher than used RX 570 or maybe stretch to RX 580, though many last gen games like RDR2 I'd have full gpu usage even on the 1660 Super.

For emulation I'd do a lot of research. I seem to remember Cemu not liking AMD in the past, and Cemu is probably the most intensive emulator you'll get great results with on a 4770S. Though I could play 2D games like Odin Sphere and Dragon's Crown well on RPCS3 using my E3-1231v3.

But that was like 2017 or 2018 when I played Cemu a lot and things may be entirely different with AMD gpus now.

If you go Nvidia I'd be thinking 970, 980, 1060 3GB, or 1060 6GB.

He's going to be devastated when he finds out the 3090 is off the table.

You laugh, but the Asian mining surplus cards have yet to hit the market.

The rtx3090 ironically did not get LHR, so mining companies did buy them in quantity. They also hit those defective memory systems hard.

The rtx3090s have a high failure rate just from gamers. The miners likely pushed them right to the edge, and no further. The next user is going to be in for an unpleasant surprise when their card dies within a month or two if they are not very careful with it. Even if the user is careful, they are going to have to install a custom heatsink on the back side and roll the dice on how much life that memory has left.

I have a theory that the bad reputation of the rtx3090 non-Ti, combined with the glut of Asian mining cards hitting the market about 1 or 2 months from now, will result in rtx3090s being sold for under $300. Keep in mind there is no warranty on used mining cards.

Would you buy a used rtx3090 for $300 knowing many cards last less than a month or two in normal use?

- Sep 13, 2008

- 8,313

- 3,176

- 146

Yeah, I would probably go with an RX 480/580 8GB. A 1060 6 GB or 1070 might also be a decent idea. I might be wary of 6600 cards, due to being more prone to bottleneck of the 4770S in general, but also because they will be limited to PCIe gen 3 x8 on your system, which may also contribute to the bottleneck.
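For a sense of scale on the x8 concern, here is a small Python sketch of theoretical link bandwidth. The per-lane rates (8 GT/s for PCIe 3.0, 16 GT/s for 4.0) and the 128b/130b encoding are the published spec values. The function name is just for illustration, and these are peak one-direction numbers, so the sketch says nothing about whether a given game actually saturates the link.

```python
# Theoretical peak PCIe bandwidth in one direction, accounting only for
# line encoding. Real-world throughput is lower.

# Per-lane raw rates in gigatransfers per second (PCIe 3.0 and 4.0).
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0}

# Both generations use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Return theoretical one-way bandwidth in GB/s for a PCIe link."""
    if gen not in TRANSFER_RATE_GT_S:
        raise ValueError(f"unsupported PCIe generation: {gen}")
    if lanes <= 0:
        raise ValueError("lanes must be positive")
    gigabits_per_s = TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY * lanes
    return gigabits_per_s / 8  # bits to bytes


for label, gen, lanes in [
    ("x8 card on a PCIe 3.0 board", 3, 8),
    ("x8 card on a PCIe 4.0 board", 4, 8),
    ("x16 card on a PCIe 3.0 board", 3, 16),
]:
    print(f"{label}: {link_bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

The gen 3 x8 case has half the theoretical bandwidth of either of the other two, which is the gap being described for an x8 card on a Haswell board.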

Finally, I would like to add that the 3090 does not necessarily have a design defect. It is not optimal, as some chips will get hotter than others, but I wouldn't call it defective. Anyway, I wouldn't pair anything of that high end nature with a 4770S for gaming.

I would probably go with an RX 480/580 8GB.

Don't forget about the rx590! An update on its better known cousins the rx480 and 580, and far less well known. More likely to go cheap on ebay as a result.

rx590 released in December 2018

rx580 released in April 2017

rx480 released in June 2016

An rx590 will have about 2.5 fewer years in the mines than an rx480. It also has about 10% more performance than an rx580, and 20% more performance than the rx480.
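The "2.5 years" figure follows directly from the release months listed above. A quick Python sketch of the arithmetic, assuming the first of each month since only month and year are given (the helper name is made up):

```python
from datetime import date

# Release months as listed in this post; the day of month is a placeholder.
RELEASES = {
    "rx480": date(2016, 6, 1),
    "rx580": date(2017, 4, 1),
    "rx590": date(2018, 12, 1),
}


def years_between(older: date, newer: date) -> float:
    """Whole-month difference between two dates, expressed in years."""
    months = (newer.year - older.year) * 12 + (newer.month - older.month)
    return months / 12


for card in ("rx480", "rx580"):
    gap = years_between(RELEASES[card], RELEASES["rx590"])
    print(f"rx590 launched {gap:.1f} years after the {card}")
```

This only bounds the maximum possible difference in mining time. How long any individual card was actually mined on is unknown.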

AMD has already stopped issuing new game optimizations on the rx300 series, and the rx400 series will be next. But the rx500 series sold very well, and I feel it will be a long time before those get the axe.

I would disagree. People with cards a few days old were getting fried left and right. It was not just EVGA, and it was not just the inadequate backside cooling (or complete lack thereof). Something in the power supply for the memory was not right.

- Sep 13, 2008

- 8,313

- 3,176

- 146

I don't think there is that much difference between a 480/580/590, probably a lot less, of course it will vary by clocks and PCB/cooler design a bit. Also, RX 400 and 500 series are pretty much the same thing hardware wise, so they will likely go DOA at the same point IMO. Now AMD could do differently, but it wouldn't make sense, they are all Polaris.

As for the 3090 frying issue, this was not a double sided memory issue AFAIK. I thought it was more of a QA issue, but I suppose it could be argued it was a design flaw, with using the POScaps and drivers allowing boosting too high. Still, it wasn't a design defect due to double sided memory that caused this.

I will say the 590 could also be a decent option, the only caution here is I feel those might not actually last as long as the 580 cards, due to being the same hardware but pushed further. Or was there an actual hardware refresh/die shrink with these, or did they just have higher clocks and beefier coolers? I cannot remember exactly. Anyway, use good judgement based on what you find available and what the price is.

Lastly, if you want to go a bit higher, the Vega 56/64 could do well (instead of a 1070 or 1080), as long as you are getting one with a good cooler. Some brands' cards had really bad cooling designs, mostly the reference cooler and some others such as Gigabyte's, but the Sapphire Nitro and Powercolor Red Dragon designs were excellent.

I suppose mining with the HBM2 could be an issue with some, but I seem to recall the HBM could not actually be overvolted, though the core was usually undervolted. I would focus on checking the fans of used cards instead, and of course check for general stability, and that there isn't a mining BIOS flashed. Of course, do the necessary research first.

Thunder 57

Diamond Member

- Aug 19, 2007

- 4,198

- 6,987

- 136

Very unlikely, as Shmee said they are both Polaris. Both are GCN4. Considering that previous GPUs were 28nm vs 14nm, Polaris could perform similarly to the 390(X) while using 100+ fewer watts. There is no need to cut them off just yet. They will almost certainly be removed at the same time. I wouldn't recommend a 590 as it really pushed power to get relatively little gains.

rx590 vs rx580 - 10%:

Radeon RX 590 vs Radeon RX 580

We compare the Radeon RX 590 against the Radeon RX 580 across a wide set of games and benchmarks to help you choose which you should get.

rx590 vs rx480 - 20%:

Radeon RX 590 vs Radeon RX 480

We compare the Radeon RX 590 against the Radeon RX 480 across a wide set of games and benchmarks to help you choose which you should get.

That is pretty significant to me.

No! That is wrong!

rx590 uses 10 fewer watts than the rx580:

What you both missed is the rx590 is a redesign of the rx580, moving it from 14nm GF to 12nm TSMC. The 590 is just a better chip, uses less power, runs cooler, and clocks higher. The rx590 was AMD's first TSMC GPU! AMD's first high end TSMC product!

This was the tipping point, after the rx590 AMD would slowly move everything to TSMC. The rx590 is not only important historically, it is also the best rx500 series product!

The HBM series, Vega 56, 64, and Radeon 7 were beloved by miners for their fast memory. Sadly:

ps: I own a Vega56. Great GPU, still have it today. Runs great*. I never BIOS flashed it though. A good Vega56 or 64 is a great experience.

*after I finished with using it as a primary GPU I put it in a secondary computer where it spent about 1.5 years in the mines. Now that Eth mining is dead, going to gift it to a friend of mine. Thing is, before I mined on it the memory would overclock from 800 to about 910. After mining on it, it only OCs to about 850 or so. Stock bios and voltage also.

Thunder 57

Diamond Member

- Aug 19, 2007

- 4,198

- 6,987

- 136

I didn't miss a thing. The RX 590 has a 40W higher TDP, at 225W vs 185W for the 580. It was also 12nm GloFo, not TSMC. Remember when Anandtech used to review GPUs?

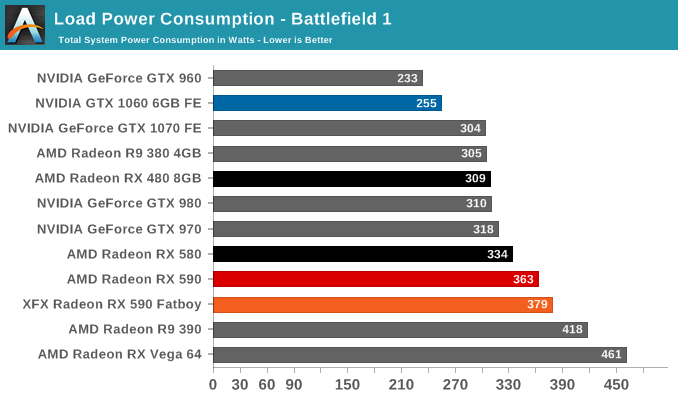

"From the wall, the RX 590 now pulls 30 to 45W more than the RX 580 in Battlefield 1. The difference in FurMark is even starker, with the RX 590 now drawing 45 to 80W more ". I'm not saying it's a bad card but Notebookcheck got it wrong.

Lithography: TSMC 12nm FinFET

Did some digging around. Also seeing claims of 12nm GF and 11nm Samsung for the rx590. Looking for something official now.

Dug around and found a picture of the chip, sadly unlabeled.

DeathReborn

Platinum Member

- Oct 11, 2005

- 2,786

- 789

- 136

With current pricing I'd suggest a RX 6600 8GB & undervolt it.

AMD Radeon RX 590 Specs

AMD Polaris 30, 1545 MHz, 2304 Cores, 144 TMUs, 32 ROPs, 8192 MB GDDR5, 2000 MHz, 256 bit

https://www.tomshardware.com/reviews/amd-radeon-rx-590,5907.html

https://www.techpowerup.com/gpu-specs/radeon-rx-590.c3322

Global Foundries 12nm

Your toms hardware link discusses it a bit, it finally concludes:

"As such, we're probably seeing GlobalFoundries' 12nm LP process"

They made a compelling argument off the chip size, but do not claim to actually know.

Your tech power up link is interesting, as it claims:

"This chip comes in both Samsung 11nm and GlobalFoundries 12nm."

in the notes (scroll down a bit). Which I found fascinating.

Thunder 57

Diamond Member

- Aug 19, 2007

- 4,198

- 6,987

- 136

I should clarify I wasn't 100% sure it was GloFo 12nm, just pretty confident. IIRC 12nm TSMC is more like 16nm+ and I wasn't sure if it was even available when the RX 590 came out. I searched and saw the same Tom's Hardware review so I felt pretty safe in saying it was 12nm GloFo. The Samsung bit of info is interesting though.

The 590 was in a tough spot though. Vega 56 was considerably faster and used the same power for the most part. The problem was getting your hands on Vega was usually a tough task at any reasonable price. If Vega had been more plentiful we may have never seen the RX 590. On a somewhat related note, it's amazing how much more power the Vega 64 used. In bench it uses 80 or so watts more than the 56! Talk about being pushed to the limit.

AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.