You are leaving off the biggest one... which was 3.1 or 6% by itself. Kinda goes back to my first sentence...

I already acknowledged that one as being legitimate. The others, however, are not. So this throws a big wrench into your numbers, because for your correlation with Deus Ex MD to be accurate, you'd have to show that ultra texture quality has other enhancements that hurt performance badly enough to reach double digits.

So far you have not done this, and instead have relied on assumptions to make sense of the fact that the Fury falls flat on its ass with ultra quality textures. I've read and seen multiple sources which suggest that ultra quality textures and 4GB GPUs don't mix well in Deus Ex MD. You're the only one saying otherwise, and given your history of hyping HBM as being able to defy the normal limitations of VRAM, you can surely understand why I'm suspicious. So the only thing that would satisfy me at this stage would be for you to upload a video so I can see the stutters and hitching (or lack of them) for myself, similar to what that other guy on YouTube did.

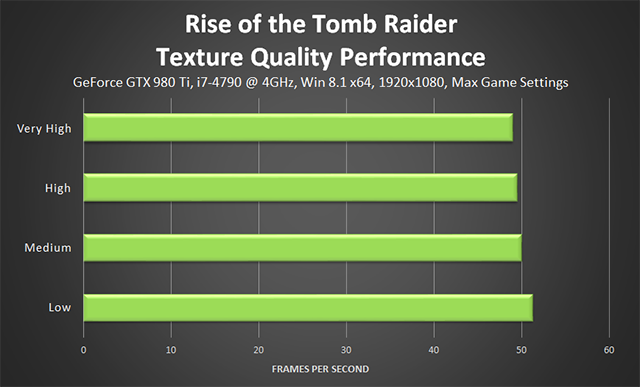

TIL guides by Nvidia aren't proper benchmarks, and that it didn't clearly show each option slightly worse than the previous...

It's not a question of whether they are proper benchmarks. Benchmarks by their nature always have some slight deviation. Run any benchmark program that tests performance, whether on the CPU, RAM or GPU, and the scores will most likely be different each time. That's just the nature of benchmarking. The fact that you don't know this tells me you must be new to benchmarking or something.
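To put the run-to-run deviation point in concrete terms, here's a rough sketch of how you'd measure the noise across repeated runs before reading anything into a small gap between two settings. The FPS numbers are made up purely for illustration (they are not from the Nvidia guide or from anyone's results in this thread), and `summarize` is just a helper name I picked.

```python
from statistics import mean, stdev

def summarize(runs):
    """Return (average, sample std dev, spread as % of average) for repeated runs."""
    avg = mean(runs)
    spread = stdev(runs)
    return avg, spread, 100.0 * spread / avg

# Hypothetical average-FPS results from three runs at each texture setting.
high_runs = [60.0, 61.0, 59.0]
ultra_runs = [58.0, 59.0, 57.0]

high_avg, high_spread, high_pct = summarize(high_runs)
ultra_avg, ultra_spread, ultra_pct = summarize(ultra_runs)

# Gap between the two settings, to be compared against the run-to-run spread.
gap = high_avg - ultra_avg

print(f"high:  {high_avg:.1f} fps, +/- {high_spread:.1f} ({high_pct:.1f}%)")
print(f"ultra: {ultra_avg:.1f} fps, +/- {ultra_spread:.1f} ({ultra_pct:.1f}%)")
print(f"gap:   {gap:.1f} fps")
```

If the gap between two settings isn't clearly bigger than the spread within each setting, a single run of each can't tell you which one is actually slower.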

Now you doubt both Nvidia and my benchmarks, so sad you can't perform this test yourself. Do you even own the game and hardware? I'm starting to doubt you do since you won't just test it and instead have now called me and Nvidia's benchmark guide liars.

I don't doubt NVidia's benches, but I doubt your interpretation of their data, and your own. Your 99th percentile for ultra quality was around 35ms, which is pretty damn high.

There is no way in hell there wasn't any stuttering or frame lag.
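For anyone wondering why 35ms is a big deal, here's the arithmetic as a quick sketch: a 35ms frame works out to roughly 28.6 fps for that frame. The frame time list below is invented for illustration (it is not your capture), and the nearest-rank percentile is just one common way to compute it; benchmarking tools may interpolate differently.

```python
import math

def frame_time_to_fps(frame_time_ms):
    """Convert a single frame time in milliseconds to the equivalent FPS."""
    return 1000.0 / frame_time_ms

def percentile_nearest_rank(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

# Hypothetical capture: 98 smooth frames at ~60 fps plus two slow ones.
frame_times_ms = [16.7] * 98 + [35.0, 40.0]

p99 = percentile_nearest_rank(frame_times_ms, 99)
print(f"99th percentile frame time: {p99:.1f} ms")
print(f"equivalent frame rate: {frame_time_to_fps(p99):.1f} fps")
```

So a 99th percentile of 35ms means the slowest 1% of frames are being delivered at under 29 fps, which is the kind of thing you feel as hitching even when the average looks fine.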

As for whether I own the game and hardware, yes I do. If you want proof, I can furnish it easily by showing you a pic of my rig (you will know it's mine because my specs are listed in my sig), or a screenshot of a specific location in the game of your choice to prove that I actually own the game.

The reasons I don't want to conduct any tests on my end are:

1) There's no doubt that a GTX 1080 can easily handle ultra quality textures. I've been using ultra quality textures since the game launched without any problems and no impact on performance that I've noticed...

2) I'm too lazy to conduct any in-depth benchmarks, especially if the outcome is already known.

3) A comparison with an NVidia GPU isn't the best option, because NVidia's drivers have a different VRAM management algorithm. An AMD GPU with 8GB would be the best comparison, or perhaps someone else with a Fury.

In fact, I asked a guy on another forum with a Fury whether he could use ultra quality textures in Deus Ex Mankind Divided. He has yet to reply to me.

But when he does, I'll let you know.