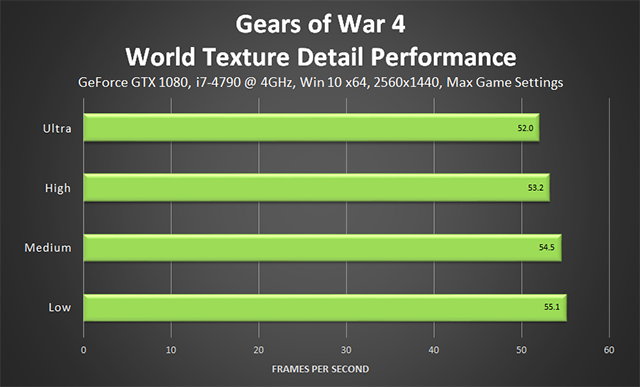

There's still one more comparison that needs to happen. It's clear that moving to Ultra textures on the Fury makes frametimes worse overall. What we still need to see is whether the same thing happens on a card with a bigger VRAM buffer, e.g. an 8GB card such as the 390X, since it is the closest in speed and uses the same architecture. If both an 8GB card and the 4GB Fury show the same frametime hit from going to Ultra, then we would know that Ultra is simply a taxing setting regardless of memory. If the hit only shows up on the Fury, then it is likely caused by its VRAM limit.

We need the control data: does anyone with a 390 or 390X want to contribute? I only have a 4GB 290 myself...

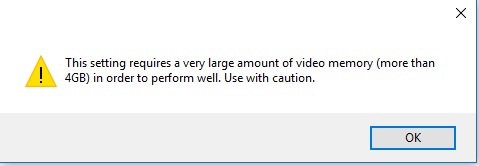

You might need that comparison, but I don't. He used the same settings, with the only difference being that one run used ultra quality textures and the other used low quality textures, which is an adequate comparison. There is nothing at all unusual about these benchmarks. Deus Ex MD WARNS you that ultra textures are meant for GPUs with MORE than 4GB of VRAM, so I don't know why Bacon1 is still so obstinate about this.
