- Mar 6, 2006

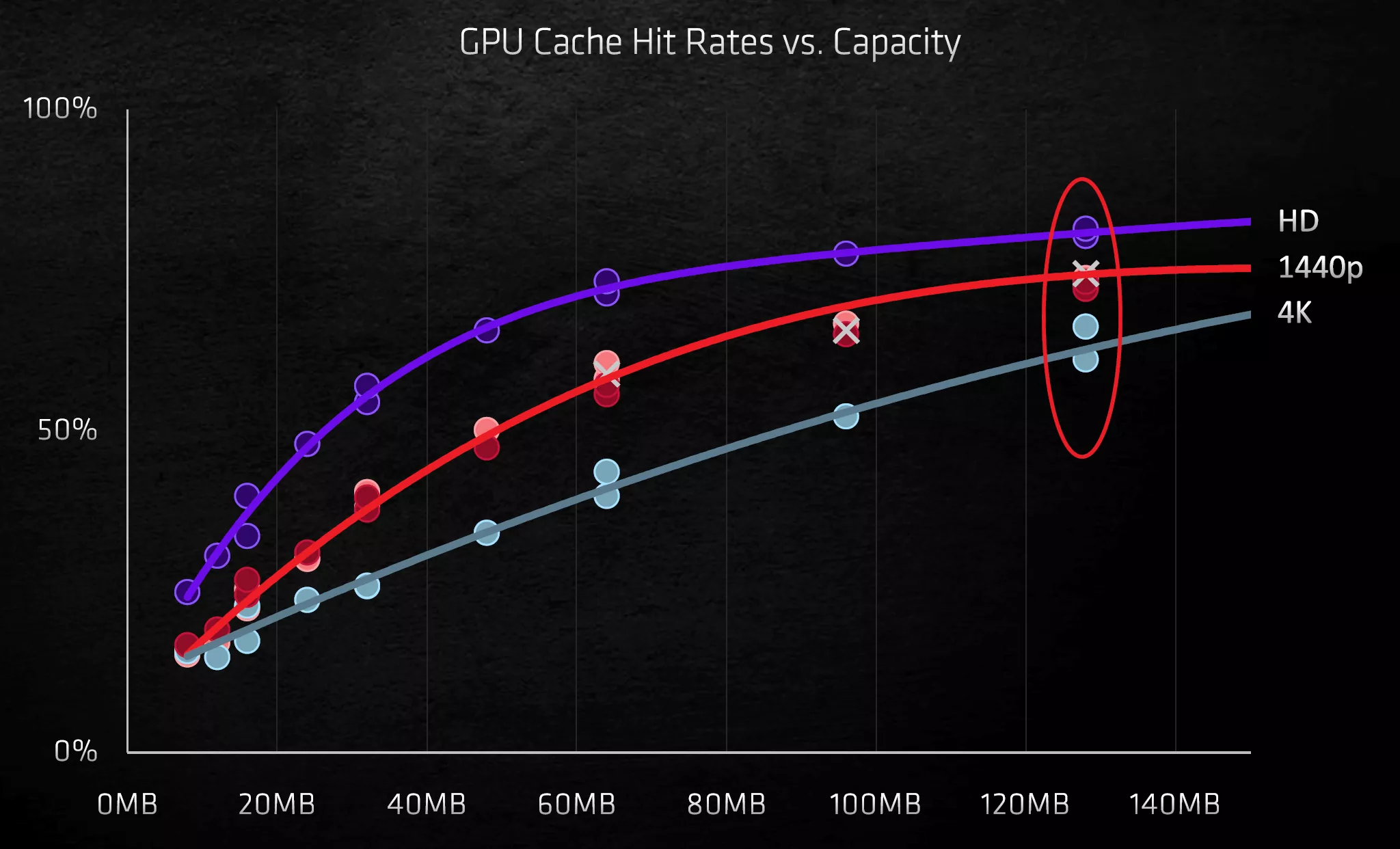

After reading many reviews from multiple sources, I get the impression that these RX 6000 cards fall off significantly at 4K compared with the Nvidia RTX 3000 series, despite having much more VRAM. At lower resolutions like 2560x1440, the comparison between the two looks quite different. My theory is that this is a limit of the 128MB Infinity Cache: once the working set no longer fits in the cache, the card has to fall back on its slower main memory.
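
To put rough numbers on that idea, here is a minimal back-of-the-envelope sketch, not a model of how the hardware actually allocates its cache. It assumes a hypothetical count of six full-screen 32-bit render targets, and it blends cache and DRAM bandwidth by a hit rate: effective bandwidth = h × cache bandwidth + (1 − h) × DRAM bandwidth. The hit rates and the cache bandwidth figure are placeholders I made up for illustration; the 512 GB/s DRAM figure assumes a 256-bit GDDR6 bus at 16 Gbps.

```python
# Back-of-the-envelope sketch. All counts, hit rates, and the cache bandwidth
# are illustrative assumptions, not measured or vendor-published values.

CACHE_MB = 128  # cache size discussed in the post (MB here means 2**20 bytes)
ASSUMED_TARGETS = 6  # hypothetical number of full-screen buffers (G-buffer, depth, etc.)


def target_mb(width: int, height: int, bytes_per_pixel: int = 4) -> float:
    """Size of one full-screen render target in MB (1 MB = 2**20 bytes)."""
    return width * height * bytes_per_pixel / 2**20


def effective_bandwidth(hit_rate: float, cache_gbs: float, dram_gbs: float) -> float:
    """Blend cache and DRAM bandwidth by the fraction of accesses served from cache."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be in [0, 1]")
    return hit_rate * cache_gbs + (1.0 - hit_rate) * dram_gbs


RESOLUTIONS = {"2560x1440": (2560, 1440), "3840x2160": (3840, 2160)}

for name, (w, h) in RESOLUTIONS.items():
    footprint = ASSUMED_TARGETS * target_mb(w, h)
    print(f"{name}: ~{footprint:.0f} MB of render targets "
          f"({footprint / CACHE_MB:.0%} of {CACHE_MB} MB cache)")

# Hypothetical hit rates: assumed higher at 1440p than at 4K.
ASSUMED_HIT_RATES = {"2560x1440": 0.8, "3840x2160": 0.6}
ASSUMED_CACHE_GBS = 1600.0  # placeholder cache bandwidth
ASSUMED_DRAM_GBS = 512.0    # assumed 256-bit GDDR6 at 16 Gbps

for name, hit in ASSUMED_HIT_RATES.items():
    bw = effective_bandwidth(hit, ASSUMED_CACHE_GBS, ASSUMED_DRAM_GBS)
    print(f"{name}: assumed hit rate {hit:.0%} -> ~{bw:.0f} GB/s effective")
```

Under these assumptions, the buffers come to about 84 MB at 2560x1440, which fits in 128MB, but about 190 MB at 3840x2160, which does not. Every access that misses the cache is served at the lower DRAM rate, so effective bandwidth drops as the hit rate falls.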

I'm curious what other people think. There doesn't seem to be much discussion about this interesting aspect of the new AMD GPUs.

So far the big question for 4K gaming has been "Is 10GB of VRAM enough?", but I'm wondering: "Is 128MB of cache enough?"