If a CPU sucks +100w more power again and again and again, and the effect of that includes mild additional whole-system consumption via PSU AC/DC power conversion losses, VRM & motherboard loads, etc., on top, well, that's just tough. That's simply the price you pay for running a thirstier 32nm chip & platform and then overclocking it even more. Your electricity company bills you for what you draw at the wall. They / Asus / MSI, etc., don't give you an energy rebate for "pretending" your PSU or VRMs don't exist. Nor do people calculate non-PC appliance running costs by excluding the power supply out of sub-component brand fanboyism. And for corporations, the "T" in "TCO" means "Total".
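To put rough numbers on the "at the wall" point, here's a back-of-envelope sketch. Every default in it is a placeholder I picked for illustration (85% VRM efficiency, 87% PSU efficiency, 4 hours of load per day, $0.15 per kWh), not a measurement from any review, so plug in your own hardware and tariff:

```python
def wall_watts(cpu_watts, vrm_eff=0.85, psu_eff=0.87):
    """Estimate AC draw at the wall for a given CPU-side load.

    vrm_eff and psu_eff are assumed conversion efficiencies (0-1),
    not measured values. Losses compound, so the efficiencies multiply.
    """
    return cpu_watts / (vrm_eff * psu_eff)


def yearly_cost(extra_wall_watts, hours_per_day=4.0, price_per_kwh=0.15):
    """Yearly running cost of extra wall draw (hours and price are assumptions)."""
    kwh_per_year = extra_wall_watts * hours_per_day * 365 / 1000
    return kwh_per_year * price_per_kwh


if __name__ == "__main__":
    extra_cpu = 100.0  # the "+100w" CPU-side difference discussed above
    extra_wall = wall_watts(extra_cpu)
    print(f"{extra_cpu:.0f} W at the CPU is about {extra_wall:.0f} W at the wall")
    print(f"Billed on the CPU-only figure: ${yearly_cost(extra_cpu):.2f}/year")
    print(f"Billed on the wall figure:     ${yearly_cost(extra_wall):.2f}/year")
```

Under those assumed efficiencies the wall figure comes out roughly a third higher than the CPU-only figure, and the wall figure is the one on your bill.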

Let's cut out the fake "objectivity" pretense. The people pushing these lame "PCO" (Partial Cost of Ownership) "measurements" (but only for AMD CPUs) are mainly those who try to artificially skew AMD's high figures downwards whilst still quoting Intel's "84w TDP" ratings, even though the same hardware.fr "calculations" result in "46w" i5-3570s or "54w" 2500Ks, etc., figures that are conveniently never used in the same context. Usually they're the same people who compare "240v at the wall" Intel loads vs "12v Fritz Chess" AMD loads, who talk about undervolting AMDs alongside "you can knock 23% off with regular non-Prime loads" one minute, then promote "105w" LinX over-volted AVX "power virus" Intel loads the next, etc. The same people who quote hardware.fr charts

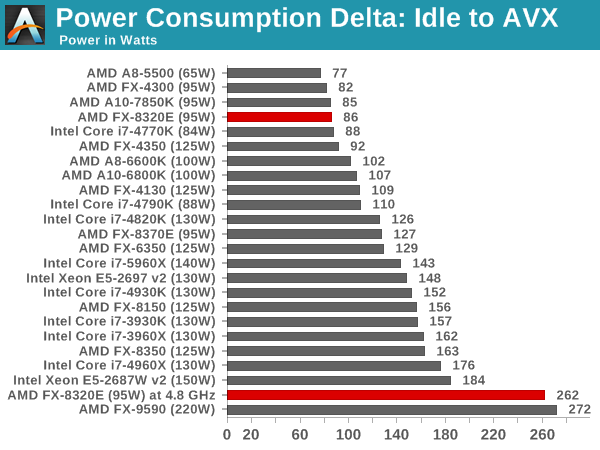

like this one to death, page after page, day after day, yet "accidentally forget" to quote other charts like this or this from the same page even once. Nor does the "12v" reading show the FXs consistently using more on a platform level (even at idle), another 20-30w that 'needs' to be "shaped" out of the equation (even though the motherboard platform is a direct result of what CPU you choose, hardly "unrelated")...

This so-called "proper" way of measuring power is so inconsistently applied, and the 'correct presentation' bias so obvious, it's laughable, especially given "at the wall" figures were deemed accurate back when the "space heater" roles were reversed and AMD's 100w lower power + better single-thread difference was declared "a very potent combo" along with happy discussion of AMD's $531 launch prices... Obviously no one cared about perf/watt and AMD never priced anything above $200-$340 i5-i7s when their market share was larger... :sneaky:

This is my hobby, and $300 is a pittance, as far as I'm concerned. Spread that out over the 4 or 5 years I'll likely have this system, and it's the equivalent of a night out to dinner, once per year.

Yea, except for *very* rare circumstances, I just don't get this quibbling over 50 or even a hundred or two dollars extra for a CPU for a system that will be used for several years, especially when a portion of the extra cost will be recouped in power savings.

^ These +1000.

ANY other component - BenQ vs LG monitor, Asus vs MSI motherboard, Kingston vs GSkill memory, Seasonic vs Corsair PSU, a $100 vs a $30 case, Epson vs Canon vs HP printer, Coolermaster vs Thermaltake cooler, Sony vs Panasonic TV, LG vs Toshiba Blu-Ray player, etc = zero emotional hysteria, even with $150 "same component" price differences (like a 1TB Samsung 850 Pro vs a 1TB Crucial BX100). But AMD vs Intel CPUs - and it's suddenly a shaking-with-fervor religious cult down to the last $10, as if your food money and the imminent starvation of your children depended on it...