You confused me. You said the exact same thing as me, but in different words. I just said that the complexity of the memory controller determines how well it can hit higher clocks because you can easily source 5-8Gbps GDDR5 chips. The more complex the memory controller, the harder it is to achieve higher clocks speeds. That's been the general rule for AMD/NV for several generations, but it's not always 100% true as I've shown with 384-bit 7970 hitting 365GB/sec!

You forgot that having a 512bit bus doesnt necessarily mean a more complex memory controller. You've shown an example that

some cards are able to hit high clocks. But just how many can run stable at those clocks? That is the impressive part because all nVIDIA parts run at ~7GHz reference speeds another ~1GHz headroom.

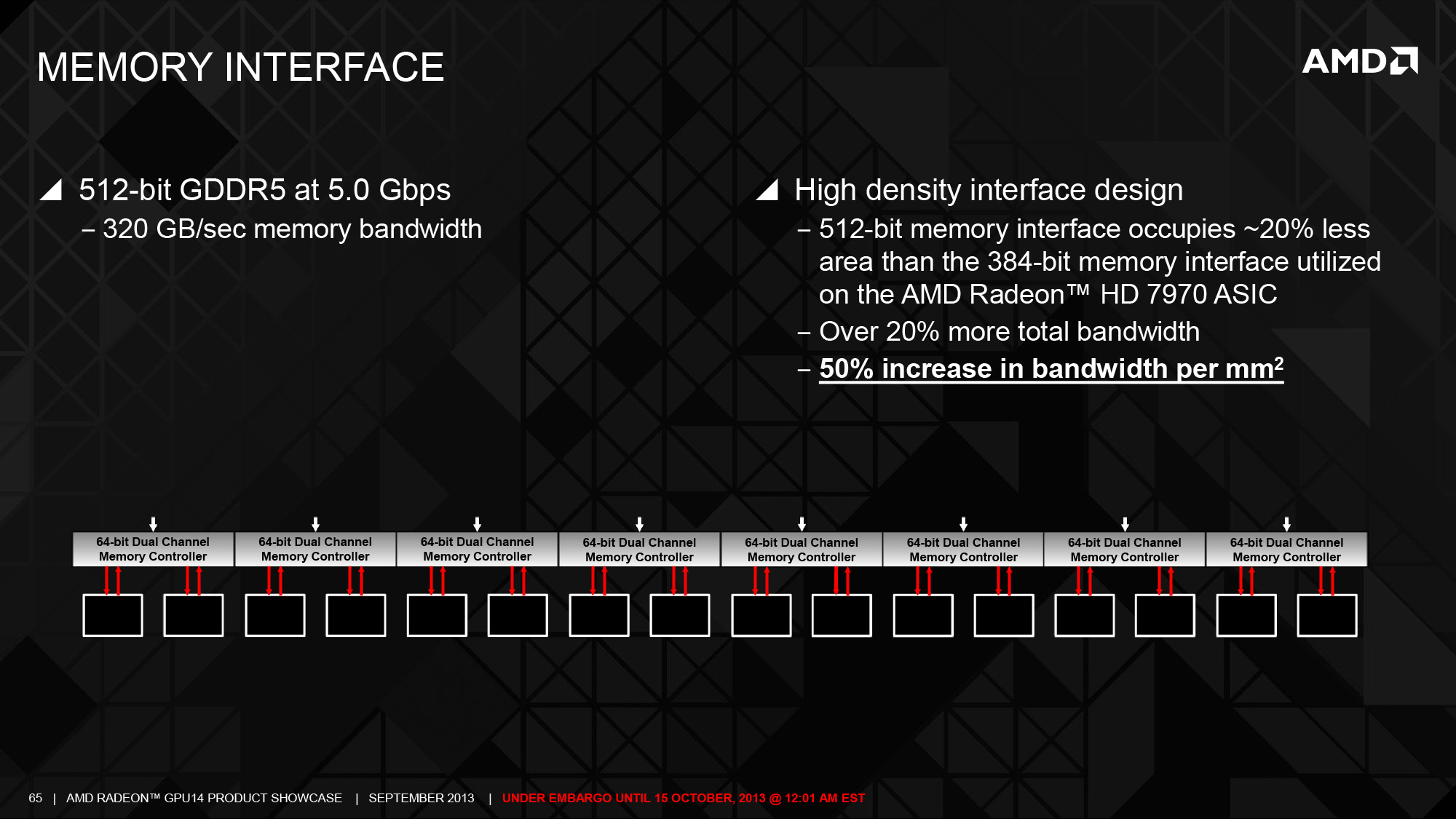

No, they would not. That's the #1 misconception for Hawaii. AMD reduced the memory controller's die size area by 20% from Tahiti's 384-bit bus and because the controller is 512-bit, they used less power hungry / slower GDDR5 chips. The end result is 50% increase in memory bandwidth/mm2. That's engineering winning 101. Your suggestion that AMD would have been better off with a 256-bit or a 384-bit memory controller on 290X doesn't fly. 290X keeps up with 780Ti despite VASTLY superior DP performance, similar perf/watt, and a 438mm2 die size vs. 561mm2 die size for Kepler GK210!

A 256bit memory bus will always mean less memory controllers (smaller die size and complexity), less PCB complexity and i.e. potentially less layers, less power required for the memory bus/ic because you only need 8 of them compared to 10, 12 and so forth.

Why doesnt it fly? As long as it has enough bandwidth, the GPU would definitely be smaller, less transistors and cheaper. Every cent counts. The PCB itself too would be cheaper. If theres no performance benefit in going with a 512bit bus over a 256bit where bandwidth is assumed same why would anyone go with the former? because it sounds nice on paper?

And by power hungry, just how much is that difference?

So it's clear that when comparing efficiency per mm2 of 2 head-to-head competing architectures (290X vs. 780Ti), Hawaii completely smashed its direct competition!! AMD engineers designed a crazy efficient 512-bit memory controller which allowed for 290X to be just 438mm2, or just 24% larger in die size than a 7970, but pack 50% more memory bandwidth and 37.5% more functional units (SPs and TMUs), with 100% the ROPs! That's incredible in hindsight.

This sounds like some sort of AMD PR. Comparing die sizes is also futile mainly because AMD may have gone with a more dense/compact layout plus the architecture is completely different. The more meaningful comparison should be, "..they've added 50% more memory with 37.5% more functional units by adding approx. 44% additional transistors..". ~44% increase in transistors for ~50% across the board which sounds about right. Die size won't be increased by 50% either because the amount of space taken up by each block is different.

It is impressive in terms of how well its faring still to this day (games that favor this arch also helps) but it is not impressive when you start playing with its headroom and the kind of power consumption figures it has. Why do you think it has a bad rep even to this day?

From an

engineering point of view (SP/DP/compute/& perf/mm2), 290X is by far superior to a 780Ti. Just think about it, a 550mm2 is what 390X is rumoured to be which is another way of saying if you scale Hawaii 290X to 550mm2, how would the 561mm2 780Ti compare? It wouldn't stand a chance! All this time NV has been 'lucky' that AMD wouldn't have the b**lls to make a 500mm2+ GPU. Once that happens, NV's 15-20% historical advantage is going to disappear.

The only reason they haven't done that is a) yields, b) too power hungry c) cost. Theres greater risk involved when it could perform less than its competition and AMD cant afford those ever (hence why they took the small die strategy). Same thing can happen with nVIDIA but they can take one or two missteps thanks to their superior financial position.

Its interesting how you compare Kepler with Fiji and call that impressive.. Its like saying "look how great maxwell is vs tahiti!". Its also uncharacteristic of you to start using terms like luck(?)..

NV might hold an advantage in more efficient colour compression, but not the design of the memory controller. As I already said, 290X matches or beats 780Ti in performance despite a 438mm2 die size, but still packs a ton of DP performance, and a 512-bit memory controller. Despite a 561mm2 die, 780Ti could only manage a 384-bit memory controller, far inferior SP and DP performance and can't even outperform the 290X!

Maybe because bandwidth is not the primary contributor for overall performance? I have no idea what DP performance and die size has anything to do with memory controller performance. One good metric is how high they can be clocked and we all know nVIDIA parts are clocked at high stock memory clocks (and have plenty of headroom). AMD's memory controller may well be smaller and use less transistors is also their advantage.

About the only thing 780Ti can rightfully claim over 290X from an engineering point of view is about an

11% advantage in perf/watt at 1440/4K. That's nothing, considering AMD's engineers designed a way better all-around gaming+compute chip at only $550, gave it 4GB of VRAM and packed it in a die size just 78% the size of 780Ti's. That's why members on our forum who are so quick to write off AMD by comparing Maxwell against the outdated R9 200 series and not understanding just what AMD engineers were able to achieve with a 290X are going to be for a major surprise when 390X drops.

Last time I checked, nVIDIA holds a dominant position over the discrete GPU market share along with the workstation/GPGPU market..? Designing a better all around product is good on paper, but for the application is it really required? Does Hawaii need DP capabilities that it has now? What if having a 1/32 rate on Hawaii resulted in the card being ~200W only? What then? I feel really bad for them because they must be selling most of their R290 at

cost price!

Plus you cannot stop the comparisons to maxwell either because it is here now with many different types of SKU and AMD has yet to release anything.

Just wait until 390X - it should level the Titan X in perf/mm2, SP and DP compute performance, and provide > 50% the memory bandwidth and you'll see just how good AMD can design the memory controller.

That's why this idea that Pascal will use HBM2 but AMD won't is some fluffy BS alright.

Im not sure about the last statement but the FP64 capability in Fiji might hurt in terms of power consumption if the same ratio is kept as Hawaii. I can't think of many games that even require DP. It'd actually be more beneficial for them to just limit them like maxwell to save power for their gaming line.

And Id have no idea if their memory controller is better or not. Why? Its HBM vs GDDR5. Not even comparable. Once we get Pascal based cards, maybe then there could be some meaningful comparisons between the two.