RISC-V gets mentioned more often these days as a contingency in case ARM becomes unruly, now that Nvidia is in the process of buying it.

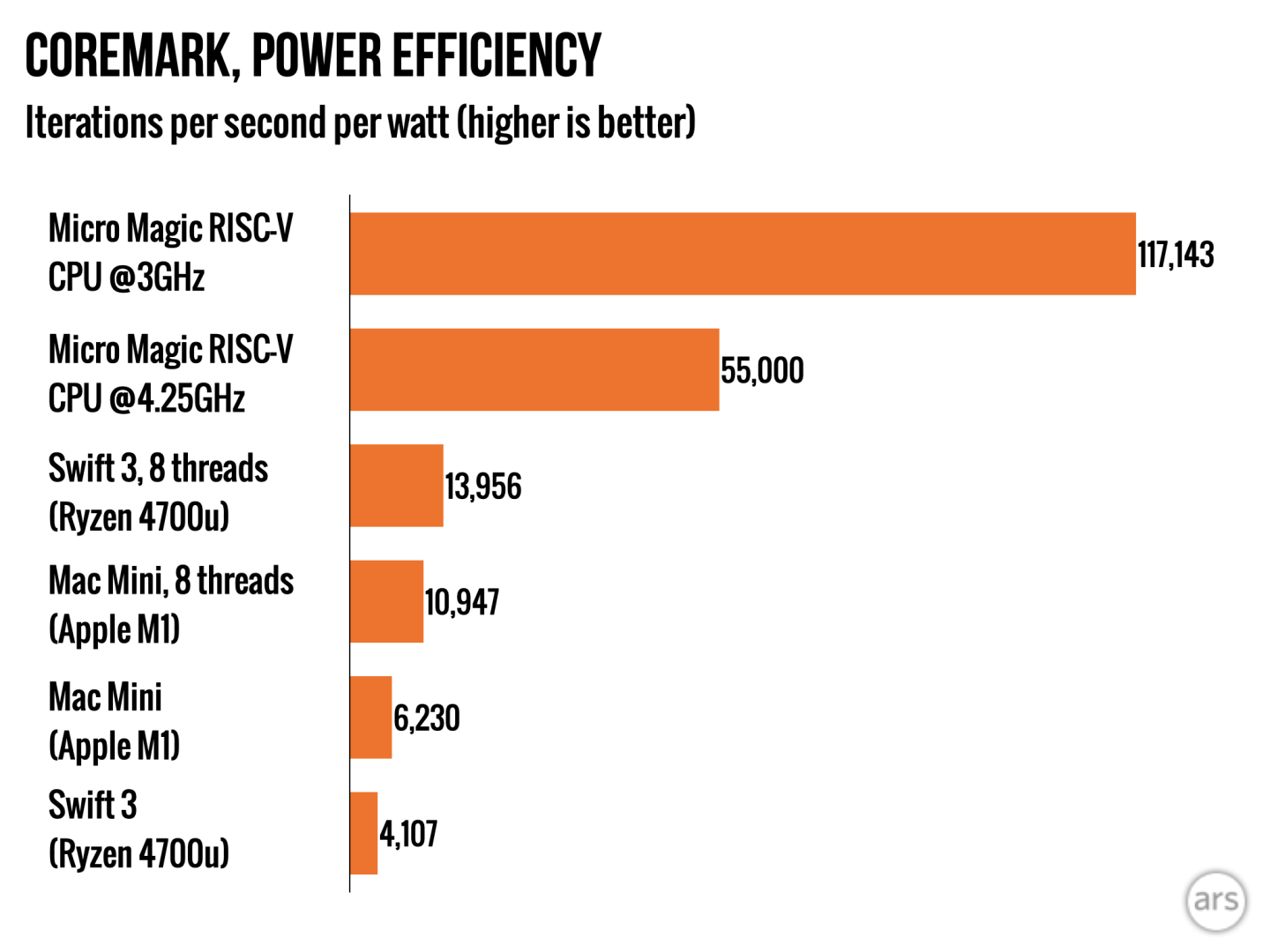

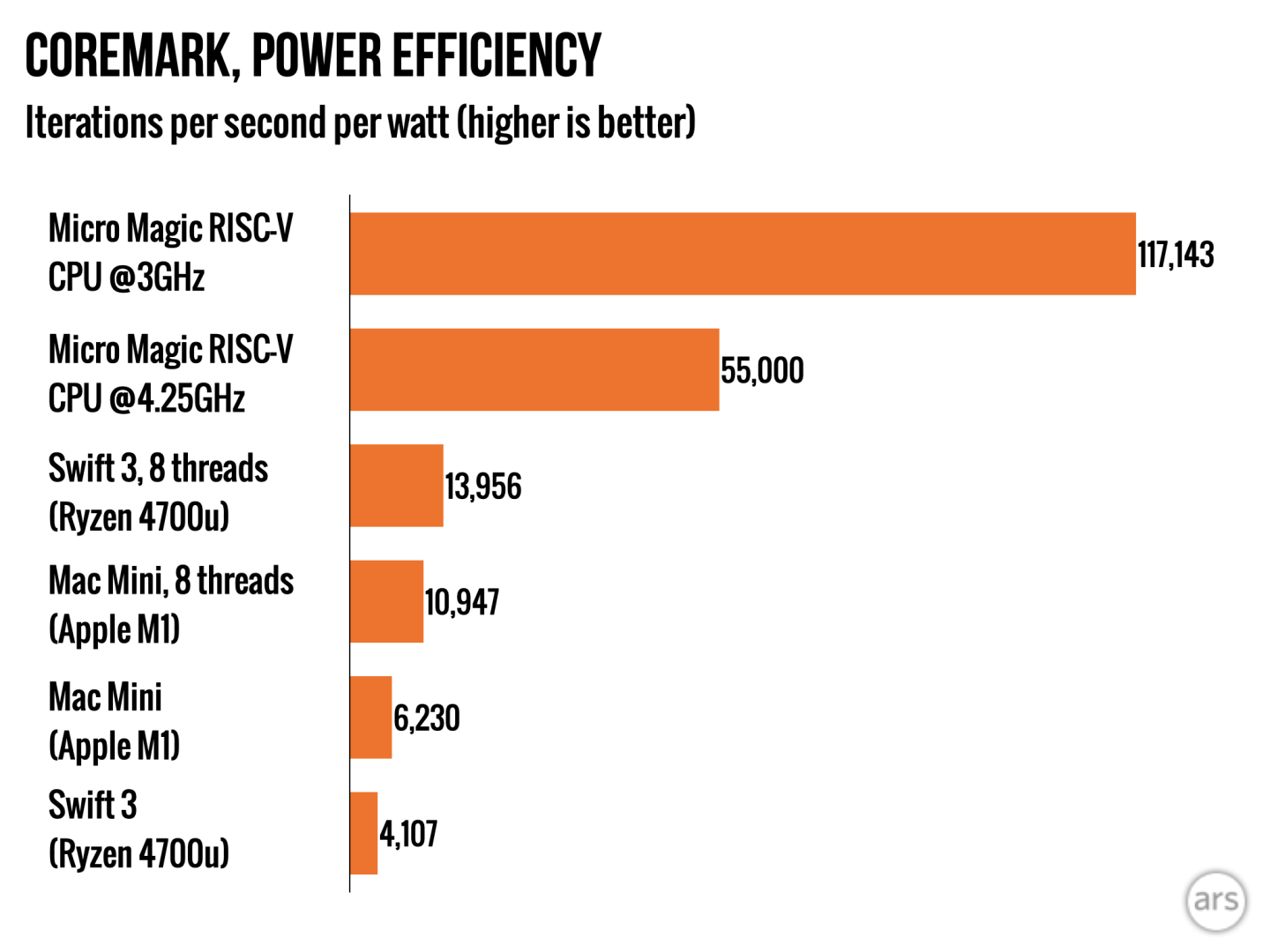

What we seldom see, though, are interesting RISC-V implementations. That said, Micro Magic is making some extremely impressive perf/watt claims about a new RISC-V design:

New RISC-V CPU claims recordbreaking performance per watt

Micro Magic's new CPU offers decent performance with record-breaking efficiency.

arstechnica.com

So are we at the beginning of the rise of RISC-V?