What about if AMD will create shared front-end for whole CCX? This would bring some advantages out of CMT while still using SMT for back-end.

1) This could save some transistors and increase throughput.

2) It allows HW control over threads within CCX. It can eliminate crazy windows scheduler shuffle.

I myself was previously toying with the thought of

moving some of the front end's functionality onto the IOD even. Problem is always data locality, you don't want to move critical data too far away from where it's actually needed to keep latency down.

Maybe the decoder could be situated before the core specific front end, so that all instructions hitting L2/3$ are already in the optimized internal uop format. Branch prediction, specifically TAGE that relies on long histories to work ideally, could also profit from being handled centrally. But aside latency to make efficient use of such a topology the task scheduler would need to be moved from OS into hardware, and that's something which for AMD's RTG repeatedly turned out to be a hindrance instead an advantage compared to Nvidia's driver controlled scheduling. On the other hand centralized hardware scheduling would allow for clean separation of INT and FP units as well as making HSA more feasible again. But as of now all of that is not feasible and won't happen.

Unlikely after a mere 20% area reduction unless their SIMD unit design is incredibly area efficient - they just doubled FP with Zen2 as it is, adding AVX-512 without that increase would be similar to having AVX2 before Zen2.

512bit FP can still happen by the way of combining the two 256bit FMACs already there. The issue with AVX-512 are all the additional instructions that are then also usable with 128bit and 256bit FP and likely need quite some area as well.

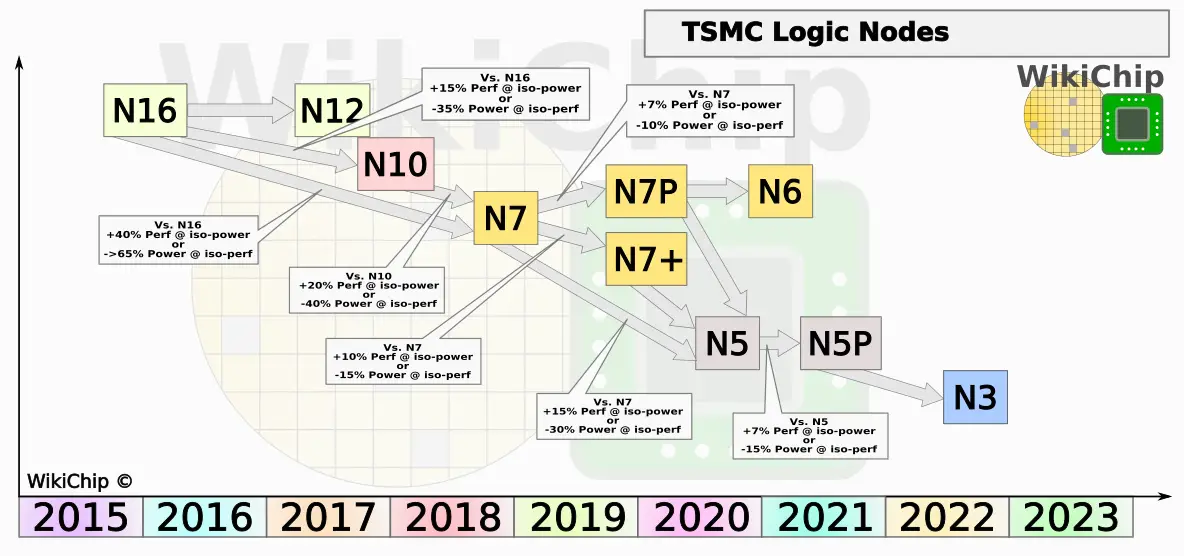

Zen 4 on 5nm with up to 50% higher density (compared to 7nm+, up to 80% compared to 7nm) could allow a bigger increase in transistors again, allowing another doubling of the FP unit with accordingly widened data paths/loads/stores etc.

It was mentioned before that many changes to the Zen 2 core were initially planned for Zen 3, so I'm expecting the Zen 3 core to be a much more polished coherent implementation of many parts that were premiered in Zen 2 (aside FP the newly introduced TAGE branch predictor is a primary candidate for such).