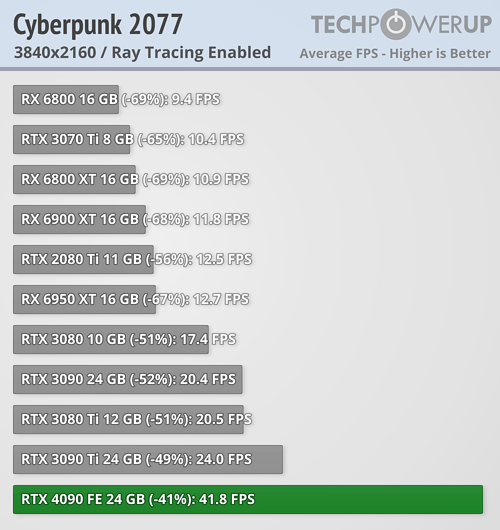

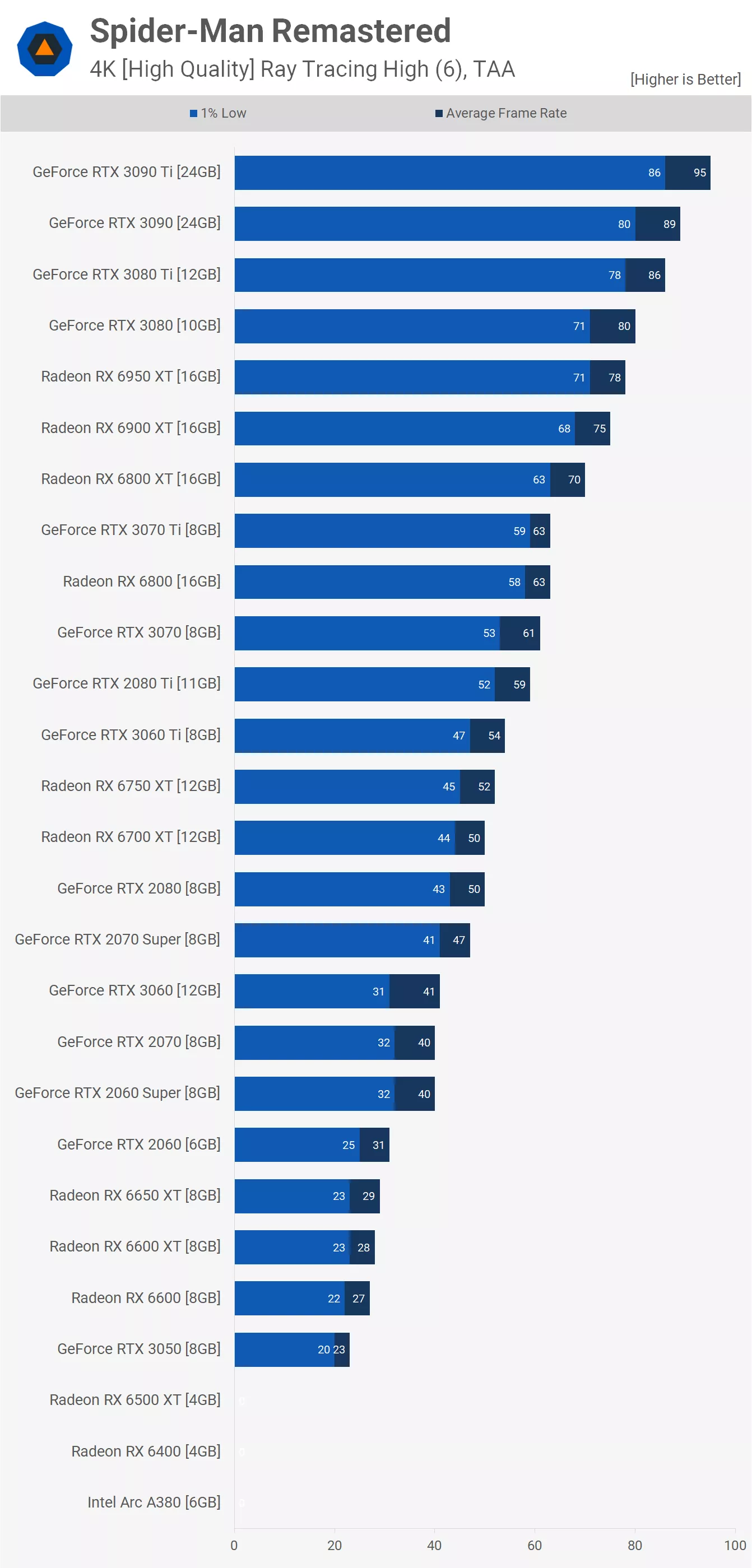

Cyberpunk was on consoles too, yes, but it wasn't an exclusive and it made full use of the capabilities of high end PCs. Spider-Man is a PC version of a PS5 version of a PS4 game that makes little use of RT. We will see what performance will be like in future titles, but based on AMD's data, it doesn't look like RDNA 3 will be remotely competitive.

Type anything good? I was very optimistic about RDNA 3 right up until yesterday, in this very thread, and I got called out on it after the hype train derailed, lol. I also generally like AMD's hardware. They haven't been doing so well recently in the desktop market, though, so my recent posts are naturally a bit negative.

I thank you for the reply.

From my POV, a bit negative is an understatement. Why are you even contending my post about Spider-Man then? Why not be positive on the idea that all PS5 ports will do well on AMD cards, instead of hand-waving it away as moot or worse? Why move the goalposts so that only certain RT titles and effects matter? If you are suggesting that titles that don't use every possible effect should be discounted, why? Is Spider-Man not fun? Have reviewers and players not remarked on how cool and value-added the reflections are? I rarely see anyone mention the better shadows, or proper/correct lighting in games they play. Yeah, a couple of IQ aficionados, but that's about it. That's your cue, you silly outlier people who seem to disproportionately post in tech forums.

If games use those RT effects, and many of us don't turn them on, what did we lose? I only use reflections; the rest cost me way too much performance on my 3060 Ti for what I consider a terrible trade-off.

I hope you can see where I am going with this. Even your remark suggesting AMD themselves don't expect to be remotely competitive is negative hyperbole. They just announced partnerships with game engines. I drew a very different conclusion than you did from that info. What I took away is that their future games will optimize for the ray tracing AMD hardware uses. That can only help performance in those titles.

And let me close my remarks by saying again, I think it is absurd to use 2077 as the poster child. The game is so badly optimized, they are still releasing major updates all this time later. For a single-player game! SMDH. Not what I'd call a great standard-bearer. And everyone knows it runs poorly on AMD hardware, period. Constantly holding it up makes it look like an agenda-driven talking point, because it reads like sloganeering when it is used and other examples are discounted. No one is denying that AMD is behind on one of the most important graphics techs. However, like all graphics tech in the past, like TressFX, HairWorks, PhysX, etc., it is entirely possible for a particular game to implement and favor one vendor over the other. In this case it is likely to close the gap in those titles, as opposed to leading. "Not remotely competitive" is negativity for its own sake. There is no way we can know what the performance in those titles will be like yet.

That's just, like, my opinion, man.