I got some sleep and played around with the 50% improvement. I think I'm thinking about this clearly now; if not, please correct me.

| Row | CU | Watt | FPS | Perf/Watt/CU |
|-----|----|------|-----|--------------|
| 1 | 40 | 225 | 100 | 0.0111111111 |
| 2 | 40 | 150 | 100 | 0.0166666667 |
| 3 | 40 | 225 | 150 | 0.0166666667 |
| 4 | 80 | 225 | 300 | 0.0166666667 |
| 5 | 80 | 300 | 400 | 0.0166666667 |
| 6 | 80 | 275 | 366 | 0.0166363636 |
| 7 | 64 | 275 | 293 | 0.0166477273 |

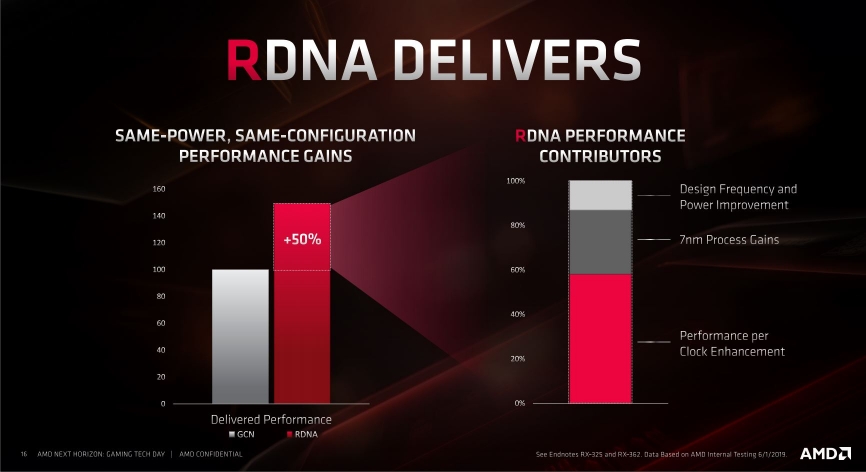

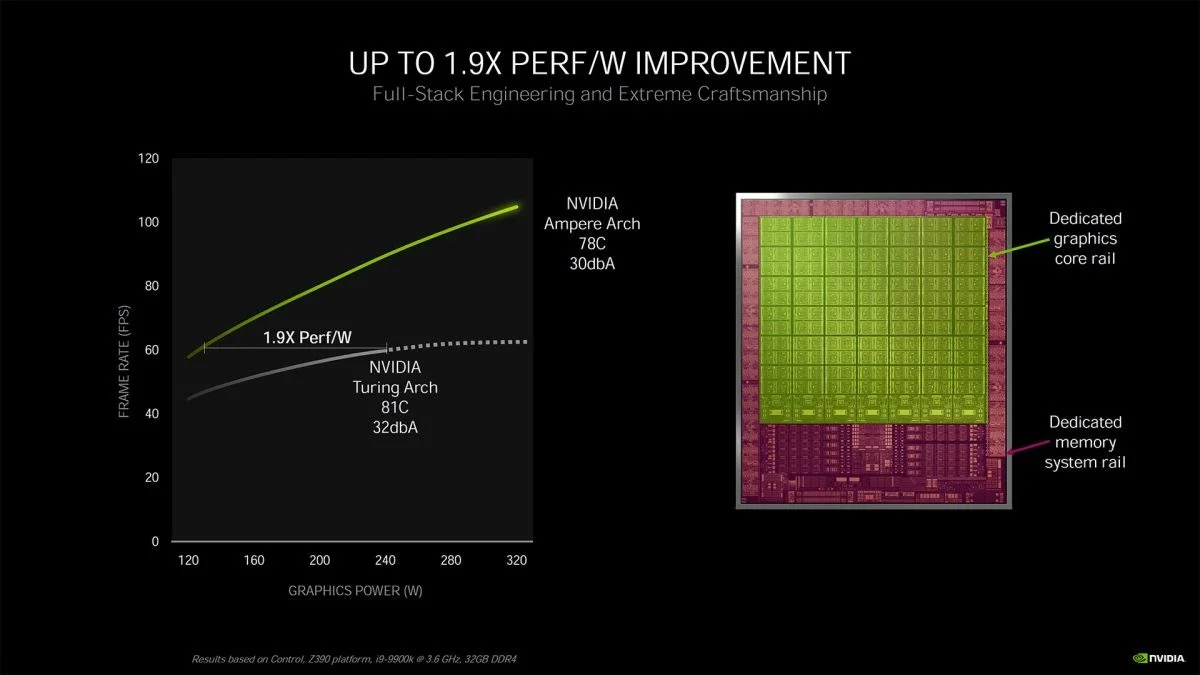

So, row 1 is the baseline 5700 XT with an imaginary 100 fps; the last column is the efficiency normalized per CU (FPS / Watt / CU). Then, just to convince myself that my numbers are correct, rows 2 and 3 show two different ways to get a 50% improvement. Row 2 is if the power usage drops from 225 W to 150 W with everything else the same (150 + 75 = 225). We get 0.0167, which is 50% better than 0.0111. Row 3 is if I keep the power the same and bump the performance by 50% (100 + 50 = 150); we see the same 0.0167.

Now that I've convinced myself the Excel sheet is valid, let's take AMD's word about a 50% improvement and apply it to what big Navi could potentially be. Row 4 is big Navi with 80 CUs at the same wattage: we'd need 300 fps to get to the 50% improvement. That's really high. But we also know that they'll be bumping up the power, so arbitrarily say 300 W. Row 5 would then require 400 fps to get to the 50% improvement per CU. Row 6 is if we dial the power down to 275 W. All crazy high FPS, so maybe it's not 80 CUs. Row 7 is a 64 CU part at a reasonable 275 W, which still requires 293 fps to get to the 50% improvement. The 3080 is only around 200 fps... so what's up with the 50% improvement?
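For anyone who wants to check the spreadsheet, here is a minimal Python sketch of the same per-CU arithmetic. The function names are my own (hypothetical), the 100 fps baseline is the imaginary figure from row 1, and the 1.5 gain is just AMD's claimed 50% taken at face value.

```python
def perf_per_watt_per_cu(fps, watts, cus):
    """Efficiency normalized per CU: FPS / Watt / CU (last column of the table)."""
    return fps / watts / cus


def required_fps(watts, cus, baseline=(100.0, 225.0, 40), gain=1.5):
    """FPS needed at (watts, cus) to hit `gain` times the baseline per-CU efficiency.

    baseline is (fps, watts, cus) for the 5700 XT row; 100 fps is an imaginary number.
    """
    base_fps, base_watts, base_cus = baseline
    target = gain * perf_per_watt_per_cu(base_fps, base_watts, base_cus)
    return target * watts * cus


# Hypothetical big Navi configurations taken from rows 4 to 7 of the table.
for watts, cus in [(225, 80), (300, 80), (275, 80), (275, 64)]:
    print(f"{cus} CU @ {watts} W needs about {required_fps(watts, cus):.1f} fps")
```

This should land on roughly 300, 400, 366.7 and 293.3 fps, which lines up with the (rounded-down) FPS values in rows 4 to 7.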

Here's what the numbers would look like if we don't normalize per CU and instead just look at the entire GPU.

| Row | Watt | FPS | Perf/Watt |
|-----|------|-----|-----------|
| 1 | 225 | 100 | 0.4444444444 |
| 2 | 150 | 100 | 0.6666666667 |
| 3 | 225 | 150 | 0.6666666667 |
| 4 | 300 | 200 | 0.6666666667 |
| 5 | 275 | 184 | 0.6690909091 |

Rows 4 and 5 are big Navi with some Watt and FPS estimates. These are much more reasonable numbers, but then the 50% doesn't come from an IPC improvement; it comes mainly from the increase in CUs (40 -> XX).
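The whole-GPU version can be sketched the same way. Again, the helper names are hypothetical and the baseline is the imaginary 100 fps at 225 W. The last helper is an extra sanity check of my own: it takes a CU count as an assumption (80 and 64 are just the guesses from the first table, not confirmed specs) and shows what the whole-GPU numbers would imply per CU.

```python
BASE_FPS, BASE_WATTS, BASE_CUS = 100.0, 225.0, 40  # imaginary 5700 XT baseline


def perf_per_watt(fps, watts):
    """Whole-GPU efficiency: FPS / Watt."""
    return fps / watts


def required_fps_whole_gpu(watts, gain=1.5):
    """FPS needed at `watts` for `gain` times the baseline whole-GPU perf/watt."""
    return gain * perf_per_watt(BASE_FPS, BASE_WATTS) * watts


def per_cu_ratio(fps, watts, cus):
    """Per-CU efficiency relative to the baseline (1.0 means no per-CU change)."""
    baseline = BASE_FPS / BASE_WATTS / BASE_CUS
    return (fps / watts / cus) / baseline


for watts in (300, 275):
    print(f"{watts} W needs about {required_fps_whole_gpu(watts):.1f} fps")

# Assumed CU counts, only to illustrate where the 50% would be coming from.
print(f"80 CU, 200 fps @ 300 W: per-CU ratio {per_cu_ratio(200, 300, 80):.2f}")
print(f"64 CU, 184 fps @ 275 W: per-CU ratio {per_cu_ratio(184, 275, 64):.2f}")
```

Under these assumptions the per-CU ratio comes out below 1.0 for both guesses, which is consistent with the 50% coming from more CUs rather than from each CU getting more efficient.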