
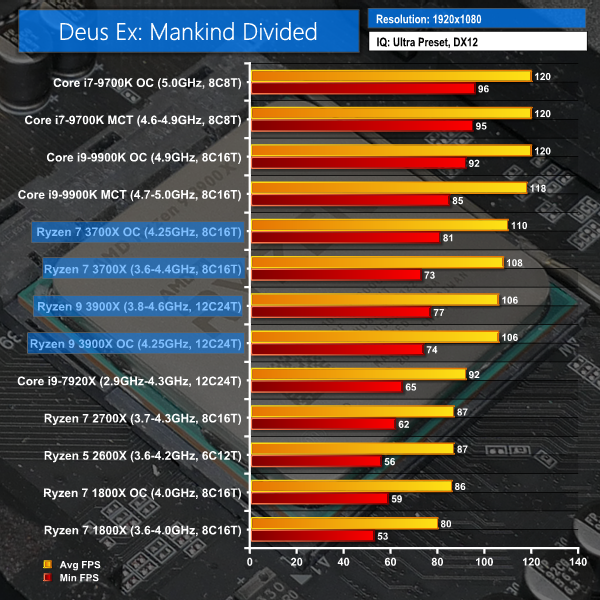

You can't argue that a larger L3 doesn't reduce the number of trips to memory required. The only question is by how much. That's why headline figures mean diddly squat.

Seconded.

In the graphs below we can clearly see the influence of L3 cache size: the 2400G has a higher clock but only 4MB of L3 (compared with the R5 1400/1500X).

In games, at roughly 10% lower frequency, the R5 1400 (8MB) is only 3% behind, while the 1500X (16MB) is 10% ahead despite its lower frequency. Those percentages stretch even further in the applications graph.

https://www.hardware.fr/articles/973-22/indices-performance-cpu.html
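
For what it's worth, here is a rough sketch of the per-clock arithmetic behind the R5 1400 figure quoted above. It assumes performance scales linearly with frequency, which is a simplification, and it ignores every other difference between the chips. The function name `per_clock_ratio` is made up for illustration, and the inputs are just the approximate percentages from this post, not exact values read off the graphs. The 1500X is left out because its exact frequency deficit isn't stated here.

```python
def per_clock_ratio(relative_performance: float, relative_frequency: float) -> float:
    """Relative performance per clock.

    Assumption: performance scales linearly with frequency, so dividing
    relative performance by relative frequency isolates everything that
    is not clock speed (cache size among other things).
    """
    return relative_performance / relative_frequency


# R5 1400 vs. 2400G in games, using the rough figures from the post:
# about 3% behind (0.97) at roughly 10% lower frequency (0.90).
r5_1400 = per_clock_ratio(relative_performance=0.97, relative_frequency=0.90)
print(f"R5 1400 per-clock vs 2400G: {r5_1400:.3f}")  # prints 1.078
```

So under that assumption the 8MB part comes out roughly 8% ahead per clock in games, which is the gap the raw "3% behind" figure hides.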