Fix your text, I can't see it.

Deliberately delaying launch for no other reason than to wait for Intel makes no sense, but I don't think anyone is arguing that.

In the case of the hypothetical 2800X, it might be that AMD just missed their performance target but has a sound plan in place to improve yields and release the 2800X as soon as they have the production rate and inventory for a launch. If they could be certain there was no 8-core Intel coming, they might have saved themselves the effort and focused all their attention on the next generations. But they probably assumed it would arrive. So, in this scenario, a decision to proceed with the 2800X can be seen as a response to Intel.

Competitive analysis is very much in play. AMD does extensive competitive scenario planning, as recently revealed in an interview with SVP Forrest Norrod, where he points out that they planned EPYC 2 based on what they estimated Intel would do on 10nm. And, now that Intel is stumbling, you can be sure that AMD is adjusting to make the most of the situation.

While this may be the case, it may also be a sign of sluggishness, which is a bad trait. Agility, the ability to respond quickly to competition, is often lauded in business. So I think it makes a lot of sense to speculate on how each competitor plans to respond to the known or suspected plans of the other.

And to me, speculating on the hardware level is the most fun.

Speculation: AMD's response to Intel's 8-core i9-9900K

Page 5

PeterScott

Platinum Member

- Jul 7, 2017

> I do not really feel that Intel needs to release an 8 core at the moment. The 8700 and the 8700K do quite well in comparison to the 2700X, mainly because their base and boost clocks are so high.
>
> Also, to be in the same power envelope as AMD, Intel is going to have to lower the voltages and clocks. And then it will be difficult to sell this i9-9900 at a price that is interesting for customers.
>
> Only people who favor Intel above all else and against all reason would buy such a chip.
>
> When the 7nm series from AMD comes out, Intel is going to feel the heat and will no longer have any choice.

While Intel doesn't "need" them, they need something for this year. What do you expect them to do, just release nothing for desktop this year? This isn't about competing with AMD as much as it is about Intel having a new desktop product this year.

I also don't buy your pessimistic view of an 8-core CFL. It should run 6 cores at up to nearly 5GHz just as well as the 6-core Coffee Lake does. By default it will have to scale back clock speed when running all 8 cores, but that should still put it easily ahead of Ryzen and the 6-core CFL in highly threaded tasks.

On top of that, I expect dedicated overclockers with good motherboards and good cooling will likely get all 8 cores running close to 5GHz, and that should make a lot of them very happy.

I bet a lot of people are excited about an 8C CFL.

cbn

Lifer

- Mar 27, 2009

8C/16T, large iGPU with HBM type memory, but this is mostly for laptops.

(Essentially they would move part of the mainstream off the desktop by doing this, rather than overcrowding the desktop even more.)

Sure, that's the fantasy part. Now the realistic response?

All they have to do is combine a 12nm 8C/16T CPU die and one of their GPU dies via Infinity Fabric.

PeterScott

Platinum Member

- Jul 7, 2017

> All they have to do is combine a 12nm 8C/16T CPU die and one of their GPU dies via Infinity Fabric.

Off-die, Infinity Fabric is the equivalent of PCIe links, which is fine, because PCIe is the normal way to connect GPUs.

But you are moving the goal posts, because a GPU connected to a CPU via PCIe is NOT an iGPU like you were talking about before.

cbn

Lifer

- Mar 27, 2009

> Off-die, Infinity Fabric is the equivalent of PCIe links, which is fine, because PCIe is the normal way to connect GPUs.
>
> But you are moving the goal posts, because a GPU connected to a CPU via PCIe is NOT an iGPU like you were talking about before.

It would definitely be an iGPU because the memory would be shared with the CPU (i.e., it would be an APU).

William Gaatjes

Lifer

- May 11, 2008

> While Intel doesn't "need" them, they need something for this year. What do you expect them to do, just release nothing for desktop this year? This isn't about competing with AMD as much as it is about Intel having a new desktop product this year.
>
> I also don't buy your pessimistic view of an 8-core CFL. It should run 6 cores at up to nearly 5GHz just as well as the 6-core Coffee Lake does. By default it will have to scale back clock speed when running all 8 cores, but that should still put it easily ahead of Ryzen and the 6-core CFL in highly threaded tasks.
>
> On top of that, I expect dedicated overclockers with good motherboards and good cooling will likely get all 8 cores running close to 5GHz, and that should make a lot of them very happy.
>
> I bet a lot of people are excited about an 8C CFL.

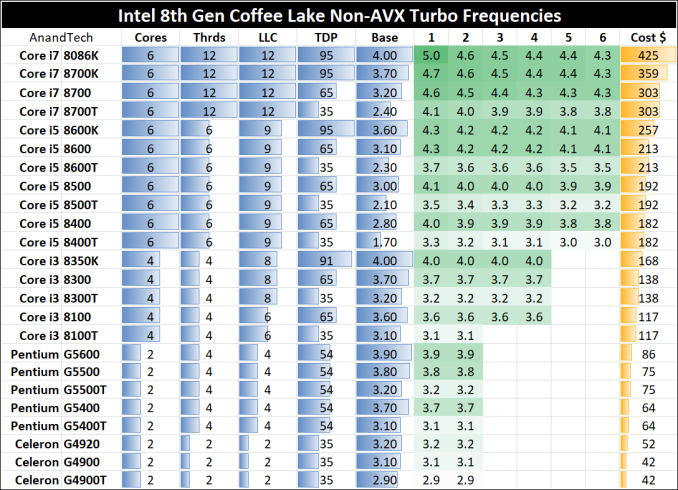

Does the 8700K really run 6 cores at 5GHz with Turbo Boost?

Doesn't Intel also boost depending on electrical and power dissipation limits?

And have a favorite core that clocks the highest?

And clock down depending on the number of active cores?

I would really be surprised if the 8700K does 5GHz on all cores at once at 100% load.

If that is the case, running 8 cores at up to 5GHz will come at a cost, and that cost should not turn it into a marketing failure.

Which makes me wonder: which Intel socket supports at least a 125W TDP?

From the ARK site:

> Thermal Design Power (TDP) represents the average power, in watts, the processor dissipates when operating at Base Frequency with all cores active under an Intel-defined, high-complexity workload. Refer to Datasheet for thermal solution requirements.

Peak power is going to be very high then, even with the great 14nm process Intel has.
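The questions above about boosting within power limits can be made a little more concrete. The ARK TDP figure is an average, and turbo behavior is governed by power limits commonly called PL1 and PL2, with PL1 applied to power averaged over a time window (tau). The sketch below assumes an exponentially weighted moving average model of that window; the function name and the 95 W / 119 W / 28 s values are illustrative assumptions, not figures from an Intel datasheet or from this thread.

```python
import math

def granted_power(demand_w, pl1_w, pl2_w, tau_s, dt_s=1.0):
    """Sketch of a PL1/PL2-style package power limiter (hypothetical values).

    demand_w: power (W) the workload would like to draw at each time step.
    PL2 caps short bursts; PL1 is enforced on an exponentially weighted
    moving average (EWMA) of package power with time constant tau_s.
    Returns the power actually granted at each step.
    """
    alpha = 1.0 - math.exp(-dt_s / tau_s)  # EWMA weight for one step
    avg_w = 0.0                             # running average of granted power
    granted = []
    for want in demand_w:
        # Allow up to PL2 while the running average is below PL1,
        # otherwise hold the package at PL1.
        limit = pl2_w if avg_w < pl1_w else pl1_w
        p = min(want, limit)
        avg_w += alpha * (p - avg_w)
        granted.append(p)
    return granted

# Hypothetical heavy all-core load asking for 150 W.
trace = granted_power([150.0] * 120, pl1_w=95.0, pl2_w=119.0, tau_s=28.0)
print(trace[0], trace[-1])  # 119.0 95.0
```

In this toy model the chip bursts at PL2 for roughly 45 seconds and then settles at PL1, which is why sustained all-core clocks can sit well below the advertised single-core turbo.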

I do not want to derail this fine thread.

It's just that it has happened before: Intel came out with a CPU that instantly made its other, supposedly better, options obsolete and a laughing stock.

That is why I think Intel will postpone any launch for now.

Edit:

I was so surprised that I looked it up: the 5GHz Turbo Boost is for a single core, and it is on the 8086K.

The 8700K tops out at 4.7GHz.

I will stop derailing now. I will happily wait to see what happens with the 7nm Ryzens.

PeterScott

Platinum Member

- Jul 7, 2017

> Does the 8700K really run 6 cores at 5GHz with Turbo Boost?
>
> Doesn't Intel also boost depending on electrical and power dissipation limits?
>
> And have a favorite core that clocks the highest?
>
> And clock down depending on the number of active cores?
>
> I would really be surprised if the 8700K does 5GHz on all cores at once at 100% load.
>
> If that is the case, running 8 cores at up to 5GHz will come at a cost, and that cost should not turn it into a marketing failure.
>
> Which makes me wonder: which Intel socket supports at least a 125W TDP?

You can look up the boost table for the 8700K. By default, the 8-core should run 6 cores just as well as that.
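A boost table like the one mentioned above maps the number of active cores to a maximum turbo bin. The sketch below uses the i7-8700K values as commonly listed in reviews (4.7GHz with one core active down to 4.3GHz with all six); treat them as an assumption to check against Intel's documentation. No table is assumed for the 8-core part, since none was public at the time, and the helper name is hypothetical.

```python
# Maximum turbo bin by number of active cores for the i7-8700K, as commonly
# listed in reviews (GHz). These are ceilings: power and thermal limits can
# still pull clocks lower. No table is assumed for the unreleased 8-core part.
I7_8700K_TURBO_GHZ = {1: 4.7, 2: 4.6, 3: 4.5, 4: 4.4, 5: 4.4, 6: 4.3}

def max_turbo_ghz(table, active_cores):
    """Return the turbo ceiling for a given number of active cores."""
    if active_cores not in table:
        raise ValueError(f"no turbo bin listed for {active_cores} active cores")
    return table[active_cores]

for n in range(1, 7):
    print(n, max_turbo_ghz(I7_8700K_TURBO_GHZ, n))
```

With these values the stock all-core ceiling is 4.3GHz, which is consistent with the point that ~5GHz on all six cores requires overclocking.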

Running all 6 cores at ~5GHz on the 8700K requires overclocking, but this has been very successful.

Running all 8 cores at ~5GHz on the 8C CFL will also require overclocking, and while it will be less successful than on the 8700K, it should still work on a high percentage of chips with good motherboards and good cooling. More people will have to back off to 4.8GHz or maybe even 4.7GHz all-core, but that will still be great performance, beating anything on a mainstream socket.

You should realize that high-quality motherboards are capable of delivering well above the specified CPU TDP. Extreme overclocking of the 8700K with LN2 has 200+ watts going to the CPU.

So I am really not getting why you think 8-core overclocking will be so difficult. It will be harder than 6-core because you need more power and cooling, but high-quality motherboards can easily deliver the power, and liquid cooling should handle that as well.

I am baffled by your pessimism.

> It would definitely be an iGPU because the memory would be shared with the CPU (i.e., it would be an APU).

I would consider such a setup a dGPU that shares memory and bandwidth with the CPU, and I don't see the point of that, since a dGPU with its own memory will be faster.

William Gaatjes

Lifer

- May 11, 2008

> You can look up the boost table for the 8700K. By default, the 8-core should run 6 cores just as well as that.
>
> Running all 6 cores at ~5GHz on the 8700K requires overclocking, but this has been very successful.
>
> Running all 8 cores at ~5GHz on the 8C CFL will also require overclocking, and while it will be less successful than on the 8700K, it should still work on a high percentage of chips with good motherboards and good cooling. More people will have to back off to 4.8GHz or maybe even 4.7GHz all-core, but that will still be great performance, beating anything on a mainstream socket.
>
> You should realize that high-quality motherboards are capable of delivering well above the specified CPU TDP. Extreme overclocking of the 8700K with LN2 has 200+ watts going to the CPU.
>
> So I am really not getting why you think 8-core overclocking will be so difficult. It will be harder than 6-core because you need more power and cooling, but high-quality motherboards can easily deliver the power, and liquid cooling should handle that as well.
>
> I am baffled by your pessimism.

That is the thing.

I was not talking about overclocking. Just the regular processor doing what it is supposed to do by default.

Not everybody overclocks.

And when they do, it takes effort, mostly financial effort.

That makes the CPU costly, as you described above: an expensive motherboard, memory, cooling system and power supply.

You can be baffled, but OEMs have to build machines and want to make a profit.

It is hard to justify an expensive machine now that AMD has a good product that will do fine for most users.

To turn back to the topic and look at AMD's Ryzen: the X370/X470 boards made for overclocking are generally more expensive than, for example, the B350 boards (and B450, when it finally appears?), which makes sense, of course. The A320 is the bottom of the barrel.

cbn

Lifer

- Mar 27, 2009

> I would consider such a setup a dGPU that shares memory and bandwidth with the CPU, and I don't see the point of that, since a dGPU with its own memory will be faster.

The APU would have HBM. So it wouldn't be slower than a dGPU with GDDR5/6 or HBM (see first quote in post #103).

PeterScott

Platinum Member

- Jul 7, 2017

> The APU would have HBM. So it wouldn't be slower than a dGPU with GDDR5/6 or HBM (see first quote in post #103).

Connected where? One of the dies takes an off-die latency penalty all the time. In a split-die arrangement it makes much more sense to give each die its own memory.

In an actual APU, you have on-die access through the cache, so both the CPU and GPU get essentially full-speed access to memory with no off-die penalty. But split-die is not an APU, and in that case you really want a memory controller in each part.

> Connected where? One of the dies takes an off-die latency penalty all the time. In a split-die arrangement it makes much more sense to give each die its own memory.
>
> In an actual APU, you have on-die access through the cache, so both the CPU and GPU get essentially full-speed access to memory with no off-die penalty. But split-die is not an APU, and in that case you really want a memory controller in each part.

Wouldn't both the CPU and GPU in this setup have to run at lower clock speeds to keep the product within a reasonable range for AIOs and uSFF PCs?
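Whether lower clocks are needed comes down to the power budget. A common first-order rule is that dynamic CMOS power scales with C·V²·f, so a modest clock reduction that also allows a lower voltage cuts power by more than the clock reduction alone. A minimal sketch, assuming that rule and ignoring leakage; the function name and the 10% figures are hypothetical:

```python
def relative_dynamic_power(freq_ratio, volt_ratio):
    """First-order CMOS dynamic power model: P is proportional to C * V^2 * f.

    Returns new power / old power when frequency and voltage are scaled by the
    given ratios and switched capacitance C stays the same. Leakage power is
    ignored, so this is only a rough guide.
    """
    return freq_ratio * volt_ratio ** 2

# Hypothetical example: 10% lower clocks, allowing 10% lower voltage.
print(round(relative_dynamic_power(0.9, 0.9), 3))  # 0.729
```

Under this model, giving up 10% of clock speed buys roughly 27% less dynamic power, which is the usual reason compact systems run both CPU and GPU slightly below their peak clocks.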

cbn

Lifer

- Mar 27, 2009

> Connected where? One of the dies takes an off-die latency penalty all the time. In a split-die arrangement it makes much more sense to give each die its own memory.
>
> In an actual APU, you have on-die access through the cache, so both the CPU and GPU get essentially full-speed access to memory with no off-die penalty. But split-die is not an APU, and in that case you really want a memory controller in each part.

The CPU would have the dual channel DDR4 controller and the GPU die would have the HBM controller.

So (for example) a person could have an 8C/16T APU laptop with 8GB/16GB/32GB DDR4 + 4GB HBM (total system memory 12GB to 36GB).
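The memory totals in that example follow from counting both pools as system memory: DDR4 on the CPU die's dual-channel controller plus the 4GB of HBM on the GPU die. A trivial sketch of that arithmetic, with hypothetical names:

```python
# Memory totals for the hypothetical split-die laptop described above:
# DDR4 on the CPU die's controller plus 4GB HBM on the GPU die.
HBM_GB = 4
DDR4_OPTIONS_GB = (8, 16, 32)

def total_system_memory_gb(ddr4_gb, hbm_gb=HBM_GB):
    """Both pools count toward system memory in this split-die design."""
    return ddr4_gb + hbm_gb

print([total_system_memory_gb(d) for d in DDR4_OPTIONS_GB])  # [12, 20, 36]
```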

William Gaatjes

Lifer

- May 11, 2008

I wonder if HBM2 is a good choice for the CPU itself. A CPU has more erratic memory access than a GPU, which usually accesses memory in strides.

I have always understood from detailed explanations of HBM2 that it is not very good at erratic access, although pseudo-channels help hide that a bit.

A good memory controller with a lot of cache should help.

The GPU and CPU could be on one die, but that die would need a lot of pads to connect.

For cost-effective binning, a separate CPU die and GPU die next to the HBM2 would be better. It could still be called an APU if AMD could use EMIB from Intel, which is of course just a dream I hope to see happen.

And after that, a possible future HBM2 with logic in the logic die, not only to access the DRAM stacks but also to clear or set bits autonomously. That would make a memset or memcpy very easy to do.
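The contrast above between erratic CPU access and strided GPU access is largely about DRAM row locality. The toy model below counts how often an access lands in an already-open row. It is a generic open-page DRAM model, not a model of HBM2 specifically (it ignores pseudo-channels, refresh and timing), and the row size, bank count, function name and access patterns are assumptions chosen for illustration.

```python
import random

def row_hit_rate(addresses, row_bytes=1024, banks=16):
    """Fraction of accesses that hit an already-open DRAM row.

    Simplified open-page model (illustrative parameters, not HBM2 datasheet
    values): each bank keeps one row open, consecutive rows are interleaved
    across banks, and an access hits only if its row is the one open in its bank.
    """
    open_row = {}  # bank -> currently open row
    hits = 0
    for addr in addresses:
        row = addr // row_bytes
        bank = row % banks
        if open_row.get(bank) == row:
            hits += 1
        else:
            open_row[bank] = row  # row miss: close the old row, open this one
    return hits / len(addresses)

N = 16_000
strided = [i * 64 for i in range(N)]  # GPU-like: 64-byte steps through memory
rng = random.Random(0)
scattered = [rng.randrange(1 << 30) for _ in range(N)]  # CPU-like: random in 1 GiB

print(row_hit_rate(strided))    # 0.9375 (15 of every 16 accesses hit)
print(row_hit_rate(scattered))  # close to 0
```

Real memory controllers reorder requests and caches absorb much of the CPU's irregular traffic, which is why a good controller with plenty of cache helps.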

cbn

Lifer

- Mar 27, 2009

> Wouldn't both the CPU and GPU in this setup have to run at lower clock speeds to keep the product within a reasonable range for AIOs and uSFF PCs?

It depends on the TDP range, but one nice thing about an APU compared to a CPU + dGPU is that the APU can shift its entire TDP budget to either the CPU or the iGPU (a dCPU + dGPU cannot do this).

Think about how nice it would be to do Blender rendering purely on the iGPU (with the full TDP available) while having high-bandwidth access to system memory, rather than having to go over the PCIe bus like a dGPU would.

Likewise, imagine being able to give the full TDP budget to the CPU for OpenShot (or the full TDP budget to the iGPU for the HitFilm video editor*).

*Unlike OpenShot, which uses only the CPU for rendering, HitFilm does the opposite and uses only the GPU for rendering.
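The budget-shifting argument can be sketched as one shared package power limit divided between the two units. This is a hypothetical allocation policy written for illustration, not AMD's actual power management; the function name, the 65 W budget and the demand figures are made up.

```python
def split_package_budget(total_w, cpu_demand_w, gpu_demand_w):
    """Divide one shared package power budget between CPU and iGPU.

    Hypothetical policy for illustration: if both demands fit, each side
    gets what it asks for; otherwise the budget is shared in proportion to
    demand. Returns (cpu_w, gpu_w).
    """
    demand = cpu_demand_w + gpu_demand_w
    if demand <= total_w:
        return cpu_demand_w, gpu_demand_w
    return cpu_demand_w * total_w / demand, gpu_demand_w * total_w / demand

# Hypothetical 65 W APU:
print(split_package_budget(65, 90, 0))   # CPU-only render: (65.0, 0.0)
print(split_package_budget(65, 0, 90))   # GPU-only render: (0.0, 65.0)
print(split_package_budget(65, 40, 20))  # both fit: (40, 20)
```

With a discrete CPU and GPU, each part is held to its own separate limit, so an idle GPU's share cannot be handed to a CPU-only renderer, or vice versa.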

William Gaatjes

Lifer

- May 11, 2008

Makes me wonder: how about the EMIB die being the connection between the GPU and the HBM2 stack, while also containing the HBM2 logic-die circuitry?

> Makes me wonder: how about the EMIB die being the connection between the GPU and the HBM2 stack, while also containing the HBM2 logic-die circuitry?

It will be too expensive for most people to be willing to buy anyway.

PeterScott

Platinum Member

- Jul 7, 2017

> The CPU would have the dual channel DDR4 controller and the GPU die would have the HBM controller.
>
> So (for example) a person could have an 8C/16T APU laptop with 8GB/16GB/32GB DDR4 + 4GB HBM (total system memory 12GB to 36GB).

You just keep shifting your target. You are back to a bog-standard discrete CPU and discrete GPU, or at best something like Kaby Lake G, neither of which is anything like an APU/iGPU.

An APU/iGPU has the GPU and CPU on the same die. That is the whole point. You don't get to redefine the term when you use it incorrectly.

cbn

Lifer

- Mar 27, 2009

> An APU/iGPU has the GPU and CPU on the same die. That is the whole point. You don't get to redefine the term when you use it incorrectly.

That is not what AMD is planning with the Exascale APU processor:

http://www.computermachines.org/joe/publications/pdfs/hpca2017_exascale_apu.pdf

https://www.overclock3d.net/news/cp...a_exascale_mega_apu_in_a_new_academic_paper/1

PeterScott

Platinum Member

- Jul 7, 2017

> That is the thing.
>
> I was not talking about overclocking. Just the regular processor doing what it is supposed to do by default.
>
> Not everybody overclocks.
>
> And when they do, it takes effort, mostly financial effort.
>
> That makes the CPU costly, as you described above: an expensive motherboard, memory, cooling system and power supply.
>
> You can be baffled, but OEMs have to build machines and want to make a profit.
>
> It is hard to justify an expensive machine now that AMD has a good product that will do fine for most users.
>
> To turn back to the topic and look at AMD's Ryzen: the X370/X470 boards made for overclocking are generally more expensive than, for example, the B350 boards (and B450, when it finally appears?), which makes sense, of course. The A320 is the bottom of the barrel.

If you don't overclock, the 9900K, or whatever it is called, will still be the fastest CPU on a mainstream socket at stock, and it remains a great product.

I think your previous baffling statements are finally reconciled. It isn't about anything actually wrong with the 8 core CFL, it is about your preference for AMD. Got it.

CatMerc

Golden Member

- Jul 16, 2016

> If they have been binning and saving the top 1% that can hit 4.5GHz... then they can charge $399 for it.
>
> That makes financial sense to me.

I'm not talking about binning; I'm talking about going into the design, using the new 12nm libraries and working on the physical implementation. Pinnacle Ridge is simply Summit Ridge with 12nm transistors; it doesn't use the new libraries, nor does it do much, if anything, on the physical design optimization side. That would simply be too costly for the volumes AMD sells.

> On its own I agree. Except if there is a problem bringing 7nm to market. However, if AMD has decided to go ahead with it as Plan B, then releasing it, if it is ready, against Intel's 8-core may make sense, even if 7nm is on schedule. And as I noted before, AMD presumably needs to do the 12LP design work on the Zen+ CCX anyway for the APU refresh ("Picasso"). So there is substantial overlap there.

12nm transistors on their own already provide substantial power savings at the lower end of the frequency curve, where Raven Ridge lies. I suspect they won't do much more physical optimization than on Pinnacle Ridge, if any at all. That kind of work would matter more for raising Fmax.

> That is not what AMD is planning with the Exascale APU processor:
>
> http://www.computermachines.org/joe/publications/pdfs/hpca2017_exascale_apu.pdf
>
> https://www.overclock3d.net/news/cp...a_exascale_mega_apu_in_a_new_academic_paper/1

That's a long-term plan and not at all ready for any kind of real usage. You'll see it in enterprise-grade hardware long before you see it in consumer products.

> I do not really feel that Intel needs to release an 8 core at the moment. The 8700 and the 8700K do quite well in comparison to the 2700X, mainly because their base and boost clocks are so high.
>
> Also, to be in the same power envelope as AMD, Intel is going to have to lower the voltages and clocks. And then it will be difficult to sell this i9-9900 at a price that is interesting for customers.
>
> Only people who favor Intel above all else and against all reason would buy such a chip.
>
> When the 7nm series from AMD comes out, Intel is going to feel the heat and will no longer have any choice.

Have you actually looked at the power draw of the 2700X under full load? It can exceed 140W. Check The Stilt's testing, or Gamers Nexus. The 8700K under the same test draws 95W, so there is definitely TDP headroom there to add two more cores.

8 cores is important for Intel to not be usurped by AMD in the numbers game, and for the outright desktop performance crown in general. Intel hates being in second place, in case you haven't noticed.

> Have you actually looked at the power draw of the 2700X under full load? It can exceed 140W. Check The Stilt's testing, or Gamers Nexus.

So far I have seen no serious review that states 140W.

The heaviest load for Ryzen is not even Prime95 but actually x264 encoding, at which point the chip can draw as much as 120-125W, but certainly not 140W.

https://www.hardware.fr/articles/975-3/consommation-efficacite-energetique.html

As for an 8C CFL, there's not that much headroom, since the 8086K gets as high as 110-115W in Prime95 (with AVX2), the same as an 8700K.

https://www.computerbase.de/2018-06...ition-cpu-test/2/#abschnitt_leistungsaufnahme
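The disagreement about headroom can be checked with back-of-the-envelope arithmetic: take the 6-core power figures quoted in this thread and scale them linearly to 8 cores. This assumes identical clocks and per-core power and treats the whole package as scaling with core count; since uncore power does not really scale that way, the result likely overstates things somewhat. The function name is hypothetical.

```python
def naive_core_scaling_w(measured_w, measured_cores, target_cores):
    """Scale a measured package power linearly by core count.

    Assumes identical clocks and per-core power and treats the whole package
    as scaling with cores (uncore power does not really scale this way), so
    the result is a rough back-of-the-envelope figure, not a prediction.
    """
    return measured_w * target_cores / measured_cores

# 6-core figures quoted in the thread, scaled to a hypothetical 8-core CFL.
for label, watts in [("8700K, ~95 W", 95), ("8086K Prime95, 110 W", 110),
                     ("8086K Prime95, 115 W", 115)]:
    print(label, "->", round(naive_core_scaling_w(watts, 6, 8), 1), "W")
```

This gives roughly 127 W from the 95 W figure and roughly 147-153 W from the Prime95 figures, to set against the 120-125W (x264) and "can exceed 140W" numbers cited for the 2700X.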

William Gaatjes

Lifer

- May 11, 2008

> If you don't overclock, the 9900K, or whatever it is called, will still be the fastest CPU on a mainstream socket at stock, and it remains a great product.
>
> I think your previous baffling statements are finally reconciled. It isn't about anything actually wrong with the 8 core CFL, it is about your preference for AMD. Got it.

This is very cheap. I always take a critical view, and if a manufacturer does something I do not like, I will always write about it, no matter whether it is AMD, Intel or Nvidia.

I guess you looked at my signature and made assumptions based on that.

Before I buy any kind of hardware, I do some research into what I am going to do with it and how much I am willing to spend on it.

For my A10-6700 system, a few years ago I just needed a PC for moderate tasks, and it served that purpose well.

For my new R5 2600 system, I have more in mind. And so far, I do not regret buying it.

The system I game with does everything I want at 1080p with all graphics settings maxed out.

It does so easily, and it runs non-gaming software easily too.

It is obvious that you have this Intel/AMD bias. I do not. I never said that AMD is superior in every way or that Intel is not; that is your distorted view of reality.

If people want to build a gaming PC, are willing to spend, and ask me for advice, I always say buy the 8700K, delidded if possible, because it is the best you can get for gaming.

And for some other tasks as well, although I now mention the 8086K too.

If they tell me they want the fastest graphics card in general, I always recommend Nvidia's 1080 Ti.

But as I always say, it comes at a price.

AMD can provide a very good system that costs less, and in 90% of general use cases you will not notice the difference between AMD and Intel.

The whole point I was making in my post was not that Intel should not have an 8-core CPU at all. It is just that they already have so many CPUs to sell.

And I fear there will be another stream of reviews saying Intel is competing with Intel, while AMD is competing with Intel as well.

Alas, you can think what you like.

AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.