I'm not going to provide an opinion on made-up specs. I'm going to wait for the actual cards.

But hey, if anyone here is hoping for a miracle it's me. Let's hope at least one side delivers something compelling this coming round. :thumbsup:

But the whole idea of this thread is to provide opinions on made-up specs, soooooo....

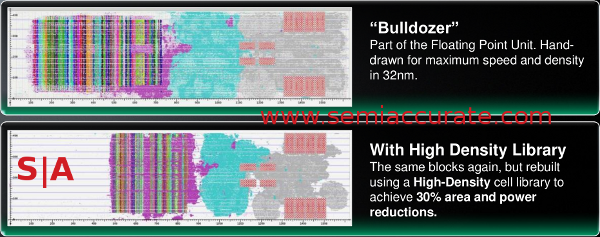

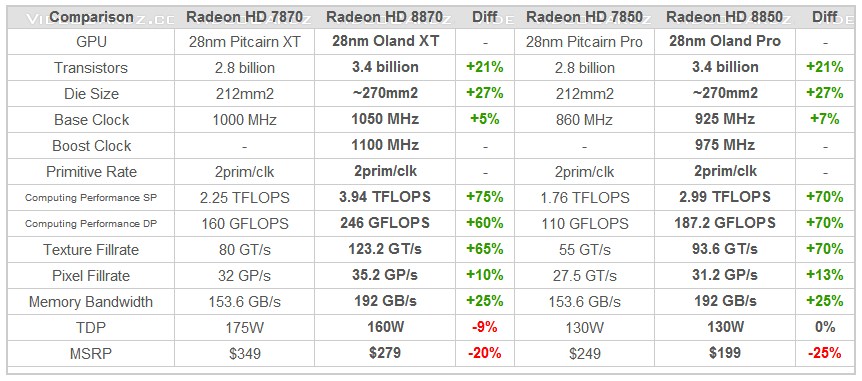

That being said, there is definitely room for AMD to squeeze this node dry, as evidenced by the extremely conservative clocks they put out with the 7xxx series. If, between tape-out and production, the node matured to the point where people were getting 20-40% overclocks, then there's really no telling where it will be by the end of the year.

And yet the glaring absence of the 7990 would indicate that it hasn't gotten THAT much better (not enough improvement to up the performance while lowering the power to put up a good fight against the 690).

I'm sure the jump will be slightly better than what we saw with the 40nm refresh (which was disappointing all around), but people are setting themselves up for disappointment if they think it's going to be a massive jump in performance on AMD's side.

I would cry tears of joy if AMD threw their "small die" strategy to the curb and went all in with monolithic again. They're finally neck and neck with NV again after all these years, so let's make it a good show!