The NVIDIA white paper treats a 50/50 split between ray tracing and rasterization as typical for hybrid rendering, which occupies about 80% of the total frame time.

Using DLSS as a representative DNN workload (purple), we observe that it takes about 20% of the frame time. The remaining 80% of the frame time is spent rendering (yellow).

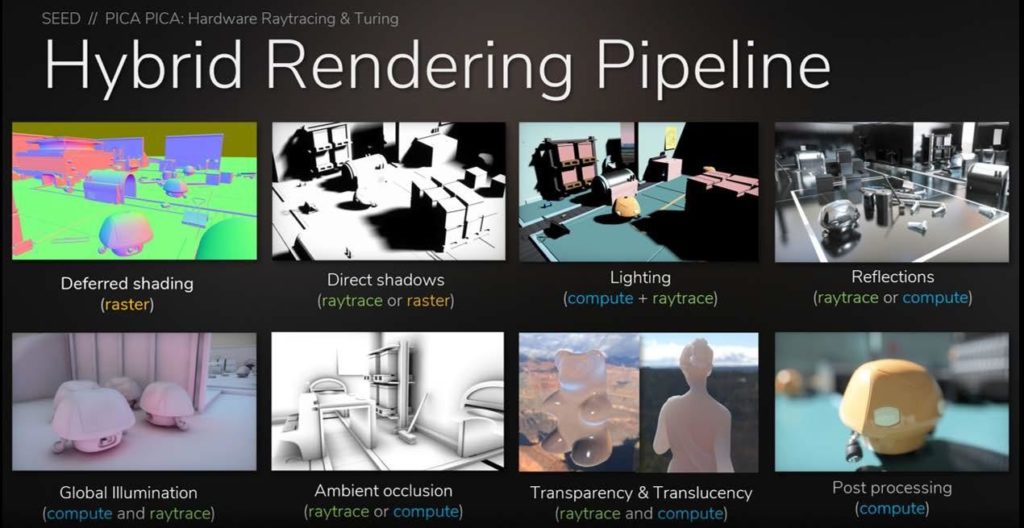

Of the remaining rendering time, some is spent ray tracing (green) and some is spent in traditional rasterization or G-Buffer evaluation. The exact split varies with content. Based on the games and demo applications we’ve evaluated so far, we found that a 50/50 time split is representative. So, in Figure 43, Ray Tracing is about half of the FP32 shading time. In Pascal, ray tracing is emulated in software on CUDA cores and takes about 10 TFLOPs per Giga Ray, while in Turing this work is performed on the dedicated RT cores, with about 10 Giga Rays of total throughput, equivalent to 100 tera-ops of compute for ray tracing.
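The 100 tera-ops figure follows from the two numbers quoted above: the software cost per Giga Ray multiplied by the RT core ray throughput. A minimal sketch of that arithmetic, where the function and constant names are illustrative and not taken from the white paper:

```python
# Figures quoted in the text above.
TFLOP_PER_GIGA_RAY = 10.0      # software ray tracing cost on CUDA cores (Pascal)
TURING_GIGA_RAYS_PER_S = 10.0  # RT core throughput quoted for Turing


def rt_equivalent_tera_ops(giga_rays_per_s, tflop_per_giga_ray=TFLOP_PER_GIGA_RAY):
    """Shader compute (tera-ops/s) needed to trace this many rays in software."""
    return giga_rays_per_s * tflop_per_giga_ray


print(rt_equivalent_tera_ops(TURING_GIGA_RAYS_PER_S))  # 100.0
```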

A third factor to consider for Turing is the introduction of integer execution units that can execute in parallel with the FP32 CUDA cores. Analyzing a breadth of shaders from current games, we found that for every 100 FP32 pipeline instructions there are about 35 additional instructions that run on the integer pipeline. In a single-pipeline architecture, these are instructions that would have had to run serially and take cycles on the CUDA cores, but in the Turing architecture they can now run concurrently. In the timeline above, the integer pipeline is assumed to be active for about 35% of the shading time.
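To get a feel for what the 35-per-100 instruction mix means, the sketch below compares issue slots on a single shared pipeline against two pipelines running side by side. It assumes perfect overlap and equal cost per instruction, which is an idealization rather than something the white paper states; the function name is hypothetical.

```python
def single_pipe_slowdown(int_per_100_fp32=35):
    """Ratio of serial issue slots to concurrent issue slots (idealized)."""
    fp32 = 100
    # Single pipeline: integer instructions take their own cycles.
    serial_slots = fp32 + int_per_100_fp32
    # Separate pipelines: the longer of the two streams sets the time,
    # assuming the integer work overlaps completely with FP32 work.
    concurrent_slots = max(fp32, int_per_100_fp32)
    return serial_slots / concurrent_slots


print(single_pipe_slowdown())  # 1.35
```

Under these assumptions the same shader needs about 1.35x as many issue slots when integer and FP32 instructions share one pipeline.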

Given this workload model, it becomes possible to estimate the usable ops in Turing and compare them with a previous-generation GPU that had only one kind of operation instead of four. This is the purpose of RTX-OPS: to provide a useful, workload-based metric for hybrid rendering.
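One plausible way to turn the workload model into a single number is a time-weighted sum of each unit's peak throughput. The sketch below derives the weights from the fractions given in the text (20% DNN, 80% rendering, ray tracing at half of rendering, integer work at 35% of rendering). Treating FP32 shading as active for the full rendering portion is an assumption, and the peak throughput values are hypothetical round numbers for illustration only, apart from the 100 tera-ops ray tracing figure quoted above.

```python
DNN_FRACTION = 0.20        # DLSS share of the frame (from the text)
RENDER_FRACTION = 0.80     # rendering share of the frame (from the text)
RT_SHARE_OF_RENDER = 0.50  # ray tracing is about half of shading time
INT_PER_FP32 = 0.35        # 35 integer instructions per 100 FP32


def workload_weights():
    """Fraction of the frame during which each unit is assumed active."""
    return {
        "tensor": DNN_FRACTION,
        "fp32": RENDER_FRACTION,  # assumption: FP32 busy for all of rendering
        "rt": RENDER_FRACTION * RT_SHARE_OF_RENDER,
        "int32": RENDER_FRACTION * INT_PER_FP32,
    }


def weighted_ops(peak_tera_ops, weights=None):
    """Time-weighted sum of peak throughputs, in tera-ops."""
    weights = weights or workload_weights()
    return sum(weights[unit] * peak_tera_ops[unit] for unit in weights)


# Hypothetical peaks (tera-ops); only "rt" comes from the text.
example_gpu = {"tensor": 100.0, "fp32": 10.0, "int32": 10.0, "rt": 100.0}
print(workload_weights())
print(round(weighted_ops(example_gpu), 1))
```

A GPU with only FP32 units would contribute through the `fp32` term alone, which is what makes the comparison across generations workload-based rather than a raw peak-FLOPS figure.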