It doesn't, but the discussion isn't limited to just consumer spending, is it?

So the point you are trying to make is that some XYZ NV card with ABC die size is performing well for its ABC die size and DEF power usage. Ok, that's nice but unless we are shareholders or electrical engineers, so what? Now tell me how that NV card stacks up in price/performance and overall performance against a $230 R9 290.

An after-market R9 290 such as the one I linked performs roughly like a reference R9 290X.

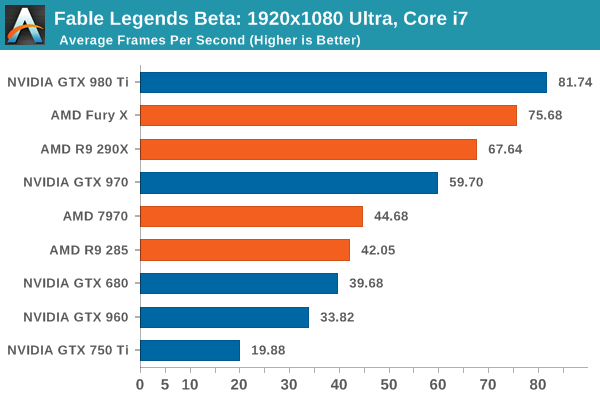

77% faster than a GTX960 at 1080P

84% faster than a GTX960 at 1440P

https://www.techpowerup.com/reviews/ASUS/R9_380X_Strix/23.html

Even if GTX960 had a 9mm2 die size and used 1W of power, at $170 is it a good buy for gaming against a $230 after-market R9 290? Absolutely not.
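To put rough numbers on that, here is a back-of-the-envelope sketch in Python using only the figures quoted above: a $170 GTX960 as the 1.00 baseline and a $230 after-market R9 290 at +77% (1080P) and +84% (1440P). The function name and the normalized baseline are my own illustration, not anything taken from the review.

```python
def perf_per_dollar(relative_perf, price_usd):
    """Relative performance (GTX960 = 1.00) divided by price in dollars."""
    return relative_perf / price_usd

# Figures quoted in the post; the 1.00 baseline is an assumption for illustration.
gtx960 = perf_per_dollar(1.00, 170)
r9_290_1080p = perf_per_dollar(1.77, 230)
r9_290_1440p = perf_per_dollar(1.84, 230)

# How much more performance per dollar the R9 290 delivers.
advantage_1080p = r9_290_1080p / gtx960 - 1
advantage_1440p = r9_290_1440p / gtx960 - 1

# How much more the R9 290 costs up front.
price_premium = 230 / 170 - 1

print(f"Price premium:            {price_premium:.0%}")
print(f"Perf/$ advantage (1080P): {advantage_1080p:.0%}")
print(f"Perf/$ advantage (1440P): {advantage_1440p:.0%}")
```

In other words, with these numbers the R9 290 costs about a third more but is still ahead on performance per dollar, on top of being much faster outright.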

GCN 1.0 parts don't support FreeSync, that's only 1.1 and up. So, 79xx/R9 280/X don't support it.

So from a feature set point of view, 380/380x are excellent replacements for 280/280x.

True, but in all honesty, I think FreeSync on R9 380/380X is a pointless marketing gimmick. Think about it: most gamers don't have GSync or FreeSync monitors. If you are going to go out and buy a FreeSync monitor today, chances are you will want an overall better monitor than what you currently have. To achieve that you'll want to get something decent, not some $199 mediocre TN 1080P panel. By this point I wouldn't be surprised if anyone who is upgrading from some older 19-23" 1080P 60Hz monitor is going 1080P 144Hz or 1440P 60Hz or 1440P 144Hz, etc. What are the chances someone is going to upgrade to such a FreeSync monitor and not have another $50 to step up to an R9 290/390?

If you are building a rig from scratch, then R9 380X makes even less sense. Let's say it costs you $800-1000 to build a new rig with a new monitor. Which would you take: an $800 rig with an R9 380X or an $860 rig with an R9 390/970? The higher the price of your rig, the less $50 extra for a much faster GPU matters.
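A quick sketch of that last point, using the $800 vs $860 example and the $800-1000 build range from above. The helper name and the $900 midpoint are just my own illustration.

```python
def extra_share(base_rig_price, gpu_upgrade_cost):
    """GPU step-up cost as a fraction of the base rig price."""
    return gpu_upgrade_cost / base_rig_price

# Step-up from the $800 rig (R9 380X) to the $860 rig (R9 390/970).
step_up = 860 - 800

# Share of the total build for rigs across the $800-1000 range.
shares = {rig: extra_share(rig, step_up) for rig in (800, 900, 1000)}

for rig, share in shares.items():
    print(f"${rig} rig: step-up adds {share:.1%} to the build cost")
```

The same step-up shrinks as a share of the build as the rig gets more expensive, which is the whole argument for not cutting the corner on the GPU.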

I keep hearing that there's no reason to buy a 380/380X with the 280/280X so cheap.

Well, here is a reason to have a GCN1.2 card.

I don't think anyone said there is no reason at all. The most obvious reason is that you simply cannot buy 280/280X in most countries in the world today at reasonable prices, which means R9 380/380X are the default choice on the AMD side.

Also, if someone can find an R9 380 for a good price ($115-125), that's a legitimate enough reason since some gamers cannot spend $200 on a 280X.

But let's put this in perspective a bit here.

R9 280X launched for $299 more than 2 years ago. Those models also often came with free games and XFX ones with lifetime warranty. Further, I remember distinctly that HD7970 1Ghz+ models like MSI TwinFrozr dropped as low as $270 on the release of R9 280X.

So let's see now - what's better for a PC gamer, to wait 2+ years to just get to R9 280X level of performance at $229-239 OR to have purchased an HD7970Ghz for $270-280 2 years ago? The opportunity cost of waiting 2 years to get to basically the same spot as an HD7970 1Ghz with some trivial HTPC features is too high.

You can bring all kinds of HTPC features into play but that's like putting lipstick on a pig because someone who paid $280-300 for an HD7970Ghz/R9 280X has enjoyed gaming on it for 2+ years already. What makes it even worse is that there have been sooooo many deals on after-market R9 290 cards for $250-260, it's mind-boggling. In that context, a $229 R9 380X isn't anything special.

Another major point is that anyone who was opportunistic and purchased an HD78xx/79xx card going way back to early 2012 could have been mining bitcoins. That means almost no matter how you slice it, there were plenty of opportunities to better time the purchase of an HD7970/7970Ghz/R9 280X/R9 290. If someone was an HTPC focused user, why wouldn't they have just bought a GTX960 almost a year ago? They would have, especially since many of us knew that R9 380X wouldn't have HDMI 2.0 or an evolved UVD.

It's easy to look at R9 380X and find 1-2 points that make it better than some of those AMD cards I mentioned earlier, but guess what, it's been almost 4 years since HD7970 came out. Compare a GPU from January 2008 to HD7970 of January 2012 (the same 4 years) and look at the dramatic difference in perf/watt, features, and VRAM. How does an R9 380X stack up compared to that? Meh. At this point the only reason R9 380X has a tiny amount of appeal isn't because it's a good graphics card for 2015, but simply because NV's 960 2GB-4GB are often even worse for gaming for the price. If we simply compare the time, context and how R9 380X stacks up against the 2-year-old R9 280X, the card is nothing but a disappointment.

I am not surprised because I truly believe that the new market sweet-spot for mid-range cards is the $290-350 segment moving forward. Prices have increased over time and our expectations are changing. Today the best 'value' cards are R9 290/290X/390/970, nothing below that segment, unless one is a casual gamer or finds a super deal.

It's still possible to purchase an R9 290 for $220 and $235 and those cards come with a free racing game. In that context, the R9 380X still makes no sense. Drop PowerTune to -20%, do a TIM swap, undervolt, and the reference R9 290 will still beat any R9 380X without much effort.

Also, let's not ignore that R9 290 still has 64 ROPs and massive amounts of memory bandwidth. How is R9 380X going to stack up against R9 290 in DX12 games? Probably not very well.

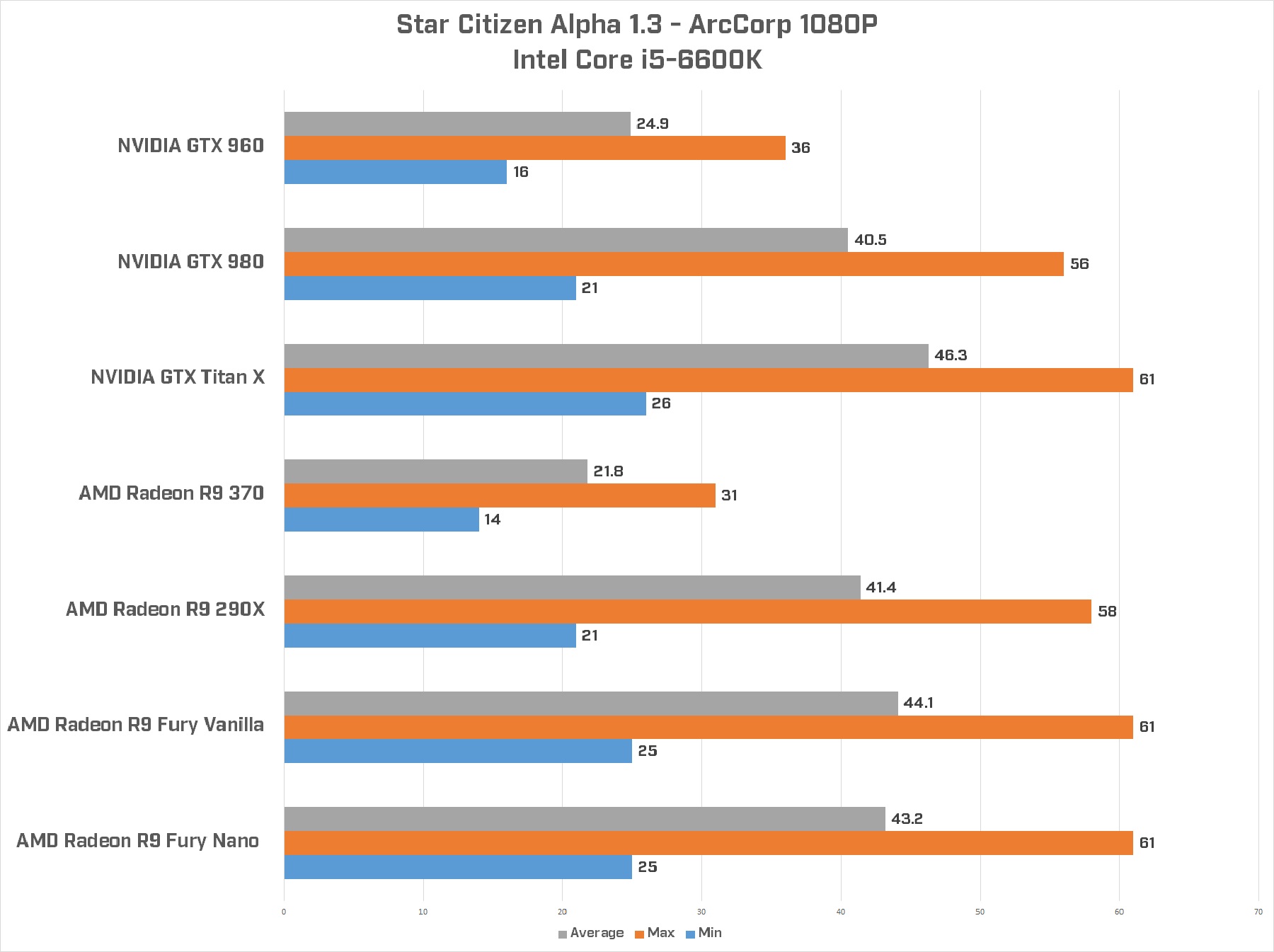

Early benchmarks for R9 290 series in Star Citizen highlight how this card is in a league of its own for price/perf.

Oh, and I actually missed that there is an after-market XFX IceQ Turbo R9 290 for $230 on Newegg. This card peaks in the low 70s C. RIP $230 R9 380X as long as that card is still for sale.