So what's your point? There'll always be poorly optimized titles, regardless of whether you play on consoles or PC. Nothing new about that. In the majority of multiplatform titles, you get a better gaming experience by playing on PC than you do on consoles. There's no cure for developer incompetence unfortunately. And most console titles still run at 30 FPS. 30 FPS is acceptable on consoles, but it has never been so for PC. Optimizing a game for 30 FPS is much easier than doing so for 60 FPS.

My point is this is yet another example of a poorly optimized and broken console-to-PC port. Sure, it has great graphics but the level of PC hardware it requires to match a $200 console is cringe-worthy. Just because a game is gorgeous-looking does not give it a waiver from being labelled poorly optimized when considering the context. My other point was a reflection that despite you consistently claiming that console hardware has little to no benefit over PC parts when it comes to extracting low-level performance, time and time again this has been proven wrong this generation. First, an i3 + GTX750Ti could play most XB1/PS4 games and generally an i5 and a GTX950/960 is needed just to provide a similar IQ/performance to XB1/PS4. What makes Forza Horizon 3 such a startling stand-out example is the horrendous level of performance on a PC with an i7 + GTX970/R9 390 at 1080p given the gigantic gulf in hardware advantage.

The other point you made that optimizing a game for 30 fps is much easier is unproven. First, at 1080p 4xMSAA, neither the 970 nor the 390 can provide a locked 30 fps. XBOne otoh manages to more or less do just that with a GPU 3X slower. Second, there is little doubt that once XB Scorpio comes out, this game may even run at 4K or 1080p 60 FPS, something that today requires a $700-1200 1080/Titan XP.

During the Xbox 360 and PS3 era (which was perhaps the longest console cycle ever), most games could easily be maxed out (or near maxed) with midrange hardware. I remember I had my GTX 580 SLI setup for about 3 years during those days, which is very rare for me. I didn't see much use in upgrading, as the games were so easy to max out.

Do you realize that NV themselves rated GTX580 as 9X faster than the GPU inside PS3?

Assuming 50-80% SLI scaling, your setup was between 9X (no SLI scaling) and 16X faster than PS3. There is nothing special about $1000 USD of 580 SLI destroying 90%+ of 2010-2011 console ports considering XB 360/PS3 came out in 2005/2006. I am not sure why you bring up the comparison of GTX580 SLI being adequate for end of generation Xbox 360/PS3 games and the current context of Quantum Break or FH3. In contrast, Forza Horizon 3 came out about 3 years after Xbox One did and it's wiping the floor with a $700 780Ti. You are saying that's legit?
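The 9X-16X range above is just the single-card figure multiplied by the SLI gain. A minimal sketch of that arithmetic, assuming (as the post does) that the second card adds a flat 50-80% on top of one card; the function name is made up for illustration:

```python
# Figure from the post: a single GTX 580 is rated ~9X the PS3's GPU.
SINGLE_580_VS_PS3 = 9.0

def sli_speedup(single_card_ratio, scaling):
    """Speedup of a two-card setup, where `scaling` is the fraction of
    extra performance the second card adds (0.0 means no SLI scaling)."""
    return single_card_ratio * (1.0 + scaling)

for scaling in (0.0, 0.5, 0.8):
    ratio = sli_speedup(SINGLE_580_VS_PS3, scaling)
    print(f"{scaling:.0%} SLI scaling -> {ratio:.1f}X PS3")
```

With these inputs the range works out to 9.0X, 13.5X and 16.2X.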

But when the PS4 and Xbox One hit the deck, suddenly PC gamers required more powerful hardware to get an above console experience.

You are just stating the obvious without providing any of the details. You actually set yourself up by claiming that GTX580 SLI didn't need to be upgraded until PS4/XB1 launched, but did you forget that a single GTX580 is much faster than the HD7790 in Xbox One? At 1920x1080 4xMSAA, GTX580 is 53% faster than HD7790.

https://www.computerbase.de/2013-03/amd-radeon-hd-7790-test/2/#abschnitt_leistung_mit_aaaf

Considering GTX970/390 cannot even do 1080p 4xMSAA 30 fps locked on the PC when paired with an i7 5820K @ 4.4Ghz, how do you think a GTX580 3GB would do? It would bomb.

But one could argue that is how it should be. This is the normal consequence of technological advancements in the gaming industry and PC hardware market.

Yes, it is how it should be except once again you are missing the details. It wasn't unusual for Xbox 360 or PS3 to have a similar level of graphics/performance to a 2005-2006 PC with a $599 7800GTX 256MB in it. That's because the GPUs inside those consoles were very similar in performance to that card. Did you know that GTX780 is almost 2.5X faster than HD7790?

https://www.computerbase.de/2013-10...80x-test/6/#abschnitt_leistungsratings_spiele

According to TPU, when using modern games, R9 390 and 970 are roughly equal at 1080p and 24-26% faster than GTX780. That means R9 390/970 are 247% x 1.24 = 306% or 3.06X faster than HD7790 (~ XBox One's GPU).

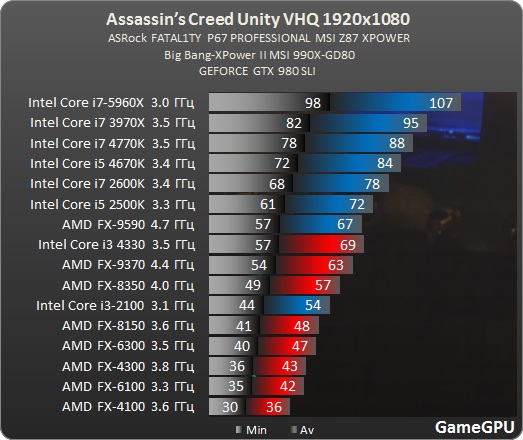
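The 3.06X figure comes from chaining two relative ratings from different sources, which is only a rough estimate since the two reviews use different game suites. A minimal sketch of the multiplication, using only the numbers quoted above (the helper name is illustrative):

```python
# Ratios quoted in the post.
GTX780_VS_HD7790 = 2.47              # ComputerBase rating: GTX 780 vs HD 7790
R9_390_VS_GTX780 = (1.24, 1.26)      # TPU: R9 390 / GTX 970 vs GTX 780 at 1080p

def chain_ratios(*ratios):
    """Multiply relative-performance ratios measured against a shared card."""
    result = 1.0
    for r in ratios:
        result *= r
    return result

low = chain_ratios(GTX780_VS_HD7790, R9_390_VS_GTX780[0])
high = chain_ratios(GTX780_VS_HD7790, R9_390_VS_GTX780[1])
print(f"R9 390 / GTX 970 vs HD 7790: {low:.2f}X to {high:.2f}X")
```

Using the upper 26% figure instead of 24% only moves the result from about 3.06X to about 3.11X, so the "roughly 3X" conclusion does not depend on which end of the TPU range is used.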

Even if we were to assume that 1.75Ghz Jaguar 8-core has identical IPC to the Bulldozer FX8150, its performance compared to an i7 5820K would be close to 4X lower in a well-threaded game engine.

FX8150 @ 3.6Ghz = 48 fps; scaled down by clock, 48 fps * (1.75Ghz/3.6Ghz) => 23-24 fps for Xbox One's CPU

3970X = 95 fps
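The CPU estimate can be reproduced the same way. This is a sketch under the post's own assumptions: Jaguar is given the same IPC as the FX8150, and frame rate is assumed to scale linearly with clock speed, which is generous to the console. The benchmark numbers are the ones quoted above; the variable and function names are illustrative.

```python
# Benchmark figures quoted in the post.
FX8150_FPS = 48.0
FX8150_CLOCK_GHZ = 3.6
JAGUAR_CLOCK_GHZ = 1.75
I7_3970X_FPS = 95.0

def scale_by_clock(fps, from_ghz, to_ghz):
    """Estimate fps at a different clock, assuming equal IPC and linear scaling."""
    return fps * (to_ghz / from_ghz)

jaguar_fps = scale_by_clock(FX8150_FPS, FX8150_CLOCK_GHZ, JAGUAR_CLOCK_GHZ)
gap = I7_3970X_FPS / jaguar_fps
print(f"Estimated Xbox One CPU: {jaguar_fps:.1f} fps, gap to 3970X: {gap:.1f}X")
```

That gives roughly 23.3 fps for the console CPU and a gap of about 4.1X, which is where the "4X" figure comes from.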

In summary:

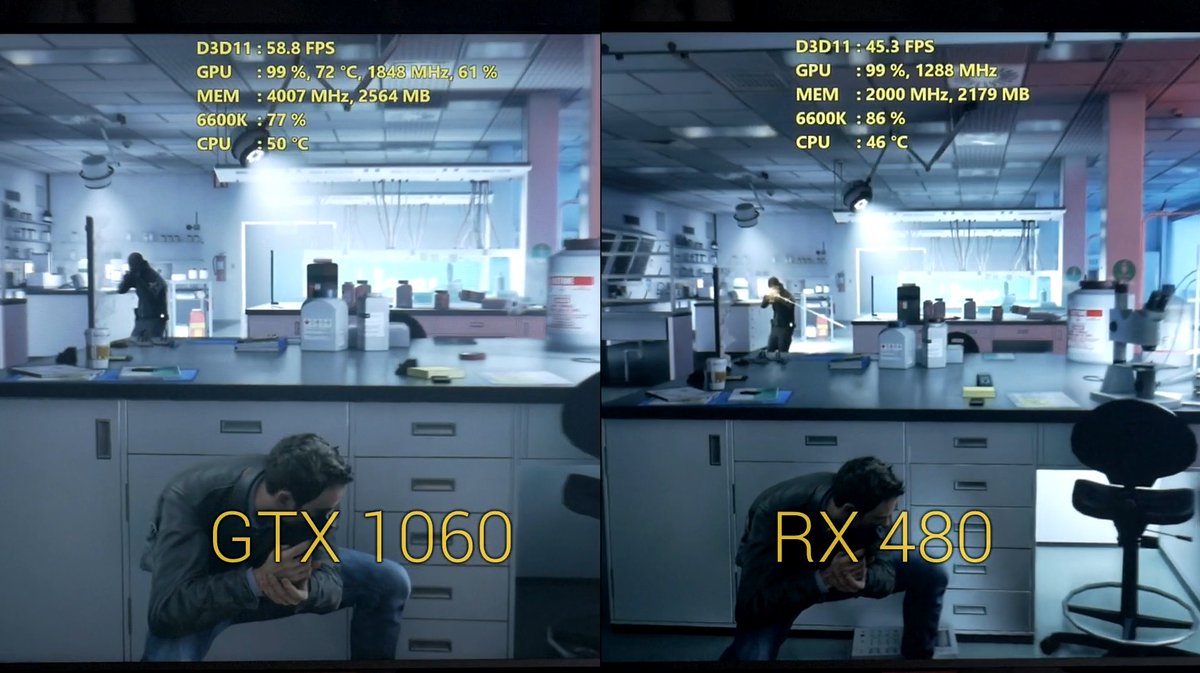

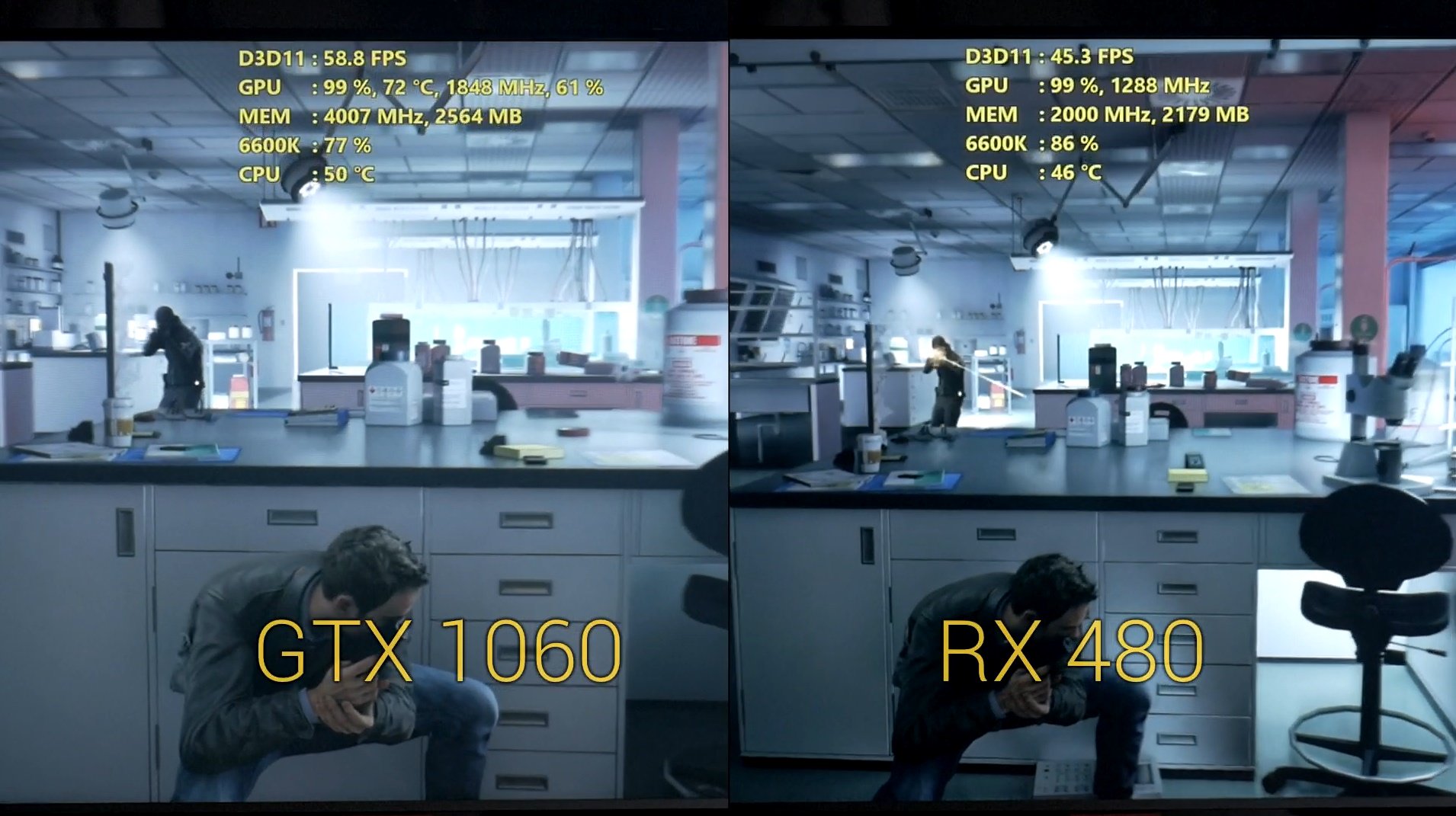

That means GTX970/390 is ~ 3X faster than Xbox One's GPU and i7 5820K at stock clocks would be 4X faster. However, per Digital Foundry's video, R9 390/970 are struggling and drop to low-40 fps range at 1080p on an i7 5820K OC without 4xMSAA.

Take VRAM for instance. During the Xbox 360/PS3 days, one could get away with having a GPU with only 1GB of VRAM, with 2GB perhaps being optimal. But with the PS4 and Xbox One, you need perhaps a minimum of a 3GB framebuffer in most titles to use the same textures as the consoles, and depending on whether the developer uses higher grade textures for the PC version, you could require as much as 8GB for the framebuffer.

This is irrelevant because R9 390 or RX 480 have 8GB of VRAM. You did not bother comparing the CPU and GPU performance differences - I did it for you above.

Is this an issue of optimization? No it isn't.

100% it is. Given the performance advantage a modern i5 6600K/6700K/i7 5820K (etc.) and R9 390/970 have over Xbox One, the game should run at least 2X faster. Since the game runs at 1080p 30 fps 4xMSAA on Xbox One, it should run at 1080p 60 fps 4xMSAA on PC hardware that's 3-4X faster if the port has been well-optimized.
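To put a number on how modest that expectation is, here is a rough sketch that asks what fraction of the raw hardware advantage the port would need to retain to hit 60 fps. It assumes frame rate scales linearly with the hardware ratio, which is a simplification, and the function name is made up for illustration:

```python
def required_efficiency(target_fps, console_fps, hardware_ratio):
    """Fraction of the raw hardware advantage a port must retain to reach
    target_fps, assuming fps scales linearly with hardware_ratio."""
    return target_fps / (console_fps * hardware_ratio)

CONSOLE_FPS = 30.0   # Xbox One at 1080p 4xMSAA, per the post
TARGET_FPS = 60.0

for ratio in (3.0, 4.0):
    eff = required_efficiency(TARGET_FPS, CONSOLE_FPS, ratio)
    print(f"{ratio:.0f}X hardware -> port needs {eff:.0%} of that advantage")
```

Under that assumption a port only needs to keep between half and two thirds of the hardware advantage to double the console's frame rate.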

It's just a consequence of technological advancement. That's what you don't seem to understand RS.

I disagree. You yourself have criticized how weak and under-powered modern consoles are and yet now you are reverting to the exact same thing I spoke about that "PC Master Race" does -- you justify the CPU/GPU demands because "It's expected that we should upgrade every 2-3 years." It would be totally different if this particular game ran at 720p 0xMSAA with medium settings on Xbox One and had trouble hitting 1080p 4xMSAA on an R9 390/RX 480/GTX1060.

When it comes to bad game performance, not everything can be explained by poor optimization.

In this case, it can be. 1 thread pegging modern i5/i7 CPU usage to 90-100%, horrendous performance on GPUs that are 2.5-3X faster than HD7790/XBox One's GPU. The developer needs to work closely with AMD/NV to improve the game's performance via patches and drivers. But I suppose AMD/NV wouldn't mind if 780Ti/GTX970/980 bombed against GTX1060 and for AMD if RX 480 was 40-50% faster than R9 390 (!).

Same thing with Kepler. NVidia misjudged the technological trajectory of the gaming market, and as a result, Kepler lost out big time compared to GCN due to its much weaker compute abilities. And yet many here like to blame NVidia for ceasing to optimize their drivers for Kepler, as though that could have prevented anything.

Except 2 things: (1) NV magically improved performance in Project CARS and The Witcher 3 on Kepler cards after Kepler owners complained -- so we have a proven history of NV's drivers adding huge performance gains post-launch; (2) Kepler's performance degraded dramatically against GCN during the same 1.5-2 year period when GCN and Maxwell remained a lot closer. Both Kepler and Maxwell have static schedulers and neither architecture was ever a compute monster. Neither of these architectures even has async compute and I don't recall much proof that Maxwell is a superior compute architecture to Kepler. Right now GTX780/780Ti trail R9 290/290X by 20-30% in modern titles.

Of course this has nothing to do with what we are talking about.

Generally speaking though, games are a lot more complex and sophisticated than they used to be, and thus require more hardware to get an "above console" experience. That's perhaps the biggest reason why people turn to PC gaming, alongside customization and modding.

You are trying to make an argument that older GPUs such as GTX780Ti/R9 290/290X/970 shouldn't play modern titles well at 1080p since technological advancement means future games get more demanding/complex and these GPUs/architectures were never meant to play those future titles well. This argument would be all fine and dandy EXCEPT that a 1.75Ghz 8-core Jaguar + HD7790 is miles behind GTX780/780Ti/970 GPUs in performance and is architecturally no better than Hawaii. Then how do you explain such mediocre performance on 970/R9 390? You are saying HD7790 is more advanced for modern game engines?

The good news though, is that DX12 should definitely help to lengthen the hardware cycles, as developers will be able to squeeze more performance out of the hardware than before. But when the Xbox Scorpio hits the deck, there will probably be another upheaval

Notice how Quantum Break, Forza Horizon 3 and Gears of War Ultimate all launched with performance issues? It's starting to form a trend that Xbox One exclusives are poorly optimized when ported to the PC.

Since the developer is already acknowledging stuttering and performance issues, I am surprised you are not acknowledging that FH3 has performance issues.