airfathaaaaa

- Feb 12, 2016


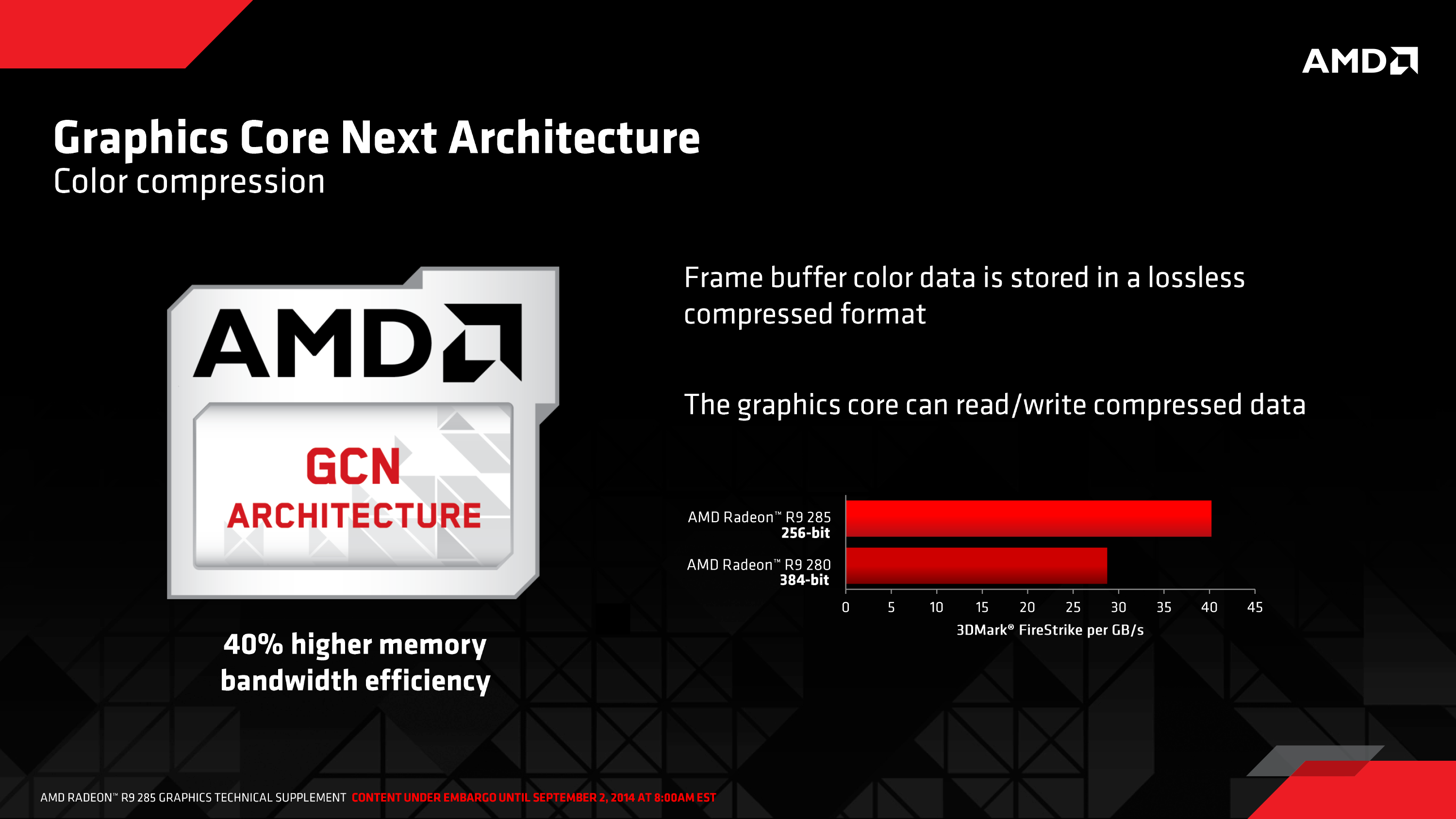

Yes, we can always find some cases. But let's be honest, it's not going to work without the bandwidth. And to say AMD doesn't gain from more memory bandwidth was outright silly.

When your ROPs are bottlenecking the card instead of the memory, overclocking the memory won't help, and neither will installing a bigger bus. This was the problem with the Fury line.
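As a rough illustration of the bottleneck argument, here is a toy model: attainable pixel throughput is the minimum of what the ROPs can write and what memory bandwidth can feed. The helper names (`rop_limit`, `bandwidth_limit`, `attainable_fill_rate`) and all the numbers are hypothetical. The model assumes one pixel per ROP per clock and a fixed number of bytes of memory traffic per pixel, which real GPUs (with color compression, caches, and varying blend formats) do not strictly follow.

```python
def rop_limit(rops: int, clock_mhz: float) -> float:
    """Peak fill rate in Gpixels/s, assuming one pixel per ROP per clock (simplified)."""
    return rops * clock_mhz / 1000.0


def bandwidth_limit(bandwidth_gbs: float, bytes_per_pixel: float) -> float:
    """Gpixels/s that memory can sustain, given bytes of traffic per pixel written."""
    return bandwidth_gbs / bytes_per_pixel


def attainable_fill_rate(rops: int, clock_mhz: float,
                         bandwidth_gbs: float, bytes_per_pixel: float) -> float:
    """Throughput is capped by whichever resource runs out first."""
    return min(rop_limit(rops, clock_mhz),
               bandwidth_limit(bandwidth_gbs, bytes_per_pixel))


if __name__ == "__main__":
    # Hypothetical card: 64 ROPs at 1000 MHz; compare stock vs +25% memory bandwidth.
    for bw in (512.0, 640.0):
        light = attainable_fill_rate(64, 1000.0, bw, 4.0)   # little traffic per pixel
        heavy = attainable_fill_rate(64, 1000.0, bw, 16.0)  # heavy blending/HDR traffic
        print(f"{bw:.0f} GB/s: light={light:.1f} Gpix/s, heavy={heavy:.1f} Gpix/s")
```

With the light workload the hypothetical card is ROP-bound, so 25% more bandwidth leaves it at 64 Gpix/s. With heavier per-pixel traffic it is bandwidth-bound, and the same increase helps (32 to 40 Gpix/s). Which case applies depends on the workload.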