Ubisoft titles have performance issues even on consoles. They prioritized CPU performance for this generation, despite the Jaguar cores being weak for the kind of world simulation they'd aimed for, and as a result you had things like AC:Unity, which was a hot mess at launch. Moreover, on the GPU front, no matter how many advancements they claim to have made, their game engines have made little progress in performing well on AMD hardware. With the possible exception of R6 Siege, Ubisoft has the poorest track record for AAA performance in this generation of consoles.

Honestly, I couldn't care less about the consoles. The CPUs in those things are anemic pieces of crap that shouldn't be anywhere near anything to do with serious gaming. That said, another reason games like AC Unity don't perform well on AMD hardware is that they have very high levels of geometry, which is AMD's weak point compared to NVidia.

Do you play CS:GO? You seem to be forgetting about the role the CPU plays at lower resolutions.

No, I don't, but the point is that a GPU's load shouldn't necessarily drop as the resolution drops, provided that the CPU can keep pushing the framerate to very high levels.
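As a rough illustration of that point, here is a toy model, assuming the delivered framerate is simply capped by whichever of the CPU or GPU is slower. The function names and every number are made up for the example; none of them are measurements of any real card or game.

```python
def delivered_fps(cpu_fps_cap, gpu_fps_at_res):
    """Framerate actually delivered: the slower side sets the cap (toy assumption)."""
    return min(cpu_fps_cap, gpu_fps_at_res)


def gpu_utilization(cpu_fps_cap, gpu_fps_at_res):
    """Fraction of the GPU's potential framerate that is actually used."""
    return delivered_fps(cpu_fps_cap, gpu_fps_at_res) / gpu_fps_at_res


# Hypothetical GPU: could render 300 fps at a low resolution, 120 fps at a high one.
GPU_FPS = {"low_res": 300.0, "high_res": 120.0}

for cpu_cap in (100.0, 300.0):  # hypothetical weak CPU vs. strong CPU
    for res, gpu_fps in GPU_FPS.items():
        load = gpu_utilization(cpu_cap, gpu_fps)
        print(f"CPU cap {cpu_cap:.0f} fps, {res}: GPU load {load:.0%}")
```

In this sketch the GPU only sits idle at the low resolution when the CPU cap is below what the GPU could deliver; with the faster hypothetical CPU, the load stays at 100% at both resolutions.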

If everything is the same as Fiji, of what use is this then?

Why are people so eager to know whether Vega has four Shader Engines or eight (based on the die shot mock-up), for example, if everything is the same as Fiji?

OK, I see what you mean. I just found it coincidental that Vega has the same ratio of shaders to ROPs and TMUs that Fiji has.

The drivers can't extract the same scaling going from Fury to Fury X that they can going from 390 to 390X, in the most well-optimized CryEngine game at the moment and in the best-performing multi-platform racing game. Do you believe that it's just a software scaling issue, or a hardware scaling issue? What you say is certainly true, that a detailed analysis is not possible by just comparing FPS numbers, but I'm inclined to believe that it's the latter.

To me it's a software scaling issue, caused by the drivers and the DX11 API. Dirt 4, like many DX11 games, seems to hammer the lead thread, while the other threads carry much smaller loads. This sort of behavior hurts AMD more than NVidia because AMD's drivers have higher overhead. That said, the benchmarks from the website you quoted seem to conflict with reports from other websites like PCgameshardware.de, which show a larger spread between Fury X and other AMD cards.

If there were nothing wrong with it in terms of hardware, there should be no reason for a Fury X to fall to RX 480 levels in certain instances.

The only case where Fury X would ever fall behind the RX 480 would be in very high geometry workloads. That's really what AMD's weakness is, if anything, and why they underperform relative to NVidia in massive open world games of the kind Ubisoft currently favors.

Assassin's Creed Origins might be DX12, so it will be interesting to see how it performs on AMD hardware.

It is precisely because you have to resort to low-level APIs and architecture-specific optimizations to get the best out of Fiji that I say it's unbalanced, which is the general consensus if you lurk around at B3D. Why do you think Fiji improves in performance relative to its direct competitor, the 980 Ti, as you increase the resolution? It's because it becomes less geometry bound and more pixel bound.

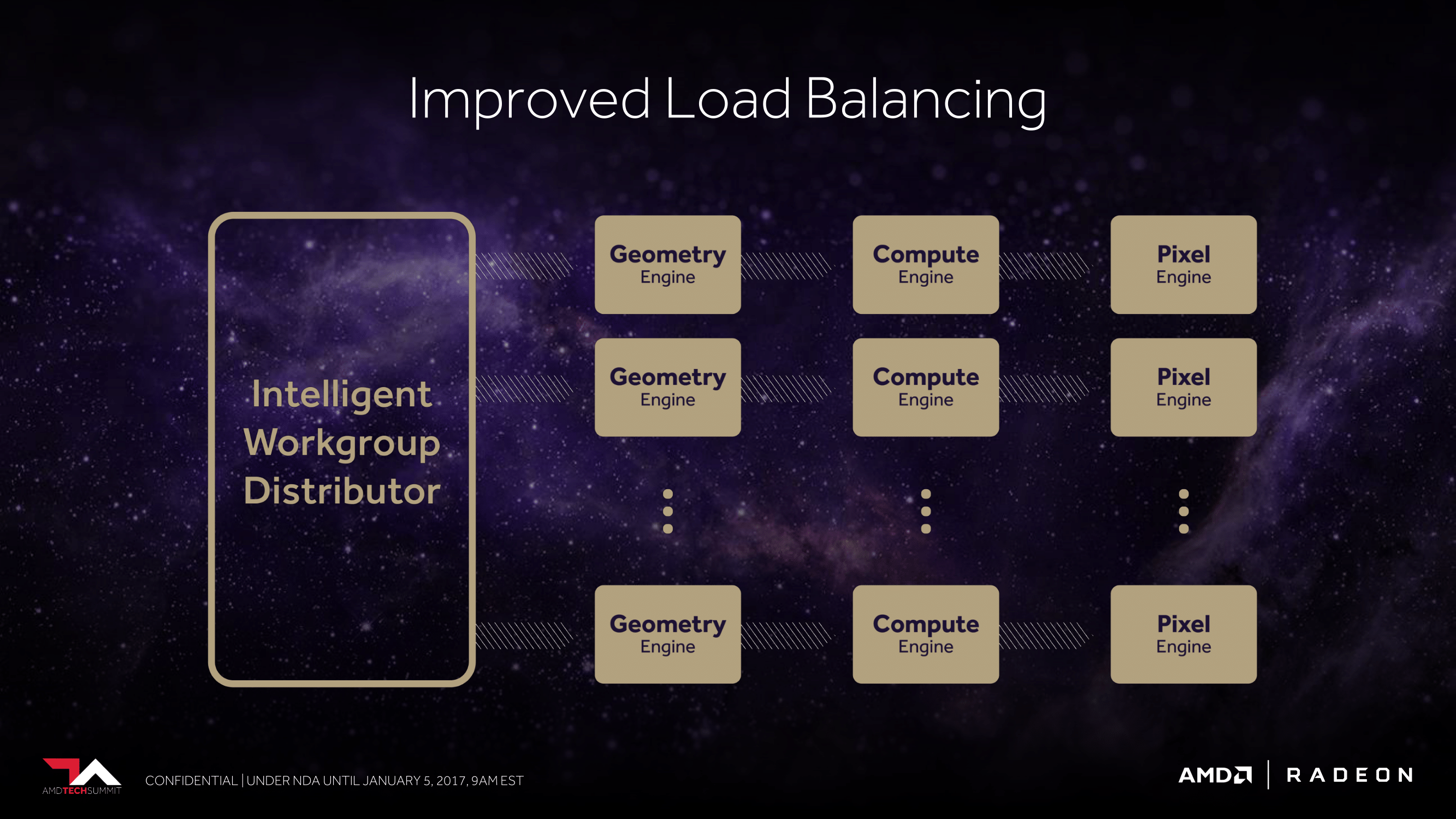
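The geometry-bound versus pixel-bound argument can be sketched with a toy frame-time model. It assumes the frame time is the larger of a resolution-independent geometry cost and a pixel cost that grows with pixel count, which is a simplification since real pipelines overlap the two. `CARD_A` and `CARD_B` are hypothetical cards with invented costs, not measurements of Fiji or the 980 Ti.

```python
RESOLUTIONS = {
    "1080p": 1920 * 1080,
    "1440p": 2560 * 1440,
    "2160p": 3840 * 2160,
}


def frame_time_ms(pixels, geometry_ms, ms_per_megapixel):
    """Toy model: the slower of the geometry stage and the pixel stage sets frame time."""
    pixel_ms = pixels / 1e6 * ms_per_megapixel
    return max(geometry_ms, pixel_ms)


# Hypothetical cards (invented numbers):
# A is slower at geometry but faster per pixel; B is the reverse.
CARD_A = {"geometry_ms": 10.0, "ms_per_megapixel": 2.0}
CARD_B = {"geometry_ms": 6.0, "ms_per_megapixel": 2.5}


def relative_performance(pixels):
    """Framerate of card A divided by framerate of card B at a given pixel count."""
    return frame_time_ms(pixels, **CARD_B) / frame_time_ms(pixels, **CARD_A)


for name, pixels in RESOLUTIONS.items():
    print(f"{name}: card A runs at {relative_performance(pixels):.2f}x card B")
```

With these made-up costs, card A is geometry bound at the lower resolutions and trails card B, then pulls ahead once both cards become pixel bound at the highest resolution.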

I agree with you here. If anything is unbalanced about Fiji (or AMD cards in general, really), it's the lack of geometry prowess. Unfortunately, I don't think that Vega will really remedy this issue, judging by the benchmarks that PCgameshardware.de recently did of the Vega Frontier model. In AC Syndicate (which has very high levels of geometry), the performance is around that of a GTX 1070. RX Vega will be faster, but it will likely not catch up to a GTX 1080 in these kinds of workloads.