wilds

Interesting! Downloaded the .ini's and saves and set my GPU name correctly. Hope this is better!

Spec: 5775C @ 4.2 GHz | 3.8 GHz cache | 1.9 GHz L4 | 32GB 4x8GB 2400 MHz CL10 | 1080 ti

Corvega:

View attachment 60657

49.1 fps - 13,358 draw calls

Diamond City:

View attachment 60658

63.7 fps - 8237 draw calls
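For a rough sense of how much draw-call work the CPU gets through in each scene, the two results above can be turned into frame times and draw calls per second. This is only back-of-the-envelope arithmetic on the posted numbers; the function name `scene_stats` is made up for illustration.

```python
def scene_stats(fps, draw_calls_per_frame):
    """Return (frame time in ms, draw calls submitted per second)."""
    frame_time_ms = 1000.0 / fps
    draw_calls_per_second = fps * draw_calls_per_frame
    return frame_time_ms, draw_calls_per_second


# Numbers from the two results above (5775C + 1080 Ti).
results = {
    "Corvega": (49.1, 13358),
    "Diamond City": (63.7, 8237),
}

for scene, (fps, calls) in results.items():
    ms, per_sec = scene_stats(fps, calls)
    print(f"{scene}: {ms:.1f} ms/frame, {per_sec:,.0f} draw calls/s")
```

By this measure Corvega pushes more draw calls per second despite the lower frame rate, though draw calls are only one part of the CPU load.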

Now on the 5800X3D thread, when Broadwell was brought up, someone said that the L4 isn't necessarily that fast, but I'm unsure whether they meant latency, bandwidth, or both.

Okay, but while AT's benching uses JEDEC memory, which is unfair to (especially) newer CPUs, there is something about Civ6 which really loves Broadwell C. And thanks to @ZGR we can say that the same isn't really the case for Fallout4 (and by implication Skyrim SE etc.). Would still like to see Civ6 480P benches for Ryzen 5800X3D eventually.

That was probably me, and I'm pretty sure it's both.

i7-5775C L4 cache performance

Hi guys, can anyone clarify the huge latency (as I see it) of L4 cache obtained on that system: My dummy expectations were for 20ns, why 42ns? In my view L4 performs poorly - only 20ns better than RAM.

forums.aida64.com

Plenty of Comet Lake and Coffee Lake systems have DDR4 setups outperforming that L4.

Well, credit where credit is due, MajinCry created these bench threads and came up with the saves.

For me, Corvega is just the first step. I get lower FPS in Faneuil Hall with ultra shadow settings than in Corvega, and large settlements make both loads look like a walk in the park. But it's good progress.

A simple look at threading using Process Explorer showed me one thread near 100% and a second one at 70% or so. One might be draw calls, the other NPCs but it's probably not that simple.

We can always hope that Bethesda fix their engine to scale better, but I'm not holding my breath.

1. FO4 does not seem to benefit exceptionally from extra vcache. 5800x3d is not stomping 12600k/12900k.

3. FO4/Skyrim seem to benefit more from single thread perf increases from gen to gen. If I look at TPU game averages at 720p, I'd assume my old 5ghz 7600k -> 5600x would be an 8% increase in FPS, and 5600x -> 12700k/5800x3d 15%. Those numbers turned out to be 30% and seemingly around 50% here for the latter. So reviewer game averages, even at low resolution, seem to consistently underestimate the gen on gen benefits in FO4.
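To put the gap in point 3 into one number, the two generational steps can be compounded. This is just a sketch using the percentages quoted above (the TPU figures as read by the poster, and the rough FO4/Skyrim figures from this thread); `compound` is a hypothetical helper, not anything from a benchmarking tool.

```python
def compound(*gains_pct):
    """Combine successive percentage gains into one overall percentage gain."""
    total = 1.0
    for gain in gains_pct:
        total *= 1.0 + gain / 100.0
    return (total - 1.0) * 100.0


# 7600k @ 5 GHz -> 5600x -> 12700k/5800x3d
expected = compound(8, 15)   # from the TPU 720p averages quoted in the post
observed = compound(30, 50)  # rough FO4/Skyrim numbers quoted in the post

print(f"expected from review averages: +{expected:.1f}%")
print(f"observed in FO4/Skyrim:        +{observed:.1f}%")
```

Taken at face value, the two steps compound to roughly +24% from review averages versus roughly +95% in these games, which is the underestimate the post is describing.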

Yes it does. Look at the 5700x/5800x results - the cache increases performance on the 5800x3d by almost 40% to 60%, although its boost clock (4.5ghz) is lower than that of the 5700x/5800x (4.6ghz/4.7ghz). Because of the age of the engine and the age of the game (2015), at least some of the modern Intel CPUs do benefit from some optimisations (Skylake) - a highly tuned Alder Lake CPU is only about 20% to 30% better than a highly tuned Skylake derived CPU.

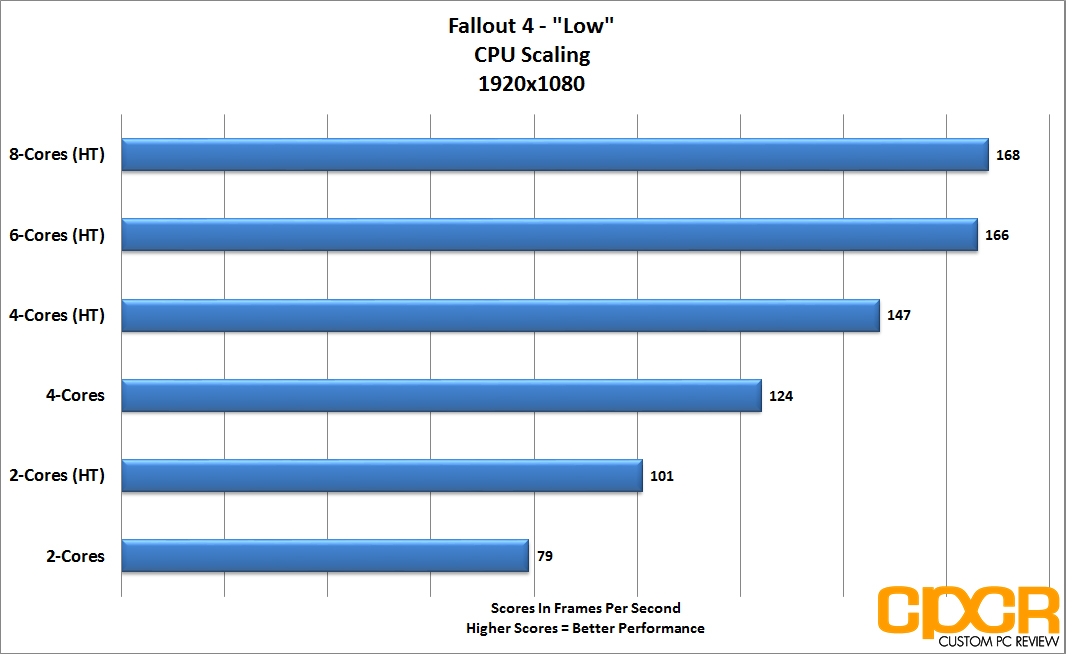

Also Fallout 4 can use more than four threads (but not very well):

Fallout 4 PC Performance Analysis | Custom PC Review

www.custompcreview.com

Ok that's interesting.

Can someone point me to these infamous save files and the .ini? No time to do a thorough search, but the test seems quick enough and I'd like to test my 2700k@5Ghz+3060ti vs the 8600K@5Ghz+1070 for the lolz.

Also has anyone tested with DXVK?

In my experience, with controlled testing for Oblivion/SSE and going off of memory with FO4 (so less reliable), 5600x was 30% faster than 7600k @ 5ghz. I didn't do controlled testing for FO4 regrettably so I'm not going to say my recollection MUST be right. Let me rephrase your points to see if I'm understanding what you are saying.

1. 12600k is getting 95FPS.

2. Skylake would be getting 76fps (95/1.25).

3. Skylake outperforms 5600x. (Hard to accept 5600x loses to Skylake in FO4 when my 5600x beat my old 5ghz 7600k by 30% in SSE/Oblivion. Somewhere along the lines here things aren't making sense for me.)

4. Kaby Lake would be getting 84fps (76*(5/4.5)). Alder Lake would be only 13% faster than Kaby Lake. So then 9900k offered ~0% perf increase over 7600k.
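The chain of estimates in points 1 to 4 can be written out as below. It is a sketch of the poster's reasoning only: the 1.25 factor is the midpoint of the "20% to 30%" claim, the 4.5 GHz Skylake clock is taken from the `5/4.5` term, and FPS is assumed to scale linearly with clock speed, which is a simplification. The function name is hypothetical.

```python
def estimate_chain(alder_fps=95.0, alder_vs_skylake=1.25,
                   skylake_ghz=4.5, kaby_ghz=5.0):
    """Reproduce the estimate: Alder Lake FPS -> Skylake FPS -> Kaby Lake FPS."""
    skylake_fps = alder_fps / alder_vs_skylake
    # Assumes FPS scales linearly with clock speed (a simplification).
    kaby_fps = skylake_fps * (kaby_ghz / skylake_ghz)
    alder_over_kaby_pct = (alder_fps / kaby_fps - 1.0) * 100.0
    return skylake_fps, kaby_fps, alder_over_kaby_pct


skylake_fps, kaby_fps, gap_pct = estimate_chain()
print(f"Skylake estimate:   {skylake_fps:.1f} fps")
print(f"Kaby Lake estimate: {kaby_fps:.1f} fps")
print(f"Alder Lake lead over Kaby Lake: {gap_pct:.1f}%")
```

Without rounding, the Kaby Lake estimate is about 84.4 fps and the Alder Lake lead about 12.5%; the 13% in point 4 comes from rounding down to 84 fps first.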

As for multicore usage:

Running around FO4 is one thing. Corvega (and the worst parts of FO4 to run) are another. We can try to test this by turning off 2 cores. If Fallout4 did use more cores even in Corvega and disabling cores caused a decrease in FPS, that is not the case for Skyrim and definitely not the case for Oblivion. The perf increase 7600k->5600x was 30% for Oblivion and Skyrim so it can't be cores. Or perhaps the situation in Fallout4 is very different from Skyrim/Oblivion even if they showed a similarly larger than expected performance improvement. If more cores helped FO4 Corvega, it makes no sense to me to have Kaby Lake be on par with 9900k.

Or an alternative explanation:

I hallucinated FO4 gains but did get 30% gains in SSE/Oblivion, when in fact FO4 was not any faster on 5600x and actually slower. Hard pill for me to swallow but possible.

Thanks for the reply. I'm going to think about whether I should get 5800x3d or wait for next gen or both for a long time now. :^)

I’m going to have to look into this SSE oblivion. Thanks for the heads up.

FYI on my 3900x I did test performance by dropping cores. I used Process Lasso to remove them from Fallout in real time. The fps numbers became less stable, with worse lows, before I saw a direct loss of max fps.

So the performance loss with fewer cores is there, but it's not going to show up in a screenshot of the fps at one moment in time. We would need a frame time graph to get an accurate impression of smoothness and performance.
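As an illustration of why a single fps reading hides this, here is a minimal sketch that summarises a list of frame times into an average and a "1% low". The frame times are made up, not measurements from this thread, and the 1% low definition used here (average of the slowest 1% of frames) is only one of several in use; capture tools differ.

```python
import statistics


def fps_summary(frame_times_ms):
    """Return (average FPS, 1% low FPS) for a list of frame times in ms."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    avg_fps = 1000.0 / statistics.mean(frame_times_ms)
    # 1% low here = FPS over the slowest 1% of frames (at least one frame).
    slowest = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest) // 100)
    low_fps = 1000.0 / statistics.mean(slowest[:count])
    return avg_fps, low_fps


# Hypothetical runs: nearly the same average, very different smoothness.
steady = [16.0] * 200
spiky = [15.5] * 196 + [40.0] * 4

for name, run in (("steady", steady), ("spiky", spiky)):
    avg, low = fps_summary(run)
    print(f"{name}: avg {avg:.1f} fps, 1% low {low:.1f} fps")
```

Both made-up runs average about 62.5 fps, but the spiky one has a 1% low of 25 fps, which is the kind of difference a one-moment screenshot cannot show.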

Here are my results on 2700k+3060ti with the MSI Afterburner OSD on top (it doesn't seem to affect performance).

However, I am not sure I did everything correctly, because if you launch the fallout.exe with the provided prefs.ini, the game launches in a tiny window and you can't see jack. So I used the launcher and set full screen 1080p ultra settings. Hope this will suffice.

Will try to test my 8600k+1070 out of curiosity, during the week.

Oh and dxvk does not work with this game unfortunately. Yes I deleted all enb related files. I just wanted to get a similar screenshot without the enb data of course, just to see the ballpark difference, in msi ab data.

For fun I am stress testing AI spawning, which is what I did on the 5775C as well. The 5775C crashed with around 9x more human AI. The 5800X3D is doing over 20x human AI and the game is not crashing!