- Jul 28, 2015


The most significant hit to framerates in large games, open world games especially, comes from excessive draw call counts. And this isn't a new thing; as far back as 2006 with Oblivion, performance would utterly die during rainy weather, as NPCs would take out their light-casting torches. This is due to the game using Forward Rendering, where the entire scene is drawn once and then redrawn once more for every active light. So if the game would normally issue 2,000 draw calls, it would now be making 8,000 draw calls (2,000 × 4 passes) should three NPCs equip their torches.

Luckily, game developers came up with Deferred Rendering, where each extra light only costs work for what it actually affects, instead of a redraw of the whole scene. This reduces draw calls significantly. If we took the above scene and somehow swapped Oblivion's renderer for a deferred one, we'd see, for example, 2,030 draw calls should those three NPCs have their torches out.
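To put numbers on the two examples above, here is a rough sketch of the arithmetic. The function names are made up for illustration, and the figure of 10 extra draw calls per light is purely an assumption, picked so that three torches land on the 2,030 example; real renderers will vary.

```python
def forward_draw_calls(base_calls: int, lights: int) -> int:
    """Forward model from the post: one base pass plus one full pass per light."""
    return base_calls * (1 + lights)


def deferred_draw_calls(base_calls: int, lights: int, calls_per_light: int = 10) -> int:
    """Deferred model from the post: one scene pass plus a few calls per light.

    calls_per_light is an assumed value, not a measured one.
    """
    return base_calls + lights * calls_per_light


if __name__ == "__main__":
    base, torches = 2_000, 3
    print("forward: ", forward_draw_calls(base, torches))
    print("deferred:", deferred_draw_calls(base, torches))
```

The point is the shape of the growth: the forward count multiplies with the number of lights, while the deferred count only adds a small amount per light.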

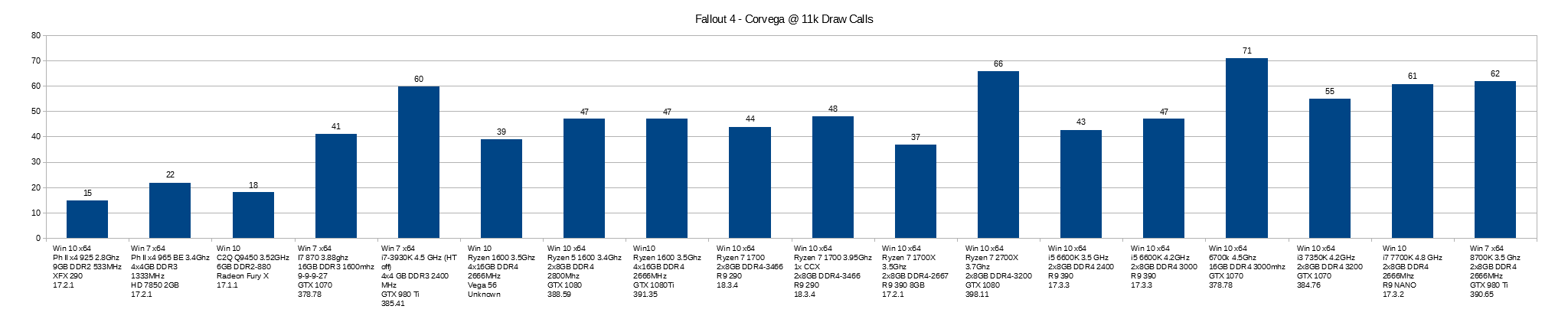

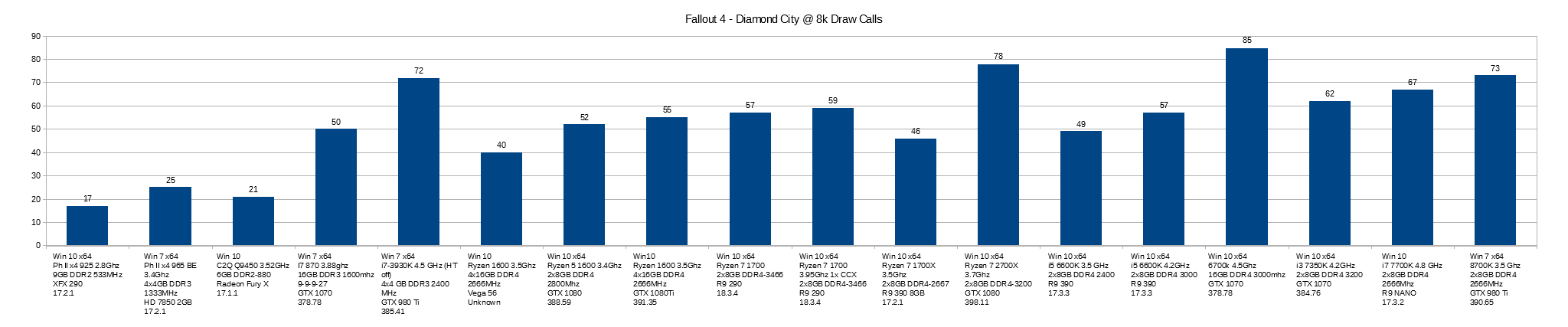

But even deferred rendering is not enough these days. In Fallout 4, there are areas where your framerate will plummet. And why is this? Draw calls. The worst offenders are player-made settlements, where draw calls can reach 20,000. But those aren't part of the game in and of itself, so instead I picked two particularly problematic areas: Corvega and Diamond City. The former because it issues the most draw calls of any area in Fallout 4 (11,000), and the latter because it issues many draw calls (8,000) while also having NPCs interacting with each other.
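To get a feel for why these counts hurt, here is a back-of-the-envelope sketch of how much CPU time each draw call can take if you want to hold 60fps. It assumes, optimistically, that the whole frame time is available for submitting draw calls on a single thread, so treat the result as an upper bound; `per_call_budget_us` is a name made up for this example.

```python
def per_call_budget_us(draw_calls: int, target_fps: float) -> float:
    """Upper bound on CPU microseconds available per draw call at target_fps."""
    frame_time_us = 1_000_000 / target_fps  # microseconds per frame
    return frame_time_us / draw_calls


if __name__ == "__main__":
    # Draw call counts quoted in the post.
    scenes = [("Corvega", 11_000), ("Diamond City", 8_000), ("Large settlement", 20_000)]
    for name, calls in scenes:
        budget = per_call_budget_us(calls, 60)
        print(f"{name}: {budget:.2f} us per draw call at 60fps")
```

For Corvega that works out to roughly 1.5 microseconds per draw call, and that is before the game does anything else with the frame.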

And here are the results, from an array of systems. First is the worst offender, Corvega:

Second is Diamond City:

We see a couple of very interesting things. The first is that Fallout 4 loves fast RAM more than anything else. The second is that Nvidia's driver gives around 30% higher framerates than AMD's, no matter the CPU architecture. For Ryzen to pull 60fps in Fallout 4's intensive scenes, blazing fast DDR4 is required, as is an Nvidia card. An Intel xLake CPU can just about hold 60fps when partnered with an AMD card, but when partnered with an Nvidia card, the game can reach above 70fps in these draw call limited scenes.

When paired with a Ryzen processor, the difference in driver overhead is especially pronounced, with 50fps being a hard-to-reach target when partnered with an AMD GPU. But an Nvidia one? And with fast RAM? Ryzen then appears to give the xLake architecture a run for its money. Outside of that particular configuration, however, Ryzen is sorely lacking in draw call performance.

Edit1: This behaviour also lines up with the results from Part 2, where we saw Ryzen performing around 25% slower than Skylake in a draw call benchmark. With Fallout 4, we see Ryzen performing 21-23% slower than Skylake in draw call intensive scenes.

The most significant hit to framerates in large games, open world games especially, is caused by excessive draw call counts. And this isn't a new thing; as far back as 2006 with Oblivion, performance would utterly die during raining weather, as NPCs would take out their light-casting torches. This is due to the game using Forward Rendering, where the entire scene is redrawn for as many times as there are active lights. So if the game would only issue 2,000 draw calls, it would now be making 8,000 draw calls should three NPCs equip their illuminating torches.

Luckily, game developers invented Deferred Rendering, where only the objects a light affects are redrawn. This reduced draw calls significantly. If we take the above scene, and somehow swapped Oblivion's renderer for a deferred one, we'd see, for example, 2,030 draw calls should those three NPCs have their torches out.

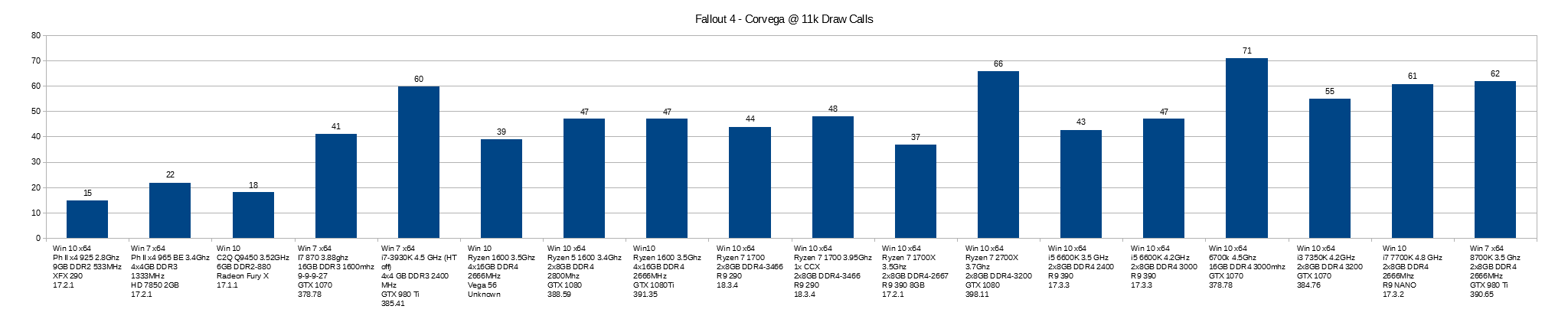

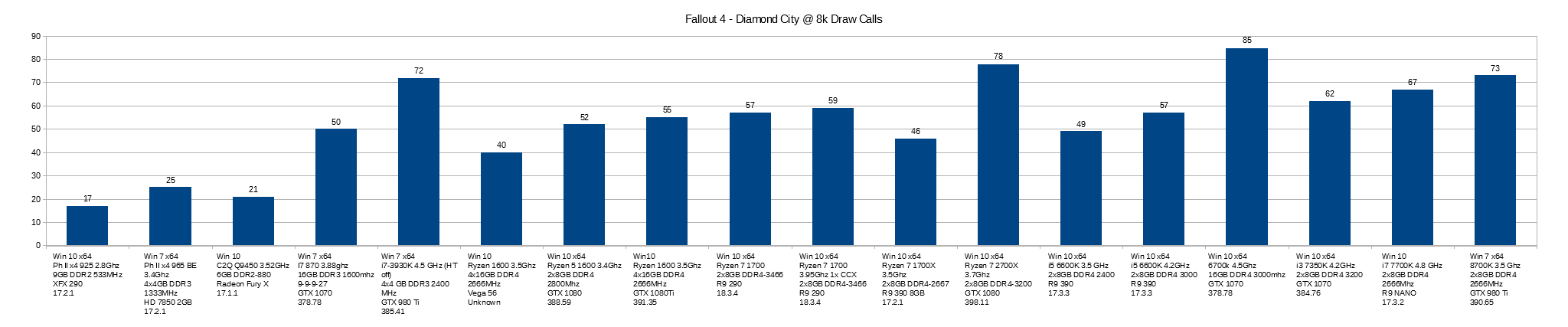

But even deferred rendering is not enough these days. In Fallout 4, there are areas where your framerate would plummet. And why is this? Draw calls. The worst offender is in player-made settlements, where the draw calls can reach 20,000 in number. But that's not part of the game in and of itself, so instead, I picked two particularly problematic areas: Corvega, and Diamond City. The former due to it issuing the most draw calls out of any area in Fallout 4 (11,000 draw calls), and the latter due to it issuing many draw calls (8,000) while also having NPCs interacting with each other.

And here are the results, from an array of systems. First is the worst offender, Corvega:

Second, is Diamond City:

We see a couple very interesting things. The first, is that we see Fallout 4 loves fast RAM more than anything else. The second, is that NVidia's driver has around 30% higher framerates than AMD's, no matter the architecture. For Ryzen to pull 60fps in Fallout 4's intensive scenes, blazing fast DDR4 is required, as is an NVidia card. An Intel xLake CPU can just about keep at 60fps when partnered with an AMD card, but when partnered with an NVidia card, the game can reach above 70fps in these draw call limited scenes.

When paired with a Ryzen processor, the difference in driver overhead is especially pronounced, with 50fps being a hard to reach target when partnered with an AMD GPU. But an NVidia one? And with fast RAM? Ryzen then appears to give the xLake architecture a run for it's money. Outside of that particular configuration, however, and Ryzen is sorely lacking in draw call performance.

Edit1: This behaviour also coincides with the results from Part 2, where we saw Ryzen performing around 25% slower than Skylake in a draw call benchmark. With Fallout 4, we see Ryzen performing 21-23% slower than Skylake when in draw call intensive scenes.
