nVidia didn't seem to have the slithers. What can create the slithers?

The slithers are the result of tearing because vsync is off. You see them on the nVidia screens, too.
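
To see why tearing leaves thin slivers, here is a rough Python sketch. It assumes (hypothetically) that the monitor scans its rows out evenly over one refresh, ignores blanking time, and that you know the time each new frame was flipped in. The names `visible_scanlines`, `REFRESH_HZ` and `LINES` are made up for illustration. A frame that gets replaced half a millisecond after it appears only covers a few dozen rows.

```python
# Sketch: how many scanlines each frame occupies on screen when vsync is off.
# Hypothetical assumptions: the display scans out LINES rows evenly over one
# refresh period, vblank time is ignored, and flip_times_ms holds the moment
# each new frame replaced the previous one.

REFRESH_HZ = 60  # hypothetical refresh rate
LINES = 1080     # hypothetical vertical resolution


def visible_scanlines(flip_times_ms, refresh_hz=REFRESH_HZ, lines=LINES):
    """Return the approximate number of scanlines each frame covers.

    A frame is on screen from its own flip until the next flip, so its share
    of the scanout is that interval divided by the refresh period. A frame
    that stays up longer than one refresh returns more than `lines`.
    """
    period_ms = 1000.0 / refresh_hz
    spans = []
    for start, end in zip(flip_times_ms, flip_times_ms[1:]):
        spans.append(round((end - start) / period_ms * lines))
    return spans


if __name__ == "__main__":
    # The first frame is replaced 0.5 ms after it appears: a thin sliver.
    flips = [0.0, 0.5, 16.0, 32.0]
    print(visible_scanlines(flips))  # [32, 1004, 1037]
```

With vsync on, flips wait for the refresh boundary, so each frame is shown whole and no sliver appears.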

I think you're a bit off on this one. The Titan isn't made for millionaires. The Titan is for hardcore PC lovers who have a decent amount of disposable income and like to get really nice stuff. When you think about it, $1000 isn't a tremendous amount of money.

He's just totally ignoring the issue like the AMD PR guy did with Titan. They must have gotten the same memo! :hmm:

Here we go again... attack the messenger.

That shot is with vsync!

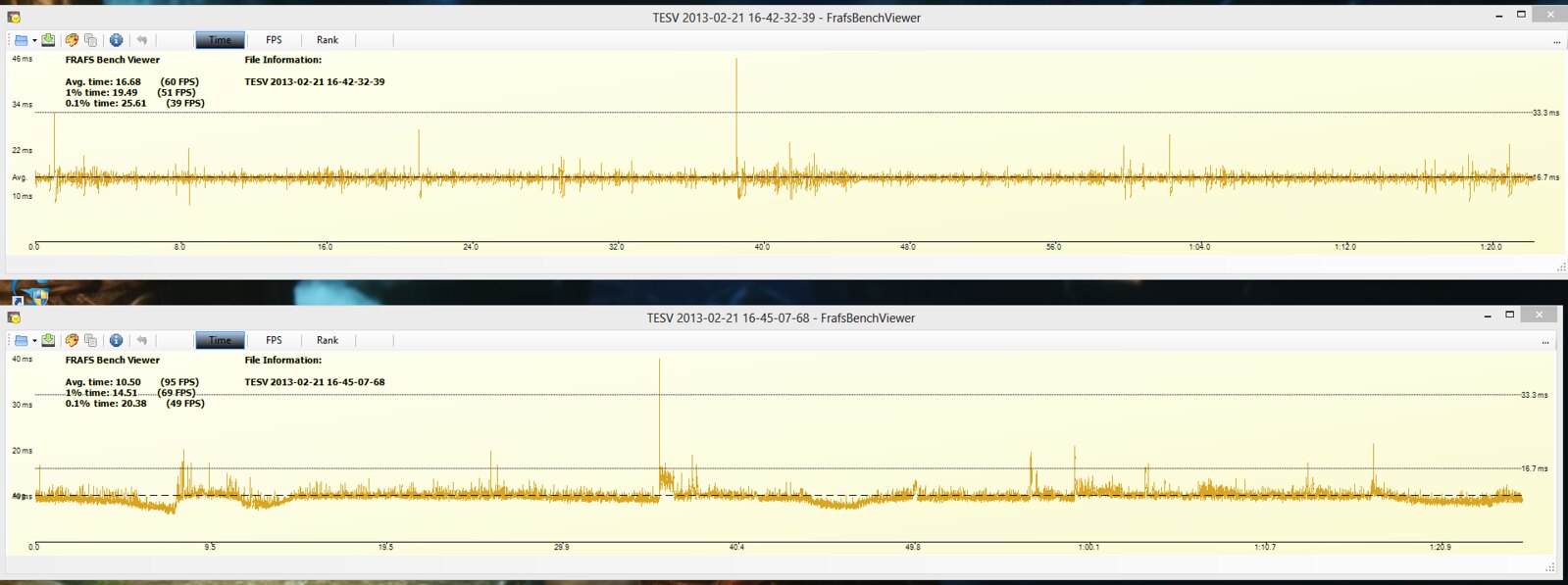

This is the same graph with data gathered from our method that omits RUNT frames that only represent pixels under a certain threshold (to be discussed later). Removing the tiny slivers gives us a "perceived frame rate" that differs quite a bit - CrossFire doesn't look faster than a single card.
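
A minimal sketch of the "perceived frame rate" idea, assuming you already know how many scanlines each frame occupied. The post leaves the real runt threshold for later, so `RUNT_THRESHOLD = 20` below is a hypothetical placeholder, not the article's number, and `frame_rates` is a made-up helper name.

```python
# Sketch of a "perceived frame rate" that drops runt frames.
# Hypothetical assumptions: each entry in `scanlines` is how many rows of the
# screen a frame occupied, and RUNT_THRESHOLD is an illustrative cutoff only.

RUNT_THRESHOLD = 20  # hypothetical cutoff in scanlines


def frame_rates(scanlines, duration_s, threshold=RUNT_THRESHOLD):
    """Return (raw_fps, perceived_fps) for frames shown over duration_s seconds.

    raw_fps counts every frame; perceived_fps only counts frames that covered
    at least `threshold` scanlines.
    """
    raw = len(scanlines) / duration_s
    kept = [s for s in scanlines if s >= threshold]
    perceived = len(kept) / duration_s
    return raw, perceived


if __name__ == "__main__":
    # One second of capture: full frames alternating with 5-line slivers.
    shown = [1000, 5] * 60
    print(frame_rates(shown, 1.0))  # (120.0, 60.0)
```

In the example, half the frames are slivers, so the raw count says 120 FPS while the perceived rate is 60 FPS.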

Since we're being told that frametimes are the true flawless comparison of a card's performance, anyone care to explain this?

This also matches results at Tom's Hardware showing SLI with better frametimes. That just isn't possible.

Oh but wait. Frametimes are the ultimate method to compare video cards now, I forgot. I'm sorry for questioning it.

I'm defining slither as a runt frame!

This is the same as that TechReport guy benchmarking Skyrim without vsync, even though that messes up the physics. Pure geniuses dealing with this issue!

They don't grasp when they are cherry picking, and cherry picking low results (good) across the board. They just see them graphs, and them directions that point to what's better, higher or lower. Evidently the concept is too much for some to grasp.

So is it official that online store availability begins at 9am on the 21st? Trying to preplan my Newegg F5 spamfest.

How do you explain frametime inconsistencies where SLI actually has higher 99th-percentile frametimes than single cards? Is this the true video card comparison method we need?

So the 690 has a better 99th-percentile frametime than a GTX 680. Yeah ok.

Lower is better. Yeah ok. Did anyone bother to question this stuff? I'm just seeing tons of frametime benchmarks where stuff that shouldn't be happening is. I'll give you time to get back to me on this.
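
For reference, this is one common way (nearest rank) to get a 99th-percentile frame time in Python. It is an assumption that the sites quoted here compute it exactly this way, and `percentile_frametime` is a hypothetical helper. Lower is better because the value is the time that 99% of frames come in at or under.

```python
import math


def percentile_frametime(frametimes_ms, pct=99.0):
    """Nearest-rank percentile: pct% of frames finished at or below this time."""
    if not frametimes_ms:
        raise ValueError("need at least one frame time")
    ordered = sorted(frametimes_ms)
    # 1-based nearest rank; multiply before dividing to avoid float drift.
    rank = math.ceil(pct * len(ordered) / 100.0)
    return ordered[max(rank, 1) - 1]


if __name__ == "__main__":
    # 99 smooth 16.7 ms frames plus one 50 ms hitch: the single hitch is ignored.
    print(percentile_frametime([16.7] * 99 + [50.0]))      # 16.7
    # Two hitches in 100 frames: now the 99th percentile catches one.
    print(percentile_frametime([16.7] * 98 + [50.0] * 2))  # 50.0
```

Because the list is sorted first, the number says nothing about the order frames arrived in: the same set of frame times gives the same result whether short and long frames alternate or not.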

From my understanding, the GTX 690 has hardware frame metering, but when the complexity was raised with multi-monitor resolutions in that specific game, the single GPUs did shine a bit more.
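
Frame metering is only mentioned by name here. As a generic illustration (not Nvidia's actual hardware logic, which nobody in the thread describes), pacing can be sketched as holding back any frame that would appear too soon after the previous one. `meter_frames` and `target_ms` are hypothetical; a real implementation would have to estimate the target interval on the fly.

```python
def meter_frames(ready_times_ms, target_ms):
    """Return display times, holding back any frame that would arrive sooner
    than target_ms after the previous one. Frames are never shown early.

    Generic pacing sketch; target_ms is a hypothetical fixed interval.
    """
    if not ready_times_ms:
        return []
    shown = [ready_times_ms[0]]
    for ready in ready_times_ms[1:]:
        # Show when ready, or when the pacing interval has elapsed, whichever is later.
        shown.append(max(ready, shown[-1] + target_ms))
    return shown


if __name__ == "__main__":
    # Two GPUs in AFR finishing frames in close pairs: gaps of 4, 28, 4, 28, 4 ms.
    ready = [0, 4, 32, 36, 64, 68]
    shown = meter_frames(ready, target_ms=16)
    print(shown)                                      # [0, 16, 32, 48, 64, 80]
    print([b - a for a, b in zip(shown, shown[1:])])  # [16, 16, 16, 16, 16]
```

The trade-off shows up in the example: the frame ready at 4 ms is held until 16 ms, so smoother spacing costs some latency.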

PCPer is using GTX 680 SLI with the same figures, SLI throwing better frame latencies than single cards.

20nm cards will likely have pretty bad price/perf increases just like the first batch of 28nm cards, thanks to Apple and co. eating up so much TSMC 20nm capacity.

On the other hand, do we HAVE to upgrade? Have you seen the specs on the PS4? I don't think anyone with a 79xx or 680/70 needs to upgrade anytime soon. Consolification has stunted PC game development for years, and I don't think Crysis 3 can reverse that trend all by itself.

Anyways, on to more important things than AMD vs Nvidia...

Which is entirely possible; as AMD's results show, single doesn't mean better.

Nvidia has used some scaling to increase smoothing. It doesn't always work, though; sometimes you'll see the 690 throwing frame times as ugly as AMD's single cards.

Nice scores, but it still can't max out Crysis 3. :awe:

So it's all a myth that SLI has higher frame times (ms) than a single GPU, all these years??