Like many, we've found AMD's Bulldozer underwhelming, and it really is. Just don't expect a magic bullet to fix it.

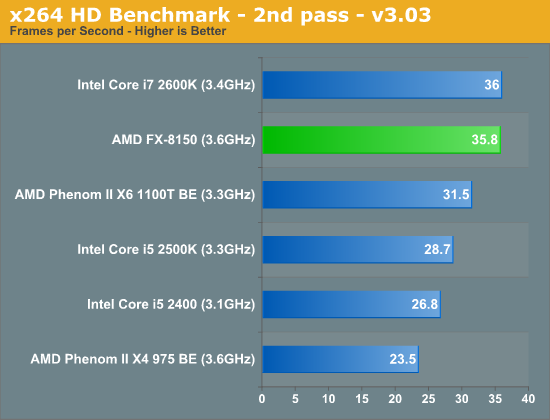

Over the past few weeks we've had AMD’s new FX-8150 CPU in the labs, running a variety of benchmarks to get our heads around the performance of AMD’s new Bulldozer architecture. Like many other reviewers, we've found the CPU's performance underwhelming: it benches below Intel’s Core i7-2600K and consistently dips below even the Core i5-2500K.

You may not be aware, but there is simmering dissent in some corners of the internet over Bulldozer reviews. The theories range from suspected caching bugs to AMD deliberately sending out a lower-performing ASUS motherboard with the review kit (that one in particular has us scratching our heads). But no matter how much our memories of AMD’s greatness want us to subscribe to the crazy theories, we just can't do it – it really seems like AMD painted itself into a corner with years of pre-launch hype over the Bulldozer architecture.

We had initially expected AMD to launch Bulldozer at Computex this year, alongside AMD’s new 990X chipset. Instead, we ended up with motherboard samples and the suggestion that we test them using a Phenom II X6 – the implication being that it would be months before Bulldozer arrived. The hot rumour floating around Taipei during Computex was that AMD was struggling to make Bulldozer a competitive performer, and that it was reaching out to overclockers for help tweaking the CPU.

Since then there have been several rumoured release dates for Bulldozer, but months went by without news. As each rumoured date passed, it seemed more and more like AMD was searching for some sort of magic bullet, and we suspect it ended up as a case of AMD having to put up or shut up, hence last week’s launch. We wouldn’t be surprised if Intel's plan to launch Sandy Bridge-E before the end of the year played a part in AMD’s timing.

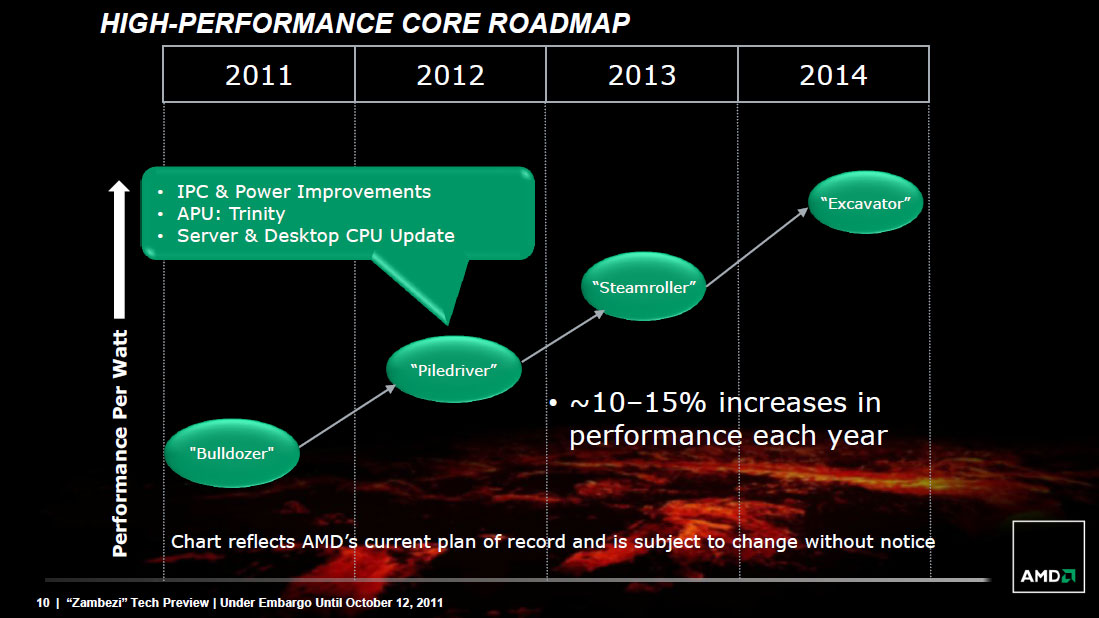

Bulldozer arrived with none of the confident bravado that accompanied the launch of the Brazos and Llano APUs earlier this year. If anything, AMD has subtly shifted gears in recent months to begin hyping Trinity – next year’s APU – which will combine the Bulldozer-based Piledriver CPU with a 7000-series Radeon GPU. That is unusual: manufacturers generally shy away from hyping the next generation of a product until the current generation has launched.

Even during the review process we were hit with increasingly outlandish suggestions, largely revolving around performance in nebulous ‘future workloads’. The implication was that a flood of heavily threaded applications is on the way, a promise that has been made ever since the first multicore CPUs hit the market.

Some of the suggested test applications practically flew in the face of good benchmarking methodology. One was Battlefield 3, even though the beta was at the time only hours from ending, and the beta build featured old code and lacked high-end assets. More importantly, it meant testing in an online multiplayer environment, which is about the worst thing you can do for replicability.

Then there were suggestions that we use Windows 8, whose task scheduler is apparently more advanced and better at leveraging the multiprocessing-focused Bulldozer. Of course, there were no matching drivers, and the only version of Windows 8 available was the strange, UI-focused Developer Preview build.

But by that point we had moved well into ridiculous territory. Speculation about the future is all well and good, but if we need to test with unreleased operating systems just to see improvements, we aren’t actually reviewing a real-world product anymore. Whatever the conspiracy theories say, the hard reality is that if you went out and bought an FX-8150 today, you’d get less value for your money than with a Core i5-2500K (or even a Phenom II X6 1100T).

We've asked AMD for some lower-end Bulldozer models, where AMD's traditional price/performance advantage might show through. We're also testing with motherboards other than the Crosshair V Formula to see what performance benefits, if any, arise. But if you're one of those AMD fans desperately hanging out to hear that a simple tweak will make Bulldozer awesome, you should probably just accept that while it’s a perfectly cromulent CPU, AMD has lost this round of the performance battle to its far more embiggened opponent.