Official AMD Ryzen Benchmarks, Reviews, Prices, and Discussion


3DVagabond

Lifer

- Aug 10, 2009

- 11,951

- 204

- 106

I was leery of the power-consumption numbers of Ryzen all the way from AMD's demo to clueless reviewers using P95 stress-test numbers to report power consumption when Ryzen wasn't running that code optimally. Finally, here's a more realistic result of the actual power Ryzen consumes under full load. The 65w chip is at 113, 95w at 161, while Intel's 140watter is at 132. No free lunch here, folks!

http://hexus.net/tech/reviews/cpu/103270-amd-ryzen-7-1700-14nm-zen/?page=7

That's system power. And from the mains. So, you also have to take into account PSU loss, which TDP would not.

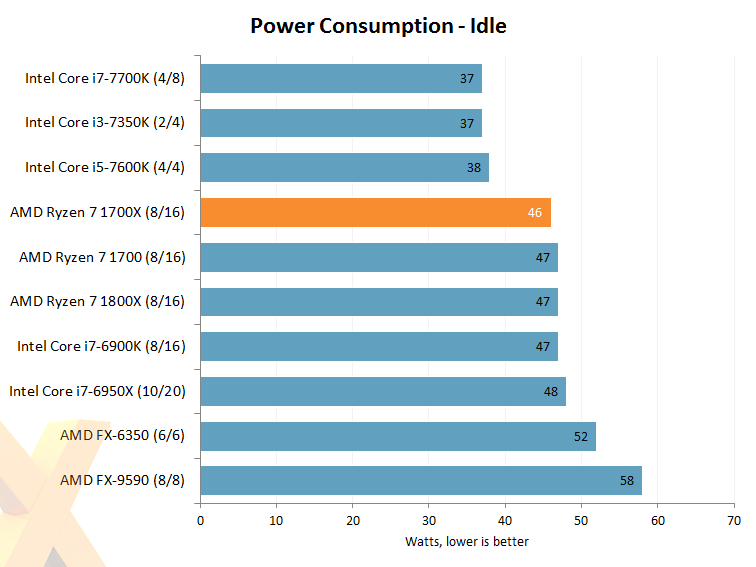

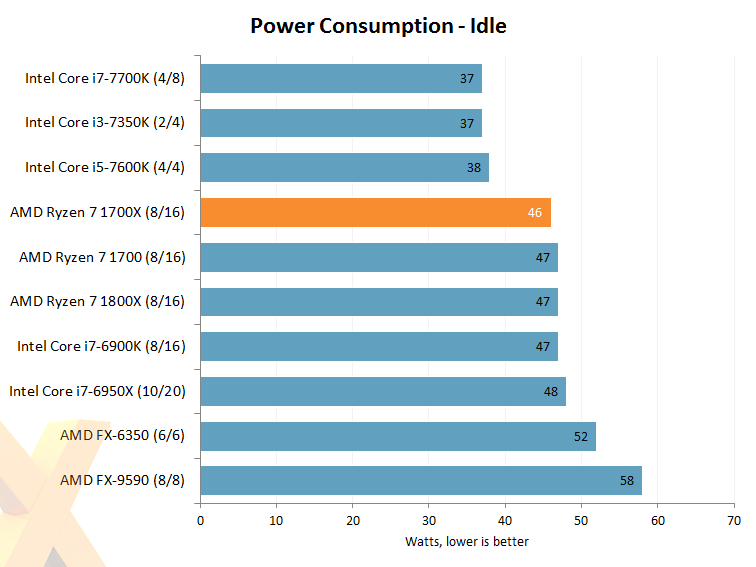

To emulate real-world usage scenarios, we record system-wide mains power draw when idle, when encoding video via HandBrake and while playing Deus Ex: Mankind Divided

Also might as well print the reviewer's comment about the tests.

The Ryzen 7 1700X's lower clock speed means that it takes a reasonable chop out of the R7 1800X's power consumption.

That same advice is applicable to the R7 1700, whose 65W TDP translates into lower multi-thread power consumption than a four-core Core i7-7700K, and you already know that it obliterates said chip in applications such as Cinebench and HandBrake.

AMD has done an impressive job in the performance-per-watt metric on Ryzen, with this design philosophy best exemplified by the R7 1700.

TDP does not equal power consumption.

I know this..... but thanks for pointing it out anyway.

Krumme, must you always see red everywhere when someone raises any question about AMD? I am very well aware of the strengths of Ryzen in media encoding. I was merely pointing out that this is the first time (I am noticing) that the reported power consumption is actually on point to what I expected it to be. A lot, if not majority, of early reviewers were taking their power consumption numbers from Prime 95. The Stilt made it known in his technical thread that Prime 95 was broken. The result was that while Blender and Handbrake scores were obscenely high, power consumption figures were ridiculously low. This was a false picture. My first comment in the technical thread was to ask about this discord. Still interested in Ryzen's Prime 95 numbers once everything is fixed, by the way.

Wake up dude. You still dont get the memo dont you?

You are referring to the handbrake test.

Did you notice the performance difference here for the same test?

A 1700 non x beats a 6900. Read again.

A 1700x beats a 6950. Yes the 350 usd cpu beats the 10 core 1800 usd intel. Read again.

Now go look at the power figures again.

http://m.hexus.net/tech/reviews/cpu/103270-amd-ryzen-7-1700-14nm-zen/?page=3

I know it wasn't your intention, but you just proved both how insanely powerful Zen is and how efficient it is at the same time. Lol.

Free lunch for all that open their eyes to the new reality.

Anyway, I was especially interested because of the debate between KTE and bjt2 + Abwx about process and efficiency, TDPs and etc. So Kompukare's post was quite revealing to me. That Ryzen is a monster in Handbrake and some other programs is without a doubt, but the "no free lunch" comment was me justifying the more realistic power consumption figures as well. It's a technical observation, so you can save all the fanboy stuff about price and all that. The 6950x is a quad-channel setup and yet consumes 30Watts lower than the 1800x while only being 8fps slower. The 1700x is 4fps faster while consuming 6 watts more. So yeah, "no free lunch," my brother!

No worry, bro, I get it. See above. I do appreciate your comprehensive response and your demonstrated maturity in responding to my post. Much appreciated!

You know that Hexus like most reviewers take their power readings of the whole system at the wall?

And that none of the systems have a 0W idle?

So what you really want is the power deltas between idle and load.

Something like this :

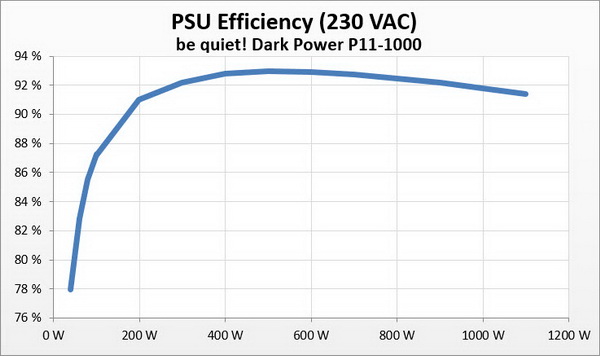

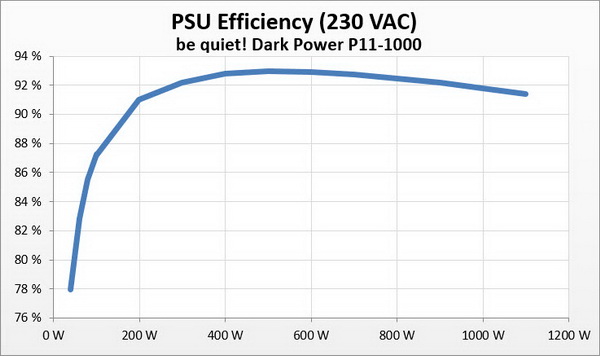

Although that still doesn't tell the full story as what the chip draws is only after the PSU's and the VRM's inefficiencies. Now we don't know the VRMs but the PSU they used is a be quiet Dark Power Pro 11 (1,000W). Which is a Platinum rated supply and we can do better than that since TPU reviewed it:

https://www.techpowerup.com/reviews/beQuiet/DarkPowerPro11_1000W/6.html

Right, not going to account for that whole curve as what the FX9590 used at load (295W) is a lot different than what the i3-7350K did (68W), but if we said they are all on average 90%, then we get something like this:

Okay, for Handbrake the i7-6950X doesn't use 140W, but the 1700 and 1700X are well within their 65W and 95W TDP. The 1800X exceeds it a bit.

Mind you, still no idea how much heat the VRMs waste - rather suspect it's more than 7.6W though.
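The idle-to-load delta and the flat 90% PSU assumption described above can be sketched in a few lines of Python. This is only an illustration: the function name `estimated_dc_delta` and the 45 W idle reading are hypothetical (no idle figures are quoted in the text here), while 113 W is the R7 1700 system load reading cited from Hexus and 0.90 is the assumed average PSU efficiency.

```python
def estimated_dc_delta(load_wall_w, idle_wall_w, psu_efficiency=0.90):
    """Estimate the extra DC-side power drawn under load.

    load_wall_w / idle_wall_w are whole-system readings at the wall.
    psu_efficiency is an assumed flat average; the real curve varies with load.
    VRM losses are NOT removed here, so the CPU itself draws somewhat less.
    """
    if not 0 < psu_efficiency <= 1:
        raise ValueError("psu_efficiency must be in (0, 1]")
    return (load_wall_w - idle_wall_w) * psu_efficiency


# 113 W load is from the Hexus chart; the 45 W idle value is a made-up placeholder.
print(f"{estimated_dc_delta(113, 45):.1f}")  # prints 61.2
```

The point of the sketch is that a wall reading overstates what the chip draws twice over: once by the idle baseline of the rest of the system, and once by PSU conversion loss.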

Atari2600

Golden Member

- Nov 22, 2016

- 1,409

- 1,655

- 136

The 6950x is a quad-channel setup and yet consumes 30Watts lower than the 1800x while only being 8fps slower. The 1700x is 4fps faster while consuming 6 watts more. So yeah, "no free lunch," my brother!

Yeah, you're right.... it doesn't come free of charge.

Last time I looked it's something like $1200 or similar extra in your pocket.

Yea, Intel might just price themselves right out of the artificial segment they themselves created.

But again, I'm of the opinion that success breeds unforeseen headaches. If you control both the server and the consumer space you'd have to find a way to drive a wedge between the two segments or allow 'free movement' up and down the ladder. AMD has no such worries, for now. They must make the most they can off Intel's dilemma hehe.

Malogeek

Golden Member

What is the minimum cooler to overclock the 1700?

Depends on how much you want to overclock it. You need a pretty nice air cooler to get over 3.7GHz sustained.

I know this..... but thanks for pointing it out anyway.

I was merely pointing out that this is the first time (I am noticing) that the reported power consumption is actually on point to what I expected it to be.

Ahh broe. You were talking about "clueless reviewers" then went on to link a benchmark for total power consumption that doesn't stress the FPU in core, framing it like the 1700 was at 113W while HEDT Intel was only at 130W. That's perhaps even more clueless or misleading than using Prime.

By all means. For this workload you can have your cake and eat it - free lunch all the way if you like - with the 1700. It's both faster and uses less energy than either the 7700 or 6900. And costs less. I mean, if this is not the definition of free lunch I don't know what is?

It's 100% the opposite of what your message was. You were just 100% wrong. So what. Good news. Move on.


It seems Asus had some issue with the Hero's BIOS, now solved: https://twitter.com/BitsAndChipsEng/status/846872691982381058

Unbelievable!

Ahh broe. You were talking about "clueless reviewers" then went on to link a benchmark for total power consumption that doesn't stress the FPU in core, framing it like the 1700 was at 113W while HEDT Intel was only at 130W. That's perhaps even more clueless or misleading than using Prime.

By all means. For this workload you can have your cake and eat it - free lunch all the way if you like - with the 1700. It's both faster and uses less energy than either the 7700 or 6900. And costs less. I mean, if this is not the definition of free lunch I don't know what is?

It's 100% the opposite of what your message was. You were just 100% wrong. So what. Good news. Move on.

- Jun 10, 2004

- 14,608

- 6,094

- 136

It seems Asus had some issue with the Hero's BIOS, now solved: https://twitter.com/BitsAndChipsEng/status/846872691982381058

You mean besides frying a microcontroller on-board and bricking prior to 0902?

IIRC 0902 forced 2T timings on memory, and 1001 is 1T. That alone could account for a few % difference in some benches.


VRM efficiency varies with load, as with PSU, with similar curve, but absolute maximum VRM efficiency is around 86-88%...

- Jun 10, 2004

- 14,608

- 6,094

- 136


The TI NexFETs used on the higher-end boards like the Asus C6H and Asrock Taichi are rated 25A continuous output at 90% efficiency and 40A maximum.
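To get a feel for what VRM efficiencies in the 86-90% range do to a CPU power estimate, here is a rough Python sketch that chains an assumed PSU efficiency with a VRM efficiency range. The name `cpu_power_range`, the 68 W wall delta (load minus idle at the wall), and the flat 90% PSU figure are illustrative assumptions, not measurements, and real efficiency varies with load.

```python
def cpu_power_range(wall_delta_w, psu_eff=0.90, vrm_eff_range=(0.86, 0.90)):
    """Return (low, high) estimates of CPU power in watts.

    wall_delta_w: load-minus-idle power measured at the wall.
    psu_eff: assumed flat PSU efficiency.
    vrm_eff_range: assumed (worst, best) VRM efficiency.
    """
    dc_w = wall_delta_w * psu_eff        # power left after PSU conversion loss
    low = dc_w * min(vrm_eff_range)      # pessimistic VRM efficiency
    high = dc_w * max(vrm_eff_range)     # optimistic VRM efficiency
    return low, high


low, high = cpu_power_range(68.0)  # 68 W is a hypothetical wall delta
print(f"{low:.1f} W to {high:.1f} W")  # prints 52.6 W to 55.1 W
```

Even a few points of VRM efficiency move the estimate by a couple of watts, which is why wall readings alone cannot settle whether a chip stays inside its rated TDP.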

Nitpicking aside, the general quality of CPU reviews these days really isn't up to snuff. Most sites are only showing a few data points without proper apples to apples or context provided. In reality every data point is valid as long as the context of the test methodology is understood. I won't harp on you about cherry picked benchmarks because that is clearly showing an entire genre of games (RTS) that are typically very CPU bound under performing compared to the 7700k. The makers of Total War and Ashes have already come out and said they're in the process of writing optimizations for Ryzen topology. I imagine game engine optimization will show big yields with regard to how the threads are managed for AI simulations and physics due to the sheer scale of battles in these games.

I would love to see some serious frame time analysis of these games at 1080p with streaming, Discord, music playing, and various Chrome tabs open. This is what most people who game online do; every single one of my friends who games uses their PC in this way. Sitting in competitive queue while listening to music, watching YouTube, reading articles and using voice comms at the same time is pretty typical behavior. This is the true CPU test that reviewers don't seem to want to deep dive into, because empirically you can't guarantee the same load every time. What would probably be far more useful would be to have the forum users run frame analysis on their own Ryzen systems for a few days in a row, take the overall average, and organize it by GPU so we can see real numbers from the real world.

Spot on. As an enthusiast gamer, playing @4K I would trade my 6700K OC@4.9+GHz for 1700.

I have to turn off YouTube when I play BF4 because it drops the frames a fair bit.

Normally when I game, I multitask also, and so do all of my friends. Multiple tabs open, skype, YT/Twitch@1080p (or skype video) on second screen...

Review sites are so behind the times, they are like your grandparents sending you snailmail and mentioning how long it takes.

All these game benchmarks in a vacuum, at 1080p, in Singleplayer instead of Multiplayer (if its mainly a MP title) are as useful to a real modern gamer as synthetic ones. Then when directly comparing a 4c to 8c cpu, they make even less sense, in today's multitasking world.

unseenmorbidity

Golden Member

- Nov 27, 2016

- 1,395

- 967

- 96

Important notice to MSI Ryzen Users:

"Please do not post, and don't use any BETA BIOS for AM4 systems. If you have one already flashed, OK. But do not flash any further releases found anywhere.

There is a reason why they got removed!

Any one found to post the files for BETA BIOS (or links where to find such) may risk of "no posting" account limit for duration of 14 days.

Again: MSI had a reason to request those BETA BIOS removed.

We will give information when we get any."

--------------------------------------------------------

"And that AGESA code was bad.....which is why MSI pulled the BIOS's....just an FYI...

So more than anything, be unhappy with AMD than anything...."


CatMerc

Golden Member

- Jul 16, 2016

- 1,114

- 1,153

- 136

The fact that we are even splitting hairs over performance in many games and applications is incredible. AMD has mounted one of the biggest comebacks ever, never thought I'd again see the day where I actually wanted AMD processors in my rigs.

It hit me a couple days ago.

I want an AMD CPU in my rig. Me, a 6600K owner who up until now was perfectly satisfied.

They broke up the market so much that despite being satisfied with my processor, I want to upgrade. I don't find 4C/4T acceptable anymore when a 6C/12T option will be available for the same price soon. By Zen2 I should have the funds (and the actual need) to upgrade, so that's probably when I will do so, but damn if even now Zen isn't tempting!

For the entire duration of my interest in this field, AMD was basically irrelevant. I only got interested in processors when the FX 8350 and friends were all AMD had. This is brand new for me pretty much lol

thepaleobiker

Member

- Feb 22, 2017

- 149

- 45

- 61

I have an i5 7500, and I am "mildly enraged" that I can get a 6C/12T overclockable CPU for roughly the same price.

(I'm de-stressed currently thanks to snagging a new i7-7700 non-K for $219 - the same price as the aforementioned 6C-12T...but man, AMD has got me pining for its Ryzen CPUs...... its cray cray)

Regards,

Vish

inf64

Diamond Member

- Mar 11, 2011

- 3,884

- 4,692

- 136

Well I managed to get my 4690K to 4.5GHz and I'm still contemplating an upgrade to a 12T Ryzen 5 with a midrange board (B350). I would lose a bit on the ST front with a 4GHz Ryzen 6C/12T (maybe 10-15%) but gain A LOT on the MT and multitasking front (1.8-2x). The only thing putting me off is the price of good (read: verified to be compatible) fast 8GB DDR4 sticks (need 2x8).

beginner99

Diamond Member

- Jun 2, 2009

- 5,320

- 1,768

- 136

Spot on. As an enthusiast gamer, playing @4K I would trade my 6700K OC@4.9+GHz for 1700.

I have to turn off YouTube when I play BF4 because it drops the frames a fair bit.

Normally when I game, I multitask also, and so do all of my friends. Multiple tabs open, skype, YT/Twitch@1080p (or skype video) on second screen...

Review sites are so behind the times, they are like your grandparents sending you snailmail and mentioning how long it takes.

All these game benchmarks in a vacuum, at 1080p, in Singleplayer instead of Multiplayer (if its mainly a MP title) are as useful to a real modern gamer as synthetic ones. Then when directly comparing a 4c to 8c cpu, they make even less sense, in today's multitasking world.

You are right. Problem is, how do you test/benchmark this? The variance (error) will be huge. Anything less than 5 runs per config wouldn't mean much, and the error would probably still be over 10%. So it's hard to compare when the error itself is pretty huge. And how do you display the data? 10% would be a huge difference in charts, which can then be highly misleading. Error bars are for sure needed.
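As a rough illustration of the error-bar point, the Python sketch below summarizes repeated runs of one configuration as a mean plus a sample standard deviation, and expresses the spread as a percentage of the mean. The function name `summarize_runs` and the five FPS values are made up for the example; a real comparison might prefer a confidence interval over a plain standard deviation.

```python
import statistics


def summarize_runs(fps_runs):
    """Summarize repeated benchmark runs of one configuration.

    Returns the mean, the sample standard deviation (usable as an error bar),
    and the standard deviation as a percentage of the mean.
    """
    if len(fps_runs) < 2:
        raise ValueError("need at least two runs to estimate spread")
    mean = statistics.mean(fps_runs)
    stdev = statistics.stdev(fps_runs)  # sample standard deviation (n - 1)
    return {
        "mean": mean,
        "stdev": stdev,
        "relative_error_pct": 100.0 * stdev / mean,
    }


# Hypothetical average-FPS results from five runs of the same scenario.
summary = summarize_runs([60.0, 62.0, 58.0, 61.0, 59.0])
print(f"{summary['mean']:.1f} +/- {summary['stdev']:.1f} fps "
      f"({summary['relative_error_pct']:.1f}%)")
```

If two configurations differ by less than their combined error bars, a bar chart without those bars would suggest a difference that the data does not support.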

french toast

Senior member

- Feb 22, 2017

- 988

- 825

- 136

Malogeek

Golden Member

Does anyone have an idea what a B2 stepping could bring? What stepping are we at now?

Current CPUs are B1.

For the entire duration of my interest in this field, AMD was basically irrelevant. I only got interested in processors when the FX 8350 and friends were all AMD had. This is brand new for me pretty much lol

I went to college in 1992 and bought a computer with my parents' monetary assistance $500 from me and $500 from each of them. I was the only one in my dorm with an AMD system but I could actually game on that thing because AMD represented value - 386dx/40 and 1mb video card while my roommate had a 386sx16 and a POS video card for the same amount.

I suspect the R5 will be a tremendous value for those who don't want to spend or just don't have piles of cash.

It's nice to have competition back.


unseenmorbidity

Golden Member

- Nov 27, 2016

- 1,395

- 967

- 96

Adored hit the nail on the head regarding these benchmarks. As with statistics, you can make numbers say what you want within reason.


Atari2600

Golden Member

- Nov 22, 2016

- 1,409

- 1,655

- 136

re. multiplayer benchmarks...

is it really beyond the wit of man to have several remote computers send keyboard/mouse scripted actions of "players" in a multi-player environment, as well as have a keyboard/mouse script on the local computer that can allow for a repeated multi-"player" environment for benchmarking?

It can't be much more complicated.


unseenmorbidity

Golden Member

- Nov 27, 2016

- 1,395

- 967

- 96

Sure, but wouldn't that require 64 BF1 accounts though?

Computerbase.de tests BF1 in multiplayer. I am not sure exactly how, but they get it done, and I assume they do it in a way that gives fairly consistent results.

Mr Evil

Senior member

Games are typically not deterministic, which means that there will be subtle differences each run. Add unpredictable network latency to that, and the inputs will end up out of sync.

So yes, it's quite complicated.
