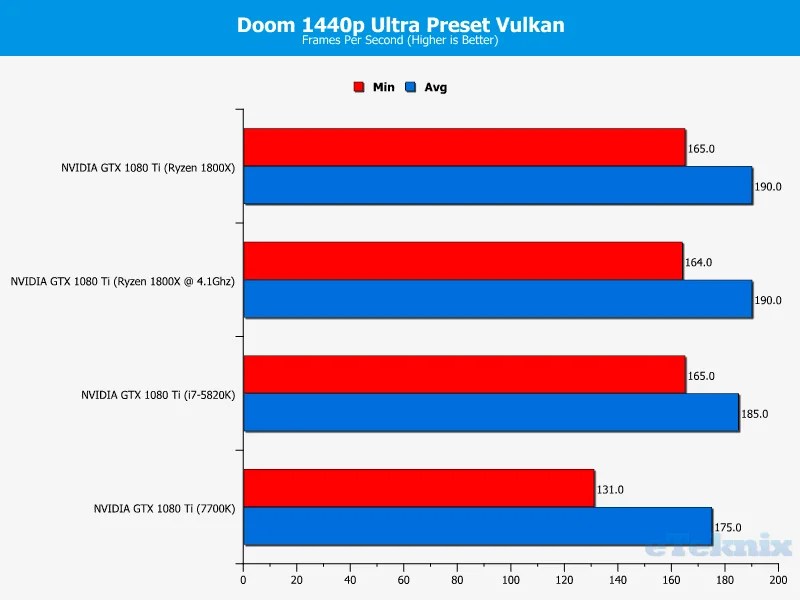

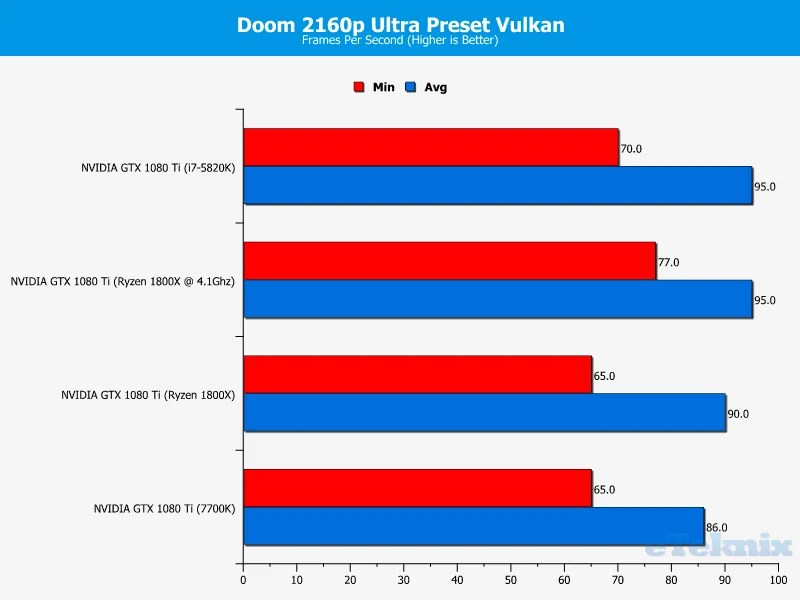

When we started this testing, we had no idea what the final results would be. Our best guess was that Ryzen would sit between the 7700K and 5820K in most tests, or suffer worse performance at resolutions above 1080P due to the DDR4 memory latency issues we saw when testing the CPU and motherboard last week. As it turned out, in real-world testing like this, those issues didn't seem to make much of a difference, at least not one you would notice as a consumer. In almost all tests (things didn't go perfectly in Far Cry Primal), the Ryzen 1800X delivered the best frame rates at every resolution, and its lead grew at 1440P and 2160P, where the 8-core, 16-thread design of the CPU kept the GTX 1080 Ti from being held back by a CPU bottleneck.

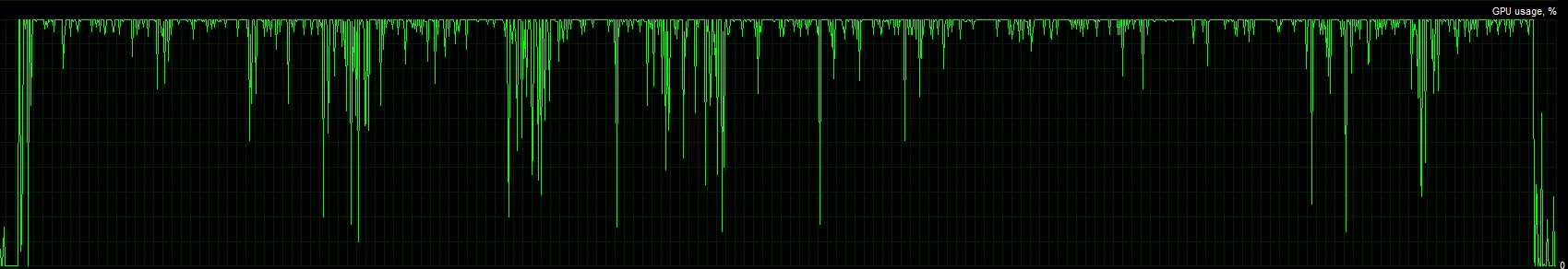

The most exciting thing we saw wasn't the average frame rate, but the minimum. People are often quick to focus on the maximum frame rate, so here's a tip: high frame rates are great, but they're not everything. Some games play perfectly well at around 20FPS; just look at classics like Zelda 64 and GoldenEye, which ran at roughly 20FPS and still felt pretty smooth. The trick is that they held that frame rate fairly consistently. If you're gaming at 100+ FPS and your frame rate briefly drops below 60FPS, you're going to notice, and the same goes for a dip from 60 to 40FPS, and so on. The Ryzen 1800X maintained the highest minimum frame rates we've ever recorded, and that means a more consistent, smoother and overall better gameplay experience. When it comes down to it, a higher minimum is what you want from a gaming chip, not just a bigger average or maximum number.
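
To illustrate why the minimum can matter more than the average, here's a small sketch using two made-up frame-time traces (hypothetical numbers, not data from our benchmarks) that share the same average frame rate but would feel very different to play. It assumes "minimum FPS" means the slowest single frame; some benchmarking tools instead report the lowest per-second average or a "1% low", so treat this as an illustration of the idea rather than our test methodology.

```python
# Hypothetical frame-time traces in milliseconds (illustrative, not measured data).
steady = [16.7] * 10           # every frame takes ~16.7 ms, i.e. a locked ~60FPS
spiky = [12.0] * 9 + [59.0]    # mostly fast frames, plus one long stall


def average_fps(frame_times_ms):
    """Average FPS over the run: frames rendered divided by total time in seconds."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


def minimum_fps(frame_times_ms):
    """Assumed definition: the instantaneous FPS of the slowest single frame."""
    return 1000.0 / max(frame_times_ms)


for name, trace in (("steady", steady), ("spiky", spiky)):
    print(f"{name}: avg {average_fps(trace):.1f} FPS, min {minimum_fps(trace):.1f} FPS")
# steady: avg 59.9 FPS, min 59.9 FPS
# spiky: avg 59.9 FPS, min 16.9 FPS
```

Both runs render ten frames in about 167 ms, so their averages match, but the single 59 ms stall in the second trace drops it to under 17FPS for that moment, which is exactly the kind of hitch you notice in-game.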

Our testing may seem strange to some, particularly our choice of CPUs to pit against Ryzen. I chose the 7700K because it's currently a very popular choice for those building a high-end gaming PC, and with good reason: it's a powerful chip, it features the latest Kaby Lake architecture, and it works very well. With Ryzen launching, the Intel chip now sells for £320-340, and although it's not a huge leap up from Skylake, it's still great for gaming.

The Ryzen 1800X is more expensive at just under £500, but when you see 20-50% improvements in minimum frame rate, plus gains in average frame rate, that premium certainly makes sense. As I'm writing this, the Ryzen 1700 is arriving at eTeknix HQ and the 1700X is on its way, and we're expecting the 1700X to be more in line with the 7700K in both performance and price, so that's food for thought. Of course, this certainly won't be the last time we test the gaming capabilities of new AMD CPUs, or Intel ones for that matter.

The BIOS and drivers for Ryzen are still pretty fresh, so there's certainly room for improvement there, and the memory performance bugs will hopefully be worked out soon. On top of that, AMD is working with thousands of developers to improve software support for multi-core processors, which should benefit all PC gamers, not just AMD users. Either way, the Ryzen 1800X is clearly offering some serious performance for high-end PC gaming. There are more Intel and AMD chips on the horizon, and the current ones are already pretty exciting, so we're eager to see the two companies battle it out on performance and retail prices over the next couple of years, as that's going to be a big win-win for consumers.