Also, one pic said valid and one said it wasn't valid, lol.

Time Spy on an overclocked 6700K @ 4.5 GHz gave me a CPU score of around 5900, IIRC. That was with DDR4-3466.

Official AMD Ryzen Benchmarks, Reviews, Prices, and Discussion

Page 152

looncraz

Senior member

- Sep 12, 2011

- 722

- 1,651

- 136

According to the post, pushing the clock too far would drop the link to PCIe 1.0. PCIe 2.0 won't matter much, but I am pretty sure you would see some hefty drop-offs at 1.0. I asked The Stilt where that line is, but I don't think he got back to me yet.

EDIT: Apparently The Stilt doesn't know atm.

Let me help:

Gen 3: 85~105 MHz

Gen 2: 105~145 MHz

Gen 1: > 145 MHz
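A minimal Python sketch of the ranges above, assuming they are reference-clock (BCLK) ranges, as the overclocking discussion suggests. The function name `pcie_gen_for_bclk` is hypothetical, and the post doesn't say which generation owns the shared edges (105 and 145 MHz), so this sketch assigns each edge to the lower-clock range.

```python
from typing import Optional

# (low MHz, high MHz, PCIe generation), per the ranges quoted in the post.
# Assumption: a shared edge (105, 145) belongs to the lower-clock range.
PCIE_GEN_BY_BCLK = [
    (85.0, 105.0, 3),   # Gen 3: 85~105 MHz
    (105.0, 145.0, 2),  # Gen 2: 105~145 MHz
]


def pcie_gen_for_bclk(bclk_mhz: float) -> Optional[int]:
    """Return the PCIe generation expected at a given reference clock,
    or None if the clock is below the lowest quoted range (85 MHz)."""
    if bclk_mhz < 85.0:
        return None  # not covered by the quoted ranges
    for low, high, gen in PCIE_GEN_BY_BCLK:
        if low <= bclk_mhz <= high:
            return gen
    return 1  # Gen 1: > 145 MHz


for clk in (100.0, 110.0, 150.0):
    print(clk, "MHz -> Gen", pcie_gen_for_bclk(clk))
```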

Dropping to Gen 2 isn't much of an issue for anything aside from NVMe. It's not even much of an issue for CrossFire, which uses PCIe for communication between the GPUs.
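Why NVMe is the exception: a rough Python sketch of raw one-direction link bandwidth, using the PCIe transfer rates and line encodings (8b/10b for Gen 1 and 2, 128b/130b for Gen 3). The helper name is hypothetical, and real throughput is somewhat lower because packet and protocol overhead are ignored.

```python
# Transfer rate (GT/s per lane) and line-encoding efficiency per generation.
PCIE_LINK = {
    1: (2.5, 8 / 10),     # 8b/10b encoding
    2: (5.0, 8 / 10),     # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
}


def pcie_bandwidth_mb_s(gen: int, lanes: int) -> float:
    """Raw one-direction bandwidth in MB/s (10^6 bytes/s), after line
    encoding but before packet/protocol overhead."""
    gt_per_s, efficiency = PCIE_LINK[gen]
    bits_per_s = gt_per_s * 1e9 * efficiency * lanes
    return bits_per_s / 8 / 1e6


for gen in (3, 2, 1):
    print(f"Gen {gen}: x4 = {pcie_bandwidth_mb_s(gen, 4):.0f} MB/s, "
          f"x16 = {pcie_bandwidth_mb_s(gen, 16):.0f} MB/s")
```

An x4 NVMe slot drops from roughly 3.9 GB/s at Gen 3 to 2 GB/s at Gen 2, while a GPU on x16 still has about 8 GB/s at Gen 2.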

MaxDepth

Diamond Member

- Jun 12, 2001

- 8,757

- 43

- 91

http://openbenchmarking.org/embed.php?i=1703030-RI-RYZENGAME81&sha=f608913&p=2

Well, that sorta answers my question about the CPU and Vulkan performance on Linux. So either new tuning needs to be implemented in the core, or the 2017 GPUs will pick up the slack for improved performance? I guess time will tell.

For the record, I'm glad AMD came out with the new CPUs, just as I was with their 400-series GPUs last year. It is much better than I expected, but for me, I can't help being more inclined to look at the next iteration of AMD GPUs and CPUs as my next possible build choices. I guess I feel this way because I want to see if there are even better improvements with shared mobo resources than what I am seeing here.

looncraz

Senior member

- Sep 12, 2011

- 722

- 1,651

- 136

Hello,

I am new to these boards and I am looking to make a new Photoshop CC workstation. I REALLY want to go with Ryzen but these results made me worried:

https://www.pugetsystems.com/labs/a...n-7-1700X-1800X-Performance-907/#Introduction

Is anyone able to comment on how much the growing pains are likely to have negatively impacted those results? Or do you think that the current issues with Ryzen, once resolved, won't have much impact on Photoshop performance?

I can't get over how much cheaper Ryzen is than Broadwell-E, and I'd rather not wait until the end of August for Skylake-X (and only if it's priced appropriately...), but some of those performance differences are pretty significant. Of course, this is only one test; if anyone has others that are Photoshop related (specifically photo editing), I'd love to see them.

TIA

Photoshop is garbage for multi-threaded scaling beyond a few cores. Pretty sad considering how well photo editing should actually lend itself to parallelism.

Frequency and IPC will reign supreme until Adobe fixes that (which is supposedly in the works... so it's a matter of the 7700K being better now, but possibly 70% slower in a couple of years).

Still, it's not like Ryzen is slow - it's just not as fast as Intel's fastest quad core... but neither is Intel's fastest 8-core...
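To illustrate why frequency wins when scaling stops at a few cores, here is a sketch using Amdahl's law (a standard model, not anything from Adobe or the Puget article). The clocks (a 4.5 GHz quad vs a 3.7 GHz 8-core) and the parallel fractions are hypothetical, and it assumes equal IPC on both chips, which is not true in practice.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup over one core when only `parallel_fraction`
    of the work can be spread across `cores`."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


def relative_throughput(clock_ghz: float, cores: int, parallel_fraction: float) -> float:
    # Assumes identical IPC, so single-core speed scales with clock alone.
    return clock_ghz * amdahl_speedup(parallel_fraction, cores)


# Hypothetical chips: a 4-core at 4.5 GHz vs an 8-core at 3.7 GHz.
for p in (0.5, 0.95):
    quad = relative_throughput(4.5, 4, p)
    octa = relative_throughput(3.7, 8, p)
    print(f"p={p}: quad {quad:.2f}, octa {octa:.2f}")
```

With half the work parallel, the faster quad comes out ahead (about 7.2 vs 6.6); at 95% parallel, the 8-core wins comfortably (about 21.9 vs 15.7).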

MaxDepth

Diamond Member

- Jun 12, 2001

- 8,757

- 43

- 91

https://www.techpowerup.com/231268/...yzed-improvements-improveable-ccx-compromises

Someone with latest AIDA64 results?

Hmm, so accessing the L3 cache in the other CCX is essentially like accessing an L4. While that hints at why we have slightly lower performance in games, it is not consistent with the 4+0 core analysis from CB.de, which did not show it in their benchmark. Or am I missing something?

If true, it will make optimizing games quite complicated. Still possible, though: a scheduler should be able to reduce those costly inter-fabric cache calls.
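A toy model of that scheduler idea, in Python. It assumes an 8-core Ryzen 7 laid out as two CCXs of four cores, with cores 0 to 3 on CCX 0 and 4 to 7 on CCX 1 (hypothetical numbering; real OS numbering and SMT siblings vary), and that every pair of game threads shares data, so each pair split across CCXs pays the cross-fabric cost. All function names are hypothetical.

```python
from itertools import combinations

# Hypothetical topology: 8 cores, 2 CCXs of 4 cores each.
# Cores 0-3 are assumed to be CCX 0 and cores 4-7 CCX 1.
CORES_PER_CCX = 4
NUM_CCX = 2
TOTAL_CORES = CORES_PER_CCX * NUM_CCX


def ccx_of(core):
    """CCX index for a core under the assumed numbering."""
    return core // CORES_PER_CCX


def pack_threads(n_threads):
    """Fill CCX 0 before touching CCX 1 (keeps sharing threads together)."""
    if not 0 < n_threads <= TOTAL_CORES:
        raise ValueError("thread count must fit on the 8 cores")
    return list(range(n_threads))


def spread_threads(n_threads):
    """Alternate between CCXs (0, 4, 1, 5, ...), as a naive load balancer might."""
    if not 0 < n_threads <= TOTAL_CORES:
        raise ValueError("thread count must fit on the 8 cores")
    return [(i % NUM_CCX) * CORES_PER_CCX + i // NUM_CCX for i in range(n_threads)]


def cross_ccx_pairs(placement):
    """Count thread pairs on different CCXs (all pairs assumed to share data)."""
    return sum(1 for a, b in combinations(placement, 2) if ccx_of(a) != ccx_of(b))


for name, place in (("packed", pack_threads(4)), ("spread", spread_threads(4))):
    print(name, place, "cross-CCX pairs:", cross_ccx_pairs(place))
```

Packing four threads onto one CCX leaves zero cross-CCX pairs, while alternating them across CCXs gives four; a real scheduler would also have to weigh this against load balance and cache capacity.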

That the Data Fabric interconnect also has to carry data from AMD's IO Hub PCIe lanes potentially interferes with the (already meagre) available bandwidth.

Am I reading this right? But then why did AMD claim its Infinity Fabric is superior?

unseenmorbidity

Golden Member

- Nov 27, 2016

- 1,395

- 967

- 96

Gamers Nexus made another bad review. Not nearly as terrible as the last, but still pretty bad. And the people on the AMD subreddit are praising him for it because of the brown-nosing title.

FFS. He threw a toddler tantrum, recorded conversations with AMD, then used them to slander AMD, and then wrote an absurdly bad hit piece of a review.

Apparently you can put sprinkles on a turd, and the avg joe will think it's a delicacy.

I have no faith in humanity...

Gamers Nexus made another bad review. Not nearly as terrible as the last, but still pretty bad. And the people on the AMD subreddit are praising him for it because of the brown-nosing title.

I have no faith in humanity...

Especially when humanity is posting on the web

wilds

Platinum Member

- Oct 26, 2012

- 2,059

- 674

- 136

Ahhhhhhhh NO.

You don't have to agree, but it is true: 1440p is in its twilight years and will soon be 100% obsolete when 21:9 4K / 16:9 4K120 hits the scene.

4K60 is the new mainstream resolution.

21:9 4K 120 Hz will make any variant of 1440p look antique.

Not to mention 1440p has been out for a long, long time...

unseenmorbidity

Golden Member

- Nov 27, 2016

- 1,395

- 967

- 96

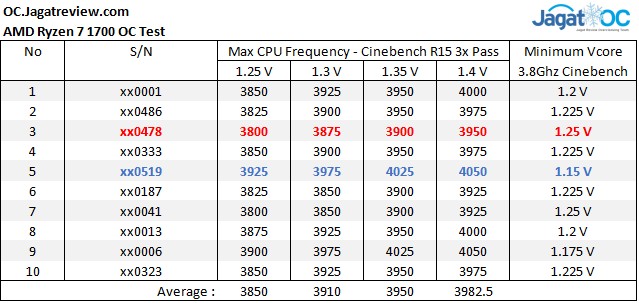

R7 1700 binning data from 10 CPUs, from an Indonesian article.

TL;DR:

At 1.25 V, their worst CPU reached 3.8 GHz!

At 1.35 V, 10/10 reach 3900+ MHz.

At 1.40 V, 8/10 reach 3975+ MHz and 4/10 reach 4000+ MHz.

At 1.40 V, the best overclock is 4050 MHz and the worst is 3925 MHz.

At 1.40 V, the average is 3982.5 MHz.

This was tested on an ASUS Crosshair (X370).

Malogeek

Golden Member

unseenmorbidity

Golden Member

- Nov 27, 2016

- 1,395

- 967

- 96

Seemingly, a worst case of 3.9 GHz @ 1.35 V is really good. From what I have seen, though, there is a huge difference in TDP between 1.35 V and 1.4 V. That next 100 MHz isn't worth it, IMO. I'll be happy with 3900 MHz at 1.35 V on an 8C/16T $329 CPU. Can't wait to play with it.
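A back-of-the-envelope check on that, using the usual dynamic-power approximation P ≈ C·V²·f with capacitance held constant. The 3900 MHz @ 1.35 V and 4000 MHz @ 1.40 V points come from the binning data above; leakage, which also grows with voltage and temperature, is ignored, so the real gap is likely larger. The function name is hypothetical.

```python
def dynamic_power_ratio(v_new, v_old, f_new, f_old):
    """Ratio of dynamic (switching) power using P ~ C * V^2 * f with C fixed.
    Leakage power is not modeled."""
    return (v_new / v_old) ** 2 * (f_new / f_old)


ratio = dynamic_power_ratio(1.40, 1.35, 4000, 3900)
print(f"~{(ratio - 1) * 100:.1f}% more dynamic power for "
      f"~{(4000 / 3900 - 1) * 100:.1f}% more clock")
```

That works out to roughly 10% more switching power for about 2.6% more clock.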

Malogeek

Golden Member

Another reason I'd like to stay at 1.35 V.

unseenmorbidity

Golden Member

- Nov 27, 2016

- 1,395

- 967

- 96

looncraz

Senior member

- Sep 12, 2011

- 722

- 1,651

- 136

It will be great, but why does it run at half speed in the first place? They must have a reason for doing this.

It doesn't actually run at half speed. It runs at the same speed as the memory controllers. DDR4 is simply double-rated (Double Data Rate... DDR), and the IMC runs at half the rated transfer rate.

AMD's DF and IMC run at 1.2 GHz with DDR4-2400, and at 1.6 GHz with DDR4-3200.
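The arithmetic above as a small Python sketch: the DDR4-xxxx figure is a transfer rate in MT/s, and the actual memory clock is half of it. The function name is hypothetical, and the DF = IMC = MEMCLK relationship is taken from the post (first-gen Ryzen).

```python
def memclk_mhz(ddr_rating_mt_s: float) -> float:
    """DDR moves data on both clock edges, so the real memory clock is
    half the DDR4-xxxx rating (MT/s)."""
    return ddr_rating_mt_s / 2


for rating in (2400, 2666, 3200):
    # Per the post, the data fabric (DF) and memory controller (IMC)
    # run at this same clock on first-gen Ryzen.
    print(f"DDR4-{rating}: DF = IMC = {memclk_mhz(rating):.0f} MHz")
```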

That out of the way, I wish they had just allowed us to set the data fabric clock independently of the memory controller. Apparently the hardware already has that capability; it's just a matter of exposing it (and motherboard support would also be required).

I'd love to play around with those frequencies independently. I bet the data fabric can handle 2 GHz+; it's the memory controller that can't go that high.

I am a bit sceptical here. 1.25 V giving 3.8 GHz at worst? Hmm. Seems too optimistic to me. My ASUS B350M board overvolted to 1.33 V on auto for 3.3 GHz as a first step.

I think they tested incorrectly and didn't stress test.

In a month or two, when the BIOS is updated, we will know, and we can make an AT 1700 survey. Perhaps I missed a setting or two.

unseenmorbidity

Golden Member

- Nov 27, 2016

- 1,395

- 967

- 96

zinfamous

No Lifer

- Jul 12, 2006

- 111,994

- 31,558

- 146

Eh, you just don't have any grasp of the actual market numbers. 1440p was hardly adopted and is still barely adopted. It will probably never hit 4K levels (again, because plenty of 4K displays out there are terrible builds and far, far cheaper than a typical gamer-quality 1440p panel, so 4K will, simply by price inertia, gain quick adoption), but 1440p is still considered premium based on quality and price, and will remain so for the next 5 years given the actual GPU cost to push it at Ultra, 144 Hz, etc.

4K really will be out of reach at the same quality with which you can push 1080p60 or 144 Hz. It is a simple argument of numbers and economics. For whatever reason, you are completely ignoring logical numbers. It makes no sense.

The market is not legions of "pro l33t gamers, brah!" Far from it. 1080p will be mainstream until 2019, at least. 1440p will more or less be skipped, and 4K will get that mainstream adoption, but it will be short-lived. Maybe 3 actual years, because 5K to 8K are the sensible resolutions... but then we need to consider the GPU cost to drive those ridiculous resolutions, lol. Fun times.
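The numbers argument in rough form: a Python sketch of how many pixels per second each resolution and refresh rate asks the GPU to render, relative to 1080p60. It assumes a new frame every refresh and treats GPU load as proportional to pixel count, which is only an approximation; 21:9 4K is left out because its exact resolution isn't specified here. Names are hypothetical.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),  # 16:9 UHD
}


def pixels(name):
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_rate(name, hz):
    """Pixels rendered per second, assuming a new frame every refresh."""
    return pixels(name) * hz


base = pixel_rate("1080p", 60)
for name, hz in [("1080p", 144), ("1440p", 144), ("4K", 60), ("4K", 120)]:
    print(f"{name} @ {hz} Hz: {pixel_rate(name, hz) / base:.2f}x 1080p60")
```

By this crude measure, 1440p at 144 Hz (about 4.27x) already asks more of the GPU than 4K60 (4.00x), and 4K120 is 8x 1080p60.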

imported_jjj

Senior member

- Feb 14, 2009

- 660

- 430

- 136

Don't expect anyone to take you seriously when you call 1440p "low res".

Next time before you post, go do some thinking.

With a Titan X Pascal, 1440p is low res. It's the equivalent of a 1070 at 1080p, so it's relevant for high-refresh-rate folks.

You are too lazy to read, yet you go around insulting people while being wrong, and when your mistake is revealed, you push your aggression further instead of accepting that you messed up.

At the very least, you can grow up and maybe learn what decency is.

unseenmorbidity

Golden Member

- Nov 27, 2016

- 1,395

- 967

- 96

Someone made a great post on reddit talking about the discrepancies with the benchmarks. I could barely believe the level of incompetence!

https://www.reddit.com/r/Amd/comments/5xx0o7/gamersnexus_game_selection_is_important_but_how/

IllogicalGlory

Senior member

- Mar 8, 2013

- 934

- 346

- 136

What is it with TechPowerUp stealing other people's charts and data lately? They're a professional tech website with plenty of resources and a big audience, and they're writing half-baked articles with data taken from other people's reviews, without even providing a link to the source. Where's their own Ryzen review?

imported_jjj

Senior member

- Feb 14, 2009

- 660

- 430

- 136

https://forums.aida64.com/topic/3768-aida64-compatibility-with-amd-ryzen-processors/

3) L1 cache bandwidth and latency scores, as well as memory bandwidth and latency scores are already accurately measured.

4) L2 cache and L3 cache scores indicate a lower performance than the peak performance of Ryzen. The scores AIDA64 measures are actually not incorrect; they just show the average performance of the L2 and L3 caches rather than the peak performance. It will of course be fixed soon.

There was no evidence of an L3 problem at any point, and AMD was quick to counter those claims, but in this era of fake news, folks keep insisting that there is a problem. We don't have the software tools to test it yet, but we will soon. The cache is clocked with the core, and average performance can be quite a bit lower than peak.

The memory latency does so far appear to be less than ideal but not terrible vs Broadwell-E.

piesquared

Golden Member

- Oct 16, 2006

- 1,651

- 473

- 136

Really, why are people hunting around trying to find the cause of some imaginary issue? Whatever the individual numbers are, they add up to a pretty powerful and efficient chip. That's the end result, so whatever the in-between numbers are, perhaps they are by design. Sure, gaming at low res is lower performing, but game optimizations seem like the most likely cause. Some games perform where Ryzen is expected to, so the chip must be capable of it. I bet those optimizations will do a lot of good.

Not speaking as a loyal AMD customer, but I can see Zen truly shaking up the market. It gives them access to Apple as well. How would Apple like to get out from under Intel's heavy foot? Servers? Mobile? APUs? I think AMD struck gold with Zen. I also don't see how Intel's margins are going to hold up.

Can't wait to see Raven Ridge; I'm probably going to have to get one to go along with Ryzen. I wonder if I can just swap the two back and forth depending on what I'm using the computer for.

The Ryzen 1700 looks like great bang for the buck regardless, but the variation in performance leaves me a bit unsure about my own use case.

Mostly it only seems to affect games (which I care very little about), but in the case of multithreaded titles like Watch Dogs 2, which scale with threads, it would be good to know exactly what's going on.

AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.