
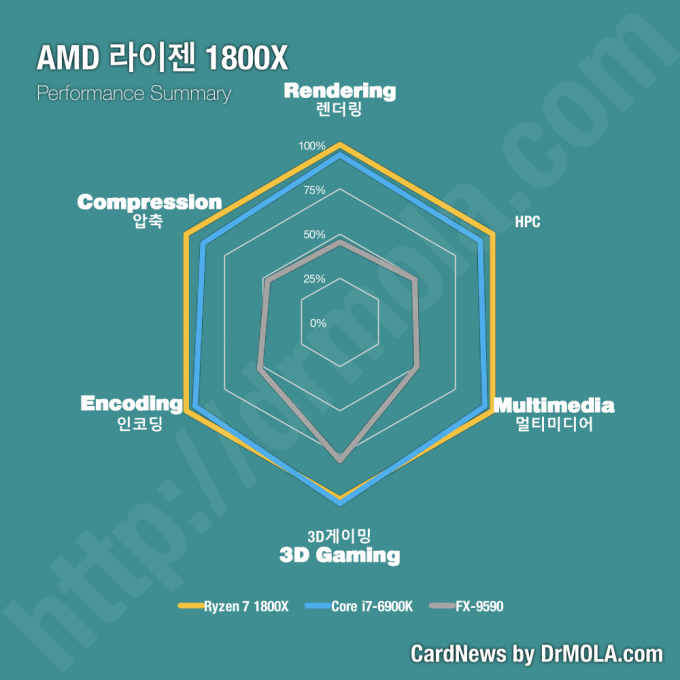

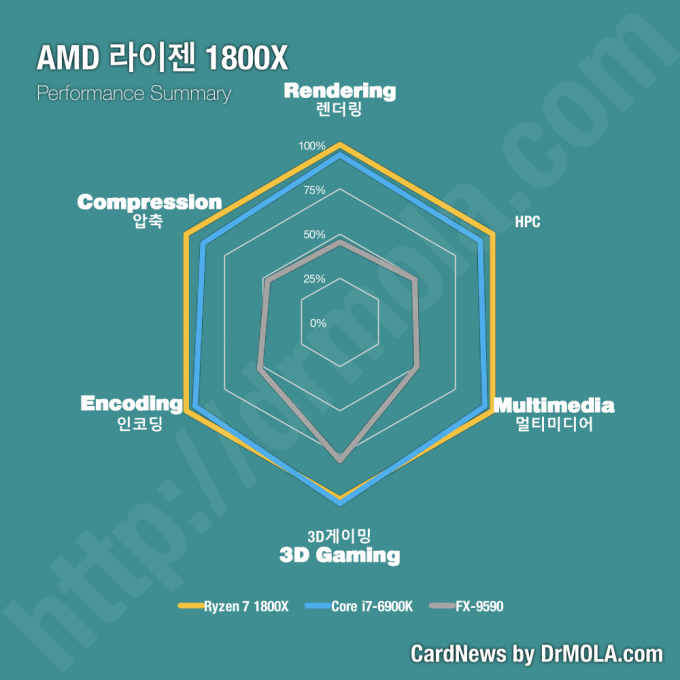

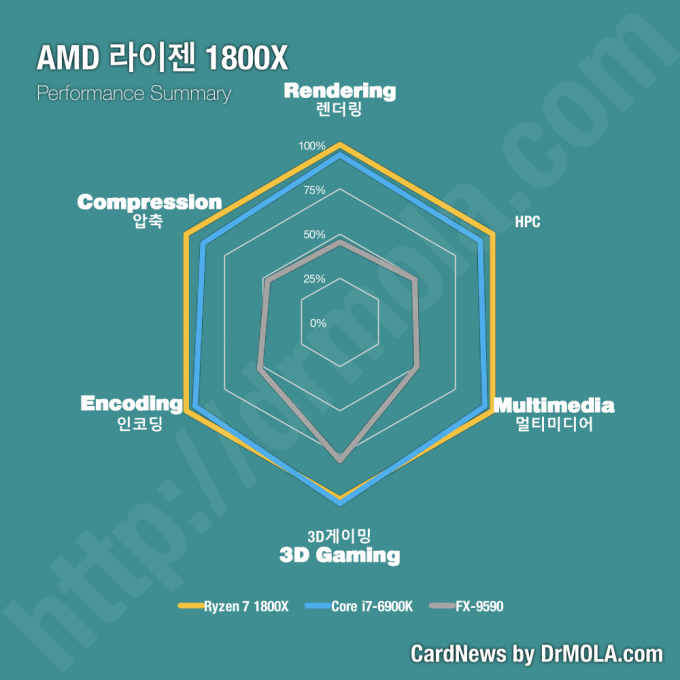

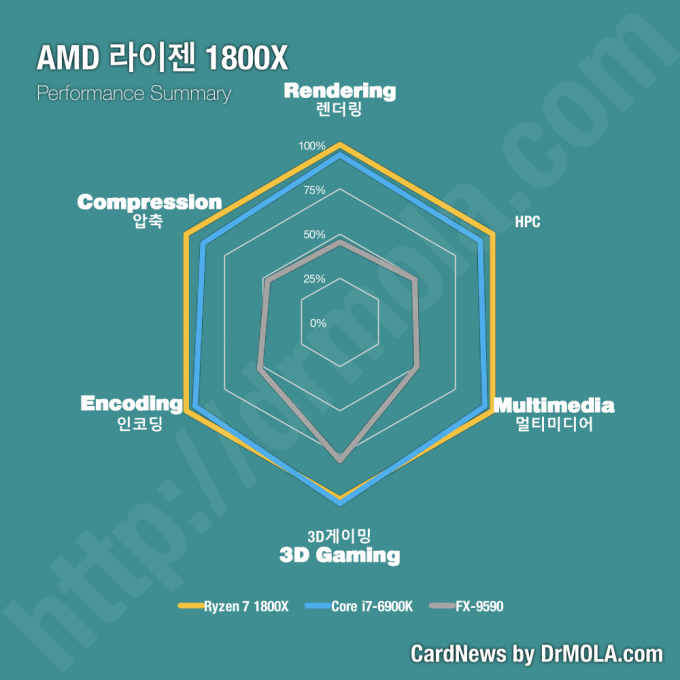

Much better way to visualize Ryzen's performance.


I don't expect the 1800X to keep up with the 7700K in everything, but I do expect it to keep up with the 6900K. Computerbase's test suite, for example, clearly favors the 6900K over the 7700K, and yet the 1800X sits below the 7700K there.

The gaming was not a good idea

Disagree. They didn't run actual game benchmarks except for BF1, IIRC, or only showed side-by-side comparisons. They did it to show that Ryzen will satisfy gamers, especially those who game at high resolutions but also have other demanding tasks to run. Regular gamers are not going to spend $500 on a CPU anyway, and most gamers do not have a GTX 1080 or Fury X either. Most gamers make a cost/performance compromise, and AMD showed that Ryzen will not disappoint those who want one computer for both gaming and serious workstation/productivity use.

Some gamers only care about maximum gaming performance right now, and that's why the 7700K keeps going and is going to be better, at least for a while longer.

There was no need to show this side-by-side comparison, as there was only one CPU they needed to show an improvement over.

However, we heard the same thing about Bulldozer: it's a new design and it will take time to optimise. You know what, as time progressed it did actually move ahead of the Phenom II in many respects, but it took time.

The main concern is not whether the improvements will come (they will, in my view), but when, and how long will it take?

That's not entirely true. It did not take more than 6 months, and by the time the Bulldozer refresh came a year later, things had settled down. FX was inherently bad, which is why the improvements did not help.


You seem to conveniently forget that I was the one who found that post on Legit Reviews about AMD saying SMT patches would drop 30 days from now for the R5 1600X. But AMD's technical PR manager Robert Hallock said on Twitter that the problem was not Windows-related but needed per-game optimisation, and when asked how long that would take, he could not give an estimate. These contradict each other, and hence we are all putting our eggs in the basket of that Windows update 30 days from now. That is the only time frame we have; for anything else, we don't know how long it will take or what priority it will be for game developers. Unless you can give people time estimates, seriously, just step back: we will need to wait and see. We don't know how long it will take, as even AMD is not really giving a solid answer and Microsoft has not really said anything either.

It's just rather annoying that, according to testing by The Stilt, Windows 7 has no real issue, and Microsoft should have got this sorted by now.

Also, improvements did come as time progressed, at least with the Piledriver CPUs; they started to push ahead of the Phenom II.

Look at some games like Watch Dogs 2 in the GameGPU review. The FX-8350 is holding its own against newer Intel CPUs, which I doubt a Phenom II X6 would do.

The point, IMO, was to show that a $500 CPU could run 4k resolution like a $1,000+ CPU. Other than that, I don't know the intention.

I will just agree to disagree.

Gl with that dual core. Might want to reinstall Windows every other week.

Legit Reviews tested three games at 3840x2160 using the quad-core 7700K ($350), dual-core 7350K ($170), octo-core 1800X ($500), and octo-core 1700X ($400). The overall scores, as a percentage of the 7700K's scores, were:

- 7700K: 100%
- 7350K: 99%
- 1800X: 98%
- 1700X: 97%

In other words, if you game at 4k and don't care about other stuff, you can save a bunch of money and buy a cheap CPU and put the rest into the GPU. If you game at 4k, there comes a point where your CPU is irrelevant.
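The "save money and put it into the GPU" point can be made concrete with the numbers above by dividing each CPU's relative 4k score by its price. This is only a rough sketch: it uses just the three-game averages and launch prices quoted in this post, and ignores motherboard, cooler and non-gaming workloads. The function names are illustrative.

```python
# Relative 4k gaming scores (% of the 7700K) and prices quoted in the post above.
results = {
    "7700K": (350, 100),
    "7350K": (170, 99),
    "1800X": (500, 98),
    "1700X": (400, 97),
}


def score_per_100_dollars(price: float, relative_score: float) -> float:
    """Relative 4k score points bought per $100 of CPU price."""
    return relative_score / price * 100


def ranked_by_value(data: dict) -> list:
    """Return (cpu, points per $100) pairs, best value first."""
    pairs = [(cpu, score_per_100_dollars(p, s)) for cpu, (p, s) in data.items()]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


for cpu, value in ranked_by_value(results):
    print(f"{cpu}: {value:.1f} points per $100")
```

On these numbers the dual-core 7350K comes out far ahead on value for pure 4k gaming, which is exactly the post's argument; it says nothing about multithreaded work.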

There are people who claim that the 1080p gaming results show the R7 won't do well at 4k in the future, when GPUs are much faster. But if a lowly 7350K can keep up at 4k, it'll be a long while before that time comes. It's more likely that games will take advantage of all those extra cores before GPUs expose the R7's lower IPC.

It's a long way of saying I think you're correct but the bigger point is that "Your CPU is probably irrelevant at 4k gaming". However, I'd sure like to see comprehensive 4k testing to see exactly how slow a CPU has to be to actually matter at 4k.

Oddly, it's cheaper to game at 4k if only because your CPU is so irrelevant.
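The "your CPU is irrelevant at 4k" argument rests on a simple bottleneck intuition: whichever of the CPU or GPU runs out of headroom first caps the frame rate. Below is a toy model of that, treating delivered fps as the minimum of two limits. It is a simplification (real frame pacing is messier), and the numbers are made up for illustration, not taken from any review.

```python
def delivered_fps(cpu_fps_limit: float, gpu_fps_limit: float) -> float:
    """Toy bottleneck model: the slower of the two limits sets the frame rate."""
    return min(cpu_fps_limit, gpu_fps_limit)


# Hypothetical limits, NOT measured data: a faster and a slower CPU,
# paired with a GPU that tops out at 60 fps at 4k today.
fast_cpu, slow_cpu = 140.0, 110.0
gpu_4k_today = 60.0
print(delivered_fps(fast_cpu, gpu_4k_today), delivered_fps(slow_cpu, gpu_4k_today))

# The CPU gap only shows up once the GPU limit rises above the slower CPU's limit.
gpu_4k_future = 130.0
print(delivered_fps(fast_cpu, gpu_4k_future), delivered_fps(slow_cpu, gpu_4k_future))
```

In this model, the question of "how slow a CPU has to be to matter at 4k" is simply the question of when the CPU's limit drops below the GPU's.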

There are two issues:

- The Win10 scheduler.
- Games not recognizing Ryzen as an SMT CPU.

The last one is the cause of the radical underperformance. The first one is most likely just causing some minor performance losses.

The W10 scheduler issue can cause a lot of problems with cache management. For example, if a thread moves from one CCX to the other, it will inherently be slower, because each CCX has its own separate L3. The magnitude of the impact will depend on how good or bad Infinity Fabric is. There is no benchmark showing its bandwidth, but I saw a latency test showing slower performance.
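To see how often a thread pays that penalty, one can count the scheduling steps where it lands on the other CCX; each such hop means the L3 it was using is no longer local, so its data has to come over Infinity Fabric or from memory. This is a minimal sketch assuming an 8-core R7 with contiguous numbering (logical processors 0-7 on one CCX, 8-15 on the other, four cores with two SMT threads each). The trace is hypothetical, not a real scheduler log.

```python
LOGICAL_PER_CCX = 8  # assumption: 4 cores x 2 SMT threads per CCX, contiguous numbering


def ccx_of(logical_cpu: int) -> int:
    """Map a logical processor index to the CCX that contains it."""
    return logical_cpu // LOGICAL_PER_CCX


def cross_ccx_migrations(trace: list) -> int:
    """Count consecutive placements where a thread moved to a different CCX."""
    return sum(
        1 for prev, cur in zip(trace, trace[1:]) if ccx_of(prev) != ccx_of(cur)
    )


# Hypothetical sequence of logical CPUs one game thread was scheduled on.
trace = [0, 2, 9, 11, 3, 3, 12]
print(cross_ccx_migrations(trace))
```

Only the moves 2→9, 11→3 and 3→12 cross the CCX boundary here; hops within a CCX (0→2, 9→11) keep the same L3.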

Here is my Coreinfo output on Windows 10 with an 1800X:

```
Logical to Physical Processor Map:
**--------------  Physical Processor 0 (Hyperthreaded)
--**------------  Physical Processor 1 (Hyperthreaded)
----**----------  Physical Processor 2 (Hyperthreaded)
------**--------  Physical Processor 3 (Hyperthreaded)
--------**------  Physical Processor 4 (Hyperthreaded)
----------**----  Physical Processor 5 (Hyperthreaded)
------------**--  Physical Processor 6 (Hyperthreaded)
--------------**  Physical Processor 7 (Hyperthreaded)

Logical Processor to Socket Map:
****************  Socket 0

Logical Processor to NUMA Node Map:
****************  NUMA Node 0

No NUMA nodes.

Logical Processor to Cache Map:
**--------------  Data Cache 0, Level 1, 32 KB, Assoc 8, LineSize 64
**--------------  Instruction Cache 0, Level 1, 64 KB, Assoc 4, LineSize 64
**--------------  Unified Cache 0, Level 2, 512 KB, Assoc 8, LineSize 64
********--------  Unified Cache 1, Level 3, 8 MB, Assoc 16, LineSize 64
--**------------  Data Cache 1, Level 1, 32 KB, Assoc 8, LineSize 64
--**------------  Instruction Cache 1, Level 1, 64 KB, Assoc 4, LineSize 64
--**------------  Unified Cache 2, Level 2, 512 KB, Assoc 8, LineSize 64
----**----------  Data Cache 2, Level 1, 32 KB, Assoc 8, LineSize 64
----**----------  Instruction Cache 2, Level 1, 64 KB, Assoc 4, LineSize 64
----**----------  Unified Cache 3, Level 2, 512 KB, Assoc 8, LineSize 64
------**--------  Data Cache 3, Level 1, 32 KB, Assoc 8, LineSize 64
------**--------  Instruction Cache 3, Level 1, 64 KB, Assoc 4, LineSize 64
------**--------  Unified Cache 4, Level 2, 512 KB, Assoc 8, LineSize 64
--------**------  Data Cache 4, Level 1, 32 KB, Assoc 8, LineSize 64
--------**------  Instruction Cache 4, Level 1, 64 KB, Assoc 4, LineSize 64
--------**------  Unified Cache 5, Level 2, 512 KB, Assoc 8, LineSize 64
--------********  Unified Cache 6, Level 3, 8 MB, Assoc 16, LineSize 64
----------**----  Data Cache 5, Level 1, 32 KB, Assoc 8, LineSize 64
----------**----  Instruction Cache 5, Level 1, 64 KB, Assoc 4, LineSize 64
----------**----  Unified Cache 7, Level 2, 512 KB, Assoc 8, LineSize 64
------------**--  Data Cache 6, Level 1, 32 KB, Assoc 8, LineSize 64
------------**--  Instruction Cache 6, Level 1, 64 KB, Assoc 4, LineSize 64
------------**--  Unified Cache 8, Level 2, 512 KB, Assoc 8, LineSize 64
--------------**  Data Cache 7, Level 1, 32 KB, Assoc 8, LineSize 64
--------------**  Instruction Cache 7, Level 1, 64 KB, Assoc 4, LineSize 64
--------------**  Unified Cache 9, Level 2, 512 KB, Assoc 8, LineSize 64
```
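Coreinfo's masks answer the CCX question directly: each column is one logical processor, and each of the two 8 MB Level 3 lines covers eight of them. A minimal sketch that recovers those L3 groups from the text, assuming the line format exactly as pasted here (mask, whitespace, cache name, level). The helper names are hypothetical, and one Level 2 line is included only to show it is skipped.

```python
import re

# Lines copied from the Coreinfo output above (one L2 line included on purpose).
COREINFO_LINES = [
    "**--------------  Unified Cache 0, Level 2, 512 KB, Assoc 8, LineSize 64",
    "********--------  Unified Cache 1, Level 3, 8 MB, Assoc 16, LineSize 64",
    "--------********  Unified Cache 6, Level 3, 8 MB, Assoc 16, LineSize 64",
]

L3_LINE = re.compile(r"([*-]+)\s+(Unified Cache \d+), Level 3\b")


def logical_cpus(mask: str) -> list:
    """Each '*' marks a logical processor covered by the line (column = CPU index)."""
    return [i for i, ch in enumerate(mask) if ch == "*"]


def l3_groups(lines: list) -> dict:
    """Map each L3 cache name to the logical processors that share it."""
    groups = {}
    for line in lines:
        m = L3_LINE.match(line)
        if m:  # non-L3 lines do not match and are skipped
            groups[m.group(2)] = logical_cpus(m.group(1))
    return groups


for cache, cpus in l3_groups(COREINFO_LINES).items():
    print(cache, cpus)
```

On this output the two L3s split the 16 logical processors into 0-7 and 8-15, i.e. one CCX each.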

Update to Paul's Hardware: overclocking, Windows High Performance mode, a better HSF configuration, tweaks from AMD... check it out:

20% fps increase in GTA5 with the 1700 @ 3.9 GHz. I'm surprised how hot the 1800X gets compared to the 1700.

Apparently disabling the Windows HPET is crucial to performance, too.

I'd like to see a price/perf chart like that.

It seems that way from Coreinfo, but what I meant was that a multithreaded game, unlike rendering applications, can start and/or terminate hundreds of threads every minute. W10 will try to spread them out evenly so as to distribute the load across all cores. W10 does not know whether a thread will try to access data from another thread, so it may place them on separate CCXs. If you check the YouTube review, you will notice that the threads get bounced around randomly.

It seems fine to me.

More reason to get the $330 1700 with the bundled LED cooler over the $500 1800X. No Ryzen can be OC'd beyond 4.1 GHz without a ridiculous voltage increase, and hence a liquid cooler, so you might as well just get the 1700 and OC that to 3.9 GHz.


It seems that way from Coreinfo, but what I meant was that a multithreaded game, unlike rendering applications, can start and/or terminate hundreds of threads every minute. W10 will try to spread them out evenly so as to distribute the load across all cores. W10 does not know whether a thread will try to access data from another thread, so it may place them on separate CCXs. If you check the YouTube review, you will notice that the threads get bounced around randomly.

All CPUs before this have had a unified L3, so game code did not care which core a new thread ended up on. But with Ryzen, it may matter if communication between the CCXs adds too much latency.
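One way to check whether cross-CCX hops matter for a given game is to confine it to a single CCX and compare. Windows expresses that restriction as a hexadecimal affinity bitmask (cmd's `start /affinity` takes one, for example), where bit *i* allows logical processor *i*. The sketch below only computes the mask; it assumes the 0-7 / 8-15 L3 split shown in the Coreinfo output in this thread, and the function name is illustrative.

```python
def affinity_mask(logical_cpus) -> int:
    """Build an affinity bitmask: bit i set means logical processor i is allowed."""
    mask = 0
    for cpu in logical_cpus:
        mask |= 1 << cpu
    return mask


# Logical processors 0-7 share one L3 in the Coreinfo output (one CCX), 8-15 the other.
ccx0 = range(0, 8)
ccx1 = range(8, 16)

print(f"{affinity_mask(ccx0):X}")  # FF
print(f"{affinity_mask(ccx1):X}")  # FF00
```

The trade-off is that pinning to one CCX halves the cores available to the game, so a fair comparison needs a title that does not already scale past eight logical processors.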

Also, make sure you close your browser when playing a game.