Deep breaths... Who said that frequency and bandwidth are the same? What changes when you get faster memory? Bandwidth and latency. Latency improvements plateau after a certain point, but bandwidth continues to increase as long as the memory controller isn't saturated. Changing the memory frequency directly affects memory bandwidth. This is why people say you should get faster memory when your applications are memory bound - depending on the application, it may be sensitive to bandwidth, latency, or both.

You never explicitly stated it, but you kind of implied it when you said that:

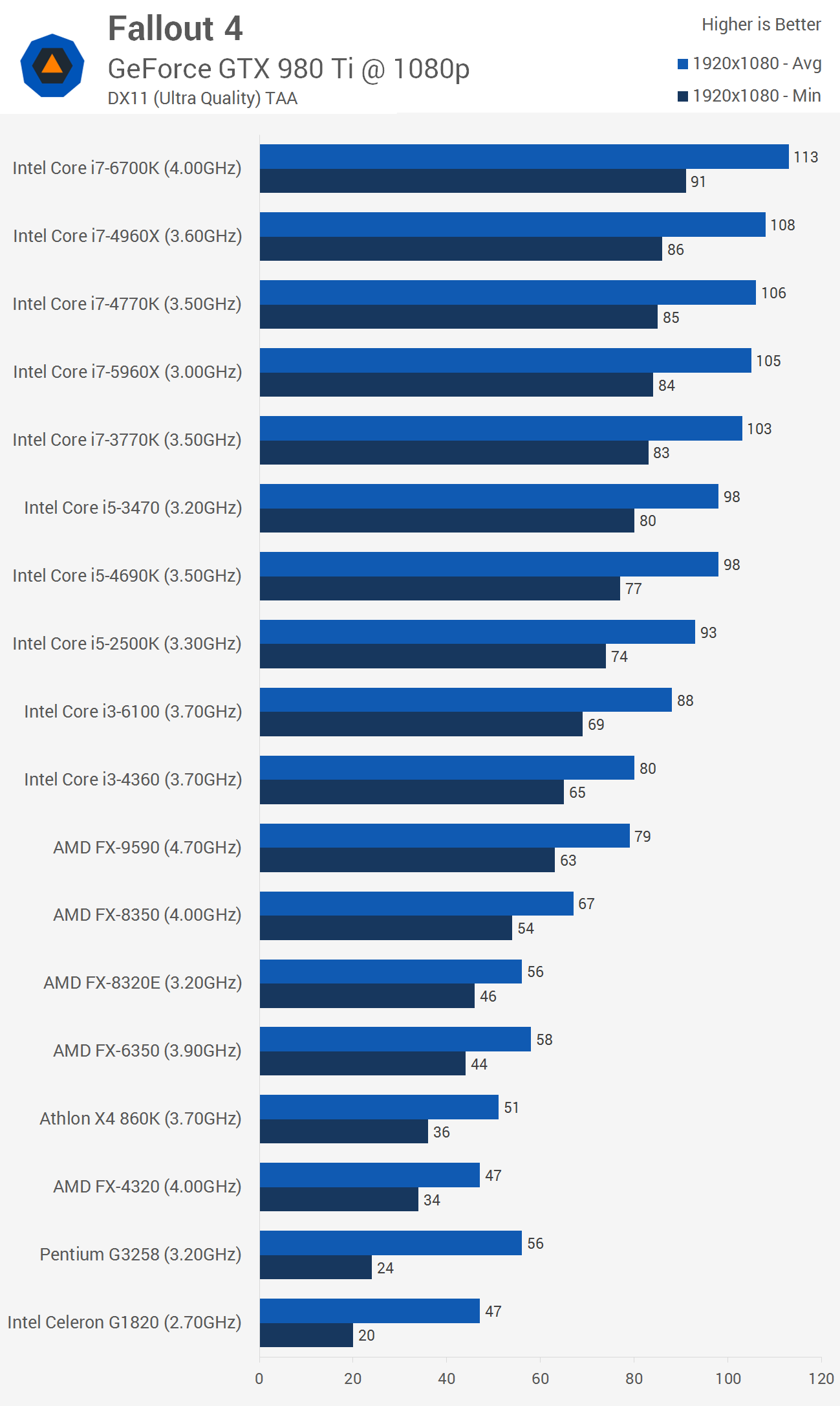

It is laughable that you show Fallout 4 to claim that the bigger L3 on the 5960X is what puts it on top, when it is known that Fallout 4 loves memory bandwidth.

Show me one benchmark which shows Fallout 4 benefiting from increased bandwidth. All the benchmarks show Fallout 4 benefiting from memory speed!

X99 has an inherent advantage here because quad-channel support means that even with lower frequency memory, you can get more bandwidth from it compared to a dual-channel setup. This is what I mean when I say that Fallout 4 loves memory bandwidth - because ultimately this is what you are changing by getting faster RAM or moving to X99. I suspect that it may be sensitive to latency as well.
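To put rough numbers on the quad-channel point, here is a minimal sketch of theoretical peak bandwidth, assuming a 64-bit (8-byte) bus per channel as on DDR3/DDR4. The DDR4-2133 and DDR4-3200 speeds are illustrative picks, not figures from any benchmark in this thread, and `peak_bandwidth_gbs` is a made-up helper name.

```python
def peak_bandwidth_gbs(transfer_rate_mts, channels, bus_bytes=8):
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes).

    transfer_rate_mts: rated speed in megatransfers per second (e.g. 2133).
    channels: number of populated memory channels.
    bus_bytes: bytes moved per transfer per channel (assumed 8, i.e. 64-bit).
    """
    return transfer_rate_mts * 1e6 * bus_bytes * channels / 1e9


# Illustrative configurations (assumptions, not measured results).
quad_2133 = peak_bandwidth_gbs(2133, channels=4)
dual_3200 = peak_bandwidth_gbs(3200, channels=2)

print(f"quad-channel DDR4-2133: {quad_2133:.1f} GB/s")
print(f"dual-channel DDR4-3200: {dual_3200:.1f} GB/s")
```

These are ceilings on paper only. Real-world throughput is lower, and this says nothing about whether a given game can actually use the extra headroom.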

You have no idea whether Fallout 4 loves memory bandwidth, that's just an assumption. No game that I know of, including Fallout 4, responds to the huge amounts of memory bandwidth afforded by the X99 platform. On the other hand, it responds to memory speed, which is measured in nanoseconds and rated in cycles. This is proven over and over again by many benchmarks.

Also, the 5960X's 20MB L3 cache would reduce the game's dependence on faster RAM compared to CPUs with smaller amounts of L3 cache.

Faster memory implies more bandwidth, as long as you don't loosen the timings.
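The timings caveat can be sketched with the usual CAS arithmetic: a DDR module's clock is half its MT/s rating, so CAS latency in nanoseconds is the CL cycle count divided by that clock. The kits below are hypothetical examples, `cas_latency_ns` is a made-up helper name, and CAS is only one component of total memory latency.

```python
def cas_latency_ns(transfer_rate_mts, cas_cycles):
    """CAS latency in nanoseconds for a DDR module.

    DDR transfers twice per clock, so the clock in MHz is half the MT/s rating.
    """
    clock_mhz = transfer_rate_mts / 2
    return cas_cycles / clock_mhz * 1000


# Hypothetical kits (assumptions for illustration only).
slow_kit = cas_latency_ns(2133, 15)    # DDR4-2133 CL15
fast_tight = cas_latency_ns(3200, 16)  # DDR4-3200 CL16
fast_loose = cas_latency_ns(3200, 22)  # DDR4-3200 CL22, loosened timings

print(f"DDR4-2133 CL15: {slow_kit:.2f} ns")
print(f"DDR4-3200 CL16: {fast_tight:.2f} ns")
print(f"DDR4-3200 CL22: {fast_loose:.2f} ns")
```

Under these assumptions the faster kit has both more bandwidth and lower CAS latency, which is why a benchmark that only varies memory speed cannot, by itself, separate the two effects.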

It does, but how do you know that it is benefiting from bandwidth and not from speed?

Oh, you still haven't proved how more than 8MB of L3 affects performance, apart from repeating a textbook statement. I showed you graphs where 2, 4, 6, and 8MB of L3 had an effect, albeit with diminishing returns, proving that it matters more when you have a low amount of it to begin with. Do your part and show me something similar for larger L3.

I shouldn't have to prove anything. This should be common knowledge among computer enthusiasts. More L3 cache reduces a CPU's dependence on system memory performance, because the CPU can keep more data locally rather than having to go all the way to system memory, which takes time. Also, the bandwidth and latency afforded by the L3 cache are superior to those of any system memory architecture, regardless of how fast it is.

And there are no benchmarks showing the effect of L3 cache size, because there is no way to disable the L3 cache, or parts of it.