If you cared to listen to NVIDIA's 2016 GDC talk where they talk about how developers should implement DCLs, then it would give you the context to properly understand what the OP video is talking about.

Hmm, here are their technical talks for GDC 2016, and I don't see anything about DCLs, at least not for DX11. In the interest of time, could you point it out?

NVidia GDC 2016

I don't even... One moment you say it scales because of cache, the very next moment you say it scales to six threads. So going from the 6850K, 6900K, and 6950X, the bigger change according to you is the cache and not the additional cores?

Deep breaths! If you read my post, I mentioned that it was influenced by L3 cache, and also that it scaled to six threads. But the fact that it scales to six threads is nothing remarkable in and of itself.

Several game engines will use six threads, or even more.

Show me benchmarks where going from 8MB L3 to 25MB L3 and beyond has more than a 1-2 fps difference in performance.

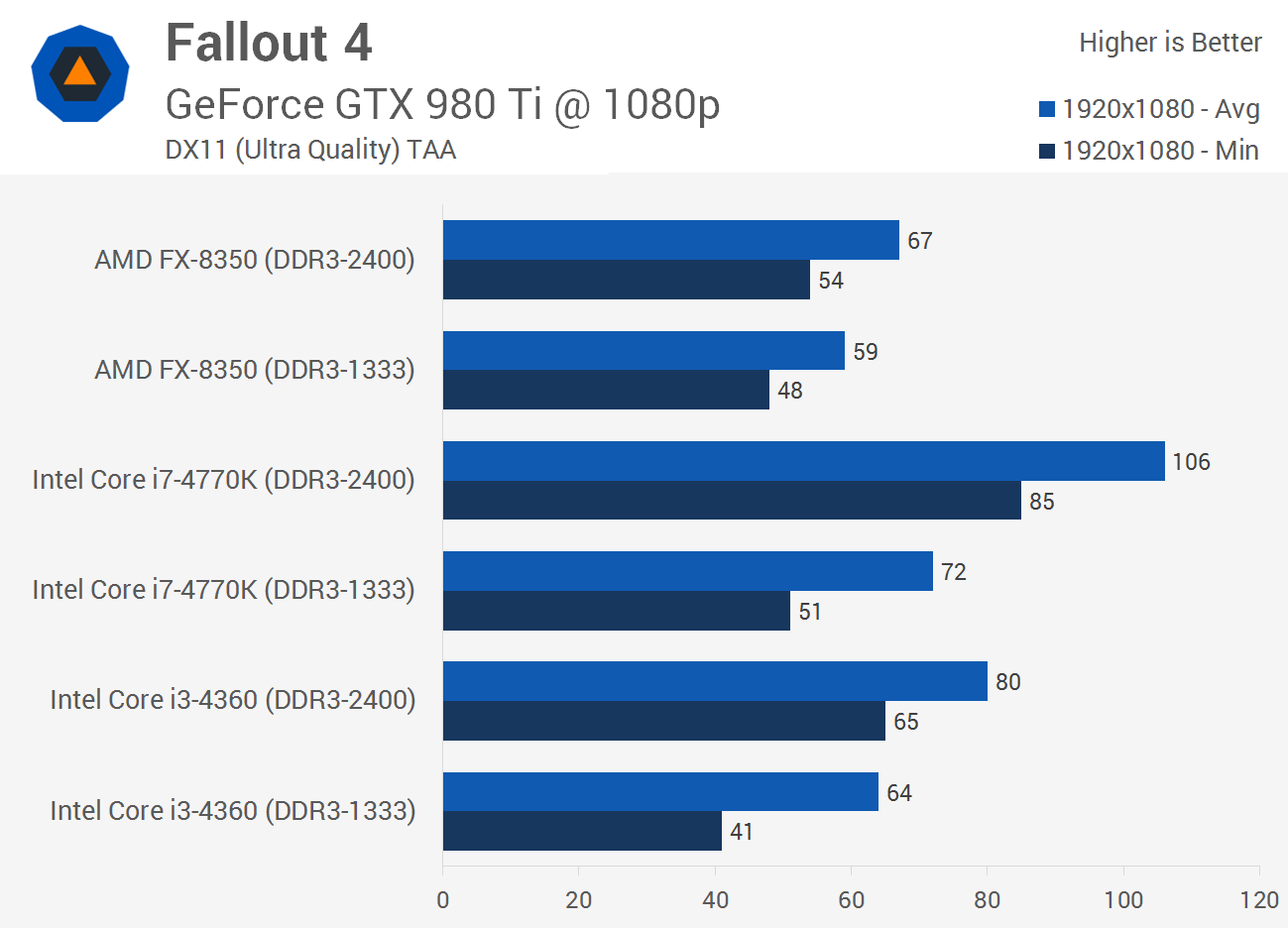

Not hard to find one. Fallout 4 responds well to L3 cache size, which is why the 5960x sits atop this benchmark chart:

I have a better source - I don't know if you deliberately chose to ignore it in my original post or simply forgot about it.

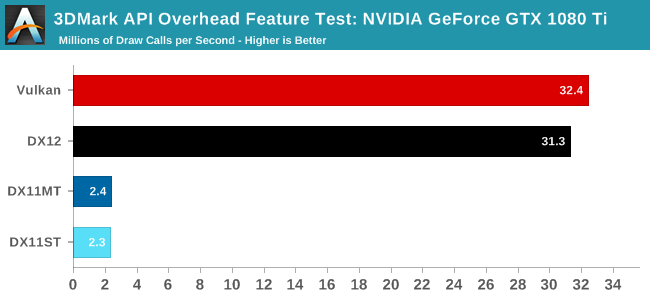

That's for draw call submissions. DX12 uses a single thread for draw call submissions as well. The command lists are recorded on multiple threads, but the final submission to the GPU is serial.
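To illustrate the record-in-parallel, submit-serially pattern, here's a rough sketch. It is not Direct3D code: `record`, `record_and_submit`, and the list standing in for the GPU queue are all made-up names, and the "draw calls" are just strings.

```python
import threading


def record(command_list, worker, draws):
    """Record `draws` fake draw calls into this worker's private list."""
    for i in range(draws):
        command_list.append(f"draw {worker}.{i}")


def record_and_submit(workers, draws):
    """Record on several threads, then submit everything from one thread."""
    # One private command list per worker, so recording needs no locking.
    lists = [[] for _ in range(workers)]
    threads = [
        threading.Thread(target=record, args=(lists[w], w, draws))
        for w in range(workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    # Hypothetical stand-in for the GPU queue: only the calling thread
    # touches it, and the lists go in one after another.
    gpu_queue = []
    for command_list in lists:
        gpu_queue.extend(command_list)
    return gpu_queue
```

The recording work spreads across threads, but the order the queue sees is decided at the single submission step.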

Well, for starters, here is an example of the task-based parallelism in effect. The game is very well threaded in itself. Unlike in the case of AMD, where the physics engine updating the rendering engine plus drivers causes GPUs to take a hit, NVIDIA's drivers are doing their job here.

Once again, the physics engine is CPU based in Project Cars. It shouldn't have any effect on the ability of the CPU to issue draw calls unless you're using a weak CPU like a dual core without SMT or something.

AMD's problems with Project Cars are likely just due to overall driver inefficiency. BTW, Project Cars does support DX11 multithreading, but it was disabled by default the last time I checked.

Why don't you also take a look at Broadwell-E scaling in the same chart?

I have been looking at it. Because the test was conducted so poorly, it's difficult to tell whether it's from L3 cache size or threads. I suspect cache size and architecture are playing a bigger role here than thread count, because the 6700K is ahead of the 6850K.

Here's a better example of CPU scaling in Project Cars. As you can see, it doesn't use more than four threads... as I suspected.

Look at the charts again before making this erroneous conclusion.

How about you look at my new chart?

In hindsight, Project Cars might not have been the best example. Given that you mention TCGR:W for the umpteenth time, I had a look at The Division instead -

You really seem to have a liking for Tom's Hardware, I must say, even though they aren't that good.

Tell me why there is reverse scaling with the number of cores on the Broadwell-E processors, and why the 6700K tops them all.

For God's sake man, stop using Tom's Hardware as a source!

It's obvious that the game is GPU bound in that benchmark. First off, doing a CPU scaling test at 1440p is insanely stupid. To do a proper CPU scaling test, you should run the benchmark at a much lower resolution to keep things CPU bound.
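A back-of-the-envelope sketch of why resolution matters here, assuming the simplification that the slower of the CPU and GPU sets the frame time. The function name and all the millisecond figures are made up for illustration, not measurements from any benchmark.

```python
def fps(cpu_ms, gpu_ms):
    """Frame rate when the slower of CPU and GPU sets the frame time."""
    return 1000.0 / max(cpu_ms, gpu_ms)


# Made-up per-frame costs in milliseconds, for illustration only.
fast_cpu_ms, slow_cpu_ms = 5.0, 8.0
gpu_1440p_ms, gpu_720p_ms = 12.0, 4.0

# At 1440p the GPU is the limit, so both CPUs land on the same frame rate.
same_at_1440p = fps(fast_cpu_ms, gpu_1440p_ms) == fps(slow_cpu_ms, gpu_1440p_ms)

# At 720p the CPU is the limit, so the gap between the CPUs shows up.
gap_at_720p = fps(fast_cpu_ms, gpu_720p_ms) - fps(slow_cpu_ms, gpu_720p_ms)
```

Under this toy model, any CPU difference is hidden whenever the GPU cost per frame is the larger number.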

The Division scales to about six threads if I recall, so if the 6700K is ahead (and only by 1 FPS), that's just margin of error in a GPU-bound test.

If you want to see some real CPU scaling tests on The Division, look at PCgameshardware.de