https://www.youtube.com/watch?v=nIoZB-cnjc0&feature=youtu.be

Summary:

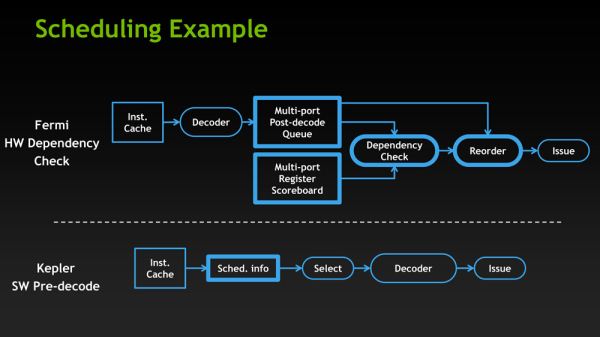

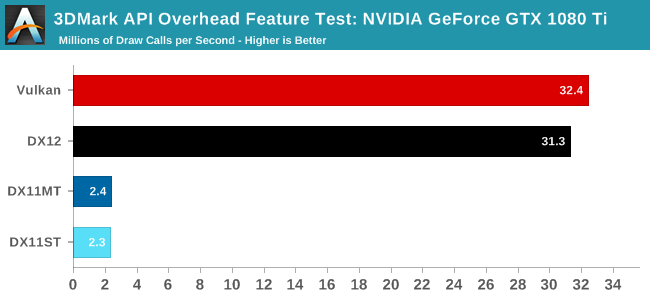

Under DX11, draw calls are generally issued from a single thread. Nvidia's DX11 driver uses a software scheduler to take a game's single-threaded DX11 draw calls and split them across multiple cores. This adds CPU overhead across all cores, but it often improves performance because the game no longer runs into a single-threaded bottleneck.
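To make the idea concrete, here is a minimal Python sketch of a driver-side software scheduler. It assumes a simplified model in which the driver receives one thread's stream of draw calls, cuts it into contiguous chunks, translates each chunk on a worker thread, and reassembles the results in the original submission order. `DrawCall`, `translate_chunk`, `split_into_chunks` and `driver_submit` are hypothetical names, not part of Nvidia's driver or the D3D11 API. Also note that CPython's GIL keeps this toy translation from truly running in parallel, so it illustrates the structure only.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class DrawCall:
    # Hypothetical stand-in for a single D3D11 draw (mesh id + vertex count).
    mesh_id: int
    vertex_count: int


def translate_chunk(chunk):
    # Hypothetical "driver work": turn each draw call into a GPU command token.
    # Real drivers also validate state, track resources, etc.
    return [f"DRAW mesh={dc.mesh_id} verts={dc.vertex_count}" for dc in chunk]


def split_into_chunks(calls, n):
    # Contiguous slices, so the original submission order can be restored.
    size = max(1, -(-len(calls) // n))  # ceiling division
    return [calls[i:i + size] for i in range(0, len(calls), size)]


def driver_submit(calls, workers=4):
    """Translate one thread's draw calls on several worker threads,
    then concatenate the results in the original order."""
    chunks = split_into_chunks(calls, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, not completion order.
        translated = list(pool.map(translate_chunk, chunks))
    return [cmd for chunk in translated for cmd in chunk]


if __name__ == "__main__":
    frame = [DrawCall(mesh_id=i, vertex_count=3 * (i + 1)) for i in range(10)]
    commands = driver_submit(frame, workers=4)
    print(len(commands))  # 10
    print(commands[0])    # DRAW mesh=0 verts=3
```

Keeping the chunks contiguous and using `pool.map` is what preserves draw order here; a real scheduler has to guarantee the same thing, since the GPU must see the draws in the order the game issued them.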

AMD's GCN architecture uses a hardware scheduler and cannot take a game's single-threaded DX11 draw calls and split them across multiple cores.

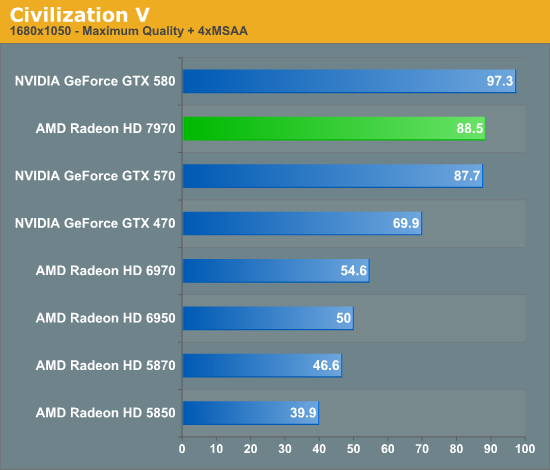

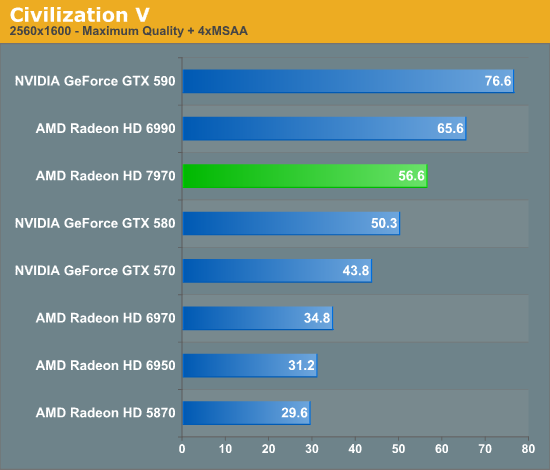

As a result, in games that place heavy game logic and draw calls on a single thread, AMD performance suffers while Nvidia performance does not.

However, in games that are multithreaded, with draw calls dedicated to one core and game logic spread across the other cores, AMD can pull ahead of Nvidia at a similar GPU tier (e.g., RX 480 vs. GTX 1060), because Nvidia's software scheduler incurs CPU overhead across multiple cores to split the draw calls.
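The trade-off described in the last two paragraphs can be checked with a toy cost model. This is a minimal sketch under stated assumptions: the CPU-bound frame time equals the busiest core's workload; the driver either leaves all draw-call work on the render thread (the AMD DX11 case described above) or spreads it evenly over every core with an added overhead fraction (the Nvidia case); and all inputs are made-up milliseconds chosen for illustration, not measurements. `cpu_frame_time` and its parameters are hypothetical.

```python
def cpu_frame_time(logic_main, logic_other, draw, cores, driver_splits, overhead=0.0):
    """Toy model (hypothetical): CPU-bound frame time in ms = busiest core's load.

    logic_main:    game logic running on the render (main) thread
    logic_other:   game logic spread evenly over the remaining cores
    draw:          draw-call submission work produced by the render thread
    driver_splits: True if the driver spreads draw work over all cores
                   (software scheduling, the Nvidia case in the post);
                   False if draw work stays on the render thread (AMD DX11)
    overhead:      extra fraction of draw work caused by splitting (0.5 = +50%)
    """
    if cores < 2:
        raise ValueError("model assumes at least 2 cores")
    other = logic_other / (cores - 1)
    if driver_splits:
        share = draw * (1.0 + overhead) / cores  # every core gets an equal slice
        render = logic_main + share
        other += share
    else:
        render = logic_main + draw  # all draw work stays on one core
    return float(max(render, other))


if __name__ == "__main__":
    # Invented numbers: 4 cores, +50% overhead when the driver splits draws.
    # Case 1: logic and draws piled onto one thread.
    print(cpu_frame_time(8, 2, 8, 4, driver_splits=False))                # 16.0
    print(cpu_frame_time(8, 2, 8, 4, driver_splits=True, overhead=0.5))   # 11.0
    # Case 2: render thread only issues draws; logic spread over other cores.
    print(cpu_frame_time(0, 24, 6, 4, driver_splits=False))               # 8.0
    print(cpu_frame_time(0, 24, 6, 4, driver_splits=True, overhead=0.5))  # 10.25
```

With these invented inputs the single-thread-heavy case favors driver-side splitting (11 ms vs. 16 ms), while the well-threaded case favors keeping draws on one core (8 ms vs. 10.25 ms). Where the crossover falls depends entirely on the assumed overhead and workload split.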
