A number of people said they wanted a technical discussion about ray tracing and hardware ray tracing, but I don't see anyone starting one. So let's get into it and put a nail in this coffin.

Caustic Graphics was one of the first companies to implement hardware ray tracing, a DECADE ago.

https://www.crunchbase.com/organization/caustic-graphics#section-overview

Here are the details: they operated on $3 million of funding from 2006 to 2010 and were acquired by Imagination Technologies for $21 million in 2010.

WHERE'S THE BILLION-DOLLAR R&D BUDGET? Ten years of R&D work... and it's nowhere to be seen.

Why? Because all hardware ray tracing amounts to is a bunch of ALUs performing vector math in parallel, with handler logic for convergence and divergence, operating on a BVH (bounding volume hierarchy). It's a cheap and dumb ASIC.
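To make "vector math plus control logic on a BVH" concrete, here is a minimal Python sketch of the two pieces: the standard slab test for a ray against an axis-aligned bounding box (AABB), and a stack-based walk that culls whole subtrees whose boxes the ray misses. The `Node` layout and the function names are hypothetical, chosen for illustration; hardware vendors (Nvidia included) don't publish their node formats or traversal order, so treat this as the textbook technique, not anyone's actual implementation.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    """Hypothetical BVH node: an axis-aligned box plus either children or leaf primitives."""
    lo: Vec3                                              # minimum corner of the box
    hi: Vec3                                              # maximum corner of the box
    children: List["Node"] = field(default_factory=list)  # empty for a leaf
    prims: List[int] = field(default_factory=list)        # triangle ids stored in a leaf


def hit_aabb(orig: Vec3, direction: Vec3, lo: Vec3, hi: Vec3, t_max: float) -> bool:
    """Slab test: shrink the ray's [t0, t1] interval against each axis' pair of planes."""
    t0, t1 = 0.0, t_max
    for a in range(3):
        if direction[a] == 0.0:
            # Ray is parallel to this slab: it can only hit if the origin lies inside it.
            if orig[a] < lo[a] or orig[a] > hi[a]:
                return False
            continue
        inv = 1.0 / direction[a]
        ta = (lo[a] - orig[a]) * inv
        tb = (hi[a] - orig[a]) * inv
        if ta > tb:
            ta, tb = tb, ta
        t0, t1 = max(t0, ta), min(t1, tb)
        if t0 > t1:
            return False  # intervals no longer overlap: the ray misses the box
    return True


def traverse(root: Node, orig: Vec3, direction: Vec3, t_max: float = math.inf) -> List[int]:
    """Collect ids of primitives in leaves whose boxes the ray hits (candidates for the exact test)."""
    candidates: List[int] = []
    stack = [root]
    while stack:
        node = stack.pop()
        if not hit_aabb(orig, direction, node.lo, node.hi, t_max):
            continue  # cull the whole subtree
        if node.children:
            stack.extend(node.children)
        else:
            candidates.extend(node.prims)
    return candidates


# Usage: a root box split into two halves along x.
left = Node((0.0, 0.0, 0.0), (5.0, 10.0, 10.0), prims=[0, 1])
right = Node((5.0, 0.0, 0.0), (10.0, 10.0, 10.0), prims=[2])
root = Node((0.0, 0.0, 0.0), (10.0, 10.0, 10.0), children=[left, right])
print(traverse(root, (1.0, 5.0, -1.0), (0.0, 0.0, 1.0)))  # -> [0, 1]
```

Each box test is a handful of subtracts, multiplies, and min/max compares per axis, the sort of work that maps naturally onto fixed-function ALUs; the stack push/pop is the "control logic".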

https://www.anandtech.com/show/2752

The major differences with this hardware will be the replacement of the FPGAs with ASICs (application-specific integrated circuits: silicon chips like a CPU or a GPU). This will enable an estimated additional 14x performance improvement, as ASICs can run much faster than FPGAs. We could also see more RAM on board. This would bring the projected performance to over 200x the speed of current CPU-based ray tracing.

Imagination Technologies then integrated this tech into its PowerVR line:

https://www.imgtec.com/legacy-gpu-cores/ray-tracing/

I can almost guarantee that Nvidia isn't doing anything radically different, because it's a standardized algorithm. I could implement this in Verilog and have it running on an FPGA dev board in a couple of weeks. It's parallelized convergent and divergent vector math. From the horse's mouth:

https://www.zhihu.com/question/290167656

The RT core essentially adds a dedicated pipeline (ASIC) to the SM to compute ray-triangle intersections, and it can access the BVH. Because it is dedicated ASIC circuit logic, its intersection performance per mm² can be an order of magnitude better than computing the intersection in shader code. Although I have left NV, I was involved in the design of the Turing architecture; I was responsible for variable rate shading. I am excited to see it released now.

Author: Yubo Zhang
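The quote describes the RT core's job as ray-triangle intersection. Nvidia doesn't document which test its hardware uses, so as a stand-in here is the widely used Möller-Trumbore algorithm in Python, a sketch under that assumption: two cross products, a few dot products, and range checks on the barycentric coordinates `u` and `v`. The helper names are made up for this example.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(orig: Vec3, d: Vec3, v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore: return the hit distance t along the ray, or None on a miss."""
    e1, e2 = sub(v1, v0), sub(v2, v0)   # triangle edges sharing vertex v0
    p = cross(d, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None                     # ray is (nearly) parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv_det             # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(d, q) * inv_det             # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det            # distance along the ray
    return t if t > eps else None       # reject hits behind the origin


tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(ray_triangle((0.25, 0.25, 1.0), (0.0, 0.0, -1.0), *tri))  # -> 1.0
```

In a full tracer this runs only on the candidate triangles that survive the BVH box tests, which is why the two operations are natural to pair in one fixed-function unit.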

This isn't anything complicated, and neither are tensor cores, which is why Google was able to whip up its own ASICs practically overnight, as others are doing.
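For tensor cores, the operation Nvidia describes for Volta is a fused multiply-accumulate on small matrix tiles: D = A × B + C on 4×4 matrices. A minimal Python sketch of that operation, and of a larger matrix product built by tiling it, looks like this. It uses plain Python floats and ignores the FP16/FP32 mixed precision real tensor cores use; the function names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]
TILE = 4  # Volta-style tensor cores operate on 4x4 tiles


def mma_4x4(a: Matrix, b: Matrix, c: Matrix) -> Tuple[Matrix, int]:
    """D = A x B + C on one 4x4 tile; also count the fused multiply-adds performed."""
    d = [row[:] for row in c]           # start from the accumulator C
    fmas = 0
    for i in range(TILE):
        for j in range(TILE):
            for k in range(TILE):
                d[i][j] += a[i][k] * b[k][j]
                fmas += 1
    return d, fmas


def matmul_tiled(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an m x k matrix by a k x n one (all dims multiples of 4) using only mma_4x4."""
    m, k, n = len(a), len(b), len(b[0])
    out = [[0.0] * n for _ in range(m)]
    for i0 in range(0, m, TILE):
        for j0 in range(0, n, TILE):
            acc = [[0.0] * TILE for _ in range(TILE)]
            for k0 in range(0, k, TILE):
                a_tile = [row[k0:k0 + TILE] for row in a[i0:i0 + TILE]]
                b_tile = [row[j0:j0 + TILE] for row in b[k0:k0 + TILE]]
                acc, _ = mma_4x4(a_tile, b_tile, acc)   # accumulate along k
            for i in range(TILE):
                out[i0 + i][j0:j0 + TILE] = acc[i]
    return out


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
b = [[float(4 * i + j) for j in range(4)] for i in range(4)]
d, fmas = mma_4x4(identity, b, [[0.0] * 4 for _ in range(4)])
print(d == b, fmas)  # -> True 64
```

The 64 multiply-adds per tile are independent except for the accumulation along `k`, which is what lets hardware do them all in parallel.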

So there's your technical discussion. A time-tested vector-math algorithm is not complicated: slap some ALUs together with control logic, registers, and memory.

The people who came up with the algorithm and refined it are the ones who truly deserve praise, like the researcher who got a mention in the presentation. Stamping this into an ASIC is child's play. So what every discussion is really about IS THE PRICE and the performance... because Nvidia didn't create the algorithm, and they weren't the first to put ray tracing into hardware or ASIC form. That was done over a decade ago, and I don't hear anyone praising the small group that did it on a much smaller budget, because people don't actually care about the technical accomplishment. You want to pretend you do, but you don't.

So, just about everyone whose a consumer of this product on just about every site on the internet agrees : This pricing is absurd. From the /r/nvidia green team members to prominent hardware reviewers, everyones aghast at the price and they have every reason to be. Nvidia can chock it up to die size like intel does (Well guess what...

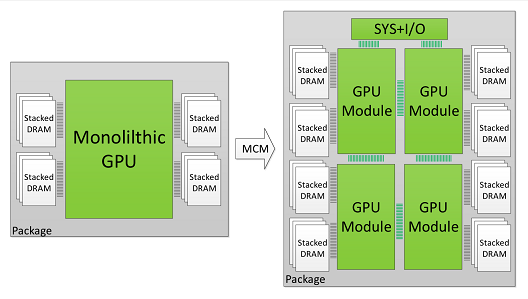

Go MCM)... However, that's a logistic problem they need to address and should address. No one forced them to do what they're doing. Intel is facing similar pains because of this more costly approach.
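To put rough numbers on the die-size argument, here is a back-of-the-envelope Python sketch using two standard textbook approximations: the gross dies-per-wafer formula with an edge-loss term, and the Poisson yield model Y = exp(-A·D0). The defect density and die areas below are made-up illustrative values, not Nvidia's or any foundry's figures, and the model ignores MCM packaging and interconnect costs as well as the salvaging of partially defective dies into cut-down SKUs.

```python
import math

WAFER_DIAMETER_MM = 300.0


def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = WAFER_DIAMETER_MM) -> int:
    """Gross dies per wafer: wafer area / die area, minus a correction for partial dies at the edge."""
    r = wafer_diameter_mm / 2.0
    gross = math.pi * r * r / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return int(gross - edge_loss)


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under the Poisson model Y = exp(-A * D0)."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)


def products_per_wafer(die_area_mm2: float, dies_per_product: int, defects_per_cm2: float) -> float:
    """Expected complete products per wafer when each product needs `dies_per_product` good dies."""
    good = dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, defects_per_cm2)
    return good / dies_per_product


D0 = 0.1  # hypothetical defect density, defects per cm^2
monolithic = products_per_wafer(750.0, 1, D0)  # one hypothetical ~750 mm^2 die
chiplets = products_per_wafer(190.0, 4, D0)    # four hypothetical ~190 mm^2 chiplets
print(f"monolithic: {monolithic:.1f} per wafer, 4-chiplet MCM: {chiplets:.1f} per wafer")
```

With these made-up inputs the chiplet layout gets roughly twice as many complete products out of a wafer as the monolithic die, because yield falls exponentially with die area; real packaging overhead eats into that margin.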

Then comes the psychology of it all. You're launching an unproven technology that you need to sell people on, yet acting as if you don't care whether people adopt it... This is how big, great tech companies begin to falter, and it's why it's always good that new ones are allowed to enter. This is sheer arrogance, IMO. They didn't create the algorithms underlying AI, but they stamp them into ASICs (tensor cores) and try to gouge the hell out of people. They didn't create the ray tracing algorithms, but they stamp them into ASICs (RT cores) and try to gouge the hell out of people. This is exactly how and why someone comes out of left field and kicks a yuge, successful company in the nuts.

This is why I firmly believe we are headed toward a democratized accelerator-card architecture, with a high-speed standardized bus network connecting the cards. AMD is preparing for this with Heterogeneous System Architecture (HSA). The platform must be democratized at this point. Technology is currently chained to the ground because greedy, myopic companies want to preserve their legacy pricing models.

- PCIe switches need to come out of the enterprise and onto the desktop.

- PCIe 4.0 needs to become a thing, pronto.

- A new, lower-latency, open-standard bus protocol needs to become a thing.

- The CPU should become a high-level processing router.

The age of dedicated, swappable accelerator cards needs to become a reality, and this ridiculous desire to silo compute into proprietary domains needs to be broken.

So I chalk this up as a grand miscalculation on Nvidia's part. They just alienated the whole gaming community, which is already on high alert and still reeling from the virulent price gouging that occurred during the crypto mania. No one came to gamers' defense or helped them. Everyone denied culpability while they profited from the cancerous plague that wasted Earth's resources on a Ponzi scheme. You think gamers forgot about this? The extortionate pricing? The whole chain of profiteers denying why it persisted? Selling thousands of gaming GPUs directly to miners while shelves lay bare? The free-for-all at the consumer's expense?

And you repay them with this? Slapping a decade-old, proven technology into your pipeline with no performance data and memecoin-level prices? Then letting it simmer for a month while virulent marketing pokes and prods your consumers? Go over to /r/nvidia. Tons of people woke up today and cancelled their pre-orders when they saw the horrid performance numbers in the Tomb Raider demo, and they were pissed about it. You call this good marketing? The psychological mind-@#%! that companies pull with viral product launches has to stop. It's long in the tooth, and people are over it. If you're going to announce and launch a product, get your grown arse on stage, detail the product like an adult with the metrics people want to see, and call it a day.

Steve Jobs pioneered a particularly interesting launch format, and it was apt for the time, the era, and the magnitude of the product. After that innovator came the iterator, and now the idiot. Enough is enough with this hot garbage.

They could have humbly announced a bold new feature they hope will change gaming and compute for ages, and launched with the lowest-end card first. Solidify a user base. Solidify buy-in. Let everyone know that this is a ship that aims to usher everyone to the next level in computing. See what shapes up. See what unique ways people use the new cores. Announce the SDK and make all the features available at every price point. See what comes of it. Let your consumers create new markets for you.

Instead, they jawboned this at extortionate prices and walked off stage as if everyone has to buy it. Word on the street is that people clearly don't have to, and they aren't sold on it. In fact, they're offended at being treated like this.

Meanwhile, game makers are inserting annoying, heavily charged political propaganda into every game they sell, doing everything they can to piss off their user base, and then telling them: if you don't like it, don't buy the game. This is the peak arrogance of a chapter in technology that is soon going to be slammed shut.

Good riddance. I want new ideas. New companies and new products. This is why everything in this universe is cyclical. It affords a chance for a cleansing to occur when a wave has jumped the shark.