PhonakV30

Senior member

- Oct 26, 2009

I've seen in the 1060 reviews that Nvidia is ready to bite its own finger off if that means AMD loses an arm. They're willing to sacrifice a bit of performance by going from Vulkan back to OpenGL, just to make AMD cards suffer a bigger performance loss.

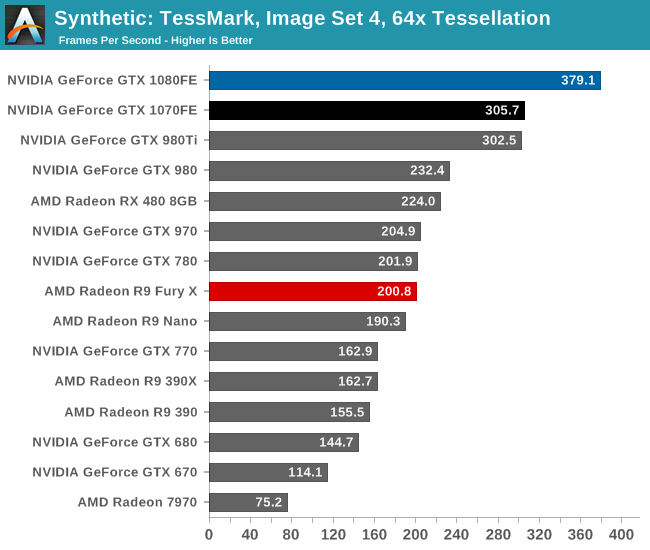

They will kill AMD with tessellation and render previous Nvidia generations obsolete with preemption.

They can't kill the RX 480 with tessellation.