Is this relevant for consumer loads like office apps and games? If so, how much?

I posted some gaming benchmarks in this thread a while back, but I can't find them. Thankfully I keep the BBcode of anything somewhat important, so here it is. All in all, gaming is mostly unaffected. I haven't tested with the 1.60 BIOS though. As for office apps, I haven't noticed anything. The 8600k is too fast for those anyway.

Hello everybody.

So I did some benchmarks before and after the KB4056892 patch, primarily on my Core i7-860, but also some quick tests on the i5-8600k. My 2500k will have to wait for a while, because I am running some other projects at the same time and I need to finish up with the i7-860.

This effort is completely hobbyist and must not be compared with professional reviews. It’s just that I believe no reviewer will actually take old systems into consideration, so that’s where I stepped in. There are some shortcomings in this test anyway, some of which are deliberate.

The test is not perfect because I have used mixed drivers. In some tests the pre- and post-patch runs used the same driver, in others the drivers were a couple of months apart, and in Crysis I used a year-old driver for the pre-patch run (it made no difference anyway). From my personal experience, newer drivers rarely bring any performance improvement. After the first couple of drivers for a game have come out, nothing of significance changes. Usually Nvidia’s Game Ready drivers are ready right at the game’s launch, and very few improvements come after that. Actually, it’s primarily the game patches that affect a game’s performance, which, granted, may need some reconsideration on the driver side. Still, the games I have used did not have that problem (except for one specific improvement that occurred in Dirt 4).

In this test, there are three kinds of measurements. First, I did the classic SSD benchmark, before and after the patch. Then I have gaming/graphics benchmarks, which consist of either the game's built-in benchmark or my custom gameplay benchmarks. Then I have World of Tanks Encore, which is a special category: it’s an automated benchmark, but it only produces a ranking number and I wanted fps data, so I used Fraps to gather framerate data while the benchmark was running.

In my custom gameplay benchmarks, I reran some of the previous benchmarks from my database, with the same settings, same location etc. Keep in mind that these are not 30-60 second runs, but runs several minutes long. I collected data with Fraps or OCAT, depending on the game.
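For anyone who wants to crunch their own before/after logs, here is a rough Python sketch of how a run can be boiled down to an average fps and a "1% low" figure and then compared across the patch. This is not the exact procedure behind the charts in this post. It assumes you have already extracted the per-frame durations in milliseconds from your Fraps or OCAT log, and the function names are made up for illustration.

```python
from statistics import mean


def summarize_frametimes(frametimes_ms):
    """Return (average fps, 1% low fps) from per-frame durations in ms.

    Assumption: the input is a list of individual frame durations,
    not cumulative timestamps.
    """
    if not frametimes_ms:
        raise ValueError("no frames recorded")
    total_seconds = sum(frametimes_ms) / 1000.0
    avg_fps = len(frametimes_ms) / total_seconds
    # 1% low here means: fps equivalent of the mean of the slowest 1% of frames
    # (at least one frame). Other tools define it slightly differently.
    slowest_first = sorted(frametimes_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    low_1pct_fps = 1000.0 / mean(slowest_first[:count])
    return avg_fps, low_1pct_fps


def percent_change(before, after):
    """Relative change from the pre-patch to the post-patch value, in percent."""
    return (after - before) / before * 100.0
```

Feed it the frame times of the pre-patch and post-patch runs separately, then pass the two averages (or the two 1% lows) to `percent_change`. A negative result means the post-patch run was slower.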

Please note that ALL post-patch benchmarks were run with the 390.65 driver on both the 1070+860 and 970+8600k configurations. This driver is not only the latest available, but also brings security updates regarding the recent vulnerabilities.

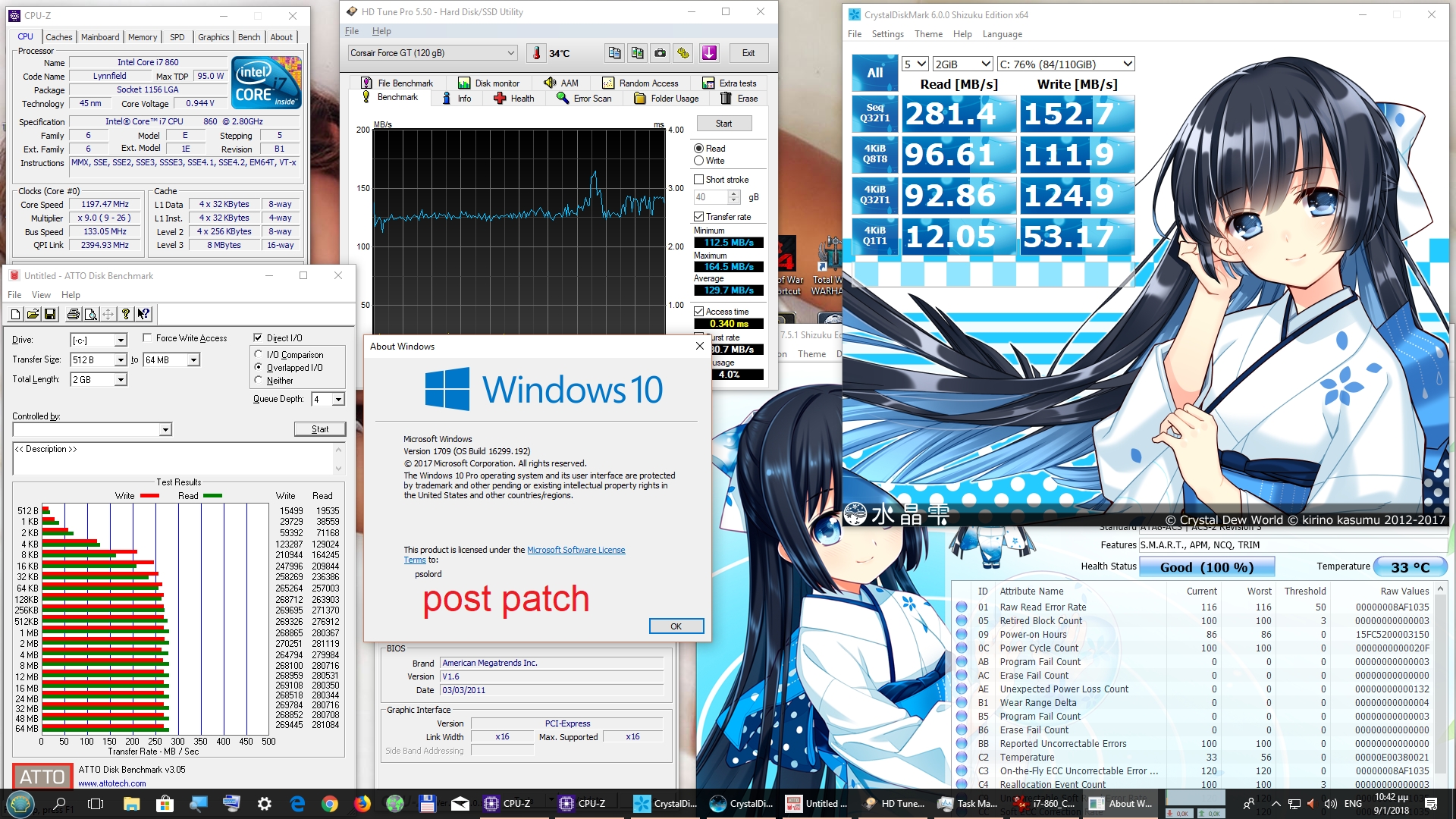

So let’s begin with the Core i7-860 SSD benchmarks. Please note that the SSD benchmarks on both systems were done at stock clocks. The 860’s SSD is an old but decent Corsair Force GT 120GB.

As you can see, there are subtle differences, nothing worth any serious worry imo. However, don’t forget that we are talking about an older SSD, which is also connected via SATA2, since this is an old motherboard as well.

Now let’s proceed to the automated graphics benchmarks, which were all done with the Core i7-860 @ 4GHz and the GTX 1070 @ 2GHz.

Assassin's Creed Origins 1920x1080 Ultra

Ashes of the Singularity 1920x1080 High

Crysis classic benchmark 1920x1080 Very High

F1 2017 1920x1080 Ultra

Unigine Heaven 1920x1080 Extreme

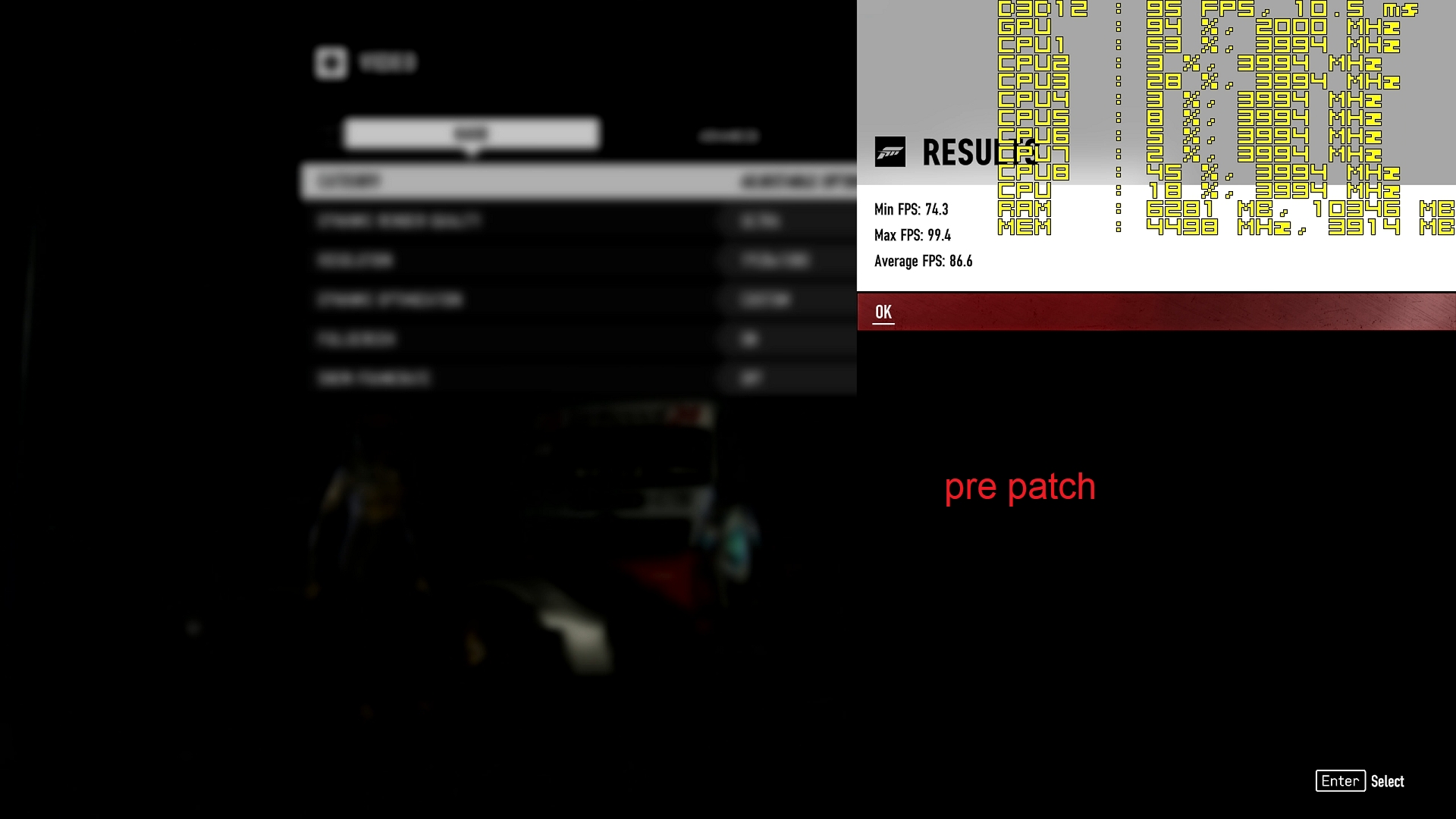

Forza Motorsport 7 1920x1080 Ultra