Please explain how the CPU and GPU are using anything close to 90 watts when the entire notebook is running on an 85 watt adapter.

First of all, that number was quoted by AnandTech, not me.

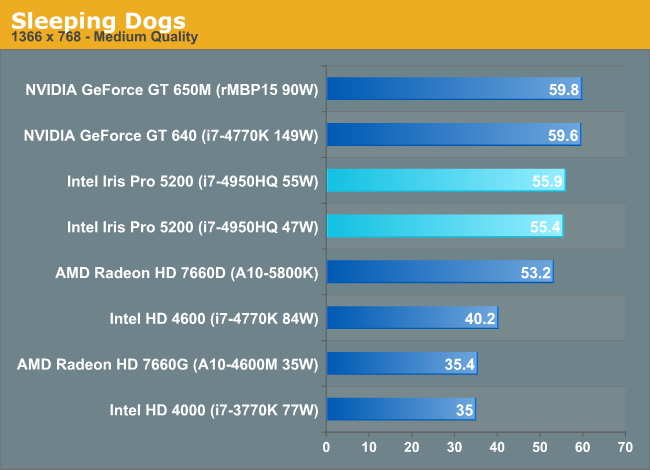

AnandTech said: The GT 650M is a 45W TDP part, pair that with a 35 - 47W CPU and an OEM either has to accept throttling or design a cooling system that can deal with both. Iris Pro on the other hand has its TDP shared by the rest of the 47W Haswell part. From speaking with OEMs, Iris Pro seems to offer substantial power savings in light usage (read: non-gaming) scenarios. In our 15-inch MacBook Pro with Retina Display review we found that simply having the discrete GPU enabled could reduce web browsing battery life by ~25%. Presumably that delta would disappear with the use of Iris Pro instead.

AnandTech said: With a discrete GPU, like the 650M, you end up with an extra 45W on top of the CPU’s TDP. In reality the host CPU won’t be running at anywhere near its 45W max in that case, so the power savings are likely not as great as you’d expect but they’ll still be present.

A regular GT650M has a 45W TDP, and Apple's GT650M (clocked higher than a 50W TDP GTX660M) likely draws even more power, contributing to the unimpressive 2.33 hour battery life under heavy workloads on the current 15" rMBP. Anand is very clear here: the current CPU + dGPU solution won't be running both parts at rated TDP simultaneously (hence your 85W power adapter), but it's still more power hungry than a single 47W chip would be. It doesn't take a genius to figure out that an Iris Pro could deliver better battery life; the question is how much.
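To put rough numbers on that, here is a quick back-of-the-envelope sketch in Python using only the TDP figures quoted above and the 85W adapter from the question. The variable and function names are mine, and TDP is a thermal design rating rather than measured draw, so treat the output as an on-paper upper bound and not as real consumption.

```python
# All figures come from the AnandTech quotes and the question above.
# TDP is a design ceiling, not measured power draw (assumption: we only
# compare on-paper ratings here).
GPU_TDP_W = 45               # regular GT 650M
CPU_TDP_RANGE_W = (35, 47)   # CPU range AnandTech pairs with the dGPU
IRIS_PRO_CHIP_TDP_W = 47     # Haswell part with Iris Pro, TDP shared
ADAPTER_W = 85               # MacBook Pro power adapter


def combined_tdp_range(cpu_range, gpu_tdp):
    """Return the (low, high) sum of CPU and discrete GPU rated TDP."""
    low, high = cpu_range
    return (low + gpu_tdp, high + gpu_tdp)


combined = combined_tdp_range(CPU_TDP_RANGE_W, GPU_TDP_W)
# On-paper gap between CPU + dGPU and the single 47W chip.
paper_gap = tuple(total - IRIS_PRO_CHIP_TDP_W for total in combined)

print("CPU + dGPU rated TDP:", combined, "W")
print("Exceeds 85W adapter at the top end:", combined[1] > ADAPTER_W)
print("On-paper gap vs. single 47W chip:", paper_gap, "W")
```

The top of that range sits above the adapter rating, which is consistent with Anand's point that both parts never run at full rated TDP at the same time. The real-world gap will be smaller than the on-paper one for the same reason.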
