Nemesis 1

Lifer

- Dec 30, 2006

- 11,366

- 2

- 0

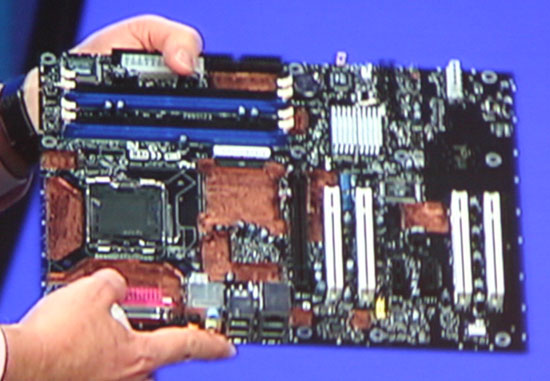

I am sure many would agree with ya on this, as at this moment I do also. But it's clear the trend is accelerating in the other direction. Because of space constraints in my water-cooling rig and the need for Blu-ray and more storage, I went with an Asus component enclosure with Blu-ray. I also went that direction with the storage for music and video. You also failed to mention Thunderbolt, which is easily daisy-chained. The parts are a bit high right now, but that will change in a year or 2. Intel developed it at their own cost, and AMD is allowed to use it. This is the way forward.

How many people want a computer smaller than a Shuttle type, with one or no PCIe slots, limited panel IO (there's just not room!), and then having to rely mainly on USB for every non-special-function device added after the first two SATAs? OK, now of those people, how many of them are willing to pay more than a standard ATX case scenario, to get less system flexibility? A small percentage of Apple PC customers, and anyone buying a notebook/tablet/etc.
