Can't use base frequencies because according to that Icelake is at half the clocks of Tigerlake, and we know that's not true.

3000 / 2300 = 1.304x, not 2.000x.

Discussion Intel current and future Lakes & Rapids thread

Page 410 - Seeking answers? Join the AnandTech community: where nearly half-a-million members share solutions and discuss the latest tech.

There is when it comes to motherboards. We've already seen problems with Z490 boards not supporting the 10900k (for instance) due to the high PL2 values and long tau periods. An OEM that wants to supply a bare minimum spec board for AM4 never has to worry about sustained power draw of more than 142W through the socket. And that's for a 16-core monstrosity. Plus we haven't seen what happens when Intel tries to dissipate that much power on 10nm on a desktop-sized die (40c Ice Lake-SP is massive . . . and 380W).

You would think so. But if that is the case, why is Intel shooting straight for 250W PL2? Is that what they have to do to beat AMD in some benchmarks? Ice Lake-SP is already pulling monstrous amounts of power while still losing benchmarks to competitor's server CPUs from 2019.

Ice Lake is not on 10SF/10ESF. Intel is also pushing Ice Lake to absurd limits in order to be competitive. Sapphire Rapids should give us a better picture of efficiency going forward.

Magic Carpet

Diamond Member

- Oct 2, 2011

- 3,477

- 234

- 106

But if that is the case, why is Intel shooting straight for 250W PL2? Is that what they have to do to beat AMD in some benchmarks?

Pretty much. During the Sandy/Ivy/etc. days it was Intel that intentionally limited the power consumption of its chips because their advantage was huge. Intel was great: I could use a picoPSU with a cheap board to run their fastest SKU without worrying about frying my board. Now things have turned around. Now I have to think, count and tinker!

Incorrect. None of the AM4 CPUs have a TDP over 105W. They have a PPT of 142W.

I know. Has the consensus around here changed about TDP not being equal to power consumption, though? Intel's PL2 has a 56-second default duration, which the AMD camp loves to see in reviews but then turns around and trashes when it comes to this sort of discussion. "PL2 x Forever" is not an Intel feature, and neither is unlocking power on AM4 boards, which actually pushes Zen 3 chips beyond the 142W PPT.

You can get 8 cores @ 65W. Ryzen 3700X, APUs, etc.

I stand corrected.

You would think so. But if that is the case, why is Intel shooting straight for 250W PL2? Is that what they have to do to beat AMD in some benchmarks? Ice Lake-SP is already pulling monstrous amounts of power while still losing benchmarks to competitor's server CPUs from 2019.

Part benchmarks, part these chips being marketed towards people who would just overclock anyway.

coercitiv

Diamond Member

- Jan 24, 2014

- 7,516

- 17,986

- 136

I know. Has the consensus around here changed about TDP is not equal to power consumption though? Intel's PL2 have a 56 second default duration which the AMD camp loves to see in reviews but then turn around and trash when it comes to these sort of discussion.

Personally, I fail to understand why you keep trying to trigger people into an AMD discussion in this thread. First the remark about the AMD 142W PPT power limit being somehow equivalent to Intel's PL1 limit, and now a vague remark that TDP is not equal to power consumption, combined with the gratuitous suggestion that the "AMD camp" uses the PL2 limit as the reference for Intel power consumption and PL1 as the reference for performance benchmarks. I guess this has to be done, so let's talk Intel vs. AMD power management.

Intel uses a combination of PL1 and PL2, a timer (Tau) and a temperature ceiling to determine boosting. This works well to extract the maximum amount of performance as early as possible, but has the small disadvantage of pushing temps to the limit with undersized cooling. Normally engineers tune the Tau timer on a specific OEM product to compensate, but DIY consumers do not. Overall, Intel's boosting algorithm is predictable and dependable, but it has grown old and cannot meet Intel's own expectations when it comes to extracting all the performance out of their CPUs, since the gap between PL2 and PL1 has grown so large that moving from one limit to the other leads to drastic changes in performance. Their ABT mechanic is a move in the right direction, but should have been used years ago across their entire lineup.
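To make the PL1/PL2/Tau interaction concrete, here is a toy simulation. It is only a sketch under stated assumptions: it assumes the package tracks an exponentially weighted moving average of power with time constant Tau and may draw up to PL2 while that average is below PL1. The 125 W, 250 W and 56 s figures are the ones quoted in this thread; the function name, the idle power and the 1-second step are hypothetical, and the temperature ceiling is ignored.

```python
import math

def simulate_boost(pl1=125.0, pl2=250.0, tau=56.0, idle=10.0, seconds=120, dt=1.0):
    """Toy model: draw PL2 while the moving average of power is below PL1."""
    alpha = 1.0 - math.exp(-dt / tau)   # EWMA weight for one time step
    avg = idle                          # assumption: the package starts near idle
    trace = []
    for _ in range(int(seconds / dt)):
        power = pl2 if avg < pl1 else pl1   # boost only while budget remains
        avg += alpha * (power - avg)        # update the running average
        trace.append(power)
    return trace

trace = simulate_boost()
boost_seconds = sum(1 for p in trace if p == 250.0)
print(f"time spent at PL2: {boost_seconds} s, final draw: {trace[-1]:.0f} W")
```

In this simplified model the chip sits at PL2 for a while and then drops straight to PL1, which is the abrupt step in performance the post complains about when the gap between the two limits is large.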

AMD uses a combination of PPT and target temperature to determine boosting behavior. In a normal usage environment it is actually the temperature readings that dictate average power consumption and NOT the PPT limit. This "target temperature" is arguably a better method to dictate boost since it's directly correlated to cooler performance, and not indirectly via the use of a timer and an "upper temperature ceiling". The CPU has a target temperature and will fluctuate clocks around it while also avoiding the PPT limit. On average this extracts the best performance that the cooling allows for.

As far as the consensus on TDP == Power consumption, this has been explained repeatedly:

- For "proper" stock Intel platforms TDP == Average Power consumption. They are one and the same since TDP is dictated by PL1 limit, which is a power limit that nowadays is easy to reach. Power consumption is the basis for Intel's TDP definition.

- For AMD platforms TDP is NOT a function of power consumption, since this variable is not included in the TDP formula at all. AMD defines their TDP based on target temperature delta over ambient and reference cooler heat dissipating properties (thermal resistance).
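AMD's definition in the last bullet is commonly reported as TDP = (tCase - tAmbient) / θca, where θca is the thermal resistance of the reference cooler in °C/W. A minimal sketch of that arithmetic follows; the input values are illustrative assumptions for a 105W-class part and are not taken from this thread, and the function name is hypothetical.

```python
def amd_style_tdp(t_case_c, t_ambient_c, theta_ca):
    """TDP in watts from a temperature delta and cooler thermal resistance (°C/W)."""
    return (t_case_c - t_ambient_c) / theta_ca

# Illustrative inputs (assumed, not from the thread)
tdp = amd_style_tdp(t_case_c=61.8, t_ambient_c=42.0, theta_ca=0.189)
print(f"{tdp:.1f} W")
```

Note that measured power draw does not appear anywhere in the calculation, which is the point being made above.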

Both Intel and AMD managed to fail consumers with regard to their TDP ratings. Both of them (ultimately) disregarded the consumer's need to eyeball expected power consumption based on a simple indicator (or set of indicators). This is why this forum has gradually migrated from using TDP as a measuring stick and increasingly relies on actual power and temperature measurements to understand the cooling needs of a DIY system.

If Intel wants to increase TDP further for K SKUs then so be it. But at least enforce stock limits, and then allow consumers to optimize performance by enabling a feature similar to ABT. This makes stock behavior predictable and dependable again, while also allowing anyone to extract maximum performance with proper cooling.

DrMrLordX

Lifer

- Apr 27, 2000

- 23,233

- 13,325

- 136

Ice Lake is not on 10SF/10ESF. Intel is also pushing Ice Lake to absurd limits in order to be competitive. Sapphire Rapids should give us a better picture of efficiency going forward.

We don't know a whole lot about 10SF or 10SFE's voltage/clockspeed curve in all-core turbos approaching 5 GHz (or whatever it is that Alder Lake-S attempts with its flagship SKU); that being said, Intel launching yet another SKU with a TDP of 125W (or higher!) and a PL2 that is twice the TDP ought to tell you something about pushing things to absurd limits.

I know. Has the consensus around here changed about TDP is not equal to power consumption though?

Are you serious? On the one hand, you have a company selling a CPU that will not under any circumstance exceed 135% of TDP unless you start messing with UEFI settings. Then you have Intel which can (with current products like the 10900k and 11900k) reach 200% of TDP for nearly a minute, and that's on boards that actually obey Intel's own tau specs. Many boards have a limitless tau unless you tinker with them to defeat that behavior.

Intel can not, under any circumstance, behave as though their products are even remotely better-behaved in the power consumption department!
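The "135% of TDP" and "200% of TDP" figures can be checked against the numbers already quoted in this thread (105W TDP with a 142W PPT on AM4; 125W TDP with a 250W PL2 on Intel K SKUs). A quick sketch, with the dictionary labels being my own:

```python
# (peak limit in W, TDP in W), values as quoted earlier in the thread
limits = {
    "AM4 (PPT vs TDP)": (142, 105),
    "Intel K SKU (PL2 vs TDP)": (250, 125),
}

ratios = {name: peak / tdp for name, (peak, tdp) in limits.items()}
for name, r in ratios.items():
    print(f"{name}: {r:.0%} of TDP")
```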

Pretty much. During Sandy/Ivy/Etc times it was Intel that intentionally limited power consumption of its chips because their advantage was huge. Intel was great, I could use a picopsu with a cheap board to run their fastest sku without worrying of frying my board. Now things have turned around. Now I have to think, count and tinker!

Moving from 65nm -> 45nm -> 32nm -> 22nm gave Intel some massive advantages in perf/watt over previous nodes. 14nm extended that advantage versus Intel 22nm once they had it working properly. That Intel is sticking with such high TDPs and PL2 values tells me that even 10SFE isn't going to offer them huge perf/watt advantages over 14nm, at least not compared to past node transitions.

Magic Carpet

Diamond Member

- Oct 2, 2011

- 3,477

- 234

- 106

Moving from 65nm -> 45nm -> 32nm -> 22nm gave Intel some massive advantages in perf/watt over previous nodes. 14nm extended that advantage versus Intel 22nm once they had it working properly. That Intel is sticking with such high TDPs and PL2 values tells me that even 10SFE isn't going to offer them huge perf/watt advantages over 14nm, at least not compared to past node transitions.

That's right. I have doubts that Alder Lake-S will get the performance-per-watt crown back, but I hope I am wrong. Again, my main complaint is that all the action I've seen in the last few years has been happening in the mobile space. I wish I could buy, say, a Tiger Lake part for my desktop today, but I can't. And I absolutely despise mobile designs with noisy fans. I've moved to ARM for pretty much all my mobile needs, because it's damn silent 24/7, 365 days a year, no matter what the workload, and there is no need to clean the fans, no matter how dusty the environment is.

My last x86 laptop was this and then I gave up.

We don't know a whole lot about 10SF or 10SFE's voltage/clockspeed curve in all-core turbos approaching 5 GHz (or whatever it is that Alder Lake-S attempts with its flagship SKU); that being said, Intel launching yet another SKU with a TDP of 125W (or higher!) and a PL2 that is twice the TDP ought to tell you something about pushing things to absurd limits.

Are you serious? On the one hand, you have a company selling a CPU that will not under any circumstance exceed 135% of TDP unless you start messing with UEFI settings. Then you have Intel which can (with current products like the 10900k and 11900k) reach 200% of TDP for nearly a minute, and that's on boards that actually obey Intel's own tau specs. Many boards have a limitless tau unless you tinker with them to defeat that behavior.

Intel can not, under any circumstance, behave as though their products are even remotely better-behaved in the power consumption department!

Moving from 65nm -> 45nm -> 32nm -> 22nm gave Intel some massive advantages in perf/watt over previous nodes. 14nm extended that advantage versus Intel 22nm once they had it working properly. That Intel is sticking with such high TDPs and PL2 values tells me that even 10SFE isn't going to offer them huge perf/watt advantages over 14nm, at least not compared to past node transitions.

I have heard estimates that 10SF is 20% more efficient than 10nm. We know quite a bit about efficiency vs Ice Lake thanks to AnandTech: https://www.anandtech.com/show/16084/intel-tiger-lake-review-deep-dive-core-11th-gen/6

EDIT: AnandTech says Tiger Lake was 13% more efficient in their testing.

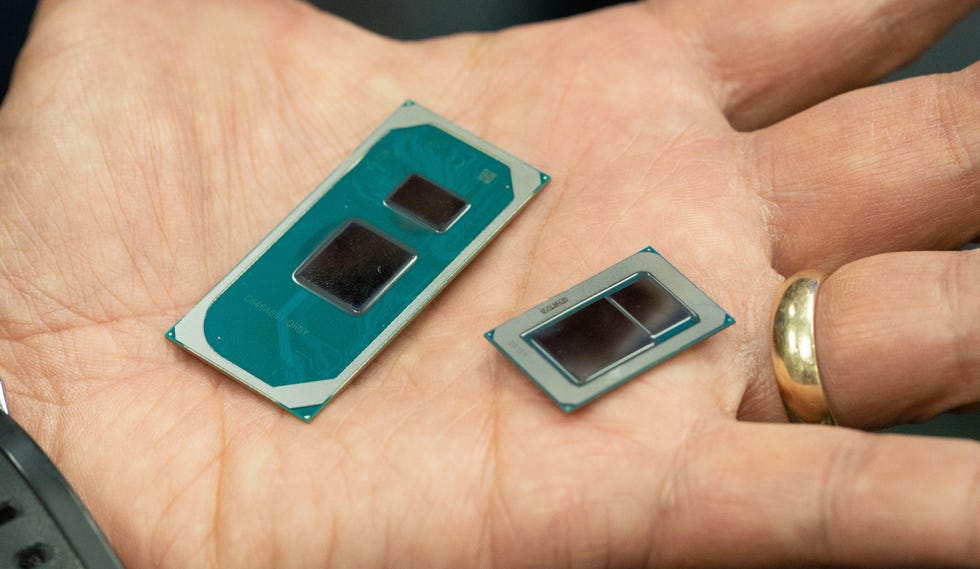

There's only one Ice Lake 4+2 LP die (stepping D1) and one Ice Lake PCH-LP die (stepping D0) from which Intel currently makes 4 platforms (ICL-U/UN/Y/YN) and at least 15 SKUs. The N models use smaller "nano" packages, and it's amazing to me that to date there does not appear to be a single photo of those packages anywhere on the internet. For some reason, iFixit didn't bother to publish pictures as part of their usual teardowns. I almost disassembled some of my customers' machines to snap a few pics but couldn't justify pulling off the heat sinks for the sake of my own curiosity. Anyway, mostly similar specs, but not the same product:

ICL-Y, BGA1377, 26.5 mm x 18.5 mm

ICL-YN, BGA1044, 22 mm x 16.5 mm

ICL-U, BGA1526, 50 mm x 25 mm

ICL-UN, BGA1344, ?? mm x ?? mm

That's just the PCB, right? Not the actual die?

The dimensions I quoted are for the package/organic substrate, yes, not the silicon die. These are all multi-chip SoC packages containing both the CPU die and PCH.

This is what U and Y look like:

UN and YN are respectively smaller, but there are no photos available, AFAICT.

Isn't the CPU connected to the PCH using DMI? That's basically glorified PCIe. I doubt distance matters a lot compared to the usual latencies of PCIe.

IntelUser2000

Elite Member

- Oct 14, 2003

- 8,686

- 3,787

- 136

Isn't the CPU connected to the PCH using DMI? That's basically glorified PCIe. I doubt distance matters a lot compared to the usual latencies of PCIe.

Closer physical distances can be used to lower latency, but only if the interface/protocol is designed for it.

On mobile chips with the PCH on package, Intel uses a variant they call OPI, which is much more power efficient than DMI. A higher-performance version of it was used to connect eDRAM on older Intel CPUs.

3000 / 2300 = 1.304x not 2.000x.

1165G7 @ 15W = 1.8GHz

1065G7 @ 15W = 1.3GHz

That's a 38% difference in clocks, but you'll never see that. 28W Tigerlake has 2.3x higher base clocks, but again that doesn't matter. Also, a lot of 1065G7s were set at 20W or even 25W, and the 1068G7 wasn't any faster despite having a 2.3GHz base clock.
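For reference, the ratios being argued over work out as follows. This is just the arithmetic on the base clocks quoted in these posts; the helper name is hypothetical.

```python
def clock_ratio(new_mhz, old_mhz):
    """How many times higher new_mhz is than old_mhz."""
    return new_mhz / old_mhz

r_28w = clock_ratio(3000, 2300)   # the 3000 / 2300 comparison quoted above
r_15w = clock_ratio(1800, 1300)   # 1165G7 vs 1065G7 at 15W

print(f"3000 / 2300 = {r_28w:.3f}x")
print(f"1800 / 1300 = {r_15w:.3f}x ({r_15w - 1:.0%} higher)")
```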

As IntelUser2000 said, the LP (Low Power) platforms use OPI (On Package Interconnect/Interface/DMI?). In this case it's OPI x8 @ 4 GT/s, which is the equivalent of DMI 3.0 x4, but uses less power.
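The equivalence claimed here is easy to sanity-check on raw aggregate transfer rate. A small sketch, assuming DMI 3.0 runs at 8 GT/s per lane (that figure is not stated in the post) and ignoring encoding overhead; the function name is hypothetical.

```python
def aggregate_gt_per_s(lanes, gt_per_s_per_lane):
    """Raw aggregate transfer rate of a link, before any encoding overhead."""
    return lanes * gt_per_s_per_lane

opi = aggregate_gt_per_s(lanes=8, gt_per_s_per_lane=4)    # OPI x8 @ 4 GT/s (from the post)
dmi3 = aggregate_gt_per_s(lanes=4, gt_per_s_per_lane=8)   # DMI 3.0 x4, assuming 8 GT/s per lane

print(f"OPI x8: {opi} GT/s, DMI 3.0 x4: {dmi3} GT/s")
```

Twice the lanes at half the per-lane rate gives the same raw throughput, which is consistent with the power-saving argument: slower lanes are cheaper to drive over a short on-package link.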

The package size has a lot to do with the number of balls required for power delivery and I/O. Most of the balls are for power and ground, and Y is a lower power platform, hence fewer balls. U also supports both DDR4 and LPDDR4/X memory interfaces (vs. just LPDDR4/X for Y) and offers an additional Type-C interface and two additional HSIO lanes. The ball pitch appears to be tighter on Y as well.

The board real estate matters a lot to some OEMs, especially in products like tablet PCs and 2-in-1s.

IntelUser2000

Elite Member

- Oct 14, 2003

- 8,686

- 3,787

- 136

The board real estate matters a lot to some OEMs, especially in products like tablet PCs and 2-in-1s.

They truly are an aircraft carrier when it comes to changing strategies and steering a different way.

They'd have saved a lot more space if they had integrated the PCH like everyone has done since 2017. AMD did that with Raven Ridge. Alderlake had better have an active interposer using Foveros like Lakefield, without the downsides of course.

I expected Tigerlake to have one based on some early leak/rumors of them having greatly improved power efficiency. Well, that was a bust. Plus whatever efficiency there was they used it to clock it to the stratosphere - because Netburst 2.0.

NostaSeronx

Diamond Member

- Sep 18, 2011

- 3,815

- 1,294

- 136

They'd have saved lot more space if it integrated the PCH like everyone did since 2017. AMD did that with Raven Ridge.

Just to clarify: the fully integrated FCH was introduced with Kabini in 2013.

IntelUser2000

Elite Member

- Oct 14, 2003

- 8,686

- 3,787

- 136

Just to clarify: The fully integrated FCH was introduced in Kabini within 2013.

So did Intel with Atom series a year earlier with their Smartphone Medfield platform. And that continues on till today.

But they haven't changed their main lineup to a SoC. At least with AMD, they moved their main lineup starting with Carrizo. Mobile chips should have moved to it much earlier too. Actually AMD's way of putting a cut down version and desktop variant needing an extra for added I/O is a very good strategy.

They'd have saved lot more space if it integrated the PCH like everyone did since 2017. AMD did that with Raven Ridge. Alderlake better have active interposer using Foveros like Lakefield, without the downsides of course.

Doesn't sound like that's happening until Meteor.

what a bucket of redacted

I am gonna be direct- so many women at "leader" positions is for me clearly the reason why Intel is in this crap

Pat good luck, those are very hard to fire even if they don't deliver- my own expecience- social manipulation, decieving, blatant lying, being in every project where don't even have to be to get the credit or silent leave those which don't run too well t not ruin their rep

compare this conversation to Jim Keller or Lisa Su

I don't wish Intel bad but with this stuff it is a big no

Profanity (even abbreviated) is not allowed in the tech forums.

Furthermore, your misogynistic beliefs/viewpoints are not welcome on the forums, period.

AT Mod Usandthem

Last edited by a moderator:

Women can be smart and technically or managerially apt.

Although I must say I got a bit worried when I saw Dr. Kelleher in the recent presentation. I will not comment further in order not to spoil warm welcoming inclusive atmosphere on this forum.

Although I must say I got a bit worried when I saw Dr. Kelleher in the recent presentation. I will not comment further in order not to spoil warm welcoming inclusive atmosphere on this forum.

These upper-management interviews are useless 99% of the time anyway. Absolutely no info in them, no matter what company they are from. For example, the AnandTech interview with AMD's CTO? The guy was asked a question about automotive and stuff literally days before the Xilinx deal was revealed, and proceeded with casual MBA talk. Each and every corporate interview is like that. Zen2 is a "completely new" process, Zen3 is "that" big redesign, AVX512 is flourishing and so on and on.

The real gems in the industry are proper leaks like we had with the ZEN3 architecture early on; reading those interviews is a waste of time. Investor calls are sometimes interesting too, as analysts also have black belts in MBA talk and word and phrase questions in ways that make upper-management snakes slip and reveal more than they normally would.

Hulk

Diamond Member

- Oct 9, 1999

- 5,428

- 4,166

- 136

It has nothing to do with gender, you are either good/qualified for a job or you aren't. Now if you are referring to the belief of equal outcomes and the result of that proposition in the workplace then that's a different issue for a different forum.

For me the interview had no "meat" in it at all. In my opinion Intel won't have anything to talk about until they actually release a great product.

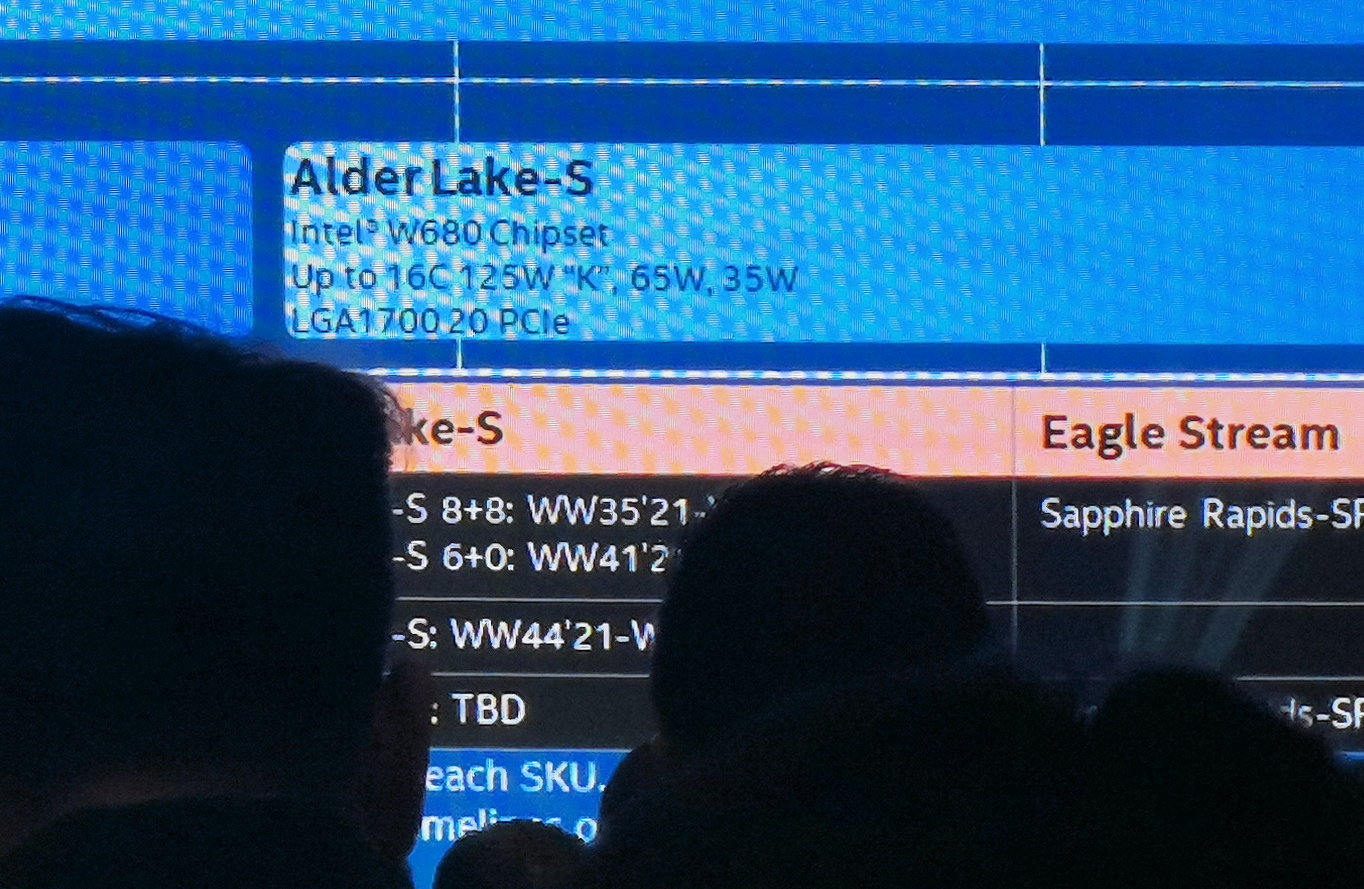

Roadmap leak

videocardz.com

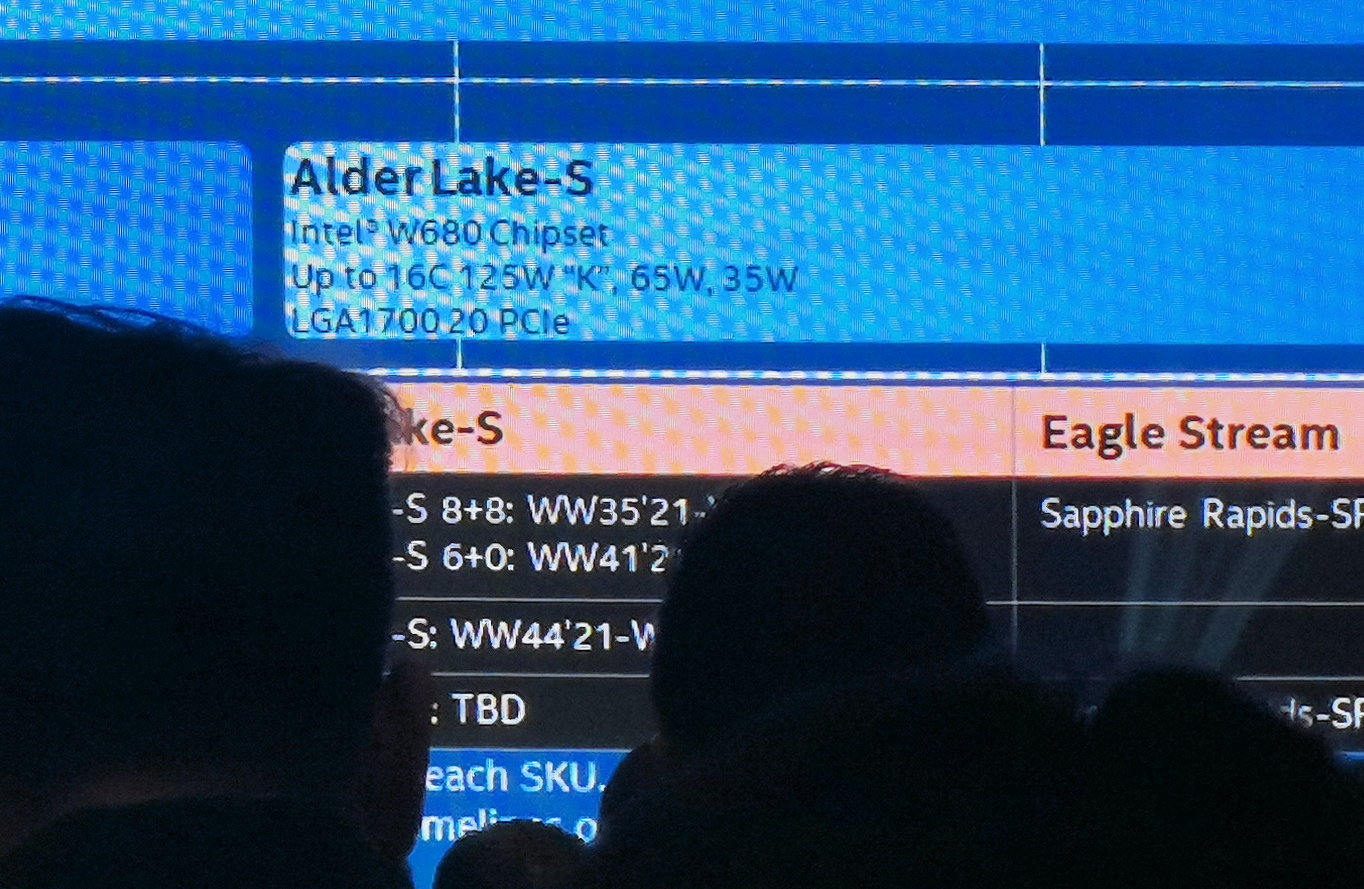

Looks like Alder Lake production around September. If availability follows Rocket Lake's timeline, maybe on shelves for Black Friday?

Also, an interesting note of Ice Lake for workstations.

Intel roadmap with Alder Lake-S workstation CPUs planned for Q3 2021 has been leaked - VideoCardz.com

Alder Lake-S already in Q3? A very interesting leak has just been shared by @9550pro. It appears that the launch of Intel Alder Lake-S might be much sooner than previously expected. The roadmap that has leaked does not refer to the client ‘Core’ series specifically but rather to the S-Series...
