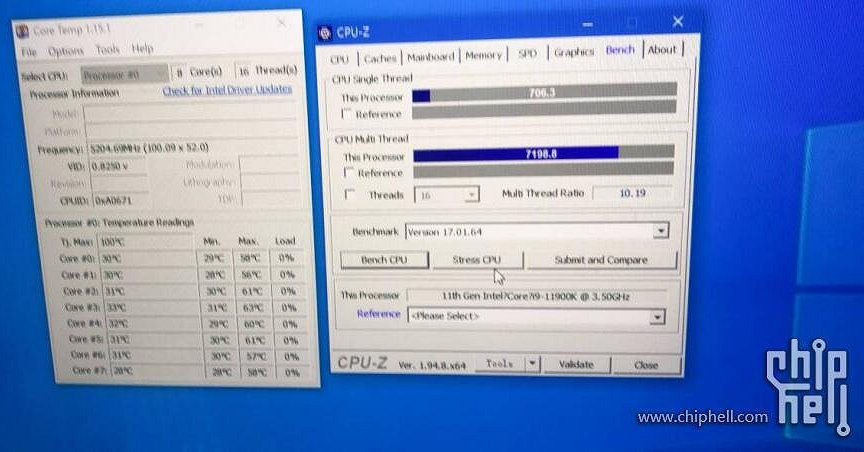

Coming straight to the performance numbers, both CPUs were overclocked to 5.2 GHz on all cores. Note that the Intel Core i9-11900K uses a brand-new architecture but has two fewer cores (8 vs. 10) than the Core i9-10900K, which relies on an enhanced Skylake architecture. Both CPUs were tested on a Z490 motherboard with 16 GB of DDR4-3600 memory, a speed chosen because of a memory lock on Rocket Lake. The Core i9-11900K's voltage was left on auto and reached 1.48 V, and a chiller was required to sustain the 5.2 GHz overclock.

In CPU-Z, the Intel Core i9-11900K is 11% faster than the Core i9-10900K in the single-core test but 12% slower in the multi-core test. In Cinebench R15, the Core i9-11900K once again takes a 12% single-core lead over the Core i9-10900K but also ends up 12% slower in the multi-core test. The Cinebench R20 and Cinebench R23 results show a 16% single-core gain for the Core i9-11900K over its Core i9 predecessor, but in multi-threaded performance the newer CPU is again no match for the older one.

In x264 1080p, the Intel Core i9-11900K loses to the Core i9-10900K by 5 FPS. The same is true for V-Ray, where the Core i9-10900K outpaces the Core i9-11900K by 14 MPaths. Moving over to the 3DMark results, our friend over at Twitter, Harukaze5719, has compiled a chart comparing the Core i9-11900K and Core i9-10900K in all of these tests. The Core i9-11900K loses to the 10th Gen flagship in every one of them.

Lastly, we have the gaming tests, and the results here are underwhelming for the Rocket Lake flagship. The Intel Core i9-11900K appears only marginally faster than, or on par with, the Core i9-10900K, and in some titles it is actually slower. Previous benchmarks showed similar performance numbers and also pointed out how hot and power-hungry the flagship Rocket Lake CPU is going to be.