Interesting thread and nice to see the results across a wide variety of games.

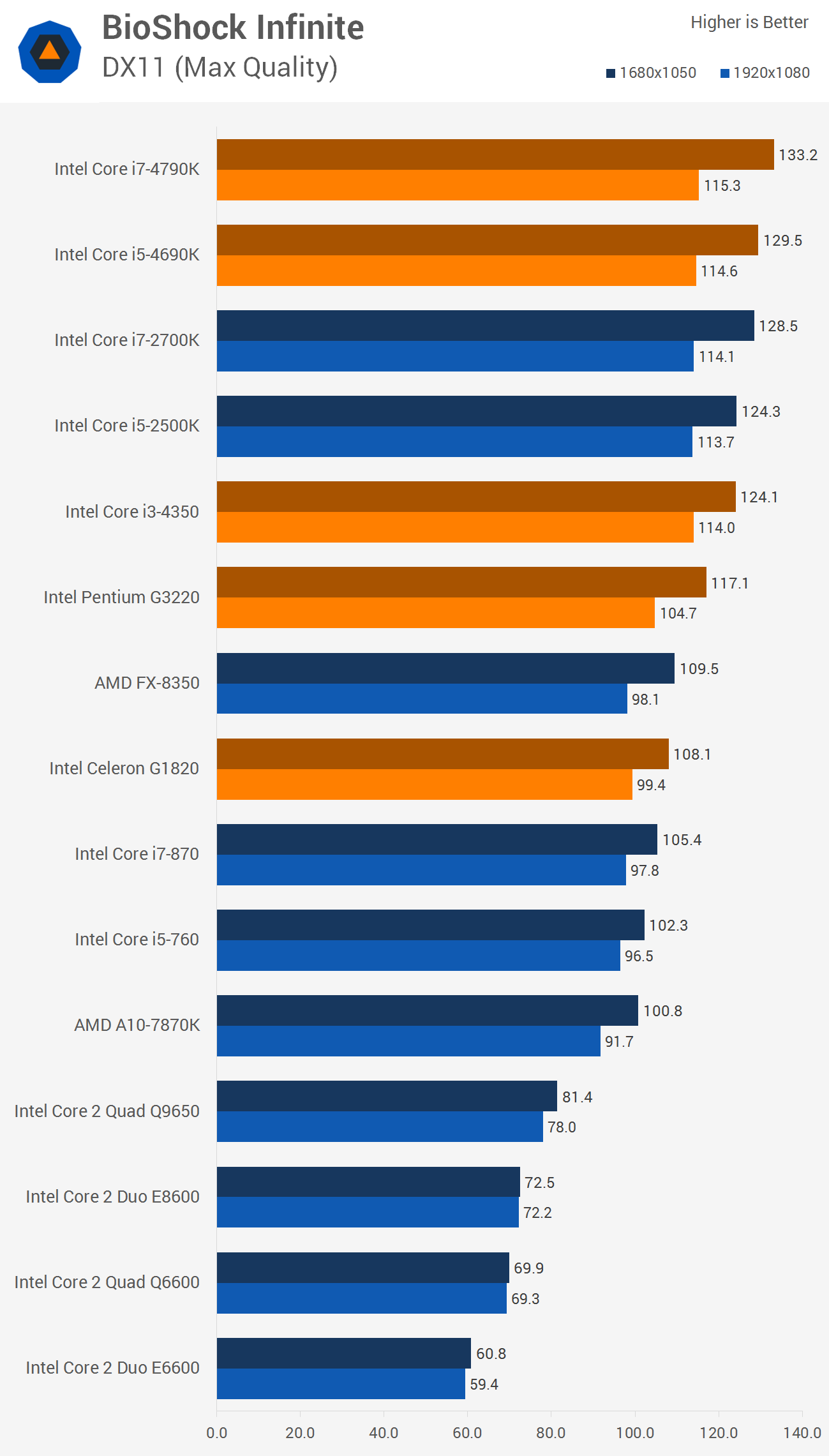

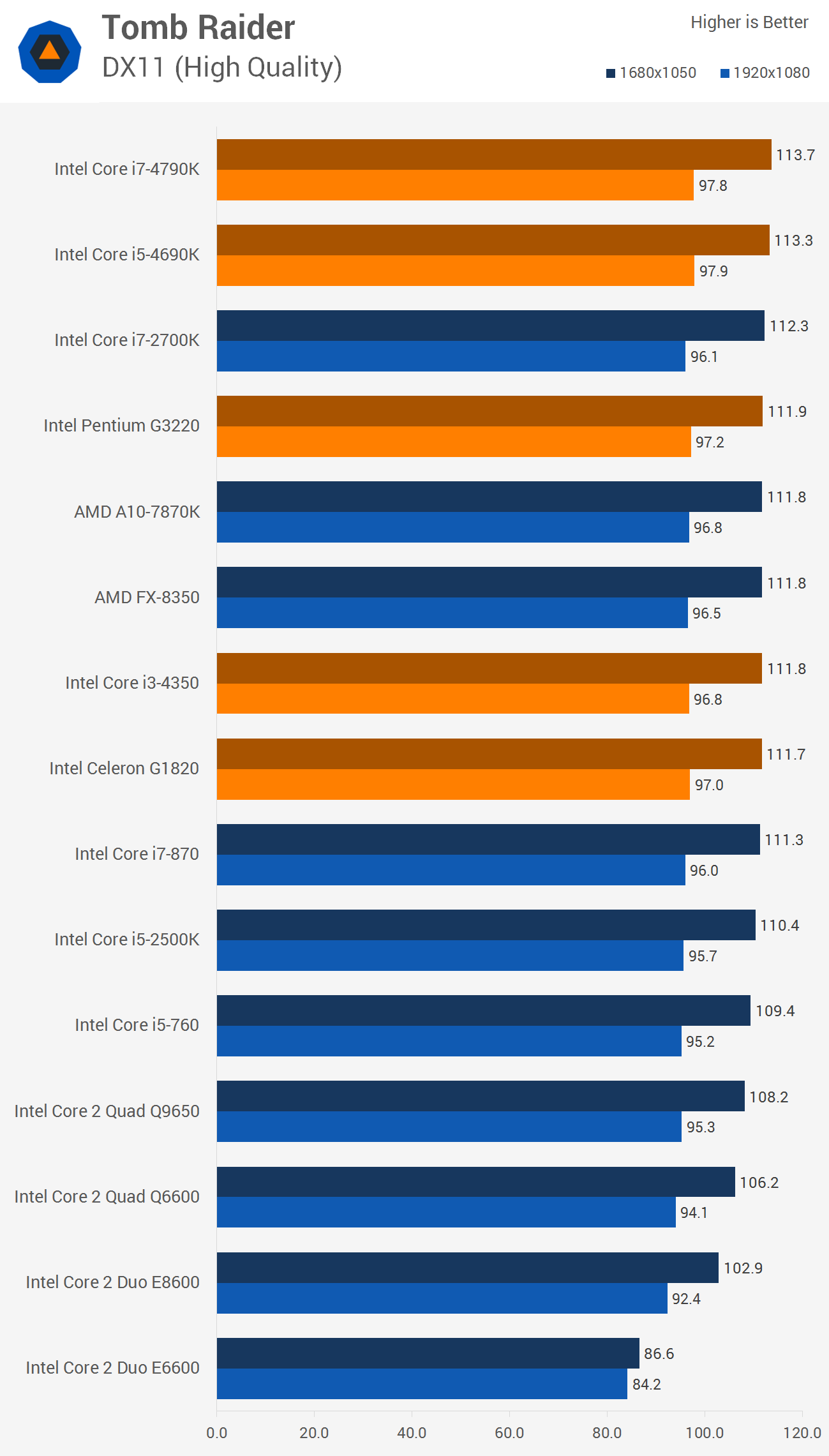

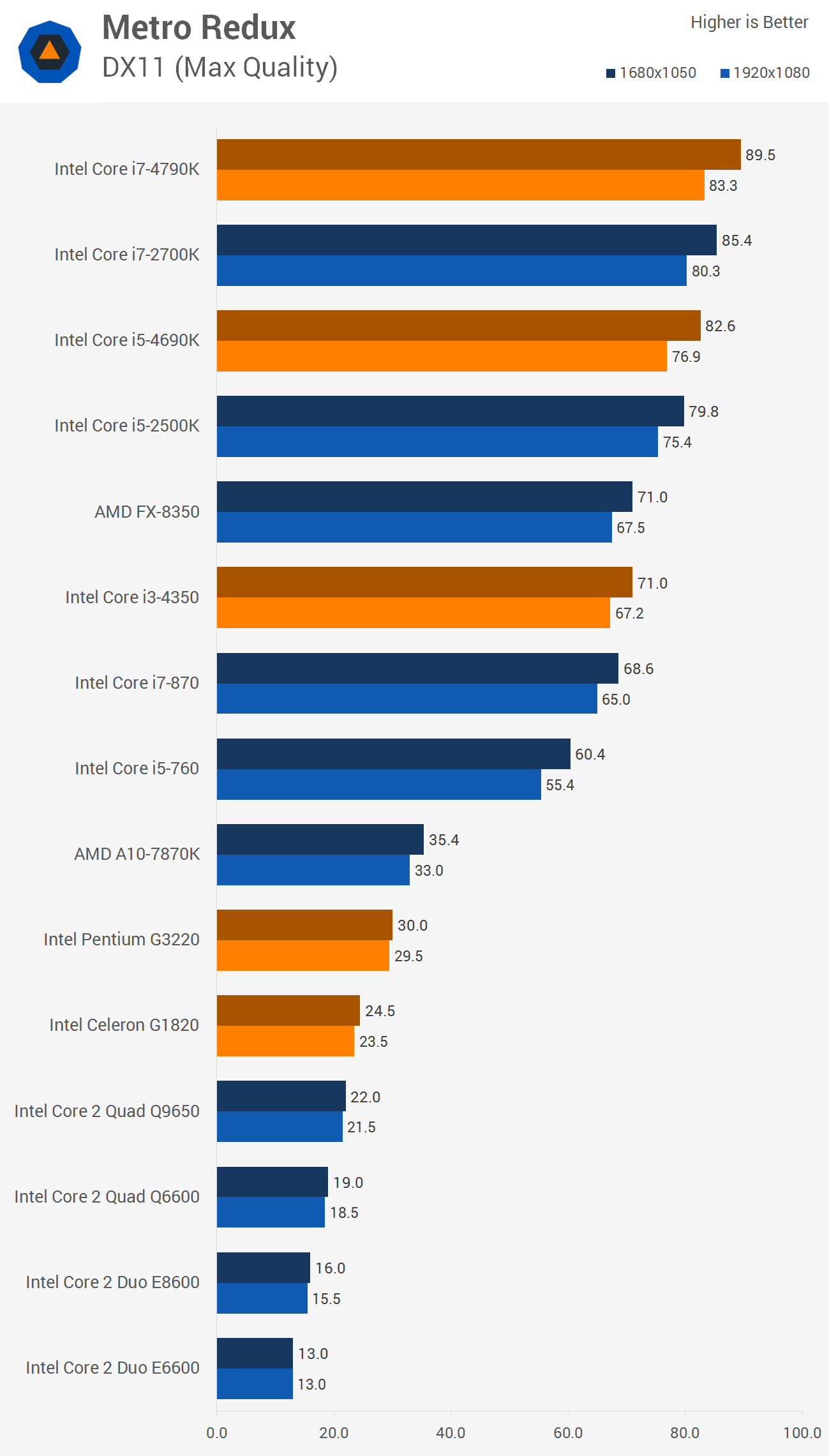

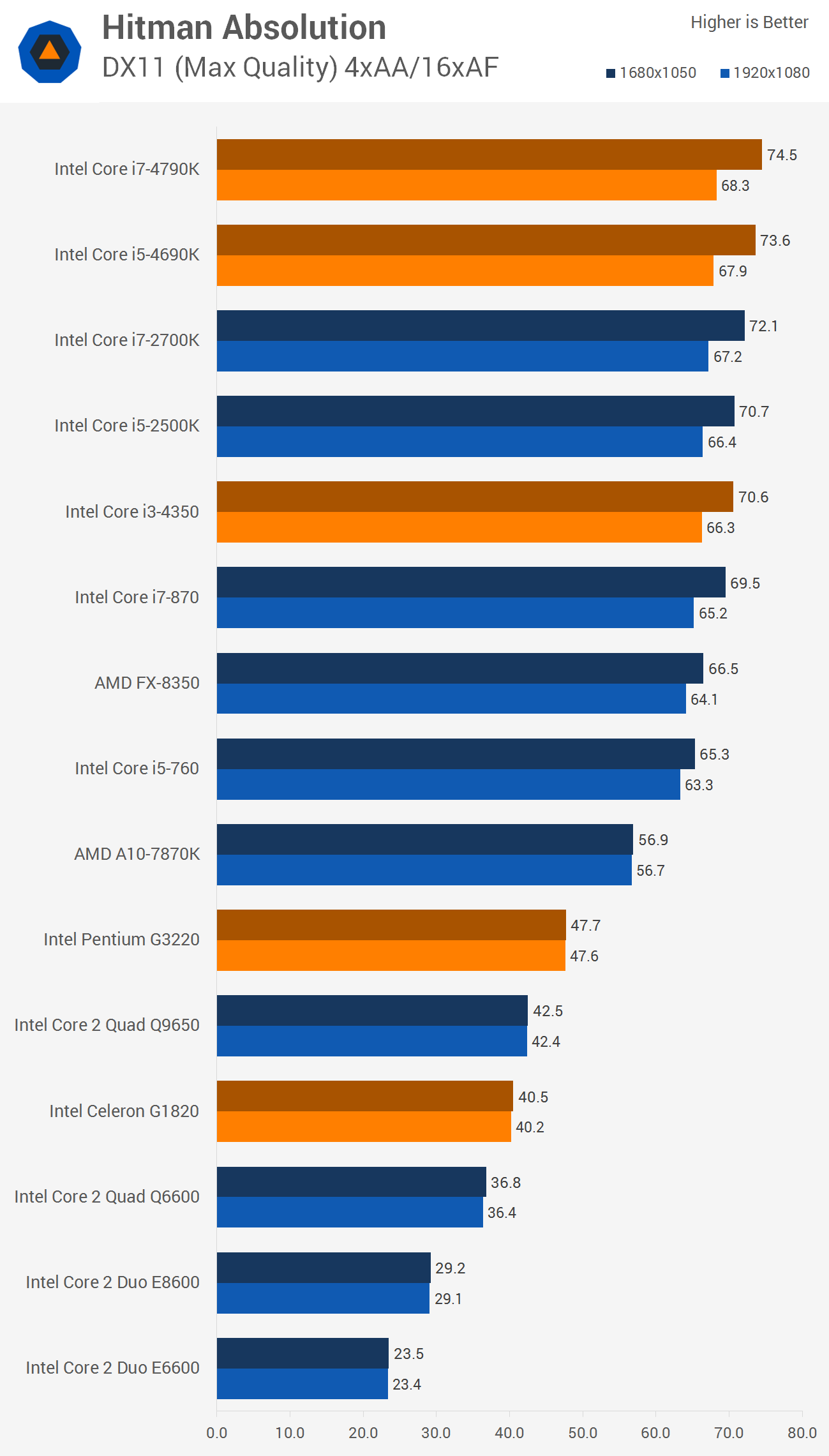

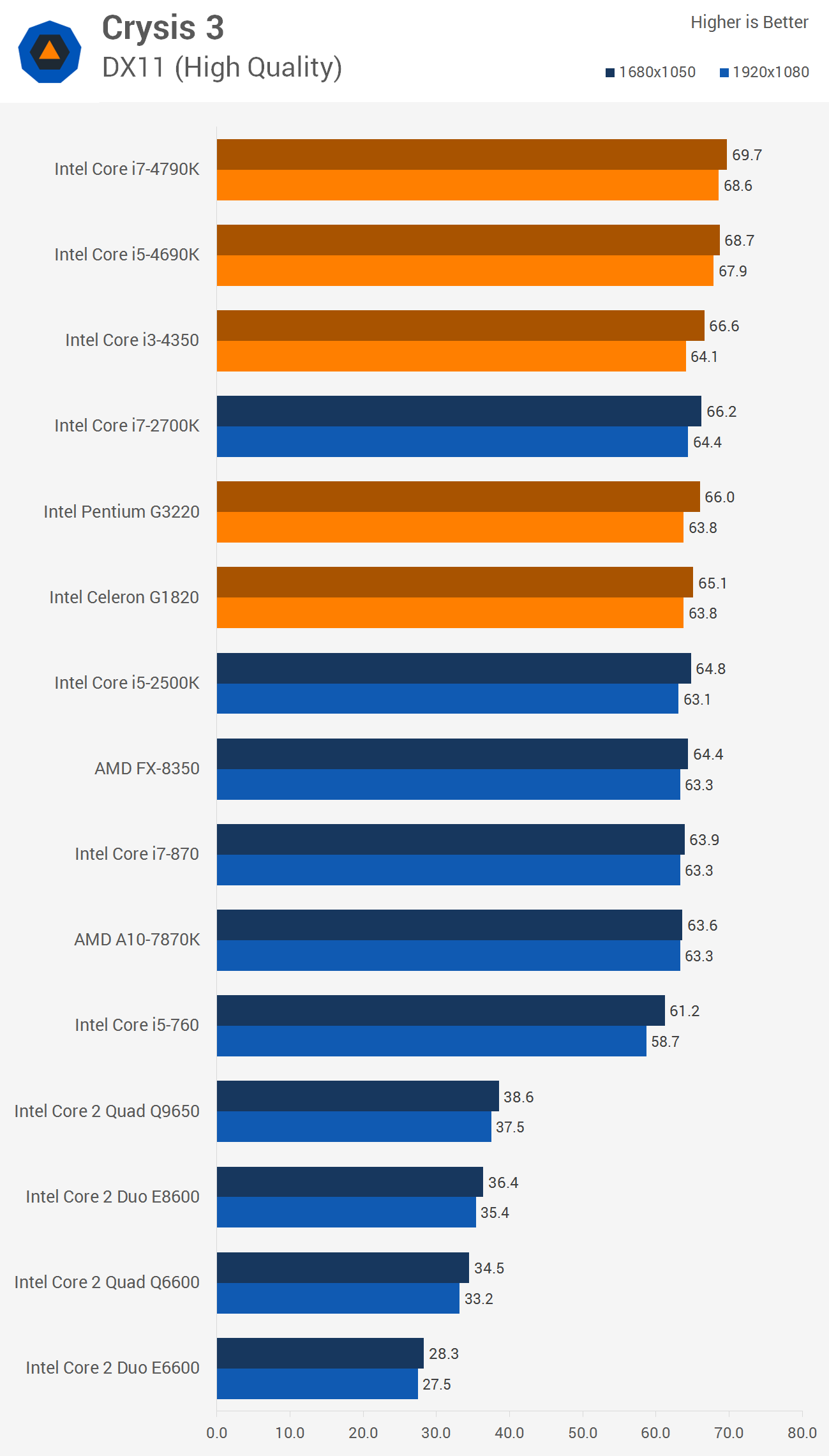

C2D/C2Q is hit or miss depending on the title. TechSpot did a good investigation. Some games are perfectly playable with an E6600/Q9550:

Other games are more or less unplayable or run at console-level fps:

Source

Even an i5 760/i7 860 @ 3.8-3.9GHz would provide a HUGE increase in performance and actually deliver far better than PS4-level graphics/performance with an HD7950/GTX760-class GPU. An overclocked Q9550 cannot claim that in a wide variety of games.

for example on Witcher 3 the 260X is up to 2x the 6970

http://pclab.pl/art66374-5.html

This is why I cannot trust anything that comes out of that site. Ever since someone first linked reviews from there, their data has constantly contradicted the other professional sites.

Computerbase - average 1080P performance

260X = 100%

HD6970 = 100%

http://www.computerbase.de/2015-08/nvidia-geforce-gtx-950-test/3/#abschnitt_tests_in_1920__1080

Specifically TW3:

260X = 18.1 fps (+24%)

6970 = 14.6 fps

http://www.computerbase.de/2015-08/nvidia-geforce-gtx-950-test/3/#diagramm-the-witcher-3-1920-1080
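For anyone who wants to check the TW3 math, here is a minimal Python sketch. The fps values are the Computerbase figures quoted above; the helper name `percent_faster` is just something I made up for illustration.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """Return how much faster card A is than card B, in percent."""
    return (fps_a / fps_b - 1.0) * 100.0


# Computerbase TW3 1080P results quoted above
tw3_260x = 18.1
tw3_6970 = 14.6

lead = percent_faster(tw3_260x, tw3_6970)
print(f"260X leads the 6970 by {lead:.0f}% in TW3")
```

A 2x result would need the lead to be +100%, so on this data the 260X is nowhere near double the 6970.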

In any event, TW3 is an extremely punishing game for lower-end/slower GPUs. A great gaming experience in TW3 more or less requires a far more powerful/modern GPU than a 260X/6970/580.

Time and time again I see comments about how AMD abandoned pre-GCN graphics cards, but the truth is the GPUs of that era are simply too slow to keep up with modern games at decent settings.

The GTX580 is only 9% faster on average than the HD6970 at 1080P in modern games. So AMD didn't uniquely abandon pre-GCN GPU optimizations, because the 580 bombs just as much. The simple truth is those GPUs/architectures are just too slow for modern titles. In comparison, from my links above, the 280X is now 84% faster than the 6970 and 68% faster than the GTX580 in modern titles. Modern games basically wipe the floor with older GPUs, which is to be expected since the 6970/480/580 are all almost 5 years old. At least most people on this forum were smart enough to buy a $230-300 6950 and unlock it instead of spending extra on the 6970. Looking at relative standing, the $450-500 580 looks horrendous by comparison, since it's just as unplayable but cost way more than the unlocked 6950 2GB.
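Those three averages hang together, by the way. Here is a rough Python sketch of the cross-check, assuming the 84% and 9% figures are both relative to the same HD6970 baseline (the variable names are mine, not Computerbase's):

```python
# Relative 1080P performance with the HD6970 as the 1.00 baseline
hd6970 = 1.00
gtx580 = 1.09   # 9% faster than the 6970
r9_280x = 1.84  # 84% faster than the 6970

# Implied lead of the 280X over the GTX580, in percent
implied_lead = (r9_280x / gtx580 - 1.0) * 100.0
print(f"280X vs GTX580: about {implied_lead:.1f}% faster")
```

That lands within a point of the 68% quoted, and the small gap is what you'd expect from working with already rounded inputs.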

260X will beat the 6950 on most newer games, pre-GCN AMD cards don't get too much attention from their drivers team and game devs....

The first part of your statement is basically false, as Computerbase has the 260X and 6970 exactly tied on average in 18 modern games. That's about as conclusive and scientific as it gets. The second part of your statement is misleading because it applies to both NV's Fermi and AMD's pre-GCN cards: this video shows that, beyond the first year, driver improvements brought very little performance increase for Fermi as well. This is completely in line with the 580 beating the 6970 by only 9% on average at 1080P in modern games.

Why do people have 16GB+ RAM? So they can have 100 tabs open in Chrome? Why?

Actually, having an SSD helps way more with 100 Chrome tabs than having 16GB of RAM.

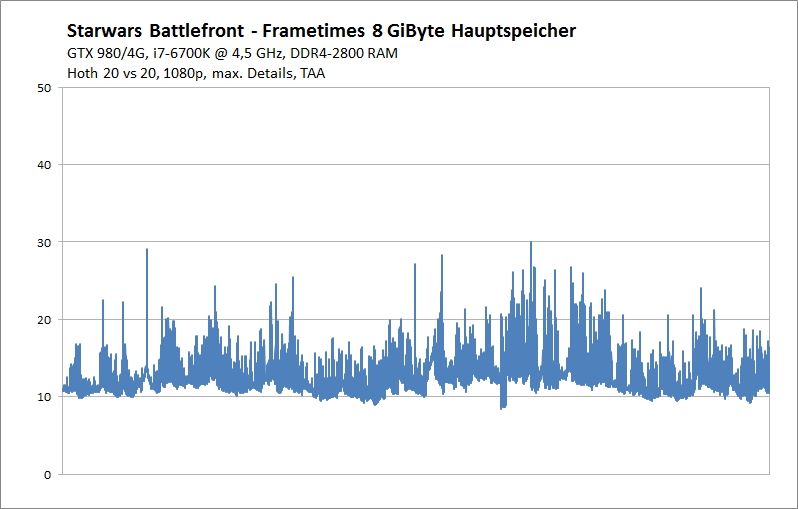

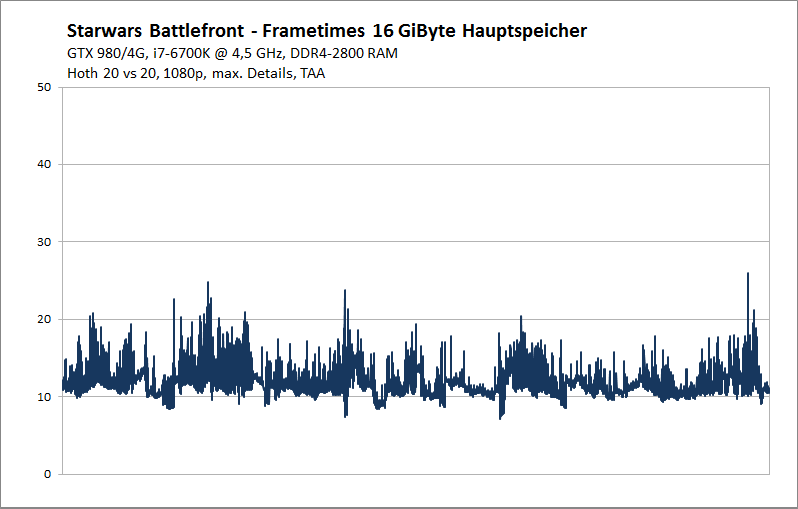

The only scientific test of a game I've seen that shows a benefit from more than 8GB of system memory is the SW:BF Beta.

http://www.pcgameshardware.de/Star-...950/Specials/Beta-Technik-Benchmarks-1173656/

Up until that point I had never seen any game that actually benefited from more than 8GB of system memory as far as performance went. It's possible there are such games, but I haven't seen the data, and if anyone has, please add to the knowledge base.

I think most people buy 16GB of memory because DDR3 has been very cheap in recent years and 16GB sounds nicer than 8. hehe. Up until SW:BF, that $$$ would have been better spent moving from an i5 to an i7, getting a faster GPU, an SSD, a better monitor, etc. That's why I've stuck with 8GB for as long as I could. Why waste $ for no benefit?