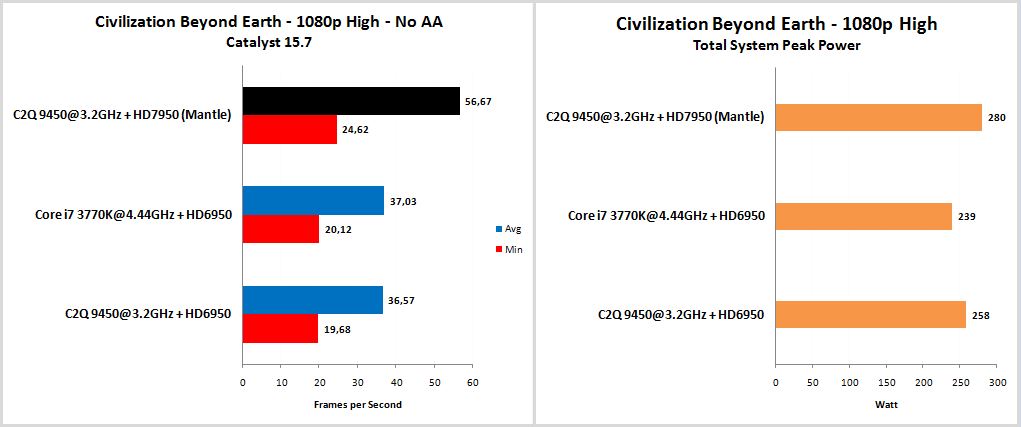

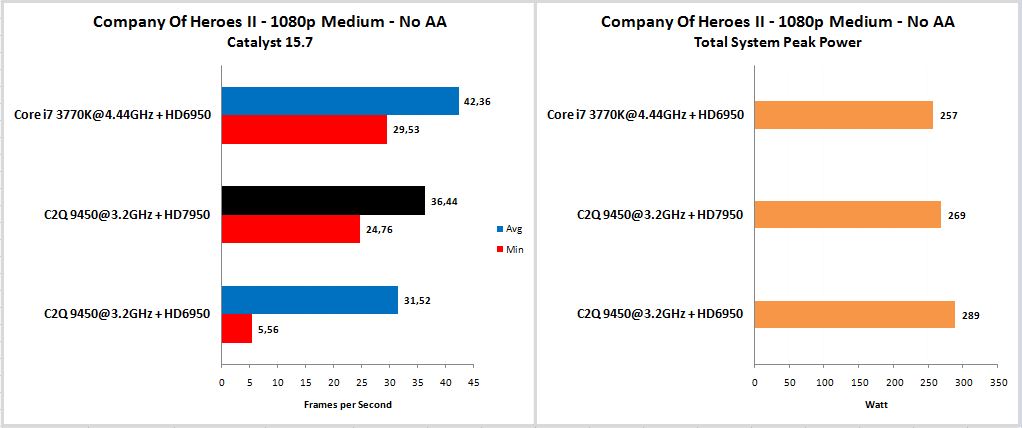

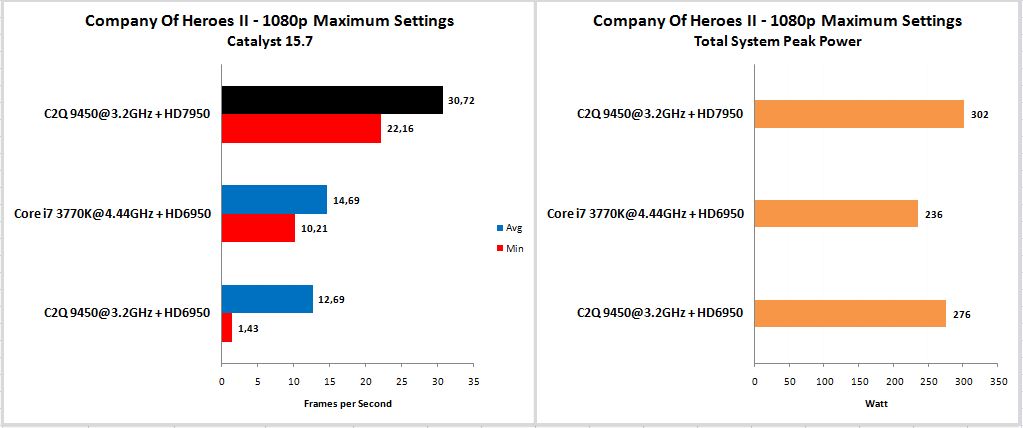

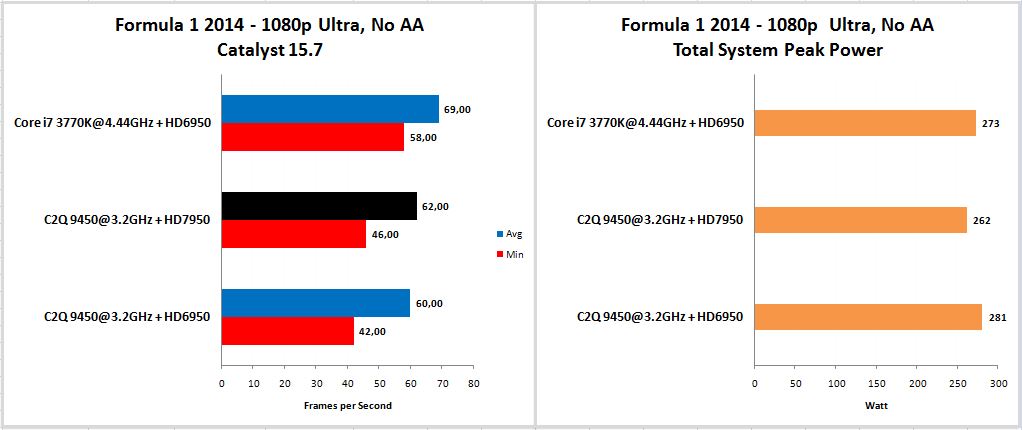

So you still have your venerable Core 2 Quad, mildly overclocked to 3.2GHz and paired with an AMD HD6950.

A simple question: do you upgrade to a new CPU/platform, or do you upgrade your graphics card? Can the Core 2 Quad make use of the performance of today's mid-range graphics cards, or will a new, faster CPU give you more performance in today's games?

This topic will investigate which of the two will give you the better gaming experience.

For the CPU upgrade I will use the Intel Core i7 3770K overclocked to 4.44GHz. This will simulate new CPUs with very high single-core performance.

For the GPU upgrade I will use the AMD HD7950 overclocked to 1GHz. This will simulate graphics cards like the R9 280/285 and R9 380.
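If you want to score the two upgrade paths yourself, here is a rough Python sketch of one way to do it. It assumes you have an average FPS figure for the baseline system (Core 2 Quad + HD6950), for the CPU upgrade (3770K + HD6950), and for the GPU upgrade (Core 2 Quad + HD7950). The function names `percent_gain` and `better_upgrade` are made up for this illustration, and comparing by average FPS alone is a simplification.

```python
def percent_gain(baseline_fps: float, upgraded_fps: float) -> float:
    """Relative FPS gain over the baseline system, in percent."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return (upgraded_fps - baseline_fps) / baseline_fps * 100.0


def better_upgrade(baseline_fps: float,
                   cpu_upgrade_fps: float,
                   gpu_upgrade_fps: float):
    """Compare the CPU-only and GPU-only upgrade paths for one game.

    Returns (winner, cpu_gain_percent, gpu_gain_percent), where winner
    is "CPU", "GPU", or "tie".
    """
    cpu_gain = percent_gain(baseline_fps, cpu_upgrade_fps)
    gpu_gain = percent_gain(baseline_fps, gpu_upgrade_fps)
    if cpu_gain > gpu_gain:
        winner = "CPU"
    elif gpu_gain > cpu_gain:
        winner = "GPU"
    else:
        winner = "tie"
    return winner, cpu_gain, gpu_gain
```

Feed it the average FPS from each run of the same game at the same settings. Minimum FPS or frame times could be compared the same way if you record them.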

System Specs

Socket 775

CPU : Intel Core 2 Quad Q9450 @ 3.2GHz

Motherboard : ASUS Rampage Formula

Memory : 2x 2GB G.Skill F2-8500 DDR2 1066MHz

HDD : 1TB Seagate ST1000DM003 7200rpm SATA 6Gb/s

Socket 1155

CPU : Intel Core i7 3770K @ 4.44GHz

Motherboard : ASUS Maximus V Gene

Memory : 2x 4GB Kingston KHX2133C11D3K4 DDR3 2133MHz

HDD : 1TB Seagate ST1000DM003 7200rpm SATA 6Gb/s

Graphics Cards used

ASUS HD6950 DCII/2DI4S/1GD5 (810MHz core, 1250MHz Memory)

ASUS HD7950 DC2T-3GD5-V2 (1000MHz core, 1500MHz memory)

For both systems

Windows 8.1 Pro 64-bit

Catalyst 15.7