Wrong.

Heatpipes have a maximum effective heat flux determined by their physical dimensions:

The heat pipe capillary limit typically sets the maximum heat pipe operating power, except at very low and very high temperatures. It simply states that the wick pumping capability must be greater than the sum of the heat pipe pressure drops.

www.1-act.com
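For reference, the capillary limit described in the quote is usually written as the condition below. The symbols are the standard textbook ones (surface tension σ, wetting angle θ, effective wick pore radius r_eff), not anything taken from the quoted page: the maximum capillary pressure the wick can generate must be at least the sum of the liquid, vapour and gravitational pressure drops along the pipe.

```latex
\Delta P_{c,\max} = \frac{2\sigma\cos\theta}{r_{\mathrm{eff}}} \;\ge\; \Delta P_{l} + \Delta P_{v} + \Delta P_{g}
```

Because the liquid and vapour pressure drops grow with the amount of heat being carried, this condition caps the power a single pipe of a given wick and geometry can move.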

Science!

You cannot push enough air through the fins to make attached heat pipes perform any better beyond a certain point. I was pushing over 300cfm through the fins of an NH-D15. That is insane. There are also limits on how many heatpipes you can cram into the base of an HSF and have them still pick up heat effectively.

you still look at it from the CPU perspective; I look at it from the bottleneck perspective, since we are talking about sustained multithreaded AVX2 load, which is exactly what the 10900K is not designed for

so let's do a calculation, some basic stuff:

Enthalpy change and temperature rise when heating humid air without adding moisture.

www.engineeringtoolbox.com

Moist and humid air calculations. Psychrometric charts and Mollier diagrams. Air-condition systems temperatures, absolute and relative humidities and moisture content in air.

www.engineeringtoolbox.com

the NH-D15 has a max airflow of 140 m³/h, which at normal relative humidity (50%) equals a mass flow of around 170 kg/h

so, with some basic conversion: removing 200 W (J/s) of heat for 1 hour equals 720,000 J/h, or 720 kJ/h

so I add 720 kJ to ~170 kg of air, which means about 4.3 kJ/kg; in steady flow that raises the air from 22 °C ambient at the intake to around 26 °C at the exhaust, not counting the VRM, chipset, etc.
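The same arithmetic as a small script, so the numbers can be re-run for other airflow or power values. The air density (1.2 kg/m³) and the specific heat of moist air (about 1.02 kJ/kg·K) are assumed round values, not from the post. The 140 m³/h figure is the fan's rated maximum, which is a free-air value, so real airflow through the fin stack will be somewhat lower and the temperature rise somewhat higher.

```python
AIR_DENSITY_KG_M3 = 1.2      # assumed: air at roughly 20-25 °C, sea level
CP_MOIST_AIR_KJ_KGK = 1.02   # assumed: moist air at ~50 % RH


def air_mass_flow_kg_h(volume_flow_m3_h: float, density: float = AIR_DENSITY_KG_M3) -> float:
    """Convert a volumetric airflow (m^3/h) into a mass flow (kg/h)."""
    return volume_flow_m3_h * density


def specific_enthalpy_rise_kj_kg(power_w: float, mass_flow_kg_h: float) -> float:
    """Heat added per kg of air: W (J/s) -> kJ/h, then divided by kg/h."""
    energy_kj_h = power_w * 3600 / 1000
    return energy_kj_h / mass_flow_kg_h


def air_temperature_rise_k(power_w: float, volume_flow_m3_h: float,
                           cp: float = CP_MOIST_AIR_KJ_KGK) -> float:
    """Steady-state temperature rise of the air stream (sensible heat only, no moisture added)."""
    m_dot = air_mass_flow_kg_h(volume_flow_m3_h)
    return specific_enthalpy_rise_kj_kg(power_w, m_dot) / cp


if __name__ == "__main__":
    m_dot = air_mass_flow_kg_h(140)                # NH-D15 max airflow from the post
    dh = specific_enthalpy_rise_kj_kg(200, m_dot)  # 200 W CPU load from the post
    dt = air_temperature_rise_k(200, 140)
    print(f"mass flow  : {m_dot:.0f} kg/h")         # 168 kg/h
    print(f"enthalpy   : {dh:.1f} kJ/kg")           # 4.3 kJ/kg
    print(f"exhaust air: {22 + dt:.1f} °C")         # 26.2 °C
```

With 168 kg/h the enthalpy rise rounds to the 4.3 kJ/kg used above, and dividing by cp gives a rise of a bit over 4 K.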

even adding those, you are right that an extreme amount of airflow has to be balanced by the case airflow, which needs to be higher than the airflow through the CPU cooler

that amount of airflow is not the heat-removal bottleneck

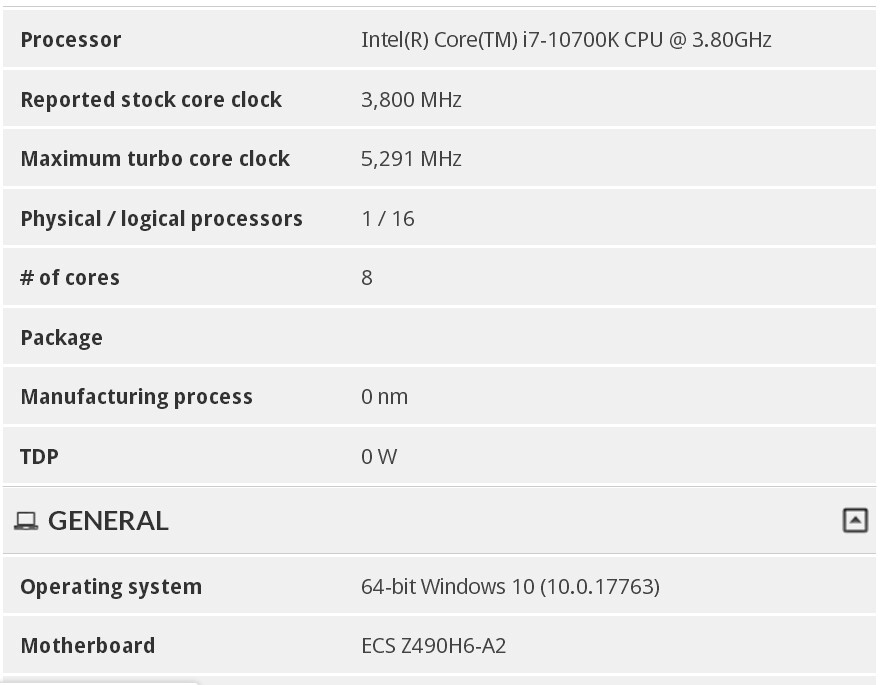

heat flow:

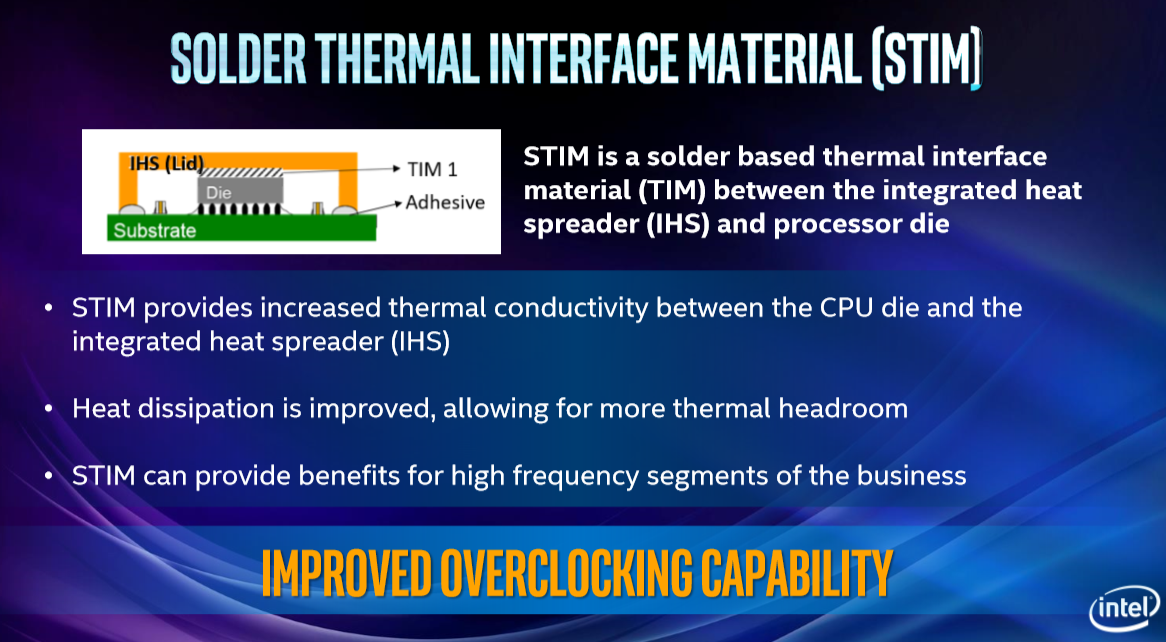

1. silicon to the heat spreader
2. heat spreader to thermal compound
3. thermal compound to the cooler base
4. cooler base to the heatpipes
5. heatpipes to the cooler fins
6. cooler fins to the air flowing through them
7. air flow out of the case, which is often underperforming

the bottleneck is points 1 to 4, unless you want to pull something like 300 W out of the CPU alone, at which point no air cooler can help you
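The heat-flow chain above behaves like thermal resistances in series: the same power P passes through every stage, each stage drops P·R kelvin, and the die sits at the intake air temperature plus the sum of all drops. The resistance values below are hypothetical placeholders chosen only to show the arithmetic; they are not measurements of any real CPU or cooler. Poor case airflow (the last item in the list) would show up here as a higher intake temperature rather than as an extra stage.

```python
# Hypothetical placeholder thermal resistances in K/W, one per stage of the
# heat-flow list. NOT measured values; they only illustrate the calculation.
STAGES = [
    ("silicon -> heat spreader", 0.12),
    ("heat spreader -> thermal compound", 0.05),
    ("thermal compound -> cooler base", 0.05),
    ("cooler base -> heatpipes", 0.04),
    ("heatpipes -> cooler fins", 0.02),
    ("cooler fins -> air", 0.03),
]


def stage_drops(power_w, stages=STAGES):
    """Temperature drop across each stage, dT = P * R (series chain, same P everywhere)."""
    return [(name, power_w * r_k_per_w) for name, r_k_per_w in stages]


def die_temperature(power_w, intake_air_c, stages=STAGES):
    """Die temperature = intake air temperature + sum of all stage drops."""
    return intake_air_c + sum(dt for _, dt in stage_drops(power_w, stages))


if __name__ == "__main__":
    power = 200.0   # W, the load discussed in the thread
    intake = 22.0   # °C ambient; worse case airflow would raise this
    for name, dt in stage_drops(power):
        print(f"{name:36s} {dt:5.1f} K")
    name, dt = max(stage_drops(power), key=lambda s: s[1])
    print(f"largest drop: {name} ({dt:.1f} K)")
    print(f"die temperature: {die_temperature(power, intake):.1f} °C")
```

With these placeholder numbers the first four stages account for 0.26 of the 0.31 K/W total, which is the shape of the argument in the post; real values depend on die size, solder vs. paste, mounting pressure and so on.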

if you look at the Ryzens: the Wraith Prism can handle a 2700X with its 105 W TDP, but it can't handle my 3900X, which draws pretty much the same power

you will hit the temperature limit much sooner than the cooler's limit

for the same heat flow, the temperature has to rise to the low 90s (°C) to increase the temperature difference and thus compensate for the small surface area of Ryzen 3000, so that it reaches the same 142 W heat flow
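The area argument follows from the heat-transfer relation Q = h·A·ΔT: for a fixed heat flow Q and a fixed effective heat-transfer coefficient h, halving the contact area doubles the temperature difference needed. The sketch below uses a hypothetical h and hypothetical die areas purely to show that scaling; it is not a model of any specific Ryzen die.

```python
def required_delta_t_k(power_w: float, area_mm2: float, h_w_m2k: float) -> float:
    """Temperature difference needed to push power_w through area_mm2, from Q = h * A * dT."""
    area_m2 = area_mm2 * 1e-6
    return power_w / (h_w_m2k * area_m2)


def heat_flux_w_mm2(power_w: float, area_mm2: float) -> float:
    """Average heat flux density over the hot area."""
    return power_w / area_mm2


if __name__ == "__main__":
    H_EFF = 20_000.0  # W/(m^2*K), hypothetical effective coefficient of the die-to-cooler path
    POWER = 142.0     # W, the figure from the post
    for label, area in [("larger die (hypothetical 200 mm^2)", 200.0),
                        ("smaller hot area (hypothetical 100 mm^2)", 100.0)]:
        print(f"{label}: {heat_flux_w_mm2(POWER, area):.2f} W/mm^2, "
              f"dT = {required_delta_t_k(POWER, area, H_EFF):.1f} K")
```

Whatever value h really has, the ratio is what matters: the same 142 W through half the area needs twice the temperature difference, so the die runs hotter long before the cooler itself is saturated.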

the NH-D15 has exactly zero problems removing 200 W in absolute terms, when the rest of the environment supports it

so the TDP rating from our beloved CPU makers has exactly zero value as an exact number

We'll see how it shakes out, but right now it's looking like a 9900k with two extra cores and an interesting ST boost feature that won't work in a lot of games that are multithreaded.

I guess it will; we will see with benchmarks, and especially with the 3080 Ti

If it's system power draw rather than package power draw, it's completely irrelevant to the discussion at hand.

oh no, it is the only relevant one

if you care about power, then you care about the system power

it is like with a car: having a super-efficient engine doesn't mean low fuel consumption; there are other components

so I am observing higher power consumption from my 3900X system than I expected, that is it

If you don't want to pay attention to some other company telling you exactly what the package power of their CPUs will be at default, then so be it.

you are still reading Intel's number as an absolute value; that is not what Intel means by their TDP

IMO Intel is solving the legal issue

you would be surprised what customers can claim as bad

IMO Intel made the decision to specify everything at base clock under brutal load (the 95 W 9900K has a 3.6 GHz base clock, while in HandBrake it runs at 4.1 GHz), so pretty much nobody can claim that they don't fulfill their promise

and then if you want more, do whatever your board/cooling/whatever can handle
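The "TDP at base clock, more if the board allows it" behaviour is usually described in terms of Intel's PL1/PL2/Tau turbo power limits. The sketch below is a simplified model under stated assumptions, not Intel's exact algorithm: the package may draw up to PL2 while an exponentially weighted moving average of its power (time constant Tau) stays below PL1, after which it is clamped to PL1. PL1 = 95 W is the 9900K TDP from the post; the PL2, Tau and idle values in the usage are hypothetical, and boards are free to set these limits much higher.

```python
import math


def simulate_package_power(requested_w, pl1_w, pl2_w, tau_s, seconds, idle_w=0.0, dt_s=1.0):
    """Simplified PL1/PL2/Tau model (an assumption, not Intel's exact algorithm).

    The package may draw up to PL2 while the exponentially weighted moving
    average of its power stays below PL1; once the average reaches PL1,
    the draw is clamped to PL1 (the TDP).
    """
    alpha = 1.0 - math.exp(-dt_s / tau_s)  # EWMA weight for one time step
    running_avg = idle_w                   # average power before the load starts
    trace = []
    for _ in range(int(seconds / dt_s)):
        limit = pl2_w if running_avg < pl1_w else pl1_w
        draw = min(requested_w, limit)
        running_avg += alpha * (draw - running_avg)
        trace.append(draw)
    return trace


if __name__ == "__main__":
    # PL1 = 95 W from the post; PL2 = 200 W, Tau = 28 s and idle = 10 W are hypothetical.
    trace = simulate_package_power(requested_w=200, pl1_w=95, pl2_w=200,
                                   tau_s=28, seconds=120, idle_w=10.0)
    boost_s = sum(1 for w in trace if w > 95)
    print(f"{boost_s} s above PL1, then {trace[-1]} W sustained")
```

A workload that only asks for less than PL1 never touches the limits at all; the clamp only matters for sustained heavy loads, which is exactly where the TDP number applies.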

but that is not the point

the 10900K can definitely draw 200+ W, but with a workload it is not designed for

If I buy a Porsche 911, I don't complain that it burns 40 l/100 km while pulling a truck

the 10900K is a muscle-car sprinter, not a workhorse, the same as the 9900K

If I need a truck, I already have one, so the big fat fanboy screaming messages are just showing their incompetence

every CPU in history that was clocked and volted to its limit showed the best content-consumption performance, but the worst content-creation perf/watt
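The usual first-order reason is the dynamic (switching) power relation P ≈ C·V²·f. If performance scales roughly with clock frequency f, then perf/W ≈ 1/(C·V²), so the extra voltage needed to reach the top clocks costs efficiency quadratically. The sketch ignores leakage power, and the lumped constant and the two voltage/frequency points are hypothetical, not measurements of any particular CPU.

```python
def dynamic_power_w(c_eff: float, voltage_v: float, freq_ghz: float) -> float:
    """Switching power, P = C_eff * V^2 * f (leakage ignored)."""
    return c_eff * voltage_v ** 2 * freq_ghz


def perf_per_watt(c_eff: float, voltage_v: float, freq_ghz: float) -> float:
    """Performance assumed proportional to clock frequency."""
    return freq_ghz / dynamic_power_w(c_eff, voltage_v, freq_ghz)


if __name__ == "__main__":
    C_EFF = 30.0  # hypothetical lumped constant, W / (V^2 * GHz)
    points = {"efficient point": (1.00, 3.6), "limit point": (1.30, 5.0)}  # hypothetical V/f pairs
    for name, (v, f) in points.items():
        print(f"{name}: {dynamic_power_w(C_EFF, v, f):.0f} W, "
              f"perf/W = {perf_per_watt(C_EFF, v, f):.4f}")
```

In this example the limit point is about 39 % faster but draws more than twice the power, so its perf/W is only 1/1.3² ≈ 0.59 of the efficient point.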