I’m afraid it’s you that needs to take a look at the arguments being presented here. I stated that you can’t infer TDP by simply looking at temperatures, and this is absolutely correct. No amount of appealing to authority, which is a logical fallacy, will change this.

I can prove this right now from a leaked review:

http://www.tweaktown.com/reviews/46...th_ivy_bridge_motherboard_review/index11.html

IB has higher temperatures compared to SB, so I guess IB also uses more power? Actually, no, it doesn’t. What more proof do you need that you can’t infer TDP solely from temperature?

Not relevant. Again, the issue here is claiming ‘A’ uses more power than ‘B’ simply because ‘A’ has a higher temperature. You absolutely cannot make that inference and I’ve shown two real examples (4850 vs 4870 and SB vs IB) that show the higher temperature parts using less power.

If you can’t understand the significance of these examples then you don’t even understand what the issue is.

IB could have a smaller heatsink mass or a lower fan-speed profile, or they could’ve run it at a higher ambient temperature. There are many possible reasons why it could run hotter that have nothing to do with a higher TDP, which is what the article seems to imply.
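To make the heatsink point concrete, here's a rough steady-state sketch assuming the usual lumped thermal-resistance model, T_die = T_ambient + P × R_theta. The function name, the 25 C ambient, and the wattage and R_theta values are made-up illustrative numbers, not measurements of SB, IB, or any real cooler.

```python
# Minimal lumped thermal model (an assumption, not a vendor formula):
#   T_die = T_ambient + P * R_theta
# R_theta (degrees C per watt) lumps heatsink, fan profile and TIM together.
# All numbers below are hypothetical.

def die_temp(power_w: float, r_theta_c_per_w: float, ambient_c: float = 25.0) -> float:
    """Steady-state die temperature for a given power draw and cooling."""
    return ambient_c + power_w * r_theta_c_per_w


# Hypothetical part A: draws more power but sits under a better cooler.
temp_a = die_temp(power_w=100.0, r_theta_c_per_w=0.5)
# Hypothetical part B: draws less power but has a weaker cooler.
temp_b = die_temp(power_w=80.0, r_theta_c_per_w=0.8)

print(f"Part A: 100 W -> {temp_a:.1f} C")
print(f"Part B:  80 W -> {temp_b:.1f} C")
```

Part B reads hotter despite drawing less power, which is why a temperature reading on its own can't rank two parts by power.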

It’s not really relevant to anything but sure, you do whatever floats your boat.

We are talking about two different things. Completely. I am not talking about SB vs IVB, despite your repeated attempts to say that I am. We aren't talking about 4850s vs 4870s either, but rather about one CPU family.

What I get out of this thread is that a certain SKU, the 3770k specifically, is running hotter than expected. It also appears to be getting a 95W TDP rating instead of the 77W TDP rating that was expected.

Does temperature play a role in this?

Maybe. That is all I am saying. If the Intel thermal solution isn't performing as well as expected, the chip could be using more power than what was initially anticipated for a given clock speed. (Just one of many possible reasons a chip might run warmer than intended, no?)

Which you are basically agreeing with? (i.e., a better-cooled 480 uses less power.) I don't see what the argument is.

A separate issue is this: at higher clock speeds, where temperatures look to be an even bigger issue, there might be a situation where the power leakage of IVB becomes too great and SB becomes the better-performing chip for a given amount of power (as it might have a large advantage in clock speed).

But that wasn't what I was talking about, and I am not sure how it came across that way. I am also a bit confused about how that assertion could be so aggravating; we are still in speculation land. We'll just have to see how it shakes out.

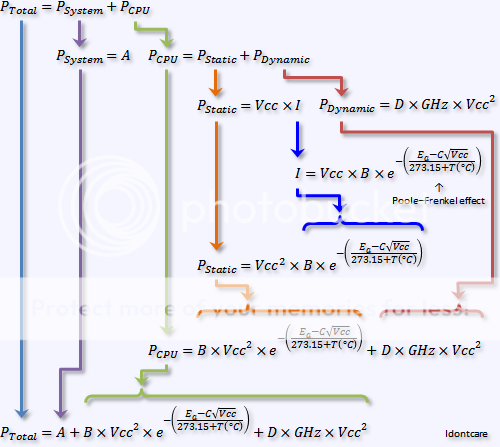

Effect of Temperature on Power-Consumption with the i7-2600K

Temperature directly impacts power consumption because of the fundamental physics involving the Poole-Frenkel effect and leakage.

Temperature alone does not define TDP; no single parameter can or does dictate TDP on its own. But you are assured that the same chip operating at the same clock speed and the same voltage will require a higher TDP spec if it is to operate at a higher temperature rather than a lower one.

(This is part of the motivation for spec'ing a lower max operating temperature on AMD CPUs than on Intel's: by spec'ing a lower max operating temp, AMD is able to run the CPU at a higher clock speed while staying within its spec'ed TDP footprint... but it only works if you can keep the temps below that spec'ed max operating temp.)
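A rough way to see the leakage point: at a fixed clock and voltage the dynamic part of the power (roughly C × V² × f) stays constant, while leakage climbs with temperature. The sketch below assumes a simple exponential fit for leakage; it is not a Poole-Frenkel calculation, and the 60 W / 10 W split, the 50 C reference point and the 2%-per-degree growth rate are hypothetical numbers picked only for illustration.

```python
import math

# Illustrative model (assumption): total power = dynamic + leakage(T).
# Dynamic power is held constant because clock and voltage are fixed.
# Leakage uses a generic exponential fit; all parameters are made up.

def total_power(temp_c: float,
                dynamic_w: float = 60.0,
                leak_ref_w: float = 10.0,
                ref_temp_c: float = 50.0,
                growth_per_c: float = 0.02) -> float:
    """Power at a fixed clock and voltage as a function of die temperature."""
    leakage_w = leak_ref_w * math.exp(growth_per_c * (temp_c - ref_temp_c))
    return dynamic_w + leakage_w


for t in (50, 70, 90):
    print(f"{t} C -> {total_power(t):.1f} W")
```

Same chip, same clock, same voltage: the hotter it runs, the more power it needs, so the TDP has to cover the temperature it is actually expected to run at.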

I think what I am suggesting might be the case jibes completely with this series of statements.

If the 3770k can't run at its target temp under load, Intel would simply raise its TDP to provide the ceiling needed for the additional power it draws at the temperature it actually reaches under load with the provided cooling solution. Who knows under what conditions that additional ceiling might be needed.

Heck, that increase in TDP might simply come from deciding to consolidate to a single cooler SKU for the entire IVB line, one that is just a little underbuilt for the 3770k. All sorts of reasons could be postulated that have little negative bearing on the chip itself.
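The two effects also feed each other, which is roughly the scenario described above: a weaker cooler means a hotter die, a hotter die leaks more, and the extra power pushes the temperature up a bit further until things settle. Here's a hypothetical sketch that finds that settling point by fixed-point iteration on T = T_ambient + R_theta × P(T). The leakage fit and every parameter (including the two R_theta values standing in for a "good" and an "underbuilt" cooler) are assumptions for illustration, not Intel data or Intel's actual TDP methodology.

```python
import math

# Hypothetical coupled model; every parameter here is an assumption.

def power_at(temp_c, dynamic_w=60.0, leak_ref_w=10.0, ref_temp_c=50.0, growth_per_c=0.02):
    """Fixed-clock, fixed-voltage power with exponential leakage vs temperature."""
    return dynamic_w + leak_ref_w * math.exp(growth_per_c * (temp_c - ref_temp_c))


def operating_point(r_theta_c_per_w, ambient_c=25.0, max_iter=1000, tol=1e-9):
    """Iterate T = ambient + R_theta * P(T) until it settles.

    Raises an error if it does not settle (e.g. thermal runaway
    with a very poor cooler)."""
    temp = ambient_c
    for _ in range(max_iter):
        new_temp = ambient_c + r_theta_c_per_w * power_at(temp)
        if abs(new_temp - temp) < tol:
            return new_temp, power_at(new_temp)
        temp = new_temp
    raise RuntimeError("no stable operating point found")


for r in (0.4, 0.6):  # hypothetical "good" vs "underbuilt" cooler
    t, p = operating_point(r)
    print(f"R_theta={r} C/W -> {t:.1f} C, {p:.1f} W")
```

With the weaker cooler, the settled power comes out higher at the same clock and voltage, so a chip rated against that cooler would need more TDP headroom even though the silicon itself hasn't changed.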

That's the only point I am making, please don't interpret it as anything else.

Not relevant. Again, the issue here is claiming ‘A’ uses more power than ‘B’ simply because ‘A’ has a higher temperature. You absolutely cannot make that inference and I’ve shown two real examples (4850 vs 4870 and SB vs IB) that show the higher temperature parts using less power.

It was relevant to the point I was making, which is completely different than what you are talking about, evidently.

If we are talking about such disjointed topics, there is not much to be resolved here, I guess.

I agree. There is no call for saying that IVB uses more power than SB simply because it has a higher running temperature. Abso-freaking-lutely.

Evidently it is not this thread but another one where folks actually have retail boxes w/the 3770k displaying a 95W TDP. My mistake, I thought that was somewhere in this thread.

http://forums.anandtech.com/showthread.php?t=2237805&page=14

There it is, a ways down the page...