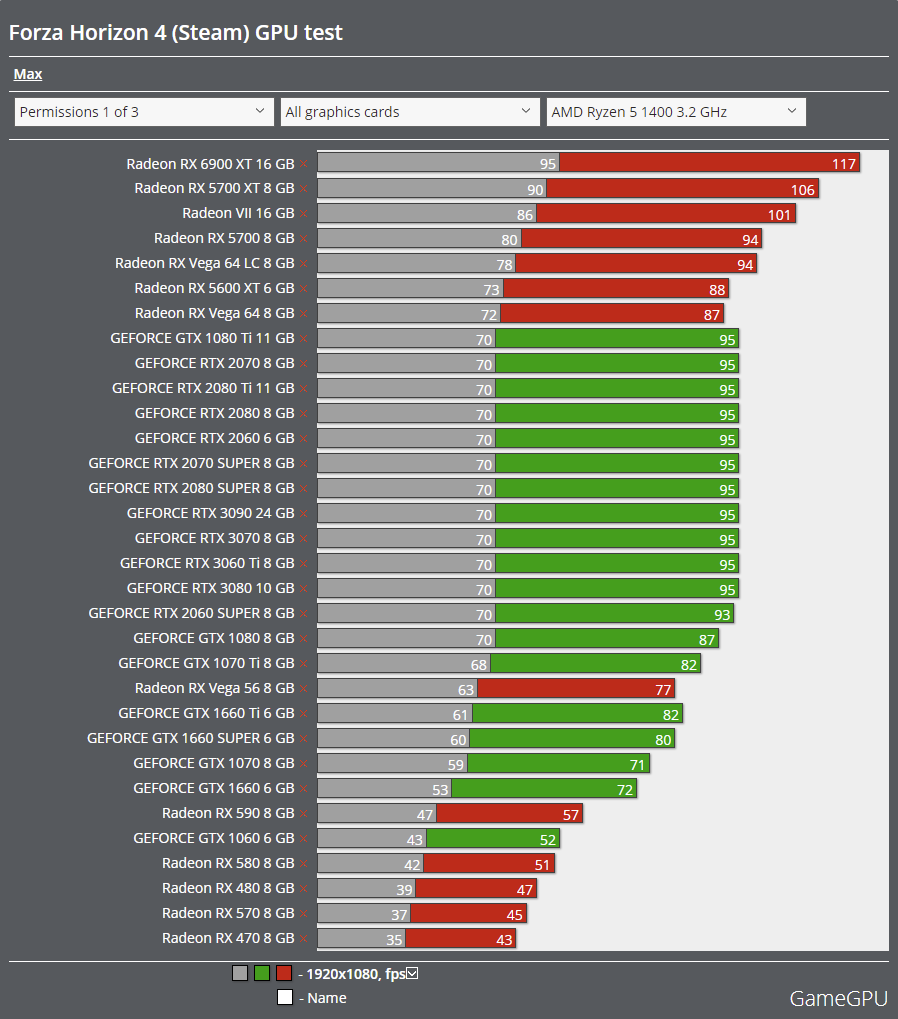

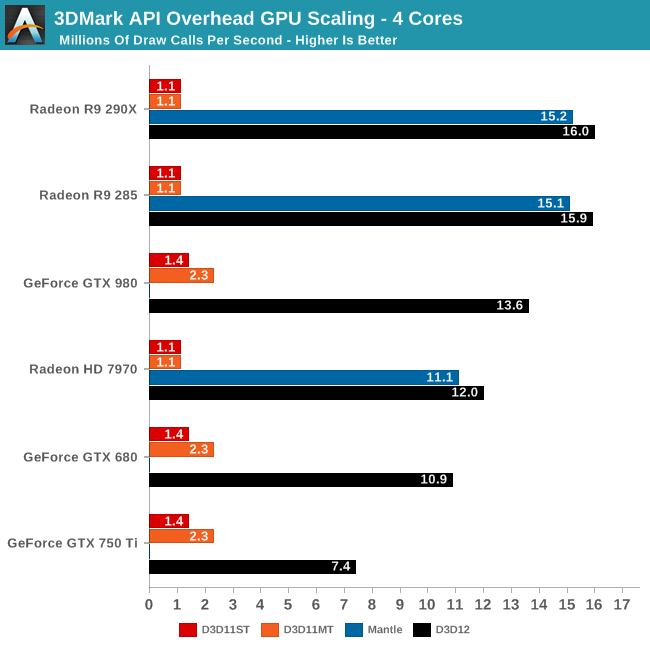

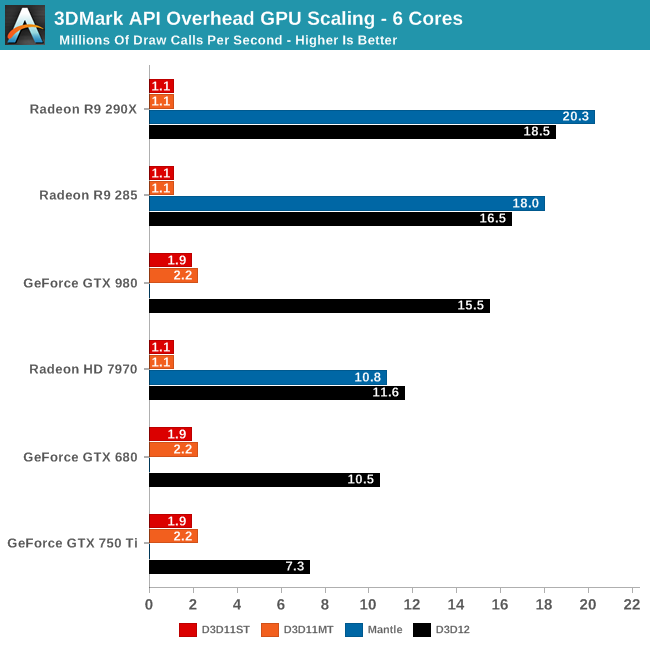

HWUB investigated gaming GPU performance on lower-end CPUs and published their results. In the comments they mention that they tested more games than they showed in the video, all with the same results. As we all (should) know, in DX11 AMD had the low-end CPU performance issue, but it looks like this has flipped for DX12/Vulkan. HWUB mentions they think their findings hold true for DX11 as well, but as far as I can tell they only tested DX12/Vulkan titles, so I don't think they have the data to back up that statement, and I doubt it is true.

Nvidia Has a Driver Overhead Problem, GeForce vs Radeon on Low-End CPUs - YouTube
