unverified speculation:

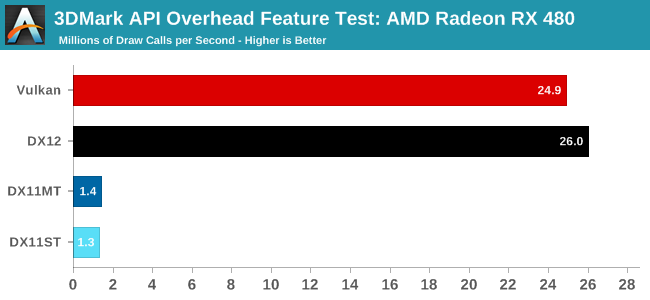

I do not believe it has anything to do with Nvidia's drivers. I would argue it is fundamental to the design of Nvidia's GPUs with their software-based scheduler.

It is not that Nvidia's drivers are shitty, but rather that Nvidia has committed itself to a design with a more flexible software scheduler, which requires CPU time to run. They likely made this choice targeting the high end of the market, which is more interested in performance than in CPU usage. The CPU is arguably a better scheduler anyway, as long as it has the cycles to spare.

This does not make Nvidia's cards inferior to AMD's; it gives them an advantage in systems that have CPU resources to spare, typically high-end gaming PCs, where the $$$s are made.

This does not make AMD's cards inferior to Nvidia's; it gives them an advantage in systems with weak CPUs, typically consoles, upgrade PCs, and low-end gaming PCs.

In both cases, those are the traditional markets of the two companies. Nvidia at the high end, AMD at the midrange and low end.
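To put the trade-off above in rough terms, here is a toy model in Python. It assumes a frame is finished when the slower of the CPU side (game logic plus driver/scheduling work) and the GPU side is done. Every number in it is made up for illustration, including the assumption that the software-scheduled card is slightly faster on the GPU side; none of it is measured from real hardware, and the names `SOFTWARE_SCHED` and `HARDWARE_SCHED` are just labels for the two hypothetical designs.

```python
def frame_time_ms(game_cpu_ms, driver_cpu_ms, gpu_ms):
    """Toy model: the frame takes as long as the slower of CPU side and GPU side."""
    cpu_side_ms = game_cpu_ms + driver_cpu_ms  # game logic + scheduling overhead
    return max(cpu_side_ms, gpu_ms)


def fps(game_cpu_ms, driver_cpu_ms, gpu_ms):
    """Frames per second implied by the toy frame time."""
    return 1000.0 / frame_time_ms(game_cpu_ms, driver_cpu_ms, gpu_ms)


# Hypothetical cards (illustrative numbers only, not benchmarks):
# more CPU overhead but a faster GPU side, versus the reverse.
SOFTWARE_SCHED = {"driver_cpu_ms": 3.0, "gpu_ms": 6.0}
HARDWARE_SCHED = {"driver_cpu_ms": 1.0, "gpu_ms": 7.0}

# Hypothetical per-frame game logic cost on a fast and a slow CPU.
FAST_CPU_GAME_MS = 3.0
SLOW_CPU_GAME_MS = 8.0

if __name__ == "__main__":
    cpus = [("fast CPU", FAST_CPU_GAME_MS), ("slow CPU", SLOW_CPU_GAME_MS)]
    cards = [("software scheduler", SOFTWARE_SCHED),
             ("hardware scheduler", HARDWARE_SCHED)]
    for cpu_label, game_ms in cpus:
        for card_label, card in cards:
            print(f"{cpu_label}, {card_label}: {fps(game_ms, **card):.1f} fps")
```

With these made-up inputs, the software-scheduled card comes out ahead on the fast CPU and behind on the slow one, which is the crossover the argument above depends on. Real games overlap CPU and GPU work across frames, so treat this as a way to see the shape of the argument, not as a prediction.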

-------------------------------------

I think the CPU time Nvidia's software overhead requires is increasing. Specifically, it likely took less CPU to run an RTX 2000 series card, because those cards perhaps had fewer compute units and less processing power per compute unit. Same with the GTX 1000 series: fewer compute units, less complexity, no RTX, no DLSS, etc.

As Nvidia's graphics cards themselves have become larger, with more features and more complexity, the amount of CPU time needed to run the software scheduler has also increased.