frozentundra123456

Lifer

- Aug 11, 2008

- 10,451

- 642

- 126

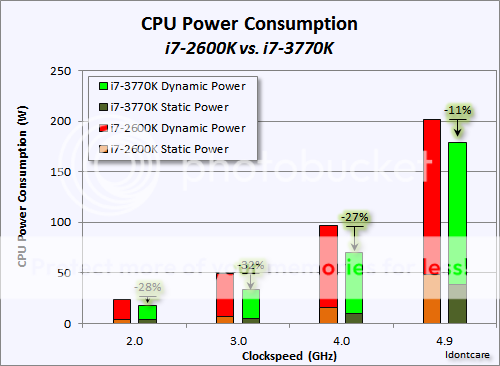

Not that I am in disagreement with the spirit of your post, but 22nm IB did better than 20% over 32nm SB in terms of power consumption, except for the corner case of >4.5GHz clockspeeds (well above the intended max clocks for either 32nm or 22nm).

The TDP decreased from 95W for 32nm SB to 77W for 22nm IB for a good reason: the power savings really were there to make it happen.

I think what made 22nm seem so "meh" to us enthusiasts is that 32nm SB was just so awesome. 5GHz clocks on a HKMG process that even now GloFo only wishes they had. It makes for a tough act to follow.

Are these numbers from your personal tests? I was just basing my estimate on the TDP of a quad core, the 77W Ivy Bridge figure being slightly more than 80% of the 95W Sandy Bridge quad TDP. You are much more of an engineering person than I am, so I will accept your numbers. It still seems like Intel is falling into the same pattern of always saying the next chip out will be the big breakthrough.
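For anyone who wants to check my arithmetic, here is a quick sketch of the estimate. It only uses the two TDP figures quoted in this thread, and TDP is a rated thermal design limit rather than measured power draw, so treat the result as a rough bound and not a benchmark. The variable names are just mine for illustration.

```python
# Rated TDP figures quoted in this thread (quad-core desktop parts).
# These are design limits, not measured power consumption.
sb_tdp_w = 95.0  # 32nm Sandy Bridge
ib_tdp_w = 77.0  # 22nm Ivy Bridge

ratio = ib_tdp_w / sb_tdp_w   # IB TDP as a fraction of SB TDP
reduction = 1.0 - ratio       # fractional drop in rated TDP

print(f"IB TDP is {ratio:.1%} of SB TDP, a {reduction:.1%} reduction")
```

So going by TDP alone the drop comes out a bit under 20%, which is where my original estimate came from.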

First it was "wait for Ivy Bridge, it will really lower power consumption." Then it was "wait for Haswell, that is a tock, it will really change things," but already some are saying Intel will not really be competitive with ARM until Broadwell. The problem I see is that ARM is becoming so integrated into the landscape that by the time Intel finally gets x86 competitive, it may be too late. And believe me, I really would hate to see ARM force x86 out of the mobile market. I have an ARM tablet, and I have not hated a computing device this much since my Windows ME desktop with a Celeron and 2MB (yes, MB) integrated graphics.