GaiaHunter

The downside is that it is grotesquely loud compared to aftermarket and even reference 780 Tis, and has almost no reasonable overclocking headroom and thus gets crushed by quieter, much faster cards.

I know you are convinced that the 290X and the 290 have no OC headroom.

Why you have convinced yourself that these are the only cards on the 28 nm process that won't reach a 1200-1300 MHz core clock is a bit weird, though.

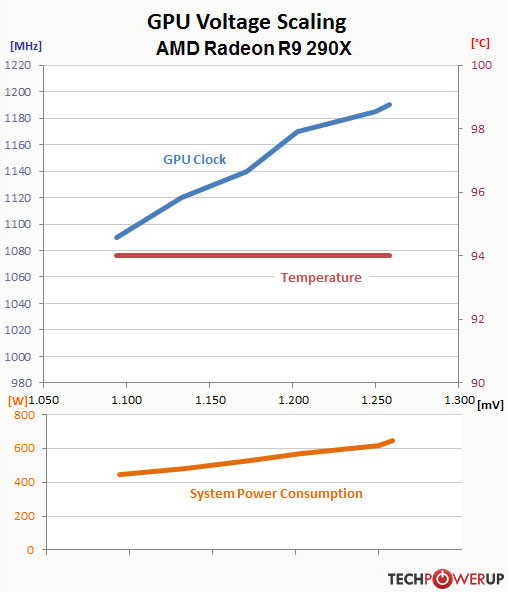

For a while, the following image was often posted in these forums to show "absurd power consumption levels".

AMD's Radeon R9 290X shows fantastic clock scaling with GPU voltage, better than any GPU I've previously reviewed. The clocks do not show any signs of diminishing returns, which leads me to believe that the GPU could clock even higher with more voltage and cooling.